Report mAIstro: Multi-agent research and report writing

LangChain

Research Summarization was the most popular agent use case in our recent State of AI Agents survey...

Video Transcript:

hey this is Lance from LangChain we recently asked 1,300 professionals in the AI industry what they think agents are best suited to solve and the highest ranked task was research and summarization as you can see here in this chart now I've spent a lot of time working on different types of report writing and research agents and today I want to build one from scratch and show a bunch of lessons I've learned along the way now before I get into all the code I want to show you the output of the agent just to motivate

this so this is me just simply asking the agent write me a report about different agent frameworks LangGraph CrewAI OpenAI Swarm LlamaIndex Workflows this is the report I get out so the agent does all this for me you can see it kind of partitions each framework into its own section gives nicely formatted bullets this is all a markdown file by the way provides sources for me same for CrewAI sources OpenAI Swarm LlamaIndex Workflows at the end nice distillation clear table this is all done for me takes less than a minute and

all this web research and report generation and writing is automated by the agent here are some other report examples I asked about how did Replit recently use LangSmith and LangGraph to build their agents and all this research is done for me it gives kind of a nicely broken down overview of what was done the various sources details of implementation and again kind of a nice summary section on key technical takeaways here's another example where I asked for kind of news and recent events across various AI observability offerings we can see we get again

some nice sources here it goes into LangSmith Braintrust recently in the news it talks about their Series A Datadog it talks about their work on AI observability here and Arize Phoenix and some nice kind of summary comments here so you can see the agent we're going to build here has a few advantages all I need to do is pass in an input topic it can produce reports of different types you can see the structure of the reports varies a little bit in terms of the section number in terms of

how the conclusion is formatted whether it uses tables we can customize all of this and it's very flexible I can produce reports on many different types of topics in many different user-defined formats now let me talk about one of the motivations for kind of agentic report writing so there's been a lot of emphasis on RAG systems which are extremely useful and actually a lot of times can be kind of core components under the hood for report writing but the point is with RAG you're really just saving time with finding answers okay now usually answers are in service

of a bigger goal like an actual decision you're trying to make as an organization or as an individual reports kind of get you closer to decision-making by presenting information in a structured well-thought-out way so the leverage you get from high quality reports is oftentimes a lot greater than what you get just from a Q&A or RAG system a RAG system only gives you answers reports kind of present information in such a way that actually can accelerate a decision now I want to spend just a quick minute or two on kind of the

state-of-the-art how to think about report writing what's been done previously and what do we want to kind of build and extend so you can think about report writing in three phases one is planning there's been a lot of interesting work around report writing that first starts with some kind of planning phase in particular GPT Researcher very nice work from the folks at Tavily uses what you might think of as a plan-and-solve approach where it looks at the input topic and it basically breaks that topic out into a set of subtopics which each

can be individually researched okay that's kind of idea one now idea two is the STORM paper in particular starts with the topic and builds a skeletal outline of the final report up front which has some advantages that we'll talk about a bit later but this kind of outline generation up front follows in that case a Wikipedia-style structure because STORM is really meant to produce Wikipedia entries so it follows kind of a prescribed structure but the point is that the outline is actually generated up front so the two big ideas in planning are one take

the input topic from the user fan it out into subtopics that'll independently be researched to kind of flesh out the overall theme that the user wants researched that's idea one idea two is kind of generating an outline up front of the overall research report now phase two once you have a set of subtopics and or an outline of your final report then how do you actually conduct the research now there's at least three different strategies that I've seen for doing the research itself so one is just very simply taking the subtopics and creating search

queries from each subtopic and then performing a query either on the web or RAG okay so that's just kind of parallelized search and retrieval from some source be it RAG be it the web now another idea that takes it one step further is what we see in the AMA researcher work which basically does retrieval and then kind of does a grading on the retrieved in this case it's web resources so it kind of looks at the retrieved web pages and determines does this contain information necessary to address the input question if not rewrite

the question try again so it kind of has this iterative search and evaluation process now the most sophisticated approach that we've seen here is STORM which actually does a multi-turn interview between what they call an analyst persona and an expert which is effectively your search engine could be RAG it could be Wikipedia could be some other search service you're using but the point is you basically have an AI persona asking questions receiving answers from the search service and continuing to ask questions till they're satisfied so that's kind of the most sophisticated

the simplest case is just query retrieval like we see in GPT Researcher there's also kind of iterative search so you do a search retrieve evaluate potentially search again and the most sophisticated is an explicit multi-turn interview between like an analyst and your sources now finally writing so you've done research using one of these approaches how do you take on the writing task a few different ideas here one of the more popular is just sequential section writing so write each section individually STORM parallelizes some of the sections but uses kind of an iterative process

where it has the outline generated at the beginning it will generate sections all in parallel add them to the outline and then it'll do kind of a refinement to fill in for example introduction and conclusion now another process worth noting here is you can also take all the research you've done and try to write a report in a single shot basically have all these sources and let an LLM just write the entire report all at once so LlamaIndex shows this in some interesting examples they've shared recently so that's another way to think about this

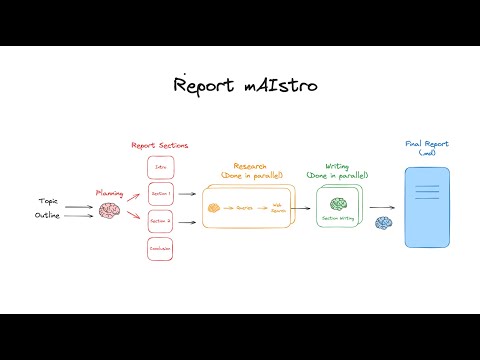

so one is kind of writing your report sections sequentially and the other at the other extreme is kind of a single-shot process of writing your entire report at once now let me very briefly cover what we're going to do here and I'm going to give some general lessons I've learned from working on a lot of different report writing agents over the last few months basically we're going to break it up into three phases planning research writing now here's the first insight I've kind of learned it's nice to do a pre-planning

phase to lay out the structure of your report ahead of time for a bunch of different reasons one is it makes it very flexible you can produce many different styles of reports this way so for example let's say I want a report that always has five sections an intro and conclusion and I always want a summary table in the conclusion this particular agent implementation allows you to do that what if I want just a single section kind of like a short form report no introduction you can also do that so what's really nice is this

planning phase allows you to take both a topic from a user and a description of the desired report structure and it'll build the skeleton of the report for you up front so it makes it very flexible two it allows you to set up parallelized research and writing across different report sections you can debug report planning in isolation so you can kind of look at the plan really before the research is done if you don't like it you can modify the prompts and it's very useful for debugging as I'll show in a little bit the other cool thing is

you can actually decide what sections of your report actually need research up front so let me give you an example let's say I want a report with an introduction and a conclusion well those sections don't actually need research only the main body of the report actually needs research and so in this planning phase I can kind of create all the sections of the report and also earmark these need research these don't so it's a nice way that you can kind of do all your pre-planning up front including planning for what sections of the report

actually need research now let's talk about research itself so what I found actually here is just simple parallelized search and retrieval is a very nice way to go when I say parallelized what do I mean I mean in particular multi-query generation is really good so basically taking an input question for a given section fanning that out into a bunch of sub-questions to help flesh out the theme of that section that's a good thing because it can all be parallelized it's very easy to kind of have an LLM call produce a set of subqueries search

for all those in parallel get all those sources back all in parallel and then do the section writing in parallel which I'll talk about in a little bit I've done quite a bit of work on more sophisticated styles of kind of question answer the STORM work for example shows a very interesting example of kind of again like iterative question answer ask another question produce another answer that style of research basically I found these approaches are really token costly and really can blow up latency so I kind of like to keep it

as simple as possible fan out your search queries do parallelized query generation parallelized query retrieval and then use all those sources for your writing now let's talk about writing a little bit the lesson I've kind of learned here is it is often better to write each section individually that's because you can get much better section quality if you make an LLM write the entire report in one shot I've seen that quality can really degrade so I do like writing sections individually but to save latency I like parallelizing all sections that can be parallelized so

really all the sections that kind of have their own independent research I parallelize writing of them you can kind of see this in the diagram down here so basically this is a toy report structure intro conclusion two sections those sections get researched in parallel they get written in parallel because they're kind of standalone right they have their own topics they have their own research now what I've also found though is you should have a sequential final phase of writing on any sections that don't require research for example intro and conclusion because this can utilize

everything you've already written to tie together the themes really nicely so that's another little trick I found in writing write sections individually but then do it sequentially such that your kind of main body sections that require research are all written first in parallel and then you write any final sections in parallel as well for example intro and conclusion that distill everything you've learned from your research that's kind of what I found works really nicely now let's build this from scratch I'm in an empty notebook here here's the outline of what we're going to do and

now let's start with the planning so this planning phase is going to have two inputs it's going to have a topic and a general outline from the user and it's going to output a set of report sections that adheres to the topic and the outline that the user provides now step one first I'm going to define a general data model for a section of the report that's all this is so it's a Pydantic model it's called Section it has a name the name for the section description an overview of the main topics covered in

the section whether or not the section needs research you'll see why it's interesting later and this is the content of the section which we're going to fill out later and this is just a list of sections now why do we do this we define a data model because this can be passed to an LLM and the LLM can know to produce output that adheres to this model that's why this is really cool now I've just added another data model for search queries which we're going to be using throughout this entire process and I've added a

TypedDict which is basically a class for overall report state now what's this all about we're going to be using LangGraph to build this agent LangGraph uses a state to internally manage everything we want to track over the lifetime of the agent we don't have to worry about this at all for now I'm just setting it up so we have it now here's where we're going to get into some of the heavy lifting and interesting aspects of this so for this planning we're going to use two different LLM calls which require two different prompts
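As a rough sketch of the data models just described: the video uses Pydantic models so the schema can be handed to an LLM for structured output, but the version below substitutes standard-library dataclasses so it runs without extra dependencies. The field names (`research`, `search_query`, `completed_sections`, `final_report`) are assumptions based on the spoken description, not confirmed names from the repo.

```python
from dataclasses import dataclass
from typing import List, TypedDict


@dataclass
class Section:
    name: str           # name for this section of the report
    description: str    # overview of the main topics covered in the section
    research: bool      # whether this section needs web research
    content: str = ""   # filled in later, once the section is written


@dataclass
class SearchQuery:
    search_query: str   # a single query string for web search


class ReportState(TypedDict):
    """Overall state tracked over the lifetime of the agent (assumed keys)."""
    topic: str
    report_structure: str
    sections: List[Section]
    completed_sections: List[Section]
    final_report: str


# A toy plan: only the body section is earmarked for research.
plan = [
    Section("Introduction", "Brief overview of the topic", research=False),
    Section("LangGraph", "Core features and architecture", research=True),
    Section("Conclusion", "Summary with a comparison table", research=False),
]

state: ReportState = {
    "topic": "AI agent frameworks",
    "report_structure": "intro, one section per framework, conclusion",
    "sections": plan,
    "completed_sections": [],
    "final_report": "",
}

needs_research = [s.name for s in state["sections"] if s.research]
```

Keeping the `research` flag on each section is what later lets the graph decide which sections to fan out for web research and which to hold back for the final synthesis step.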

now the first is a little bit subtle and I want to explain it because I kind of came at this through a bunch of trial and error over the last weeks and months basically in report planning it is beneficial to have a little bit of external information in the form of web search to help with planning the report so this first prompt just explains that you're a technical writer helping to plan a report here's the topic from the user here's the general report structure again from the user generate some number

of queries to help gather information for planning the report sections now the second prompt is really the overall report planner instructions so this is you're a writer helping plan a report your goal is to generate an outline of the report you're going to generate a set of sections here's the topic here's the organization here is any information gathered from web search related to this topic so now we can see we're going to set up a function here generate report plan it takes in the state which we defined previously we extract some keys from the

state okay now we go ahead and first generate some queries to help us plan the report we perform web search we deduplicate and format the source results from web search and then we format the report planner instructions with our topic the report structure from the user and everything we got from web search then we generate the sections of the report I'm using Claude here we return that to our state as the report sections I can try out this planning phase in isolation let's give it a shot so let's say here's a structure

I can pass in a pretty detailed structure that I want I want a report focused on comparative analysis it should include an intro I want main body sections each dedicated to an offering being compared I can give a whole bunch of preferences here I want a conclusion with a comparison table and here's the input topic give an overview of the capabilities of different AI agent frameworks focus on LangGraph CrewAI OpenAI Swarm LlamaIndex Workflows now we're going to go ahead and run now you'll see I'm actually using the Tavily search API which is

pretty nice for a few different reasons one is that it automatically retrieves the raw sources for you so you don't have to independently scrape pages it's free up to a pretty high usage limit and the API just works really well so I've used it for a bunch of different things I find it to be quite nice now what's cool is it actually has general search and also news search which is geared to current events which can be quite useful for certain types of reports I'm defaulting to general search and

this parameter days is not relevant for general search only for news now given this topic and this structure let's just test that function so here we go now we can see these are the sections of our report it automatically generates them for us an introduction no research needed description overview cool one section on LangGraph one on CrewAI one on Swarm one on LlamaIndex Workflows conclusion comparison table very nice this is all auto-generated and it's guided by this outline we provide you can do anything you want here for the outline it'll automatically

and dynamically change the report formatting per your guidelines so you'll see here in this outline we've laid out one two three four of the sections require research so now we have to build that out how would we actually perform web research and write those sections let's do that now now I'm going to create a new state called section state you're going to see this is something very useful that we're going to do in LangGraph I'm going to set up each section to be its own independent state and subgraph such that the research and

writing of each section can all be done by what you might call like a sub agent All In Parallel I'll show you exactly how to do that right now so here's the code that I'm going to use to write each section of the report and conduct research for each section of the report let's go ahead and just look at it quickly so you can see right here I can compile and show the graph I'm going to use to do this so what's going to happen is for each section I'm going to generate some search queries

about the topic of that section I'll search the web and from the sources I gather I'm going to go ahead and write that section okay so that's the scope of work we're going to do here now what's kind of nice is that in these section objects we can see that we get a description of what the section is supposed to be about right so we can use this information when we (a) generate some search queries to populate the information of that section and then (b) do the final writing of that section so this outline you can

see is very important it kind of sets the entire scope of work downstream for us now let's look at the prompts we use here so first I'm going to have this query writer prompt now here the goal is to generate some search queries that'll gather comprehensive information needed to write a report section here's a section topic which we get from the section description now this is all very customizable these are some things I found to be useful generating some number of queries ensure they cover different aspects you know you want them to be kind

of unique and not redundant right that's the main idea here now section writing this is where you can also be extremely customized in your instructions this is kind of the way I like to set it up so you're an expert writer here's a section topic I give it a bunch of writing guidelines technical accuracy length and style a bunch of points on structure now look here's really what I did I went back and forth with Claude a lot to write these prompts so really I use Claude to

help tune these prompts from a lot of iteration basically writing a section feeding the section back to Claude saying hey I like this I don't like this having Claude update the prompt and so forth so basically this is a very kind of personal process and really allows for a lot of prompt engineering and optimization this is just what I arrived at but don't limit yourself to this you can modify this in any way you want is the point the real big idea here is I'm going to plumb in the context that I got from

web search I'm going to give it here's a section topic and I give it a bunch of guidelines that I want for the style of the section that's really it so the only functions I need in my graph we kind of saw them below when we compiled the graph basically generate queries so again like you know I have some number of search queries I want to actually generate that's stored in state here's information about the section I pass some of that into the prompt I get the queries I write those back out

easy enough then I have this search web where basically in this particular case I call the Tavily API again note that I'm using async so basically that's going to do all these web searches in parallel for me which is just faster and then the section writing it's really all there is to it this is the source information I got from web search it was written to state right here I just get that from state I get the section from state go ahead and format the prompt go ahead and kick it

off and again I'm using Claude 3.5 for my writing now this is again a preference you can try different models I found Claude 3.5 to be very very strong at writing and that's why I kind of prefer using it for this style of longer form report writing and there I get my section now what's also nice about this is I can test section writing in isolation so let's test on one of the sections that we defined previously so let's go ahead and actually just look at what section we want to work on okay so let's

write a section on LangGraph the description is detailed examination of LangGraph core features architecture implementation and so forth right cool so here's a report section we can see these are all things that we kind of asked for I wanted kind of a bold sentence to start I added that to my instructions you know really what's going on here LangGraph enables creation of stateful multi-actor applications with LLMs as graphs yeah that's you know about right it's open source yes yes enables cycles cool nice now another thing I

ask for is the sections to have at least one structural element in this particular case like a list just to kind of break it up a little bit so it includes that which is pretty nice persistence complex workflows integration with LangSmith for observability pretty nice cool example GPT Newspaper project that's actually mentioned in one of the sources so right here pretty neat and kind of how it differs from other frameworks and again a couple sources so look not bad you can customize this in any way you want with the prompts above
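The per-section research flow described above (fan a section out into several queries, run the searches in parallel, deduplicate and format the sources for the writer prompt) can be sketched roughly as follows. This is a minimal illustration using only `asyncio`: `fake_search` and `generate_queries` are hypothetical stand-ins for the Tavily API call and the LLM query-writer call, and the dictionary keys `url` and `content` are assumed for illustration.

```python
import asyncio
from typing import Dict, List


async def fake_search(query: str) -> List[Dict[str, str]]:
    """Hypothetical stand-in for an async web search API call."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    slug = query.split()[0].lower()
    return [
        {"url": f"https://example.com/{slug}", "content": f"notes on {query}"},
        # Every query also returns this page, so deduplication has work to do.
        {"url": "https://example.com/shared", "content": "shared background"},
    ]


def generate_queries(description: str, n: int) -> List[str]:
    """Stand-in for the LLM call that fans a section out into sub-queries."""
    angles = ["overview", "architecture", "examples", "limitations"]
    return [f"{angle} {description}" for angle in angles[:n]]


def dedupe_and_format(results: List[List[Dict[str, str]]]) -> str:
    """Keep the first hit per URL and format sources for the writer prompt."""
    seen: Dict[str, str] = {}
    for batch in results:
        for hit in batch:
            seen.setdefault(hit["url"], hit["content"])
    return "\n".join(f"Source: {url}\n{text}" for url, text in seen.items())


async def research_section(description: str, n: int = 3) -> str:
    queries = generate_queries(description, n)
    # All searches are issued concurrently rather than one after another.
    results = await asyncio.gather(*(fake_search(q) for q in queries))
    return dedupe_and_format(list(results))


context = asyncio.run(research_section("LangGraph features"))
```

The resulting `context` string is what would be plumbed into the section-writer prompt alongside the section topic. Deduplicating by URL before formatting keeps repeated pages from inflating the prompt when several queries return the same source.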

but pretty nice it gets us nicely formatted sources a nice overview of what LangGraph is and kind of the formatting that we asked for so that's pretty cool so now we have nearly everything we need we can do planning we can generate individual sections of a report and do research now the final things we want to do are we want to parallelize that research and writing for all sections that need it and then we want to do any final kind of writing of sections that didn't actually require research but are like introductory or

concluding so for that let me just compile it so we can look at it this is what it's going to look like in the end so we're going to take what we just did generate queries search web write section this is now a subgraph with its own state as we saw section state and what we're going to do is we're going to use a very nice trick in LangGraph called the Send API to basically parallelize writing and research for all sections of the report so the Send API we use in this function right

here initiate section writing so let's look at what's going on here the sections are created in our planning phase which we talked about initially okay all we need to do is iterate through those sections and see this Send thing this basically initiates a subgraph that will research and write the section and we can simply pass in whatever inputs are necessary now note that we send these inputs to build section with web research and let's look at that briefly so that is just you can see we use section_builder.compile this is basically

creating a subgraph so section builder was defined up here this is where we defined all the logic necessary to just research and write a single section this thing is pulled into our main graph as a subgraph and we kick it off in parallel for each section that needs research that's really all we did now we can see in that section writing phase we kicked this off for every section that requires research so that's great now we also have this write final sections function which is going to write any final sections of the report that are

kind of summarizing it could be an introduction it could be a conclusion but any section that our planner deemed does not require research those are done last now what's kind of cool is we can use the same Send API to basically kick off that process right here now what we pass is simply the section we're writing and the result from all the research and writing we've already done so that's the key point we say here's a section to write for example introduction and here's everything we've written already so you can use it to help build this

final section now we can see here we're going to use this prompt final section writer instructions that's all defined up here very similar intuition we saw before but in this case you're an expert writer you're synthesizing information for the final sections of report here's a section to write here's the entire report context and for introduction I give some instruction for conclusion summary I give some other instructions again you can tune this any way you want and that's how we get to our overall graph we do the planning which we talked about in detail previously we

do research and write each section that requires it first and we parallelize that you can see that speeds this up a whole lot because we're basically doing all that research in parallel we get all the completed sections we then in parallel write any final sections introduction or conclusion basically any sections that don't require research and finally we compile the final report so let's test this all end to end again we pass in a topic I'll just show you that again overview of a few agent frameworks I give a structure that I want there we go
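The overall two-phase flow just described can be sketched in plain Python. In the video the fan-out is done with LangGraph's Send API and a compiled subgraph; the sketch below swaps in `asyncio.gather` purely to illustrate the ordering, and `build_section_with_research`, `write_final_section`, and `run_report` are illustrative stubs rather than the repo's functions.

```python
import asyncio
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Section:
    name: str
    research: bool
    content: str = ""


async def build_section_with_research(section: Section) -> Section:
    """Stub for the per-section subgraph: queries, web search, writing."""
    await asyncio.sleep(0)
    return replace(section, content=f"[researched] {section.name}")


async def write_final_section(section: Section, context: str) -> Section:
    """Stub for writing an intro or conclusion from the finished body."""
    await asyncio.sleep(0)
    n = context.count("[researched]")
    return replace(
        section, content=f"[synthesized from {n} sections] {section.name}"
    )


async def run_report(plan: List[Section]) -> str:
    # Phase 1: research and write every section that needs research, in parallel.
    body = await asyncio.gather(
        *(build_section_with_research(s) for s in plan if s.research)
    )
    context = "\n\n".join(s.content for s in body)
    # Phase 2: write the remaining sections in parallel, each one seeing
    # everything that was written in phase 1.
    finals = await asyncio.gather(
        *(write_final_section(s, context) for s in plan if not s.research)
    )
    done = {s.name: s for s in [*body, *finals]}
    # Compile the report in the originally planned order.
    return "\n\n".join(done[s.name].content for s in plan)


plan = [
    Section("Introduction", research=False),
    Section("LangGraph", research=True),
    Section("CrewAI", research=True),
    Section("Conclusion", research=False),
]
report = asyncio.run(run_report(plan))
```

The two `gather` calls are deliberately sequential with respect to each other: the introduction and conclusion cannot start until the body sections exist, but within each phase nothing waits on anything else.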

cool and we're done we can look at the whole report so again we get kind of a breakdown of LangGraph CrewAI you can see it kind of preserves a similar style tries to maintain a structural element but it's not totally enforced some sections don't have one again each section has sources kind of a nice crisp distillation at the end here with a table pretty neat you can see this is all done pretty quickly and I'll show you the trace to prove it so here's the trace in LangSmith we can see

it took 43 seconds for all that not too bad it's because of all the parallelization we do now let's go ahead and kind of inspect what we did so what was the process here so first we use Anthropic to generate some search queries to help us plan the report then we do web search so it's pretty nice you can see we get a whole bunch of information about the various frameworks then from all that information from the search results and from what we provided Anthropic will write the outline you can see here's

the sections and there's an introduction description LangGraph CrewAI Swarm Workflows conclusion so there we go we have our planning done we've done web search to help kind of make sure our plan is interesting and up to date and we now have like a very clear scope of work now what's neat here is you can see we kick off four of these build section with web research and these all run in parallel which is pretty nice you can see each of these are 15 to 25 seconds so it takes some time right because

you have to do web search you have to write the section of the report and then we can see we parallelize writing the final sections we write the introduction and conclusion in parallel as well so you can see it's only about 40 seconds to get an output that looks like this which collates a lot of really interesting information with sourcing for you from the web into this nicely adjustable form which is entirely customizable now let me show you how I actually use this I'm in the repo right now go to this .env.example copy

this to a .env file and fill this out that's step one now step two is I actually can spin up this project in LangGraph Studio which is a very nice way to interact with my agent locally or it can be deployed for now I'm going to use just the locally running desktop app so here I'm in Studio here's the agent we just showed and we walked through in gory detail how you actually build this now how do you actually use it so what's pretty nice is we saw that the input is both a

topic and a structure if you go to the repo go to that report examples directory and I show a few different report styles that I like here's a cool one on kind of business strategy so what I can do is I go back over here to Studio I open assistants and you can see I've created a few different assistants now let's open up one business strategy you can see here I just paste in the report structure I want for this particular kind of writer type so this is really nice because the report structure is just

a configurable parameter of my agent I can create many different report writing assistants that all have different styles different focuses and can write really nicely refined specific reports per my specifications here now this is one I like on business case studies so it's going to build a report type that's focused on business strategy per the specifications I have in that outline now I can give it a topic what do we want to learn about say successful software developer focused marketplaces okay kick that off we can see we're generating the plan first cool it's going to do

a search query about developer marketplaces in general we can see this right here generated the plan and now it's basically researching each section all in parallel neat you can see there was the web search now it's doing the section writing now it's kind of hard to see but this is actually happening for all of our sections in parallel neat you can kind of get a clue what it's going to talk about you see it looked up Upwork Stack Overflow GitHub cool and here's our final report so here's the report we

just created I just brought it over to the repo as a markdown file so we can see it did a deep dive in GitHub's developer ecosystem and what kind of drove success there some interesting insights bunch of nice sources Stack Overflow and Upwork as well kind of a summary table highlighting an overview of the research so this is showing an example of how you can create a customized assistant that writes reports of a particular type in this case focusing on business case studies looking specifically at kind of analogous prior comps to a current kind of business

question or scenario but you can create as many assistants as you have general report styles or types that you want to write so overall this repo provides a kind of nice way to do very customized report generation it's the result of a lot of trial and error that I've kind of worked on over the last few months and again it does kind of this planning up front generates report sections parallelizes research on all the sections that require it and then a final synthesis writing any final sections like introduction and conclusion based on the results

of the other sections that you just researched and wrote in parallel so you can see it's very customizable because you can use a user-defined outline to create different report structures or styles entirely up to you in terms of optimizing and tuning the prompts all the research is done in parallel so it's pretty quick like 40 seconds to get a really nice kind of long form report and yeah feel free to play with it leave any comments below and the repo is of course open source so

feel free to add any contributions or leave any comments thank you
