SVD Visualized, Singular Value Decomposition explained | SEE Matrix, Chapter 3 #SoME2

295.82k views

Visual Kernel

A video that explains Singular Value Decomposition and visualizes the linear transformation in action.

...

Video Transcript:

[Music] SVD, the singular value decomposition, is the grand finale of linear algebra. On one hand, it combines all the important concepts of the subject into just one theorem; on the other hand, it's extremely relevant and applicable in the age of data science and machine learning. This is the one matrix decomposition that rules them all. On the surface, the SVD says that any matrix, regardless of symmetry, rank, or shape, can unconditionally be decomposed into three very special matrices. But there is actually an intuitive and elegant visual interpretation underneath. I think it will be quite cool to see for ourselves, don't you?

And yep, there is a catch: to truly internalize and appreciate the theorem, a journey is required, hence the previous chapters. For example, it's good to know that a diagonal matrix stretches each axis while an orthogonal matrix produces a rotation. I highly recommend Chapter 2; to be honest, if you understand spectral decomposition, you understand 80% of SVD. There are three more tiny steps we need to take to complete the journey.

You probably noticed that all the visualizations we have seen so far come from square matrices. Very sneaky on my part. However, the primary appeal of SVD is that it generalizes to rectangular matrices as well, so we need to understand how to visualize such things.

But first, what exactly is the difference between the two vectors here? My teachers say the left one is in R² and the right one is in R³. I used to think those were the same thing. I mean, they both have 1 for x, 2 for y, and nothing for z, right? Even if we plot them, they look somewhat similar. I go ahead and ask the vector on the right about its z value, and it says, "Oh, zero." I go ask the left vector for its z value, and it gets very confused, having no idea what a third dimension is even like. In addition, I can nudge the R³ vector around so that its z value is no longer zero, but the R² vector, no matter how I rotate, stretch, or scale it, is always devoid of z. Despite looking similar, in reality they are completely different species that live in different dimensions of the universe. If those two types of vectors are so different, is there a mechanism that can transform one into the other?

And that's the power of a rectangular matrix. In particular, a 2x3 matrix has the ability to take a vector in R³ and transform it down to a vector in R². This makes quite a lot of sense if we just look at the matrix-vector multiplication: a matrix of size m by n has the power to transform a vector in the nth dimension into a vector in the mth dimension. This is why we say an m by n matrix applies a linear transformation from Rⁿ to Rᵐ. Remember, we can represent a vector as an arrow, an arrow as a dot, and a collection of dots as an object.
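The shape rule above can be seen directly in code. This is a minimal sketch assuming NumPy; the matrix entries are made up for illustration and are not taken from the video. An array of shape (m, n) only accepts inputs with n components and returns m components, which is the Rⁿ to Rᵐ idea, and the R² vector is rejected because it has no z slot at all.

```python
import numpy as np

# Hypothetical 2x3 matrix (example values only): 3-component inputs, 2-component outputs.
A = np.array([[1.0, 2.0, 0.5],
              [0.0, 1.0, 3.0]])

v_r3 = np.array([1.0, 2.0, 0.0])  # lives in R^3: it has a z slot (currently 0)
v_r2 = np.array([1.0, 2.0])       # lives in R^2: there is no z slot at all

print(v_r3.shape, v_r2.shape)     # (3,) (2,)

# An m x n matrix maps R^n -> R^m: here (2, 3) @ (3,) -> (2,)
w = A @ v_r3
print(w)                          # [5. 2.]

# Feeding the R^2 vector to the same matrix fails because the shapes don't line up.
try:
    A @ v_r2
except ValueError as err:
    print("shape mismatch:", err)
```

The failed multiplication is the numerical version of the confused R² vector: it simply has no third component for the matrix to act on.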

simply illustrating the visual of a matrix is simple but the process of interpretation is hard the visualization of rectangular Matrix gets very confusing so it's good if we try to understand the simplest case first which is this Matrix you see it's similar to the identity matrix by the rectangular version of it for the context of this video let's give it a cool name the dimension rasor this Matrix here represents the simplest form of linear transformation from R3 to R2 its multiplication with any Vector always preserve the X and Y but completely remove the Z value

regardless of the initial Z so what this means is that for example the vector 1 2 1 in R3 we can map down to the vector 1 two but all the vector in the form of one two anyz transforms to one two we can also have something like Dimension Adder for example this 3x2 Dimension Adder here basically append zero as a z value for whichever input R2 vector oh actually I think here's a good place for me to show you a composition from R3 to R2 whenever we multiply Matrix and Matrix we essentially combine their

distinct linear Transformations here we can multiply the dimension eraser with this diagonal matrix and let's call this product Matrix C the dimension eraser takes away the third dimension and then diagonal matrix stretches the X and Y axis accordingly The Matrix C since it's a composition of the two matrices on the right it basically encapsulates the two Transformations but in just one go chapter 2 Flashback symmetric Matrix is a square Matrix in which on two sides of the diagonal line the entries are identical it has a very strong property which almost no other matrices have the

IG vectors symmetric Matrix are perpendicular to each other so that means if we normalize the igon vectors and package them into a matrix we get an orthogonal Matrix which implies rotation the transpose rotates the IG Vector to align with the standard basis if we don't take the transpose that Matrix rotates a standard basis to the icon Vector symmetric Matrix is nice we got to take advantage yet every everyone knows most matrices in nature are not symmetrical but we just happen to have the ability to artificially construct symmetry out of nowhere consider this Matrix here which

is obviously not symmetrical if we take the transpose and multiply them together we get a square Matrix but also symmetric we can also put the transpose on the left and do the multiplication we once again see a square Matrix that is also symmetrical it's good to take a moment here to appreciate we just created two symmetric matrices from a rectangular Matrix a in general this is true for any Matrix a which a a transpose and a transpose a are symmetric matrices see if you can prove this statement on your own for now we stick to

the concrete case when a is 2x3 and let's give them some meaningful names as well since they're symmetric matrices the letter s better be in there let's call A A transpose s left and a transpose a s right I know that you know s left and S right are symmetric matrices so s left would have two perpendicular IG vectors in R2 and as right would have three perpendicular IG vectors in R3 since all those IG vectors are closely related to the original Matrix a we have special names for them as well the igam vector of

s left are known as the left singular Vector of a and likewise the igam vectors of s right are the right singular vectors up next I'm about to provide two facts without going to the detail s left and S right are known as PSD m icies this implies the igen value for each IG Vector are non- negative the second fact which is now obvious if we sort the igen values in descending order for both set the overlap ones are numerically identical the biggest ion value of s left equals to the biggest ion value of s

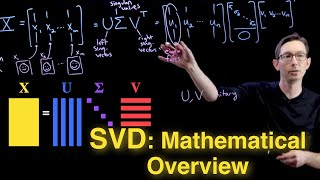

right and so [Music] forth the left over igen value is guaranteed to be zero just like the singular vectors those shared igen values are indirectly derived from the very original Matrix a if we take the square root and my friend those are the singular values of Matrix a a lot of things we did so far seem random we have ambiently collected all pieces of knowledge which we need to understand SBD Now Behold his entrance once again any Matrix a can be unconditionally decomposed into three very special matrices in which the Matrix Sigma is rectangularly diagonal
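The two facts about S_left and S_right can be checked numerically before going further. This rough sketch assumes NumPy and uses an arbitrary 2x3 example matrix, not one from the video. It builds both products, sorts their eigenvalues in descending order, and compares the square roots of the shared eigenvalues with the singular values reported by NumPy's own SVD routine.

```python
import numpy as np

# Hypothetical 2x3 matrix A (example values chosen for illustration).
A = np.array([[3.0, 1.0, 2.0],
              [1.0, 0.0, 4.0]])

S_left = A @ A.T    # 2x2, symmetric
S_right = A.T @ A   # 3x3, symmetric
assert np.allclose(S_left, S_left.T) and np.allclose(S_right, S_right.T)

# eigvalsh is intended for symmetric matrices and returns eigenvalues in
# ascending order, so reverse them to get descending order.
eig_left = np.linalg.eigvalsh(S_left)[::-1]
eig_right = np.linalg.eigvalsh(S_right)[::-1]

print(eig_left)    # two non-negative eigenvalues
print(eig_right)   # the same two values, plus a leftover that is (numerically) zero

# Square roots of the shared eigenvalues are the singular values of A.
sigma_from_eig = np.sqrt(eig_left)
sigma_from_svd = np.linalg.svd(A, compute_uv=False)  # returned in descending order
print(np.allclose(sigma_from_eig, sigma_from_svd))   # True
```

Because of floating-point rounding, the leftover eigenvalue of S_right typically comes out as a tiny number rather than an exact zero.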

The matrices V and U are orthogonal matrices. But what exactly are they? The matrix Σ has the same dimensions as matrix A. The numbers on its diagonal are the singular values of A arranged in descending order, and every other entry is zero. The matrix U contains the normalized eigenvectors of S_left as its columns, arranged in descending order of their eigenvalues; another way of saying this is that U contains the left singular vectors of A. On the other hand, the matrix V contains the normalized right singular vectors of A, also arranged in descending order, and then we transpose it to get Vᵀ. And remember, this generalizes to every kind of matrix A.

Now, the moment I've been waiting for: the visualization. The matrix A by itself applies a complicated linear transformation from R³ to R², but using SVD, it can be perfectly understood as sequentially applying the three simple matrices on the right. The blue matrix, Vᵀ, is an orthogonal matrix that applies a rotation such that the right singular vectors return to the standard basis. More precisely, the right singular vector with the biggest singular value lands on the x-axis, the one with the second-biggest singular value lands on the y-axis, and so forth. The matrix Σ is rectangular diagonal; it's essentially a square diagonal matrix composed with a dimension eraser. The dimension eraser removes the third dimension, and then the diagonal matrix stretches the x and y axes based on the singular values. In the final step, the matrix U rotates the standard basis to align with the left singular vectors. And boy, you bet the composition of those transformations is exactly the same as A. [Music]

Wikipedia says a few things about the visual flavor of SVD: any matrix essentially just maps a sphere into an ellipsoid across different dimensions. Let's see for ourselves. After singular value decomposition, the first step of any linear transformation is always a rotation in Rⁿ, and if you rotate a sphere, it's still a sphere. Taking away the third dimension of a sphere in R³ makes it a circle in R². Stretching the x and y axes by different amounts turns the circle into an ellipse, and the final rotation preserves the geometry of the ellipse, just moving it to a different position in R². By no means is that a rigorous proof, but it certainly gives some intuition.

Lastly, let's look at an example of a linear transformation from R² to R³. The spirit of SVD very much still holds. First, we begin with a rotation in R². Next, since the matrix Σ is 3x2, we scale based on the singular values and then add a new dimension. Finally, we rotate the standard basis x, y, z to align with the left singular vectors.

This video could end now. Nonetheless, it's good to contemplate a question: is the entire purpose of SVD to decompose a linear transformation into those four sequential actions? Is our visualization the correct interpretation of SVD itself? Not exactly. I mean, what even is a matrix to begin with? This mathematical object has different interpretations in different contexts; the same matrix can mean very different things to different people. Another popular interpretation of SVD is to view a high-rank matrix as a sum of rank-one matrices. [Music] An application of this is low-rank approximation: we can view a picture as a massive matrix of pixels and gradually approximate that picture by adding more and more rank-one matrices.

A wise man once said, "The purpose of computing is insight, not numbers." I think the same goes for visualizations. SVD as a math exercise is an intense algebraic procedure to follow; sometimes, after three pages of matrix multiplication and characteristic polynomials, I understood nothing of what I had just done. But decomposing and visualizing a matrix A as four distinct pieces of simple transformation gives you so much more insight than you'd otherwise have. Every single step is interpretable and makes perfect sense; we know precisely what's going on. In particular, that very initial step of rotation from the right singular vectors onto the standard basis actually captures the essence of something called principal component analysis; I promise a video on it in the future.

Even when things extend beyond three dimensions, this type of decomposition still gives us a reasonable intuition for linear transformations on vectors, allowing us to reason about things we can't see. We know that a rotation does not make things bigger or smaller. Therefore, when this matrix acts on a higher-dimensional object, the first step doesn't scale, squash, or blow anything out of proportion; it's a pristine rotation around the origin. After that, the last two dimensions of the object are taken away.

Sometimes learning the final theorem of a subject is like climbing a mountain: we get nice scenery at the top and a higher-level overview, and it's nice to look afar at the other summits. What we see there is the Fourier transform. I just want to mention one similarity the two monumental theorems share: an obsession with extreme generalization and decomposition. The Fourier transform says that any (reasonably well-behaved) function can be decomposed as a sum of sinusoidal wave functions. And when you see the pi creature, you already know it's a good video. I left a link here. Thank you for watching through to the end. [Music]
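To tie the visual story back to numbers, here is a rough NumPy sketch, again with a made-up 2x3 matrix rather than one from the video. It checks two claims from the transcript: applying Vᵀ, the dimension eraser, the diagonal stretch, and U in sequence reproduces A, and A equals a sum of rank-one matrices, the view behind low-rank approximation. One caveat: the orthogonal factors NumPy returns may include a reflection, so "rotation" is used loosely here, as in the video.

```python
import numpy as np

# Hypothetical 2x3 matrix (example values only).
A = np.array([[3.0, 1.0, 2.0],
              [1.0, 0.0, 4.0]])

# full_matrices=True gives U (2x2), s (2,), Vt (3x3)
U, s, Vt = np.linalg.svd(A, full_matrices=True)

# Build the rectangular diagonal Sigma (same shape as A) from two pieces:
# a "dimension eraser" E (2x3) followed by a square diagonal stretch D (2x2).
E = np.eye(2, 3)   # rows (1, 0, 0) and (0, 1, 0): keeps x and y, drops z
D = np.diag(s)     # stretches x and y by the singular values
Sigma = D @ E      # 2x3: singular values on the diagonal, zeros elsewhere

# U and Vt are orthogonal (rotations, possibly combined with a reflection).
assert np.allclose(U.T @ U, np.eye(2))
assert np.allclose(Vt @ Vt.T, np.eye(3))

# Apply the four steps to one vector: orthogonal map in R^3, erase z, stretch, orthogonal map in R^2.
x = np.array([1.0, -2.0, 0.5])
step_by_step = U @ (D @ (E @ (Vt @ x)))
print(np.allclose(step_by_step, A @ x))     # True

# Rank-one view: A is the sum of s_i * outer(u_i, v_i).
rank_one_terms = [s[i] * np.outer(U[:, i], Vt[i, :]) for i in range(len(s))]
print(np.allclose(sum(rank_one_terms), A))  # True

# Low-rank approximation: keep only the first (largest) term.
A1 = rank_one_terms[0]
print(np.linalg.matrix_rank(A1))            # 1
```

Keeping only the first few rank-one terms is the idea behind the picture-approximation example in the transcript; for an image, A would be the much larger matrix of pixel values.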
