Apple DROPS AI BOMBSHELL: LLMS CANNOT Reason

173.25k views · 4,563 words

TheAIGRID

Prepare for AGI with me - https://www.skool.com/postagiprepardness

🐤 Follow Me on Twitter https://...

Video Transcript:

So Apple has come out with some pretty incredible research, and I think it has the AI community completely divided, because it fundamentally shifts what we know about AI models. I think this genuinely could change everything because of what the research is suggesting, so I'm going to break it all down for you. This is one of the biggest papers to come out recently, and it's quite surprising.

Essentially, Apple researchers have published a paper called "GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models." The short summary of what it suggests is this: "We hypothesize that current LLMs are not capable of genuine logical reasoning; instead, they attempt to replicate the reasoning steps observed in their training data." So the paper is suggesting that current large language models, models like GPT-4o and Claude 3.5 Sonnet, aren't as smart as we think they are. They are not reasoning through problems at all; the only thing they're doing is statistical pattern matching. That would have severe implications for certain things moving forward.

The research dives into a few things, which I'm going to explain, and it's pretty simple to understand, so let's get into why this is so impactful. One of the ways we assess how models score on reasoning, or essentially how smart these models are, is with benchmarks, and one of those benchmarks is GSM8K. That stands for Grade School Math 8K, a set of roughly 8,000 grade-school math questions. The Apple researcher says that when OpenAI released GSM8K three years ago, GPT-3, one of the early models in the GPT series, scored 35% on the GSM8K test. Today's models with 3 billion parameters are 
surpassing 85%, and the large ones are hitting 95%. But he asks: has reasoning really improved? How much of this is genuine logical/symbolic reasoning, versus pattern recognition, inadvertent data contamination, or overfitting?

If we look back, GPT-3, at 175 billion parameters, scored 35%, while today's tiny models with only 3 billion parameters are surpassing 85%, and the larger ones are hitting 95%. We've made incredible progress in a short space of time, but we need to ask how much of that is improved reasoning and how much is data contamination. Data contamination means that some of the test questions and their answers have slipped into the training data, so the model essentially remembers what it was trained on instead of actually getting smarter. And how much of it is just pattern recognition?

This graph shows the GPT-3 models from 2021 alongside the state-of-the-art models in 2024, and GSM8K accuracy just keeps going up. So this team of Apple researchers decided that if we want to test whether these models' reasoning is actually improving, we need a new benchmark. And it's literally only a little bit different, because all it does is change things ever so slightly. They said: "Introducing GSM-Symbolic, a new tool to test 
the limits of LLMs in mathematical reasoning. We create symbolic templates from the GSM8K test set, enabling the generation of numerous instances and the design of controllable experiments. We generate 50 unique GSM-Symbolic sets, essentially like GSM8K examples but with different values and names."

So all they did was take questions from the GSM8K test set and change the values and the names. In a math question you might have "Jimmy has five apples," so they change the name from Jimmy to John, the apples to oranges, and six oranges to seven oranges. If these models are truly capable of reasoning, they should handle these problems fine, because only the names and numbers have changed, not the actual problems.

On the left-hand side you can see the GSM8K question, with the values that are going to be changed highlighted: Sophie, nephew, 31, 8, 9, and 62. Those are the only things that change. On the right is the GSM-Symbolic template: it has the name, the family member, the total, and so on, and it varies these within a certain range. That's how the template is constructed. It's important to understand that all they've done is change the names and the values.

But the crazy thing is that when they changed the names and values, there was a large discrepancy between the results the research labs reported for these models and what the researchers actually measured. He says here: "Current accuracies on GSM8K are not reliable. We observe 
a large performance variation: for example, Llama 8B scores anywhere between 70% and 80%, Phi-3 scores between 75% and 90%, and so on. For most models, the average performance on GSM-Symbolic is lower than on GSM8K." And remember, GSM-Symbolic is just a variation of a test these models already took, with different names and different values.

There's quite a discrepancy between the reported values and the actual values on GSM-Symbolic, which is surprising. The reported GSM8K result is the dashed line, and then you can see the spread of what these models actually got: between 90% and 98% for one, 70% to 80% for another, and roughly 70% to 85% for this one. So they're asking why on Earth there is such a giant discrepancy in these models' results when the only things that changed are the names and the values.

On this bigger chart, some of the models with the largest drops are Phi-2, Gemma 2, and a few others. It seems the smaller models are probably the ones with more overfitting and more data contamination, among other things. The researchers state: "The fragility of supposed LLM reasoning: LLMs remain sensitive to changes in proper names (e.g., people, foods, objects), and even more so when numbers are altered." He asks: would a grade-school student's math test score vary by 10% if we only changed the names? I don't think it would. If you gave someone a math test and only changed the names, I don't think the outcome would shift by 10%, considering it's just a name. That could mean these LLMs are 
simply memorizing things or doing pattern recognition. That isn't good, because if it's true, these models aren't as smart as we think, and we might need better architectures to solve the reasoning problem.

There's a lot more going on here. This plot shows how these changes affect other models: GSM8K is the dashed line; if we change the names there's a slight drop, if we change the numbers there's another drop, and if we change both there's an even bigger drop. That's quite surprising, because it means these models get confused when the names are changed, which doesn't really make any sense.

Next, they adjusted the question difficulty: "We introduce three new variants of GSM-Symbolic to study model behavior: removing one clause (GSM-M1), adding one clause (GSM-P1), or adding two clauses (GSM-P2)." So there's the standard GSM-Symbolic, then GSM-P1, which is an increase in difficulty, and GSM-P2, which is another increase. I don't think this specific result is too bad, because as you increase the difficulty, performance should go down, and that's what we see here. But the added difficulty doesn't seem that large, while the drops in performance are really large, which raises the question of whether these models truly understand what's going on. The o1 models do seem to hold up a little better, but other models like GPT-4o and GPT-4o mini show much bigger drops.

This is where the research gets really crazy, and where I start to question whether these models truly understand what's going on, 
because the researchers decided to do something even crazier. They state: "This begs the question: do these models truly understand the mathematical concepts? Introducing GSM-NoOp: we add a single clause that seems relevant but doesn't contribute to the overall reasoning (hence 'no-op')." So they took traditional GSM8K questions and added something that wasn't really relevant to the question at all.

Here's one example, and you're going to see the results, which are pretty crazy: "Oliver picks 44 kiwis on Friday. Then he picks 58 kiwis on Saturday. On Sunday, he picks double the number of kiwis he did on Friday, but five of them were a bit smaller than average. How many kiwis does Oliver have?" A statement like "but five of them were a bit smaller than average" doesn't change the number of kiwis at all. It doesn't affect the math; bigger or smaller, a kiwi is still a kiwi.

And the crazy thing is, they say: "We added seemingly relevant statements to the questions that are, in fact, irrelevant to the reasoning and conclusion. However, the majority of models fail to ignore these statements and blindly convert them into operations, leading to mistakes." So the models see "five of them were smaller than average" and get really confused, when they should just disregard it because it's not relevant.

Now take a look at the performance drops when this is done. This is insane. Going from GSM8K to GSM-NoOp, even o1-preview, the "Orion" model that we think is probably the best model that currently exists, shows a 17.5% drop from GSM8K to the 
GSM-NoOp accuracy, which is pretty outstanding. The reason I highlight o1-preview, this model right here, is that it's the model that's supposed to have the best reasoning capabilities. There shouldn't be such a large slope here, yet what we see is a consistent drop in performance across all the models once irrelevant clauses are added to the questions. If o1-preview were as good at reasoning as OpenAI claims, this bar should be up here, because these models work through problems step by step to get to the final solution. I'm not sure how OpenAI trained o1, and there's a lot of secrecy behind it, but if a model's reasoning drops 44%, or 32% for GPT-4o, when seemingly irrelevant information is added, I think that's remarkable. Think how many times we've added data to a problem in ChatGPT or GPT-4o: we give it a lot of context, and arguably some of that context is completely irrelevant. A 30% to 40% drop in reasoning output is pretty awful. There's no other way to put it: in my honest opinion, this is a truly shocking finding, because I wouldn't have thought that adding such irrelevant statements would cause such a large performance drop.

What's also crazy is that we see a similar drop for GPT-4o and o1-mini: o1-mini's drop is 29% and GPT-4o's is 32%. o1-preview does do better at 17.5%, but I wouldn't expect that big a drop for a model specifically trained to reason. You can also see the other open-source models here, and a lot of them are much smaller, but I'm glad they included the o1 series, because leaving it out would have been a glaring omission, considering it's trained specifically for reasoning.

So the craziest thing about this, and the reason I said it could fundamentally change everything, is this: "Can scaling data, models, or compute fundamentally solve this? We don't think so." They state that scaling data, models, or compute cannot fundamentally solve this issue. "The OpenAI o1 series is performing better but still suffers from slight performance variations. o1-preview shows significant improvements, but it still suffers from silly mistakes like this," where it blindly applies the inflation rate.

The question is: "Liam wants to buy some school supplies. He buys some erasers that now cost $6.75 each, 10 notebooks that now cost $11 each, and a ream of bond paper that now costs $19. How much should Liam pay now, assuming that due to inflation, prices were 10% cheaper last year?" When you read this question, you have to disregard the fact that prices were 10% cheaper last year, because Liam is buying this stuff now. Most humans who look at this kind of problem will get that straight away: wait a minute, why would I look at last year's inflation? Disregard that, work out how much everything costs now, and answer the question. But when this model does its reasoning, it says "Step 1: calculate last year's prices by reducing the current price by 10%," which is just not what you would do at all. You're not even supposed to do that step, so the 
model completely gets this wrong. The question is: if these models were actually good at reasoning, if they truly understood what was going on, would they make such simple mistakes?

He goes on to state that understanding LLMs' true reasoning capabilities is crucial for deploying them in real-world scenarios where accuracy and consistency are non-negotiable, especially in AI safety, alignment, education, healthcare, and decision-making systems. That's truly important: if we're saying we're going to get to AGI and potentially deploy this kind of technology worldwide, we need to make sure a simple addition to a prompt can't throw off the model by as much as 40%. One of the reasons these models aren't used in certain environments is that some applications need accuracy very close to 100%, and anything that deviates from that can have a catastrophic impact. For example, the share of planes that go down, I think it's like 0.[…]; it's ridiculous. The failure rate for certain parts is insanely low. The point I'm trying to make, guys, is that we can't have models that fail in something like 90% of scenarios whenever something is added to the end of a prompt and the model can't tell whether it's relevant or not. That's really important to understand, because if this is the case, maybe we won't put AI in certain areas like math or certain reasoning tasks, since it fundamentally doesn't understand some of these problems.

He goes on to state that developing models that move beyond pattern recognition to true logical reasoning is the next big challenge for AI. The reason this is crazy is that it could be a massive setback for AI. If this paper has essentially proved that these models aren't capable of reasoning, it would mean OpenAI's recent o1 series just isn't that good. I know that might come as a huge surprise, but it might mean these models are simply bigger, which means more data and potentially more data contamination. OpenAI doesn't always reveal how it trains its models or where it gets its data, and no third parties can analyze those data sources; of course, these are private companies. But I think this paper is really important, because the moment we bridge this gap and solve this problem is the moment we can actually start to speedrun to AGI.

He also says, "Overall, we found no evidence of formal reasoning in language models," which is an insane statement, "including open-source models like Llama, Phi, Gemma, and Mistral and leading closed models, including the recent OpenAI GPT-4o and o1 series." One statement you might want to take away from this is that "their behavior is better explained by sophisticated pattern 
matching, so fragile, in fact, that just changing names can alter results by about 10%." This is genuinely shocking research, because if you take a test and changing the names alone causes a 10% drop, that's crazy, especially given where this technology is going to need to be used. Just think about it: imagine giving your problem to a model that's advertised as scoring 95%, but because you put in your problem with your own names and numbers, the model gets it wrong on a variety of occasions. It might get it right 80% of the time, but 20% of the time the reasoning steps are wrong. Are you going to catch that 20%? Are you even going to be aware of it? This is really important for anyone using AI in certain use cases, because AI is generative and the results are not always the same.

He says, "We can scale data, parameters, and compute, or use better training data for Phi-4, Llama-4, and GPT-5, but we believe" (and this is the Apple researcher saying this) "this will result in better pattern-matchers, not necessarily better reasoners." That's absolutely crazy: they're basically saying these models are essentially just pattern-matchers. Genuinely, this is surprising. I would have thought this could happen for models like GPT-4o, GPT-3.5, or the open-source models, but for the o1 series, that's genuinely crazy. When we look back at this chart and see a 17.5% drop, that's a bombshell paper. There are some more results for o1-mini here; you can screenshot this and take a look.

Now, the crazy thing is that this isn't the only paper that talks about this. There's another paper that just didn't get much attention, and I'm not sure why, from Consequent AI, called "Functional Benchmarks for Robust Evaluation of Reasoning Performance, and the Reasoning Gap." Essentially, they did pretty much the same thing, and they found a reasoning gap of 58% to 80% among state-of-the-art and open-source models on static benchmarks, which was pretty crazy. The reasoning gap for OpenAI's GPT-4 was 58.35%.

Before I get into the actual results: they did the same thing. They said that models that solve a reasoning test should exhibit no difference in performance between the static version of a problem and a snapshot of its functional variant, which, once again, is where they changed the numbers wherever they could. These are the results they had at the time. This research paper is from earlier in the year, which is why we only see GPT-4 there, along with Anthropic's Claude 2.
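
The GSM-Symbolic idea described in the transcript (turn a GSM8K question into a template, then resample the names and numbers) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the template wording, the hypothetical `instantiate` and `make_set` helpers, and the value ranges are invented here, loosely modeled on the Sophie/nephew example, and are not the paper's actual template or code.

```python
import random

# Hypothetical GSM-Symbolic-style template. The wording, placeholder names,
# and value ranges are illustrative assumptions, not the paper's template.
TEMPLATE = (
    "{name} is gathering toys for a {family}. The bag of building blocks has "
    "{x} blocks, the bin of stuffed animals has {y} animals, and the stacking "
    "tower has {z} rings. After buying a tube of bouncy balls, {name} has "
    "{total} toys in all. How many bouncy balls came in the tube?"
)
NAMES = ["Sophie", "Liam", "Priya", "Mateo"]
FAMILY = ["nephew", "niece", "cousin", "grandson"]


def instantiate(rng: random.Random) -> tuple[str, int]:
    """Fill the template with fresh names and numbers; return (question, answer)."""
    x, y, z = (rng.randint(5, 40) for _ in range(3))
    answer = rng.randint(1, 30)  # sample the answer first so it is always positive
    total = x + y + z + answer   # derive the total, keeping the problem consistent
    question = TEMPLATE.format(
        name=rng.choice(NAMES), family=rng.choice(FAMILY),
        x=x, y=y, z=z, total=total,
    )
    return question, answer


def make_set(seed: int, size: int = 5) -> list[tuple[str, int]]:
    """One reproducible 'symbolic set': same template, different draws per seed."""
    rng = random.Random(seed)
    return [instantiate(rng) for _ in range(size)]


# 50 sets, mirroring the "50 unique GSM-Symbolic sets" mentioned in the video.
sets = [make_set(seed) for seed in range(50)]

if __name__ == "__main__":
    question, answer = sets[0][0]
    print(question)
    print("answer:", answer)
```

Because each answer is computed from the same draws that fill in the question, every instance stays solvable. A model that genuinely reasons should score about the same on every set, which is the property the paper is probing.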
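
To make the GSM-NoOp kiwi example concrete, here is the arithmetic for the correct reading next to one way the irrelevant clause can be "blindly converted into an operation." Treating it as a subtraction is an illustrative assumption, and the function names are hypothetical.

```python
def kiwis_correct(friday: int, saturday: int) -> int:
    """Correct reading: Sunday is double Friday; fruit size never changes the count."""
    sunday = 2 * friday
    return friday + saturday + sunday


def kiwis_noop_mistake(friday: int, saturday: int, smaller: int) -> int:
    """Hypothetical failure mode: the no-op clause is turned into a subtraction."""
    return kiwis_correct(friday, saturday) - smaller


print(kiwis_correct(44, 58))          # 190
print(kiwis_noop_mistake(44, 58, 5))  # 185
```

The "five of them were a bit smaller" clause never enters the correct function at all; that is what "no-op" means here.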
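
The inflation question works the same way. The sketch below prices Liam's order correctly and then the way the quoted "Step 1" does it. The transcript does not say how many erasers Liam buys, so `eraser_count` is left as a hypothetical parameter; the helper names are also illustrative.

```python
from decimal import Decimal

# Current prices quoted in the question; Decimal avoids float rounding on money.
PRICES_NOW = {"eraser": Decimal("6.75"), "notebook": Decimal("11"), "bond_paper": Decimal("19")}


def order_total(quantities: dict[str, int], prices: dict[str, Decimal]) -> Decimal:
    """Sum quantity * unit price over every item in the order."""
    return sum((qty * prices[item] for item, qty in quantities.items()), Decimal("0"))


def liam_quantities(eraser_count: int) -> dict[str, int]:
    # eraser_count is a free parameter: the transcript does not say how many erasers.
    return {"eraser": eraser_count, "notebook": 10, "bond_paper": 1}


def pay_now(eraser_count: int) -> Decimal:
    """Correct reading: Liam pays today's prices; last year's prices are irrelevant."""
    return order_total(liam_quantities(eraser_count), PRICES_NOW)


def pay_with_inflation_step(eraser_count: int) -> Decimal:
    """The flawed 'Step 1' from the video: cut every current price by 10% first."""
    last_year = {item: price * Decimal("0.9") for item, price in PRICES_NOW.items()}
    return order_total(liam_quantities(eraser_count), last_year)


print(pay_now(2))                  # 142.50
print(pay_with_inflation_step(2))  # 128.250
```

Whatever the eraser count, the flawed path always comes out exactly 10% low, because it answers a question about last year that nobody asked.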
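
Several numbers in the video (the 17.5% and 32% NoOp drops, the roughly 10% swing from renaming, the 58% to 80% "reasoning gap") describe changes in accuracy. It matters whether such a figure is an absolute drop in percentage points or a drop relative to the original score. The two hypothetical helpers below compute both. Treating the Consequent AI reasoning gap as the relative version is an assumption made here, not a definition taken from their paper, and the accuracies in the example are made up.

```python
def drop_in_points(baseline: float, variant: float) -> float:
    """Absolute accuracy drop in percentage points (e.g. 95.0 -> 77.5 is 17.5 points)."""
    return baseline - variant


def relative_drop_percent(static: float, functional: float) -> float:
    """Drop as a percentage of the static score (assumed 'reasoning gap' definition)."""
    if static <= 0:
        raise ValueError("static accuracy must be positive")
    return 100.0 * (static - functional) / static


# Hypothetical accuracies, chosen only to show how the two measures diverge.
static_acc, variant_acc = 80.0, 60.0
print(drop_in_points(static_acc, variant_acc))         # 20.0
print(relative_drop_percent(static_acc, variant_acc))  # 25.0
```

A fall from 80% to 60% is a 20-point drop but a 25% relative drop, so the same result can be reported either way. It's worth checking which convention a chart uses before comparing numbers across papers.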

Related Videos

26:45

Big AI News : Googles Gemini -2 , Claude 3...

TheAIGRID

22,478 views

8:27

AI Can Only Do 5% of Jobs: MIT Economist F...

Bloomberg Technology

76,366 views

25:29

Co-Creator Stone on South Park and the Pro...

Bloomberg Live

107,580 views

21:04

Are we sleepwalking into an AI nightmare? ...

BBC News

92,041 views

46:22

It's Not About Scale, It's About Abstraction

Machine Learning Street Talk

42,953 views

13:06

HUGE Magnet VS Copper Sphere - Defying Gra...

Robinson Foundry

1,825,375 views

15:36

How the UK is becoming a ‘third-world’ eco...

CaspianReport

2,265,711 views

8:52

A math GENIUS taught me how to LEARN ANYTH...

Python Programmer

652,137 views

14:59

Math News: The Bunkbed conjecture was just...

Dr. Trefor Bazett

175,596 views

16:33

Why Jeep And Dodge’s Parent Company Stella...

CNBC

952,241 views

31:22

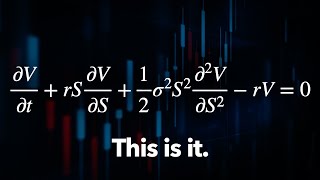

The Trillion Dollar Equation

Veritasium

8,876,782 views

9:21

‘Godfather of AI’ on AI “exceeding human i...

BBC Newsnight

266,057 views

31:59

Microservices are Technical Debt

NeetCodeIO

512,663 views

6:55

I Didn’t Believe that AI is the Future of ...

Sabine Hossenfelder

394,927 views

9:02

Linus Torvalds: Speaks on Hype and the Fut...

SavvyNik

227,976 views

24:02

The Race to Harness Quantum Computing's Mi...

Bloomberg Originals

1,985,861 views

15:44

Let's Talk About Panels

Marques Brownlee

2,945,159 views

7:16

Surprise Comeback: Dark Energy Could Be Ho...

Sabine Hossenfelder

285,189 views

24:07

AI can't cross this line and we don't know...

Welch Labs

1,058,716 views

16:09

Craziest Nature Videos of the Decade

Daily Dose Of Internet

2,292,095 views