So, we have a few objectives for you today, and you'll see that Manuel and I were fairly ambitious in developing this workshop. We're going to start by talking about emerging generative AI capabilities. I think it's valuable to think about what capabilities these tools currently have, because there are a lot of misunderstandings around that. Second, we're going to critically examine how GenAI can enhance or complicate assessment strategies in educational contexts. We're also going to analyze examples of structural assessment changes that we can use to assure learning in the presence of AI tools; reflect on the impact of GenAI on assessment validity, student learning struggle, and the purpose of academic integrity; and identify strategies for integrating generative AI into formative and summative assessment practices.

We have a lot of people in the room, and since I started doing these workshops, one of the things that has really changed is that a lot of faculty have had a chance to develop assignments and assessments that either integrate AI or mitigate it. I think it's really helpful to share some of these together. So, as a first activity, we're going to use a Padlet. I'll just drop the link into the chat here. Actually, Manuel, do you mind doing that? On the Padlet, I'd like you to think of an assessment you've developed that integrates AI, so you're using AI as a purposeful learning tool, or one where you've mitigated AI use. An example of mitigating AI use might be holding an in-person exam, so that students aren't able to use AI and you have a secure assessment space. An example of AI as a purposeful tool might be a way you've specifically integrated it into a class.

I want to spend five minutes on the Padlet. If you click the plus sign, you can create a post for your subject. Just name the assignment, and below that describe the assignment or the way you've mitigated AI. When you've done that, publish the post. So I'm going to give you five minutes on this step now, and we can watch the Padlet fill up a little. Again, I want to acknowledge that a lot of the expertise right now in how we can integrate AI into assessment is held by the faculty and instructors who are doing this every day. So let's learn from each other, and if that's okay, I may call on you based on your post to hear more.

So far we have: transforming one part of an assignment into an in-person collaborative session that helps to calibrate expectations of workflow and effort on subsequent parts. I like this idea of using part of an assignment as an in-person, collaborative learning session. This is something I have not done successfully; most of my assignments are written, so I'm really trying to merge the two. Wonderful: a story tool to create a visual story and code with Spaces, with EduSpaces. Really interesting. Holding an in-person final exam. So, lots of in-person exams, in-class writing, and de-weighting homework. Again, in-person exams seem to be the most common way of mitigating the use of AI right now. A nursing simulation: students were given prompts to have ChatGPT create a client, work through a conversation with the client, and then debrief the simulation. Lots of conversations about the biases of AI. Would the person who shared the nursing simulation mind telling us a little more about it and how the students responded? Please feel free to just unmute yourself and jump in.

>> Sure. Hi. Yeah, it was done a little over a year ago, so it's interesting to see how the AI has evolved since then. It was specifically a simulation where the students were interacting with an unhoused client with some significant medical needs, and the AI really had a bias towards certain types of clients. Even though it was supposed to generate unique clients, there were a lot of similarities that revealed what the AI thought about unhoused people. The students really liked the opportunity. Some of them were actually quite angry afterwards about the biases and the stereotypes, so it was really valuable for debriefing and learning. I don't know that the sim itself taught the students as much as their reflection on it afterwards did.

>> Wonderful. So, getting them to critically evaluate the AI output of the simulation. Wonderful.

>> Thanks for sharing that.

>> Wonderful. I'm just taking a look here at a couple more. Oral presentation exams; a research paper with some oral exams; scaffolded assignments so that students still build writing skills; AI used as a coach. I'll give everyone maybe a minute more here, and thanks for sharing all of this. It's exciting to see these assignments build up over the years; there are a lot more creative ways that we're starting to use AI in the classroom. All right. Wonderful.

While we're doing a little bit of interaction, I'd also like to get an idea of where everyone is at right now with AI. So I'm going to stop sharing and do a quick Zoom poll. We have about 120 people in the room now. Which statement best reflects your current approach to AI in assessment? Are you avoiding AI entirely in your assessments? Are you only trying to make your assessments AI-proof? Are you experimenting with integrating AI into student work? Are you redesigning assessments to include ethical AI use? Or are you not sure where to start? We'll see if we can get 100 folks to answer this. It looks like right now only about 7% of you are completely avoiding AI in your assessments, 18% are only trying to make their assessments AI-proof, 29% are experimenting with it, 20% are redesigning assessments to include ethical AI use, and 26% are not sure where to start.

If you're not sure where to start, just keep in mind this is only a single workshop, and things like Google NotebookLM and the recording will allow you to go a little deeper. If you're in the process of redesigning, it's still a pretty early stage, and I think everyone's looking for examples. So, as much as you can, please share what you're doing. This is a real opportunity to be all together in the same room, and we've made some spaces to share some of those examples. So here are the final results for you. Now that we've gotten to know each other a little and where the group is at, let's jump in.

I want to start with generative AI capabilities. In the past, when ChatGPT 3.5 came out, it was very easy to see the errors in its output. There were no citations, the output was often full of errors, and it was often very generic. This has changed quite a bit in the last two and a half to three years, but what I've been finding in workshops is that there are often misunderstandings about how much these capabilities have changed. So one of the goals of this section is, when we're thinking about assuring and securing learning, to understand what students are actually able to do with generative AI.

I want to go over a few ways that capabilities have changed. First, as anyone who has done a workshop with me knows, I really love the idea from Ethan Mollick's 2024 book Co-Intelligence that today's AI is the worst AI you'll ever use. He means worst in terms of functionality. In terms of things like adware and subscriptions, I'm sure he's totally wrong; if you've read anything by Cory Doctorow about enshittification, you'll expect AI to get a lot worse in some ways. However, its capability has been improving almost by the day.

I wanted to share a couple of examples of current capability. One is a study by Deans et al., in which the researchers covertly enrolled AI in a graduate program for a Master of Health Administration degree. They had someone use AI to complete all the coursework, all the assignments, and all the discussion posts without the professor knowing. The AI's final performance closely matched or exceeded class averages, with a final grade of 99.36 in the course, and no one in the course knew that AI was enrolled. So I really think the capabilities of these tools are such that, in many disciplines, they're able to complete many of our assignments unless we've revised them.

Another example of this change is this chart showing the doubling time of generative AI's ability to do more complex and longer tasks. What you'll see in this chart is that since GPT-2, which predates GPT-3.5, the model that caught everyone's attention, the length of tasks these models can complete has been doubling about every seven months. So we're seeing really significant improvements in these tools that we need to acknowledge as faculty.

I also want to go over a few commonly cited limitations. I find it fascinating to think about things that folks who do workshops like this have suggested to faculty, and how these have changed in terms of what AI can do. First, with ChatGPT 3.5, I think one of the misunderstandings we had was that AI wasn't good at reflection. This has completely changed; generative AI is now very good at critical reflection. In a Lead et al. study in pharmaceutical sciences, they found that when evaluated blindly, AI did better than the majority of pharmaceutical science students, and the evaluators weren't able to tell what was created by AI and what was created by students. Another aspect of reflection that I find fascinating now is AI memory. I use GPT-4o; I'm logged into it, I pay for it, and I use it extensively, so I could actually get it to write reflections based on its memory of how I use it. I could ask it, "Based on our interactions, write a reflection using a particular model," and it would be very good at doing that. It would also know reflection models like Gibbs' reflective cycle.

Second, and this is a more contentious one, some universities have adopted the stance that generative AI isn't able to complete authentic assessments. While that's true for things like performance simulations and role plays, for written output, as Tim FS found in some of his research, generative AI is very capable of completing authentic assessments.

Third, and this is probably the most common one I hear, is inaccurate or missing citations. While inaccurate citations are, for some student work, a way to detect that AI has been used, many students are able to use deep research models, or even reasoning models like o3, to generate citations, and I'm going to show you an example of that. So if you're reviewing work for AI use, or trying to develop assignments that can't be completed by AI, you really can't rely on citations to do this.

Next is the claim that AI can't handle complex math. ChatGPT 3.5 and GPT-4o didn't support complex math and often failed at simple math equations. This has also completely changed: we now have reasoning models like OpenAI's o3 and o1 that can solve complex mathematical problems.

So I'd like to do a quick demo of some of those newer tools, and then I'm going to give you a chance to play with them. I'm just going to go to my worksheet for the demo. The first thing I want to demo for you is o3. Can I get a thumbs up if you've used o3 before? I'm just going to take a look through the group. I see one thumbs up from Alex there, and Jared, so a couple of you have definitely used it. Yeah, we have a few thumbs up there, some no's, and some empty thumbnails.

o3 is what's called a reasoning model, and what reasoning models do is show their thinking as they work through a problem or a prompt. I actually do a lot of my prompting through o3 because the quality is a little better. There are limits on this: even though I pay for it, I only get a set number of queries per month. I'm just going to paste in this math problem. I won't read the problem here, but I will show you the output. You'll see that, unlike GPT-4o, there's now a thinking aspect to this, and it also shows its work. If I click on "Thought," it shows you the thinking it's doing behind the scenes. And now it's able to come up with a solution. Frankly, I don't do math well, so for those of you who are more mathematically minded, please let me know if this solution is incorrect. However, tools like o3 and o1 are much better at computation and math.

The second example I wanted to show you is Elicit. If you've used AI research tools like Elicit and Scite, they are far better than ChatGPT at citing sources. I'm just going to pop in the prompt I created here and enter it. What you'll find Elicit does, which is neat, is actually evaluate the strength of the research question you give it and give you feedback on that. So I'm going to generate a report, and you'll see that it's using 50 sources; it's going to use scholarly sources for its output. I won't be able to show you the end of this now, because it takes about 10 minutes to generate the report, but towards the end of the session I may be able to show it to you.

The third thing I wanted to demo, on a similar note, is something called deep research. Tools like Claude, ChatGPT, and Google Gemini all have a deep research feature built in. At the bottom of the tool, you'll see a little button that says "Research." If you click on it, it's going to do deep research for you. I'm not going to give you the prompt right now, but I'll show you the output. So, a little bit like a cooking show: imagine I've put the cake in the oven, and here's the output. You'll see I asked it to create a literature review about digital assessment in higher education, and it used more than 150 peer-reviewed studies for this review. If you take a look, you'll see that while it didn't use inline citations, it did cite all of these different studies, and from a quick look, it did a pretty good job of covering this area. Of these three tools, ChatGPT's deep research is going to give you the highest-quality, most in-depth research.

So there's really been a big change in the capabilities of these tools. When you're thinking about creating assessments, it's worth considering these changes, both for how you might integrate generative AI into an assessment and for how you're going to mitigate it when you don't feel it's appropriate for learning. I'll get to your question in the chat in one moment. Before I do that, I want to give you a quick activity: I'd like you to explore AI capabilities for five minutes. If you look at Activity 1 on the worksheet, I've included all of the prompts I just used. Here's the prompt for o3, here's the prompt for Elicit or Perplexity, and here's the prompt for Claude. I've also included a prompt if you just want to use ChatGPT and enter your assignment. So I'd like you to pick one or two of these. If you do a deep research query, you won't see the results until the end of the workshop, but give it a try.

These generally require a login to the tool. If you're uncomfortable doing that, or uncomfortable with these new tools, I'd like you to select an assignment you currently use, go to ChatGPT, and use this prompt here. Enter the assignment description into the prompt and see the quality of output you currently get. Okay? I'm going to give you five minutes to do that. While you're working on it, I'm going to go through any questions that have come up in the discussion. Again, please feel free to raise your hand while you're working on this, ask a question to the group, or ask one in the comments. Part of my approach to teaching and facilitating is that I don't imagine all of you are paying full attention at your computers. If you're going to do other things, please feel free to, but try the Google notebook, try things out, ask questions, and so on. So, five minutes starting now. Give those capabilities a try.

Pam asks in the comments how different AI tools compare in popularity with students: Copilot versus ChatGPT. This is a really good question, and I actually don't have the answer right now. I would assume Copilot is used a lot more by students for classwork, just because a lot of institutions have enabled it. I also think, when asking this question, it's worth thinking about what discipline the students are from. An Anthropic study recently looked at how students were using Claude, and I'll talk about this later; they found that among Claude's student users there was a large number of STEM and computer science students. So, from my understanding, Claude is used more for things like coding and STEM answers.

Wonderful. Alex mentions that regular ChatGPT, Copilot, and Gemini all successfully solved the problem I shared, with the same answer, which shows how these tools have changed with math and also shows my inability to write a really complex math problem. Karina asks whether one of the images on the slides was generated using AI, and the answer is yes. I think you're referring to the image where I was comparing things that have changed with AI. You'll notice there was a repeated line and a couple of weird spelling mistakes, so good catch on that. I imagine these will get better in a little bit of time. To speak to my own process: when I generate slides with AI, I generally put the content into ChatGPT and have it generate a single slide rather than the whole deck. Manuel just shared the EDUCAUSE report, which has more data on how students are using AI. Thanks, Manuel.

Vanessa asks which tool is best, or most used, for deep research. From my understanding, and this may have changed because it was before Claude was able to do deep research, ChatGPT deep research was the best at very detailed research, while Google Gemini at the time was good at overview research. I'm not sure where Claude fits in; deep research only came out for Claude a few weeks ago, so I haven't seen them benchmarked against each other. Nicholas asks, "How do you do deep research on ChatGPT?" I think you need to be logged in to ChatGPT, and there should be a research button at the bottom of the search bar.

All right. I'm going to move on now, but before I do, I just want to hear from the room. What did you find in your capability testing, and how do you feel about the current capabilities? Does anyone want to share? I see a couple of B-pluses, a "wow," and an "I feel blown away." And Charlene asks a great question: can we assume that all students have equivalent access to tools with different capabilities? Thanks for sharing that question; I think it's really important. One of the challenges with generative AI is that some of our students are going to have access to very different tools than other students, and some of your students are going to be much better able to use these tools than others. I think this makes for a huge challenge in terms of learning equity. Using a free version of ChatGPT or Copilot online is going to be a completely different experience than using a paid version with reasoning and deep research. One of the concerns I have is that when faculty try to catch students using AI, they're going to catch the students who are using poor, unpaid AI tools, while the students who are using paid tools, and using AI well, slip through the cracks. So I think learning equity is a really significant challenge here.

That section was a chance to think about the current capabilities of these tools, and I want to move on now to assessment design. Again, I want to start with an interactive activity, because I think we have a lot of knowledge in this room and I'd like to share it. In this case, I'd like to hear some of your concerns about generative AI and some of the opportunities it offers in assessment. Manuel, do you mind copying these two questions and pasting them into the chat? Then I'm going to share the Zoom whiteboard with you, and we'll do a whiteboard activity where we can share some of our concerns and some of the opportunities for using AI in assessment. Just give me a moment to pop open the whiteboard. If you haven't used the Zoom whiteboard before, click on the sticky note, and you'll see it gives you different colors. Select blue for concerns and yellow for opportunities. So I'm going to select blue now and put my sticky note on there: a concern is academic integrity. All right, I'll give you folks, say, four minutes to write using the Post-it notes. If you find yourself using the drawing tools, they're a little clunky, so you may want to switch to the Post-it notes.

I love this concern that students won't actually learn anything. Writing skills won't be developed. There won't be understanding. The ability to teach critical thinking processes. I'm just looking at some of the opportunities. Oh, I lost that one. One moment. Pressure to redesign writing prompts. Concerned about metacognition: will students know what they know and what they don't? "I don't see how students will be able to demonstrate a lot of different types of learning." I'm seeing a lot more blues than yellows here, which I think is a little bit what we were expecting. When it comes to assessment, for many of us this is a very fraught and challenging time for thinking about student learning. Again, there's the idea that writing is thinking; we all know this. If students no longer write, what does this mean? I think this is a really interesting one, and I want to speak to this Post-it a little.

The idea is that we write to learn; writing is part of our learning process, and I think for many of us writing is exactly that. But I want to give a slightly different example. As you'll know if you've been to any workshops I've led, I have really poor written output, and I've had challenges with that since I was a kid. So for me, writing isn't part of learning. Speaking and preparing a presentation are part of my learning, but writing is not part of my learning process. So absolutely, I think writing is a core part of our process in higher education, but perhaps not for all of our students.

Lots of emphasis on critical thinking. Students won't take their learning seriously: great point. I'm just going to read one of the opportunities: an opportunity to spend more time discussing and reflecting. Another opportunity: it may be better for dyslexic students. We're going to talk in a moment about universal design for learning and inclusive education, and how AI can help with that. I'll give everyone a couple more minutes here, and I'll share this whiteboard with you afterwards so you can refer to it if you're interested. Someone put "work smarter, not harder," which is really interesting when we think about AI and potential output. Another opportunity: individualized or one-on-one feedback. I'm going to stop sharing now, so please finish your Post-it note if you're still writing. Thanks for sharing some of those concerns. Again, I acknowledge there's a lot of emphasis on the challenges here, and in this presentation we are going to talk a lot about challenges.

So let me walk through a few of the opportunities and challenges when we think about integrating AI into assessment. One of the opportunities I spoke about just now is new opportunities for accessible learning. I mentioned my own situation as someone who has had issues with written output all my life. AI has made a massive difference in my being able to write something and not be embarrassed by it, and not be worried that someone's going to find errors they think are because I'm sloppy or not putting enough effort in, when they're really related to my difficulties with writing. So I would expect AI is making a big difference for many students, including students with dyslexia, students with ADHD, and students who struggle with written output, by making assignments more accessible.

Second, students are already using AI in their learning. When we think about opportunities for integrating AI into assessment, I think a big driver is that students are using AI more and more in their day-to-day learning, and I would expect that many of you are using AI more in your day-to-day work, too. A study about four months ago found that 92% of students in the UK were using AI. In a study of UBC students, the number is lower: I think 28% of students were not using AI. So non-use is much higher here, but we still have a lot of students using it. And as AI is integrated into all of our tools, like Outlook, Google Docs, and Gmail, students are going to be using it without even knowing it.

Next, there's a lot of angst right now among the students I've spoken to about AI. They're really unsure about what future skills look like in their discipline or profession, and how they can develop those skills and competencies. So I think helping students with that challenge, and thinking about disciplinary change, professional change, and the skills they need, is an important reason for integrating AI into our assessments.

Next, AI has the potential to personalize learning.

>> We can't see your screen anymore. We see you.

>> Oh, thanks, Manuel. I'm not sharing my screen. There we are. Is that better? I'm so happy you're there to tell me that. I think one of the worst feelings is when you think you're sharing and you're not. So, the ability to personalize learning: generative AI can help us create scalable tutors, prompts, and different resources to help personalize day-to-day learning.

And finally, a couple of you commented on the Post-it notes that students are shortcutting their learning. Not necessarily. I think we have an opportunity now to help students use AI in a way that helps their learning rather than takes away from it, and as educators, I feel it's our responsibility to help them use new tools in ways that promote learning. But along with these opportunities, there are significant challenges with AI and assessment.

I want to start by reading this quote from Simon Bates, which I really like: "Learning is not frictionless. It requires struggle, persistence, iteration, and deep focus. The risk of a too-hasty, full-scale AI adoption in universities is that it offers students a way around that struggle, replacing the hard cognitive labor of learning with quick, polished outputs that do little to build real understanding." Another way to say this is that learning is tears; learning is challenging. How do we help our students use these tools in a way that doesn't shortcut their learning? And what does it mean if students are shortcutting their learning in different disciplines?

Second, there's a recent article from David Wiley about questions we need to ask, which I'll make sure to add to the resources I've shared with you. I think one of the challenges of AI that a lot of you touched on in the Post-it note activity was measuring learning. Wiley says that the primary problem AI causes for educators is that its existence changes assessment evidence that was previously persuasive into evidence that is no longer persuasive. We used to be able to get an essay from students and, with the exception of contract cheating and the like, have a good idea that the student probably wrote it. Now we have challenges with this. So how do we think about what persuasive assessment evidence looks like?

This brings up a larger question: what do we want students to learn with AI, and what do we need students to learn without AI? Here's an example. I was driving around the other day using Siri. I use Siri and Apple Maps to navigate, and I would be completely lost without them. Now, I grew up with maps, so I probably have some mapping skills around spatial awareness, coordinates, and so on. But what about my son? What about younger people growing up who've never used a map? What skills are they losing by not using one? If we were teaching someone to drive, would we need to teach them how to use a map to drive, or should we be teaching them how to drive with a GPS? I think this is a really good analogy for some of the thinking faculty are doing right now as they redesign their assessments: which skills, like map reading, will students need to learn without AI, and which skills, like GPS navigation, will they need to learn with AI assistance for the workforce, disciplinary practice, and so on?

This time I want to do a quick chat activity, and we'll spend about four minutes on it. You'll notice I use the term "four minutes." I have an 8-year-old daughter, and four minutes is usually anywhere from 2 to 10 minutes. So we're going to take four minutes in the chat, and I'd like you to write down one skill or concept that students in your discipline need to learn without AI. What's that one skill students need to learn without AI? I'm going to stop sharing now, and I'll get Manuel to paste that question in the chat. I'd like you to turn your attention to the chat and take a look at some of the skills from other folks.

So: people skills, communicating, critical thinking (which comes up a lot), close reading of a passage, therapeutic communication, musical performance, basic computational skills, collaboration. Collaboration and communication are interesting ones, because while students do need to learn them without AI, I think we could also think about the opposite: collaboration can now be done with AI in the middle of it, and while communicating needs to be learned without AI, we probably also need to learn to use AI to communicate. Stats, critical thinking, communication, presentation skills, identification of species, lab skills, understanding correlation between two variables.

Clear synthesis of complex food systems. So, lots resilience. What a what a great example. Thank you. Perspective taking where knowledge comes from. These are great. I'll just give everyone another minute to finish. And while you're doing that, try to read over some of the other comments within the chat. relational skills, experimental design in internalization. Thanks, Alex. So, the idea of internalizing our learning for communication and complex ideas, visual analysis. So, we all have areas that we need students to learn without AI. And in addition, we know that struggle is part of learning and that AI

can shortcut struggle. So I think this brings up a larger question that we're going to explore for the next section is on one hand we need to be confident that students can do the work themselves. on the other in a world where chat GPT exists we have to ask what counts as work that's the challenge so this quote comes from a video uh by Kath Ellis I put a link to the video Kath Ellis is an Australian educator at University of Sydney who focuses on academic integrity I put a link to the video in the

worksheet that I've shared. She gives a really good short explanation of the challenge there. So when we think about assurance of learning: when we want students to learn the skills they need to learn without AI, how can we secure our assessments so that they do that work themselves? And when we want them to learn new skills and leverage AI, how do we use AI as a learning tool? That's what we're going to look at for the remainder of the workshop. And we're going

to start with secure assessment. How do we assure learning? I want to talk about two things: first, how we can assure learning through communication, and second, how we can assure learning through structure. This graph, a histogram, comes from a survey of UBC students done in January. They were asked, "In your courses, do you know if you're allowed to use AI?" You'll see 42% said "Yes, in a few of my courses," and only 15% said "In all of my

courses." So one challenge here is that students are very unsure about when they can and can't use AI, and that has a couple of associated challenges. First, when faculty want students to use AI (I've spoken to a couple of colleagues about this), they have found students very uncomfortable touching it: students aren't sure how they can use it, or whether it's okay. Second, if students don't know whether they can use it... I remember one student from an early panel saying, "If we

don't know, there's a good chance I'm just going to use it." Academic integrity be damned; they're just going to go ahead and use it. So clear communication is important, and one way to do this is simply to set expectations for AI use in your course: the purpose for using or not using AI, the assessments where students can and can't use AI, and how students are going to disclose and cite their AI use. This can be shared

in the syllabus or as part of your class. Different universities are using different approaches to communicating about AI use, and some of these approaches ask students to state whether they used AI or not. For example, King's College London had students submit declarations with an assignment: they needed to declare either their AI use or that they didn't use AI. Interestingly, they found that only 70% of students reported that they were appropriately disclosing whether they were using it; students were still concerned about disclosure. The University of Melbourne and Monash are using declarative

requirements. A lot of schools are using the AI Assessment Scale by Perkins. I'll quickly throw that up here; I didn't have it in my slides. Actually, could I get a thumbs up if you've seen the AI Assessment Scale before, or used it? Okay, I see a couple there. The AI Assessment Scale, which I've linked to in the document, describes how and why students can use AI for different assignments. It's a scale that starts at No AI, an assignment

where students can't use any AI; then AI Planning, where students might be able to use AI for brainstorming and planning; then AI Collaboration, where students may use AI to draft and then evaluate its output, for example generating an essay, evaluating what's in it, and rewriting it based on that evaluation; then Full AI, which I'm seeing more and more, where students can use AI throughout a class or an assignment; and AI Exploration, where they're using AI in new ways that we weren't able to do

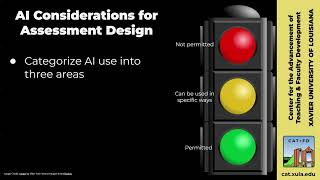

before. I'm going to talk about challenges with this scale in a couple of minutes when we think about structure. But this scale can be helpful in two ways: first, for getting ideas on how you can integrate AI into your assessments, and second, as a way to transparently discuss AI use with your students for different assignments. So communication is one way that we can assure learning. The second way is through structure. I'm sharing this quote from Corbin, Dawson, and Liu. This is one of my favorite papers in the last year

or two about assessment. Again, I've linked to it in the document. They're very critical of things like the AI Assessment Scale and traffic-light systems. What they say is that communication and transparent conversations are important, but they can provide a false sense of security and pose a risk to assessment validity. We can tell a student that they can only use AI for brainstorming, but do we know whether they only used AI for brainstorming? This also brings up issues around learner equity. The other challenge that Corbin, Dawson, and Liu mention is that if we're

using a scale or traffic lights, it can be very hard to draw the lines between brainstorming and drafting, or between brainstorming and evaluating. A colleague of mine used this example: imagine we gave students a calculator for a math exam and said they can only use the calculator for multiplication, not addition. That would be really hard to enforce. So the AI Assessment Scale is helpful, but I don't think it's sufficient to assure learning, and that brings us to structural assessment changes. This is from the same Corbin, Dawson, and Liu article:

"Structural assessment changes are modifications that directly alter the nature, format, or mechanics of how a task must be completed. These changes reshape the underlying framework of the task, constraining or opening the student's approach in ways that are built into the assessment itself." So rather than relying only on communication, Corbin, Dawson, and Liu suggest structuring your assessments in a way that mitigates AI when it's appropriate to mitigate it. One approach that has been quite successful in this area is the University of Sydney's two-lane approach. The University of Sydney and

Danny Liu started this approach very early, I think in 2023, when AI was first emerging in higher education. The way the two-lane approach works is that faculty need to decide whether each assessment is in lane one or lane two. If an assessment is in lane one, it's deemed a secure assessment, which basically means it needs to be in person. Liu has suggested that without it being in person, they can't really ensure learner equity, or ensure that some learners

won't use AI for it. What they've done at Sydney, and this has been a little controversial, is say that if an assessment isn't in lane one, you need to allow students to use AI in some form and scaffold that AI use. So lane two assessments are open assessments: you're using generative AI where relevant and scaffolding its use within assignments. They had their faculty choose between these two lanes for each of their assessments. But I want to walk through a couple of other ways that we can structure our assessments to mitigate AI use

or to assure learning when we need to. The first is through authenticated checkpoints and iterative engagement. This means building in-person checkpoints into an assessment where students show their ideas and how those ideas evolved over time. This could be students writing an essay and then being graded on an in-class discussion about that essay topic. Or, in the example I shared, in a research methods course, students submit a research proposal, receive peer feedback on it, revise it, and

then defend their changes in a short recorded presentation. So you're taking a single assessment, breaking it down, and including components where AI usually can't be used very easily, for example a recorded presentation or a discussion. This could involve live discussions, peer feedback, or reflective submissions. The second is making the reasoning process visible. A good way to think of this is mathematics, which I think has been dealing with a lot of these questions already and often has students show their work. So by getting students to break down

their work and show it, we're making reasoning more visible. I'm seeing growth in this from faculty: for example, if students are writing an essay, they're asked not only to upload the essay into Canvas or whatever learning management system they're using, but also to include their notes and perhaps the annotations they used to create it, uploading everything as a package rather than focusing only on the product. So we're starting to move away from the idea of polished final answers and toward thinking about the reasoning process and how students

are showing it. This could be reflection activities where they justify their thinking, reasoning activities, and activities where students show their work. I have an example from computer science here: students develop a coding project in stages, submitting code snapshots with explanations of the key design decisions and trade-offs made at each step. This isn't perfect at mitigating AI use, but it does take us some of the way there. And finally, designing assessments that focus on different modalities. As someone who has written-output issues, I'm very excited about

this: the re-emergence of oral examinations. So, thinking about visual, oral, and performative formats, interactive discussions where students are graded on their participation, and structured multimodal assessments, which deepen engagement and can provide evidence of student learning. An example: students analyze a clinical psychology case in stages, creating a visual concept map of their interpretation, a short written report summarizing their diagnosis and rationale, and a recorded oral explanation where they walk through their reasoning and theoretical choices. You'll see that this combines a number of different structural approaches that we

can use to mitigate AI use and assure learning when necessary. So I have an activity for you now on structuring assessments with generative AI, and I'll give you five minutes to do it. I'm just going to go to the activity in the worksheet. Under Activity 2, I've created a prompt based on the three structural areas I've spoken about. What I'd like you to do is select a current assignment that perhaps you haven't restructured

to think about AI. If you're not currently teaching, think about a generic assignment you know of. Think for a moment: how could you revise its structure to make the learning more visible and verifiable? Then I want you to take a minute or two on your own just brainstorming about that assignment: how might you integrate structure to mitigate AI use? Once you've done that, I'd like you to take this prompt here: "You are an expert assessment designer. Given the assignment..." I'm just going to remove this part here. It's

a little confusing. Actually, I'll leave that. The prompt asks the tool to suggest ways to strengthen the assignment in three structural areas: authenticated checkpoints, activities that expose reasoning, and multimodal evidence. Then paste a short description of your assignment below and see what sort of ideas ChatGPT or Claude can give you. So, to break down that activity: you're going to start by brainstorming ways that you could structure a current assignment to assure learning or make it a secure assessment. After you've brainstormed, I want you to use ChatGPT as a brainstorming

tool, using this prompt with the same assignment. When you've done that, compare the two brainstorms, see if there's some alignment, and see whether generative AI has come up with new ideas for you. I'm going to stop sharing and give you five minutes for that activity. While you're doing it, I'll look through the comments again. So Rhysa Sergeant says, "This will require a lot more grading resources. Is UBC thinking about this?" I'm not sure this is being discussed right now; my main focus is professional development. I can look into this if it would be

helpful. Please email me and I can talk to some folks I know who are more connected to policy. I would say a lot of these approaches are more resource-intensive, and that becomes really challenging when we think about our limited time and the scale of the teaching we're often doing. A couple of folks have mentioned grading workload. And Hannah mentions it might be better to spend more time grading more secure assessments than trying to detect generative AI use in all assessments. And I

haven't mentioned this yet in the presentation, but maybe I'll mention it now: we know that AI detectors, for example Turnitin's AI detector, have significant false positives and false negatives. In the literature, AI detectors have anywhere between 4% and 50% false positives. I know that's a confusing statistic; there's a lot of variation in the research right now. We also know that humans are generally not good at detecting AI-generated work. So the idea of being a police officer or a detective and trying to figure out whether

something is written by AI or not is quite challenging, and we may or may not want to spend our time doing that. And Karina says, "I wonder if there are any lessons we could learn from folks in mathematics, as I think back to my undergraduate degree and being introduced to Wolfram Alpha." Absolutely. This is an invitation for anyone in mathematics who's worked in these spaces: please reach out to me. It'd be great to do a presentation around this, and I could help with the slides if you wanted

to. I think we could learn a lot from math about how they've dealt with this before. Vincent says, "At UBC, is it true that we can allow students to use AI but cannot require it to be used in coursework? Just want to check." My understanding, Vincent, is that we can ask students to use an AI tool as long as there's a PIA (privacy impact assessment) for it. That would be ChatGPT without a login, and Copilot, as long as students don't put any personal information into these tools. That said, I do know that there's a

significant number of students I've worked with who will not use AI. They have concerns about sustainability and about intellectual property. So even in those cases, we might want to think about those students and alternate ways they could complete an assessment without AI, given the ethical challenges around it. And as Eric mentions, these policies are often also set at the course or department level at UBC. Thank you. And Bri was kind enough to share a link to the PIAs completed for Copilot and ChatGPT.

And I would expect there will be more of these in the next little while. I don't have an answer to your question right away, but please feel free to email me and I can look into it if that's helpful. Wonderful. I'm going to give everyone about two more minutes, and then I'll ask you to share how you might make your assessments more secure, as well as any approaches to securing assessments I haven't touched on so far that you want to share with the group. So, I'll

give you two minutes and then I'll get a couple of folks to share. All right, let's switch it up a little bit this time. I'm wondering if a couple of folks would mind either raising your virtual hand or just unmuting yourself and sharing either the results of your structural brainstorm or ways you're structuring assessments to secure them when dealing with generative AI. Anyone want to jump on the mic? Yeah, Tamara, please go ahead. >> Hello. Thank you. I thought it was really interesting. I used ChatGPT, and I teach a public

relations course. >> Yeah. >> I entered your prompt and an assignment that I think students rely on AI for. I allow gen AI, but I don't think they think critically enough about it. For the authenticated checkpoints, to verify evolving work, it suggested an interesting thing: seeking feedback and sharing a screenshot of a peer feedback conversation, which I'd never thought about. So it's not sharing, you know, a photo of us in a coffee chat together, but >> I thought that was really interesting. It got me thinking. I just thought

it was fun, and >> my students are communicating; they're chatting together on social media, so screenshots are an easy one. I don't know, interesting. >> Wonderful. Thanks for sharing that. It's interesting: when I first started at UBC 15 years ago, my position was in ePortfolios, which are collections of artifacts that show evidence of meeting learning outcomes. In a way, our thinking about assessment has come full circle. A screenshot of peer assessment, or even an annotated screenshot of peer assessment, would be a great artifact. Thanks, Tamara. Yeah, Jonathan. >> Hey, thank

you. Apologies, my camera's not working great today, so I'm going to keep it off. I teach writing-centered courses, and one of the things I've been thinking through is having my students assess their own writing, providing high-level assessments of the decisions they're making around their writing practices. >> Yeah. >> And I used ChatGPT for this assignment, and one of the other things it suggested was having the students create logic

maps. So if they're writing, you know, a research proposal or something, they'd explain how their research question connects to the other elements of their proposal, and do that as an in-class assignment. >> Wonderful. So they'll sit in class, make these logic maps, and then share them as an assignment? >> That was my understanding of it, yes. >> Wonderful. Thanks for sharing those examples. It's so exciting to hear (again, my background is in education) that the way we're changing these assignments really aligns with effective approaches

to assessment: thinking about process, iteration, and authenticity. So while, as many of you have noted in the comments, this is a significant resource challenge for many of us, we're also thinking about assessments in ways that align with good assessment practice. Maybe we can hear from one other person before we move on. All right, just for time, why don't we move on. What we've looked at so far is structuring assessments and communicating about AI, for when we want to assure learning and make sure that students aren't shortcutting their

learning. What I'd like to talk about now is the other side of the equation: when we want to start integrating AI into our assessments themselves. What I'm not going to talk about today is using AI to provide feedback on or to evaluate assessments. There are a couple of projects at UBC that do this, but I think right now it's a really challenging space because of AI bias, hallucinations, and privacy, so I'm not going to address it today. What I do want to think about is what AI looks like when we might

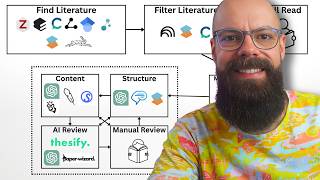

integrate it into our assessments. I wanted to share this diagram developed by Anthropic; I've shared a link to the study in the worksheet. Anthropic did a fascinating study this year where they looked at a million anonymized student conversations with their AI, Claude. They were able to do this through students' .edu accounts in the US, and they wanted to look at the way students were querying AI. What they found was an almost equal split between students using AI in a direct way and students

using it in a collaborative way. They came up with this typology: direct use would be something like "solve and explain differentiation problems in calculus"; collaborative use might be "teach programming fundamentals with Python examples." I think it's easy to assume that if students use AI, they're not learning and are shortcutting it. However, what this study shows, and what I'm seeing anecdotally with students and in my own AI use, is that there are ways of using AI that help learning rather than shortcutting or hindering it. For example, I do a lot of my writing with AI. I would say

that it takes me longer to write with AI than to write manually. So while it may not work on the same type of skills, when I write with AI I'm still writing to learn, and I'm still going through a rigorous process that does involve struggle. So what does AI look like when it's integrated into assessments? I think one of the lowest-hanging fruits for integrating AI into assessment is not our summative assessments, or assessments of learning, but our formative

assessments. As shown in that Padlet activity, we're seeing AI used for simulations, such as virtual patients and role plays; for personalized feedback as part of a writing process; for scalable tutoring; as part of a peer-review process; and as a way for students to do practice questions and self-testing. I want to start by talking about AI and peer review. This is the PAIRR framework. PAIRR was developed at UC Davis, and it's a way of integrating AI into the peer-review process. They have a great website on this, and a couple

of useful research articles as well. The process works like this: students discuss and reflect on short readings about AI and language equity. Once they've written their draft, it's peer-reviewed. After the peer review, they prompt the AI to review the same draft, with instructor guidance on how to do this privately. That could mean removing their name from it, or using a local model if they really don't want to use an online tool. Then they critically reflect on and assess both types of feedback, considering their

goals and audience, and then they revise. The folks who developed PAIRR were very intentional about having students do the peer review first and the AI review second. What they found in their research was that the peer review and the AI review were actually quite similar: students found a lot of overlap between the points raised in both. Here is a diagram showing the PAIRR approach to integrating AI into the peer-review process. So this is one way that we can

integrate generative AI into formative assessment while also giving students more opportunities to look at feedback and to think critically about their work. The second example I'm sharing here is a tool developed at the University of Sydney, where students used AI to get feedback on a particular application. This was a bot they developed using ChatGPT that students used to get feedback and then improve their writing. We don't need to develop a specific

bot to do this: we can give students prompts like this one that they can use with generative AI to get feedback on their writing before they hand it in. Next is the idea of scalable tutoring. We know from work like Bloom's 2 sigma problem that tutoring is an effective way of teaching: Bloom found that students tutored one-on-one performed about two standard deviations better than students in conventional classes. We now have the opportunity with AI to integrate scalable tutoring into our classes so that students can do formative self-assessment throughout a course, throughout a program, or for a

particular assignment. This is an example of a bot developed by Dr. Ramon Lawrence at UBC Okanagan, where he has students use a bot to better understand Python when they're programming. But again, we don't need a bot to do this. This is an example of a tutor prompt that students can enter into ChatGPT, Copilot, or Claude to turn the tool into a tutor they can go back and forth with. What was interesting here is that when I was developing this prompt, I actually used

educational research on tutoring so that the prompt reflects good tutoring practice. You'll see it breaks material into micro-concepts, and if something is partially wrong, it gives hints. So with just a prompt, we can develop tutoring tools that help our students. Another way, and probably the most common one I'm seeing, that faculty integrate generative AI into their assignments and assessments is having students evaluate AI outputs: students generate an AI output, or look at one, and then check it for accuracy, bias, coherence, and relevance. There are a couple of different ways

they might look at an AI output. They might analyze it for accuracy: what are the errors in the output? For bias: what bias is present in the output? For specificity: how specific or general is the output? Output from generative AI can still often be fairly generic. And for sources: what resources and references is the AI drawing its output from? That means chasing down those references and citations. We can scaffold these sorts of activities using things like the SIFT method. The SIFT method, developed by Mike Caulfield, has students look at an

AI output, stop and reflect on it, investigate its source, find better coverage, and trace all of the claims it makes back to their original context. By scaffolding this process, rather than just asking students to evaluate an output, we help students through it. That matters because, as these tools improve, evaluating AI outputs becomes harder and harder. An example at UBC is Dr. Neil Leverage, who teaches forestry communications and has his students develop their own forestry communication assignment. They then use the same assignment prompt to generate equivalent content using ChatGPT, and then

they compare the two, discussing accuracy, specificity, and limitations. Then, as their main assignment, they write a critical reflection on the differences, covering communicating with and without AI. We also have a number of courses and assignments that are using full AI. Given the challenges I mentioned, when I talked about structure, around differentiating between types of AI use, I think simply allowing students to use AI for an assignment, while scaffolding their process and making some suggestions, is an effective approach. A couple of ways I've seen this done: in architecture

at BCIT, students used AI to generate speculative architectural renderings and then used those as part of their projects. In a science communication course at UBC, students were allowed to use AI to help them communicate a scientific concept to the general public. The professor who did this said she'd seen some of the most creative public communication she's ever seen: students created podcasts, children's books, and more. Another example is Weaver Shaw at Vantage College at UBC, who, while teaching Envirro 2011, had students do an open-book exam that allowed them

to use AI as well as other resources. She did some interesting research on this, finding that using AI helps students think authentically about real-world problems, teaches responsible use of AI, and keeps the focus on application as well as on autonomy and time management. She found that students responded well to this open-book AI exam, and because of the nature of her course, she was able to evaluate it validly as long as she had scaffolded the use of AI

within her course. That brings me to the end, and it looks like it's 11:32, so I apologize for going two minutes over. I got excited there at the end. I want to thank everyone for joining us today.