Inside Anthropic's Race to Build a Smarter Claude and Human-Level AI | WSJ

WSJ News

At WSJ Journal House Davos, Anthropic CEO Dario Amodei outlines Claude’s next chapter—from web brows...

Video Transcript:

Well, I want to start with a thank you, because I built a Davos assistant using Claude, and I mean, really, my bosses should thank you. It doesn't get jet lagged. We don't have to pay for, you know, any lodging, um, and it's been so helpful to me.

It's got my calendar, it's got my notes, it's got all of these things, um, but there are some shortcomings, and I wanted to go through some product questions. I wanted to first get a sense of people in the crowd: how many people here use Claude? Any power users? OK, perfect. Then this is gonna be a great conversation for everyone. Um, so I wanted to go through a list of the stuff that I wanted, and this is gonna be a fun game.

You can decide if you want to tell me exact timing; you can tell me if it's coming or not coming. So here we go: access to the web. Access to the web. So yeah, that is an area that we're working on and it is going to come, uh, relatively soon. Um, I think one thing to understand about Anthropic is, you know, the majority of our business is enterprise focused, and so often enterprise-focused things get prioritized first.

Web is more on the consumer side of things, but we've been working on it for a while. We have some ideas that we think are different from what other model providers have done, and so, you know, I'm not going to give an exact date, but that's coming very soon.

We understand the importance of it for consumers and specifically for power users on the consumer side and you know, so we are in fact prioritizing it. Love it. OK.

Two is a voice mode, a voice mode so I can talk to it and it can talk back to me. I think that will come eventually. I mean, we currently have, you know, Claude can transcribe your voice and it can also read things out, but the kind of two-way audio mode, that is something we are going to do at some point.

But again, there's less usage of that on the kind of enterprise side and among some of the power users. So that will happen eventually. Photo, let's say photo generation. Photo generation.

Yeah, so I've often seen generation of images or video as somewhat separate from a lot of the rest of the stuff in generative AI. If you think about what humans do, humans ingest, um, you know, audio, photos, video, but they can't actually make photos.

I mean, you have painters, but they can't actually make photos that easily. I've often seen this as somewhat different, and I think on the safety and security side, there are a number of unique issues associated with image generation and video generation that are not associated with text. Also, I think there's not that much enterprise use case for these. So this is generally an area we don't plan to prioritize. If it turns out to be important on the consumer side, we may simply contract with one of the companies that specializes in working on this.

That makes sense. So I also asked this on Twitter or X or whatever it's called these days, and I got 200 responses. I got 200 responses to everything, but the majority of them are asking for higher rate limits.

Yes. So we are working very hard on that. What has happened is that the surge in demand we've seen over the last year, and particularly in the last 3 months, has overwhelmed our ability to provide the needed compute.

If you want to buy compute in any significant quantity, there's a lead time for doing so. Our revenue grew by roughly 10x in the last year, from, you know, I won't give exact numbers, but from on the order of $100 million to on the order of $1 billion, and it's not slowing down.

And so we're bringing on efficiency improvements as fast as we can. We're also, as we announced with Amazon at re:Invent, going to have a cluster of several hundred thousand Trainium 2 chips. I would not be surprised if in 2026 we have more than a million of some kind of chip.

So we're working as fast as we can to bring those chips online and to make inference on them as efficient as possible, but it just takes time. We've seen this enormous surge in demand and we're working as fast as we can to, like, provide for all that demand. And, like, the experience will get better even for those that are in the paying group now? Yes, yes, yes.

All right, I'm going to hold you to that one. Um, and lastly, like a memory feature, a way for Claude to remember things not only in a project but across. Yeah, that's, that's really important.

That's, I think, a part of our broader vision of what we call virtual collaborators, and, you know, I think an important part of that is, when you talk to a coworker, that coworker needs to remember conversations that you've had before. So that's very important, and that is coming soon. And you mentioned before that you really are focused on the enterprise, but as a consumer, and I mean, we're all consumers even as we're working in our companies, the character of Claude is just so compelling.

It's one of the things that makes it so useful and makes it so that I find myself going back to Claude versus another, uh, LLM or product. How are you thinking about that, though? I mean, you've designed this character, you've designed a character that works in enterprise.

What about the consumer? Yeah, so actually I think Claude's character is important on both sides, right, because it's obviously very important to consumers interacting. I think it's also important on the enterprise side because, uh, you know, we serve some companies like Intercom and many others that do customer service.

And so the model then gets used for customer service and, you know, the mode of interaction is important there as well. Even in applications like coding, the character is really important.

There was a study from Stanford Medical School just last week where they compared Claude and other models on, you know, both the accuracy of the models for things like radiology image analysis, but also how much the doctors adopted what the models suggested. And what they found is that the doctors actually listened to Claude; they adopted its recommendations much more, and I think that has something to do with the way they're interacting with it.

And so I think on both the enterprise side and the consumer side, we've put a lot of effort into making sure that interacting with Claude is a good experience and an authentic experience. And I think one reason we've put so much effort into this is we see it as having a larger significance in the world where, you know, individual consumers, especially for productivity, are interacting with models, you know, for hours a day. They're acting like their assistants, or at work, again, you have these as your, like, very smart assistants who can, you know, probably solve math problems much better than you can.

The level of intertwinement there, I think we really need to get it right. That doesn't just mean being engaging and friendly. It means the model needs to interact with you in a way that, like, after months and years of interacting with the model, after it becomes part of your workflow, you are actually better off as a person. You become more productive. You learn things. Of course the model gets work done for you, helps you do work, but in the long run that relationship has to be productive.

I think that didn't go well for things like, for example, social media, right? Like, you know, the biggest problem I think with social media is that it's really engaging, it gives you that quick dopamine hit. But I think we all suspect that over the long term it's doing something unhealthy to people, and I've really taken that lesson to heart and I want to make sure AI is not like that, and Claude's character is one of, I think, the most important pieces of that. Absolutely. And you've mentioned models a little bit, and this was also a big request on my X, and, talking about social media, I won't lean on them too much, but as we're seeing in the room, you've got a huge group of power users, and so lots of people are wondering about your next models. OpenAI has obviously gone with these new reasoning models, o1 and o3. What are your plans there?

Yeah, so, you know, we are, I think, in the not too distant future going to release some very good models. I won't specifically say names. To say a little about the reasoning models, our perspective actually is a little different, which is that, you know, there's been this whole thing of, like, reasoning models and test-time compute, as if there's the models and the reasoning models, and there's somehow a totally different way of doing things. That's not our perspective. We see it more as a continuous spectrum, that there's this ability for models to think, to reflect on their own thinking, and at the end to produce a result.

If you use Sonnet 3.5 already, sometimes it does that to some extent, but I think the change that we're going to see is a larger-scale use of reinforcement learning, and when you train the model with reinforcement learning, it starts to think and reflect more.

And so it's not like reasoning or test-time compute, the various things that this is called, is a totally new method. It's more like an emergent property, a consequence of training the model more in an outcome-based way at a larger scale. And so I think what that's going to lead to, and we still have to see how it works, how it pans out, is something that more continuously interpolates between them, that more fluidly combines reasoning with all the other things that models do.

I think that's what's going to be the most effective thing. As you've said, we've often focused on, you know, making sure using the model is a smooth experience, that people can get the full thing out of it. And I think you may see with reasoning models we will have a similar spin and may do something that's different from what others are doing with reasoning models.

I want to just back up for a second because you just mentioned your, I should probably even, so you won't give me a date. I will not give you any dates on anything in this interview or any other that I'm doing at this event. OK, no dates specifically, but we heard "in the not so distant future," I think was the quote.

So in your mind, is that like 6 months, is that like 3 months? So between 3 and 6 months. If we zoom out, I think there's a lot of talk about how fast this stuff is progressing, and we're hearing, you know, just this week, some reports that OpenAI's got a PhD-level agent.

Is this stuff really moving as fast? Uh, yeah, so, uh, you know, if I were to describe my own kind of journey on how fast this stuff is moving, my own kind of, like, I don't know, almost epistemic and emotional journey.

Uh, if we go back all the way to 2018, 2017, a couple of years after I was first in this field, my perspective then, you know, I was one of the first to document, along with my co-founders at Anthropic, the scaling laws, which say that you pour more compute into these models and they get better at everything, and really everything. My view from about then to 6 months ago was: I suspect, based on these trends, that we are going to get to models that are better than almost all humans at almost everything. Um, you know, I guessed that it would happen sometime in the 2020s, probably mid-2020s, but the way I always said it was, I don't know, I'm not sure. Like, we could be wrong, and I think that's the right attitude to have, uh, you know, particularly when you're the CEO of one of these companies. Like, if you just say it's definitely gonna happen, it's gonna be great, you just sound like a hype man, right?

I think until about 3 to 6 months ago I had substantial uncertainty about it. I still do now, but that uncertainty is greatly reduced. I think that over the next 2 or 3 years, I am relatively confident that we are indeed going to see models that show up in the workplace, that consumers use, that are, yes, assistants to humans but gradually get better than us at almost everything, and the positive consequences are going to be great. The negative consequences, you know, we also will have to watch out for. I think progress really is as fast as people think it is.

One thing that I will criticize is, I actually think it's very important, now that fast progress is relatively likely, to appreciate it with the proper gravity and to talk seriously about it. Um, some of the other companies, I won't name any names, you know, there's all these weird Twitter rumors, like, employees have this kind of sly, winking, um, nod to, like, "oh, there's these amazing things we're doing here." Um, I actually think that's dangerous, because someone on the outside looking at it is like, "oh man, that's just hype."

That kind of communication gives the impression that this stuff is not serious, and I think that's really dangerous to do when it actually is serious. Like, I think the AI industry as a whole actually has an obligation to point to the seriousness of the moment that we're in. If we're really saying there are incredible positive things that are possible, and inevitably with any change this large there are risks, we have an obligation to communicate seriously about it and to say what we actually think. And I want to get to what the risks are and what you want some of the rules and regulations to be.

But before we get to that, I want to back up to what you said about co-workers, and with all the talk here, they should just rename Davos "Agent Palooza." It's just, everything is agents. Everyone's talking about how they've got agents, they've got agents coming. You call this virtual collaborators, you have a different term for that. Um, how are you progressing on agents or virtual collaborators? We saw some signs of that with computer use at the end of last year.

Give us a little roadmap, and specifically, like, I know you don't want to talk about, you know, dates, but what are we getting this year? What are we really, realistically going to see this year? And your company is at the head of a lot of this, so we can kind of get rid of a lot of the... First of all, I should just disambiguate a little.

I think agents, there are these terms that come up, you know, with a regular frequency in our field that don't really mean anything. Like, I think agents is one, AGI is one, ASI is one, reasoning is one. They sound like they mean something if you're outside, but they have no precise technical meaning.

So "agents" is used for anything from, you know, Clippy plus plus to, uh, you know, "I pressed the button on this AI system and it, like, writes a complete app for me and founds a company" or something. I think what we have in mind is somewhat closer to the second one.

So you know our version of this is virtual collaborators and so you mentioned computer use. Computer use is maybe an early instantiation of that. It's one of the ingredients, but I think the thing we have in mind is, uh, you know, and this could be, you know, an assistant in your workplace, an assistant that that you use personally.

But there's a model that is able to do anything on a computer screen that a kind of virtual human could do, and you talk to it, you give it a task, and maybe it's, you know, a task it does over, like, a day, where you say, you know, "we're going to implement this product feature," and what that means is it's writing some code, testing the code, you know, deploying that code to some test surface, talking to coworkers, writing design docs, writing Google Docs, writing Slacks and emails to people, and just like a human, the model goes off and does a bunch of those things and then checks in with you every once in a while. So I would think of it as an agent, I would think of it as, like, an autonomous virtual collaborator that acts on your behalf on a very long time scale and that you check in with every once in a while, and you think of it as having all the piping, all the inputs and outputs of a human operating virtually.

And so, the time scale on that, as you've mentioned: I suspect, I mean, you can never be sure with these things. The process of research is incredibly unpredictable.

I don't know for sure what will happen with us. I don't know for sure what will happen in the industry, but I do suspect, I'm not promising, I do suspect that a very strong version of these capabilities will come this year, um, and it may be in the first half of this year.

So let's talk about the elephant, or agent, in the room, which is that that's going to affect human work, right? You just described a lot of jobs that we have people doing. Yes, so I generally separate this out into short term and long term.

So in the short term, you know, we've seen technological disruption of labor many times in the past, and, you know, in my kind of time-compressed world, short term is maybe a year or 2 years or maybe, you know, maybe 3. On that time scale, I won't say we have a perfect solution, but we've seen technological disruption of labor before, and I think there it's important to think in terms of workers being adaptable, in terms of taking advantage of comparative advantage. Comparative advantage is remarkable.

Even if a machine does 90% of your job, what happens is that the 10% becomes super leveraged. You spend all your time doing that 10%. You do 10 times more of the 10% than you did before, and you get 10 times more done because the other 90% is leveraged.

So that can be good for humans. You can also learn how to sculpt your efforts around what the machine is doing. And so we can also think from a product perspective about how to design our products to enable complementarity rather than substitution. There was this interesting study from the economist Erik Brynjolfsson about a year ago that said by default, when companies deploy AI, they tend to deploy it in a replacement mode. But when they pay more attention to the deployment, they often find ways to deploy it in a complementary mode. When they do that, the productivity increases are greater, so that suggests there's some path dependence. There are different ways to deploy the technology, and we are thinking about that as we design this virtual collaborator. Again, I'm not gonna lie to you.

There's a brutally efficient market here, right? If doing things in a complementary way, you know, somehow will be reliably outcompeted in the market by not doing things in a complementary way, then the whole industry is in a difficult position and we have to think about how to get around that.

So there are very powerful market forces here, but I think there may be an opportunity, compatible with those market forces, to take a different path that's better for the whole industry, that's innovative, that manages to be better for humans while outcompeting the other approaches. That's the short term. In the long term, um, I feel very strongly that, I don't know exactly when it'll come.

I don't know if it'll be 2027. I think it's plausible it could be longer than that. I don't think it will be a whole bunch longer than that when AI systems are better than humans at almost everything, better than almost all humans at almost everything, and then eventually better than all humans at everything. Even robotics: if we make good enough AI systems, they'll enable us to make better robots. And so when that happens, we will need to have a conversation at places like this, right, at places like this event, about, you know, how do we organize our economy, right? How do humans find meaning, right? There are a lot of assumptions we made when humans were the most intelligent species on the planet that are going to be invalidated by what's happening with AI.

And I think the only good thing about it is that we'll all be in the same boat. I'm actually afraid of the world where 30% of human labor becomes fully automated by AI and the other 70% isn't. That's going to cause this just incredible, you know, class war between the groups that have been and the groups that haven't been.

If we're all in the same boat, it's not going to be easy, but I actually feel better about it, because we're going to have to sit down and say, whoa, you know, it's not like we're randomly picking every third person and saying you're useless. We're all in the same boat. We've recognized that we've reached the point as a technological civilization where there's huge abundance and huge value, but the idea that the way to distribute that value is for humans to produce economic labor, and this is where they feel their sense of self-worth, once that idea gets invalidated, we're all gonna have to sit down and figure it out. And, you know, I'm not gonna lie, my true belief is that that is coming.

I think AI companies are, you know, going to be at the center of that, or maybe in the crosshairs of that, and, you know, we have a responsibility to have an answer there. Yeah, I was going to ask about that. Because as you talk about that, though, right now there's a war over human talent in your industry, right?

You've got OpenAI and Meta and all of these other competitors. How do you think about attracting the best minds to Anthropic? So you know, I would make two points.

One is that, and I've said this on podcasts, like, my philosophy of talent is that talent density beats talent mass every time. You want a relatively small set of people where almost, you know, almost everyone you hire is really, really good.

Even if some other company is 10 times larger and has 2 times as many good people, there is some magic, there's some alchemy to good people working together with each other, where, when you hire an engineer, a researcher, they look around, and everyone they look around at is incredibly talented like them. So that has been our philosophy all along.

That is why I think talent has flocked to Anthropic. The second thing is the values and the honest presentation of those values. So it's been very important to us from the beginning to get these kind of safety and societal questions right.

I think there are multiple companies in the space that have, and I laud them for this, um, stated that these issues are important, and, you know, even have made some concrete efforts, but I think as time has gone on, as I've diverged from my previous employer along with my co-founders, and we've done things our way and they've done things their way and other companies have done things their ways, the differences start to emerge over time. You see companies make promises and you see how those promises pan out over time.

Um, you see what their internal employees think of the promises they've made, right? Some things don't become public, but, you know, you see by their actions, by how people vote with their feet, and I think this is very important. I think it's very important to really live these values, to have it be substantive and not a marketing exercise, and I think the talent that has been inside multiple of these companies recognizes that and understands that. And I think where the talent goes, you know, can be, I'm an optimist, a signal about who's really sincere. We don't have much time and there's so many things I want to get to, but I do want to talk a little bit about regulation, and I did notice you weren't at the inauguration yesterday that a.

Yeah, so, uh, you know, one way I think about things is, um, I think Anthropic is playing a little bit of a different game than some of the other players. One of the ways I state it is, Anthropic is a policy actor. Anthropic is not a political actor.

Um, you know, political actors are looking at, you know, how will I be treated by political players? How do I make sure, you know, the things I want to do don't get blocked by antitrust, or that regulation doesn't get in my way?

We have more of a perspective of, we're looking at the global AI landscape, and we have a set of views, a policy platform (maybe at some point we even put out a policy platform) of what we think needs to be done, right? From how we relate to China and maintain the lead over China, to, uh, you know, what the right testing and measurement is for the dangers of our own AI systems, to some of these economic issues that we've discussed.

Our view is that we have a certain perspective on these issues and we're going to describe that perspective to whoever listens. We described that perspective to the Biden administration. Now we're describing that perspective to the Trump administration, for example, on China issues.

I wrote an op-ed with Matt Pottinger, who was principal deputy national security adviser in the first Trump administration, just really to show that, you know, across the political spectrum and in industry, issues like export controls are of common interest. So I think what you'll see is, you know, Anthropic is not rushing to declare its fealty to one side or another.

Anthropic has a set of policy positions that we think, if everyone understood the situation, there should be bipartisan support for, because these issues are so central, because these issues are so important, and, you know, some political players, some individuals may be more or less sympathetic to them, but that doesn't matter to us. We're just going to go to everyone and describe what our preferred policies are, because I think if we don't get this right, society goes in a bad direction sooner or later.

If we're wrong, then our policy platform is the wrong platform. But if we're right, then it will be clear enough. It will be clear in 2 or 3 years that, you know, there will be a need for economic policies related to labor, that we really need to do testing and measurement of these models for national security risks, that it is existential, as the outgoing National Security Adviser Jake Sullivan said, to, you know, stay ahead of China, which is why some of these export controls were put in place. If those things are right, history will vindicate us, and history will vindicate us pretty quickly, like within the current presidential term. So the thing we're going to do is we're going to say the things that we think are the right policies to everyone, increasingly in public, and, you know, whatever people's ideology is, whatever they might be in favor of or against now, if we're right, and that's the key part, it will become clear in a couple of years, and then, you know, we'll have been on the right side of history. So that's how I think about policy. Any reaction to the overturning of the executive order this morning?

Yes, so the executive order actually, you know, it didn't do that much. It was just the first step. You know, we were generally supportive of it because it imposed reporting requirements for training large models, which I think is a sensible thing.

I think it makes sense for the national security apparatus to understand what companies are doing. It wasn't very burdensome. I think, you know, it probably took, like, I don't know, one person-day of time at Anthropic. But it also wasn't, you know, some super complicated regulatory regime.

It wasn't a regulatory regime at all. So I think while I was generally supportive of that executive order, you know, I think it was kind of relatively small potatoes in terms of what was being done. What I think is more important is, one, the export controls against China and preventing smuggling of chips.

That was what I wrote in the op ed with Pottinger. I think that that's absolutely, that's absolutely existential. We are just starting to see the value for military intelligence of these models.

I would also say that to the extent that we need to take care of the risks of our own models, having a lead against China, which is becoming increasingly difficult, really gives us the buffer to do that. And if we don't have that lead, we're in this Hobbesian international competition where, like, you can be in a catch-22.

Well, if we slow down 3 months to mitigate the risks of our own models, then China will get there. We don't want to end up in that situation in the first place.

Also important, I think, is internal testing and measurement. So the AISIs which we've worked with, which have done this internal testing and measurement, I think those are very important and you know we've seen bipartisan support for preserving those into the new administration. Again, these are mostly testing the models for national security risk.

Right, that should be a bipartisan thing. There's been some misunderstanding that, you know, that kind of testing is about DEI issues or something like that. That's not actually what any of the AISIs are about.

It's about testing for national security risk, and we need to continue to do that testing for national security risk, and I've seen bipartisan support for it. You, you mentioned you just said something as a turn of phrase and I just wanted to ask about. You said, um, it's a one person day at Anthropic.

Is that kind of how you guys think about things? Yeah, yeah, I mean, you know, is it like a one claw day like increasingly as as laude does, you know, more things or or helps people, you know, we, we kind of, you know, I, I. I think we are thinking in terms of like, you know, there's people and there's AI models and they're they're complimentary to each other in various ways.

There's a certain amount of computing and there's a certain amount of people. I mean, I think the whole economy is going to have to start thinking in this style, right? In my essay Machines of Loving Grace, I have this phrase, the marginal returns to intelligence, right?

Economists talk about marginal returns to land, labor, capital, technology, whatever. I think we should start thinking in terms of marginal returns to intelligence, because there are some tasks that intelligence helps with, and there are others where there's some physical limit or there's some societal limit where intelligence doesn't help. And so I think that style of thought is becoming increasingly common within Anthropic and should become increasingly common within the world.

I'm gonna ask two last questions I know I'm going over and someone's gonna start yelling at us in the back. Um, you recently, well, Amazon recently wrote you a $4 billion check. You raised more money.

I believe we reported $2 billion. Want to confirm that for us? Uh, I, I can't, you know, I can't confirm anything about, about ongoing raises, but if you, if you do, um, uh.

You know, if you do add up all the money we've raised or reported to raise, you know, it's definitely well into the well into the double digit billions of dollars. And just like zooming out, I mean, how do you, how are you dancing with these tech giants? Are they leading?

Are you leading? Yeah, I mean, you know, I think on one hand these partnerships make a lot of sense because we need a lot of compute and there's a lot of forward capital needed to train these models, and when these models are deployed, they're often deployed on the clouds because then you can offer security standards there. So economically, the partnership, it just makes sense.

It just makes sense economically. But our independence is also very important to us. This is why we have a partnership with multiple cloud partners.

For example, we work with Amazon, but we also work with Google. And every time we sign a contract with one of these cloud partners, we make sure that some of the things we've committed to, for example, our responsible scaling policy, which governs how we test and deal with and provide security to every new model that we build. Every time we work with one of these cloud partners, every time we sign a new agreement, we make sure that the way we deploy these models on the clouds is consistent with our responsible scaling policy, and actually that's had flow-through effects where awareness, education about this policy that we have has encouraged some of the cloud players to speed up their adoption of similar policies as well.

So this is an example of, you know, what I've called race to the top, which is set an example and, you know, other players may follow that example and do something good as well. Alright, I'm going to have one last question here because I feel like this is a question that I keep getting and we've been talking about AI coming to the workforce. What is your best advice to a young person who's going to be starting their career in the AI era? Or even before that, I mean, you're in high school, you're in college.

What is, what is... A few things. One is I would say, um, obviously learn to use the technology, that's the obvious one, right? Where it's changing, it's changing so quickly, um, and you know, I think those who are able to keep up will be in a much better position than those who are not.

Um, my second is I think the most important skill to cultivate is a critical skill, a kind of critical thinking skill, right? Learning to be critical about the information you see.

Now that AI systems are able to generate very, very plausible explanations, very plausible images, very plausible videos, I think there's a sense in which the information ecosystem has really kind of scrambled itself or inverted itself, and you really have to try very hard to know what's true and what's not true. It's just, it's turned into more of a jungle than a kind of curated environment, and I fear that some aspects of AI may make that worse. And so, you know, looking at something and saying, does that make sense?

Is that, is that really, you know, could that really be true? Like, I don't know, you just look on X or Twitter, whatever, you just, you see all these things and like, you know, 100,000 people like them, and you look and you're like, that doesn't make any sense, like that just doesn't make any sense at all, right?

Um, uh, and, uh, you know, kind of the old world of things being curated is gone. So somehow we need to make the new world, this marketplace, actually work and in some way converge to, um, things that are true.

Uh, and I think the critical thinking skills are going to be really important and can we use AI to enhance those critical thinking skills rather than, um, you know, rather than it kind of further corrupting the ecosystem.

Related Videos

26:55

OpenAI’s Friar: Strong AI Competition Comi...

Bloomberg Live

1,029 views

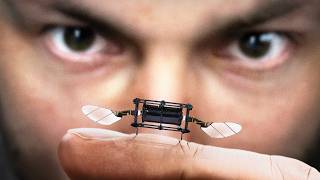

21:16

Why Are Scientists Making Robot Insects?

Veritasium

298,253 views

6:32

Anthropic CEO: More confident than ever th...

CNBC Television

21,061 views

30:13

President Trump’s Full Inauguration Speech...

WSJ News

17,855 views

20:54

Первые результаты Проверки на Твердые Част...

MODS

100,336 views

21:36

Build anything with DeepSeek R1, here’s how

David Ondrej

2,567 views

4:41

This free Chinese AI just crushed OpenAI's...

Fireship

110,672 views

24:27

How to Build Effective AI Agents (without ...

Dave Ebbelaar

33,415 views

18:37

NEW Tesla Prototype Spotted | We Didn't Ex...

Ryan Shaw

14,084 views

28:30

How do Graphics Cards Work? Exploring GPU...

Branch Education

3,075,851 views

41:45

Geoff Hinton - Will Digital Intelligence R...

Vector Institute

76,048 views

24:57

Putin can’t afford war as Russian economy ...

Times Radio

56,222 views

6:54

Tech CEOs Are Like Folk Heroes, Rubenstein...

David Rubenstein

5,684 views

5:22

Why we say “OK”

Vox

10,196,336 views

8:53

Interpreter Breaks Down How Real-Time Tran...

WIRED

7,991,976 views

30:10

The Inside Story of ChatGPT’s Astonishing ...

TED

1,809,671 views

15:27

Donald Trump, JD Vance dance with their wi...

TheColumbusDispatch

3,490,706 views

9:56

Novak Djokovic vs Carlos Alcaraz | Quarter...

Eurosport Tennis

313,787 views

9:30

This AI Robot Is Doing the Impossible - Un...

AI Revolution

134,703 views

1:55:51

AI: Grappling with a New Kind of Intelligence

World Science Festival

809,410 views