But what is the Riemann zeta function? Visualizing analytic continuation

4.93M views, 3606 words

3Blue1Brown

Unraveling the enigmatic function behind the Riemann hypothesis

Help fund future projects: https://w...

Video Transcript:

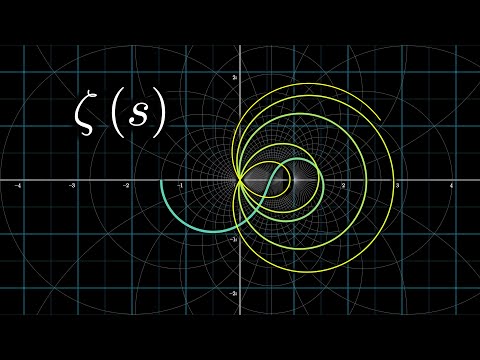

The Riemann zeta function. This is one of those objects in modern math that a lot of you might have heard of, but which can be really difficult to understand. Don't worry, I'll explain that animation that you just saw in a few minutes.

A lot of people know about this function because there's a one million dollar prize out for anyone who can figure out when it equals zero, an open problem known as the Riemann hypothesis. Some of you may have heard of it in the context of the divergent sum 1 plus 2 plus 3 plus 4 on and on up to infinity. You see, there's a sense in which this sum equals negative 1 twelfth, which seems nonsensical if not obviously wrong.

A common way to define what this equation is actually saying uses the Riemann zeta function. But as any casual math enthusiast who has started to read into this knows, its definition references an idea called analytic continuation, which has to do with complex-valued functions. And this idea can be frustratingly opaque and unintuitive.

So what I'd like to do here is just show you all what this zeta function actually looks like, and to explain what this idea of analytic continuation is in a visual and more intuitive way. I'm assuming that you know about complex numbers, and that you're comfortable working with them. And I'm tempted to say that you should know calculus, since analytic continuation is all about derivatives, but for the way I'm planning to present things I think you might actually be fine without that.

So to jump right into it, let's just define what this zeta function is. For a given input, where we commonly use the variable s, the function is 1 over 1 to the s, which is always 1, plus 1 over 2 to the s, plus 1 over 3 to the s, plus 1 over 4 to the s, on and on and on, summing up over all natural numbers. So for example, let's say you plug in a value like s equals 2.
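In symbols, the sum just described is:

```latex
\zeta(s) = \sum_{n=1}^{\infty} \frac{1}{n^{s}}
         = \frac{1}{1^{s}} + \frac{1}{2^{s}} + \frac{1}{3^{s}} + \frac{1}{4^{s}} + \cdots
```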

You'd get 1 plus 1 over 4 plus 1 over 9 plus 1 sixteenth, and as you keep adding more and more reciprocals of squares, this just so happens to approach pi squared over 6, which is around 1.645. There's a very beautiful reason why pi shows up here, and I might do a video on it at a later date, but that's just the tip of the iceberg for why this function is beautiful.

You could do the same thing for other inputs s, like 3 or 4, and sometimes you get other interesting values. And so far, everything feels pretty reasonable. You're adding up smaller and smaller amounts, and these sums approach some number.
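To see these sums settle down numerically, here is a minimal Python sketch that simply truncates the sum. The helper name `zeta_partial_sum` and the cutoffs are illustrative choices, and pi to the fourth over 90 is the classical closed form for s equals 4, used here only as a point of comparison.

```python
import math

def zeta_partial_sum(s: float, terms: int) -> float:
    """Truncated zeta sum: 1/1^s + 1/2^s + ... + 1/terms^s."""
    return sum(1.0 / n ** s for n in range(1, terms + 1))

# The tail left out after N terms of 1/n^2 is about 1/N, so 100,000 terms
# lands within roughly 1e-5 of pi^2/6; the 1/n^4 sum settles much faster.
print(zeta_partial_sum(2, 100_000), math.pi ** 2 / 6)
print(zeta_partial_sum(4, 1_000), math.pi ** 4 / 90)
```

Each extra term adds a smaller positive amount, which is exactly why the partial sums creep up toward a limit instead of running off.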

Great, no craziness here. Yet, if you were to read about it, you might see some people say that zeta of negative 1 equals negative 1 twelfth. But looking at this infinite sum, that doesn't make any sense.

When you raise each term to the negative 1, flipping each fraction, you get 1 plus 2 plus 3 plus 4 on and on over all natural numbers. And obviously that doesn't approach anything, certainly not negative 1 twelfth, right? And, as any mercenary looking into the Riemann hypothesis knows, this function is said to have trivial zeros at negative even numbers.

So for example, that would mean that zeta of negative 2 equals 0. But when you plug in negative 2, it gives you 1 plus 4 plus 9 plus 16 on and on, which again obviously doesn't approach anything, much less 0, right? Well, we'll get to negative values in a few minutes, but for right now, let's just say the only thing that seems reasonable.

This function only makes sense when s is greater than 1, which is when this sum converges. So far, it's simply not defined for other values. Now, with that said, Bernhard Riemann was something of a father to complex analysis, which is the study of functions that have complex numbers as inputs and outputs.

So rather than just thinking about how this sum takes a number s on the real number line to another number on the real number line, his main focus was on understanding what happens when you plug in a complex value for s. So for example, maybe instead of plugging in 2, you would plug in 2 plus i. Now, if you've never seen the idea of raising a number to the power of a complex value, it can feel kind of strange at first, because it no longer has anything to do with repeated multiplication.

But mathematicians found that there is a very nice and very natural way to extend the definition of exponents beyond their familiar territory of real numbers and into the realm of complex values. It's not super crucial to understand complex exponents for where I'm going with this video, but I think it'll still be nice if we just summarize the gist of it here. The basic idea is that when you write something like 1 half to the power of a complex number, you split it up as 1 half to the real part times 1 half to the pure imaginary part.

We're good on 1 half to the real part, there's no issues there. But what about raising something to a pure imaginary number? Well, the result is going to be some complex number on the unit circle in the complex plane.

As you let that pure imaginary input walk up and down the imaginary line, the resulting output walks around that unit circle. For a base like 1 half, the output walks around the unit circle somewhat slowly. But for a base that's farther away from 1, like 1 ninth, then as you let this input walk up and down the imaginary axis, the corresponding output is going to walk around the unit circle more quickly.
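This walk around the unit circle can be checked numerically. Here is a minimal Python sketch, assuming the standard definition b^(it) = e^(i t ln b) for a positive real base b (the video deliberately skips the why). The point stays at distance 1 from the origin, and its angle changes at a rate of |ln b| radians per unit of t: about 0.69 for a base of 1 half and about 2.20 for 1 ninth. The helper name `imaginary_power` is hypothetical.

```python
import cmath
import math

def imaginary_power(base: float, t: float) -> complex:
    """base ** (i*t) for a positive real base.

    Assumes the standard definition b^(it) = exp(i * t * ln(b)).
    """
    return cmath.exp(1j * t * math.log(base))

for base in (1 / 2, 1 / 9):
    # Angular speed around the unit circle, in radians per unit of t.
    print(f"base {base:.4f}: speed {abs(math.log(base)):.3f}")
    for t in (0.0, 0.5, 1.0):
        z = imaginary_power(base, t)
        print(f"  t={t}: {z:.3f}  |z| = {abs(z):.3f}")
```

Python's built-in `0.5 ** 1j` follows the same definition, so it agrees with `imaginary_power(0.5, 1.0)` up to rounding.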

If you've never seen this and you're wondering why on earth this happens, I've left a few links to good resources in the description. Here, I'm just going to move forward with the what, without the why. The main takeaway is that when you raise something like 1 half to the power of 2 plus i, which is 1 half squared times 1 half to the i, that 1 half to the i part is going to be on the unit circle, meaning it has an absolute value of 1.

So when you multiply by it, it doesn't change the size of the number; it just takes that 1 fourth and rotates it somewhat. So, if you were to plug in 2 plus i to the zeta function, one way to think about what it does is to start off with all of the terms raised to the power of 2, which you can think of as piecing together lines whose lengths are the reciprocals of squares of numbers, which, like I said before, converges to pi squared over 6. Then when you change that input from 2 up to 2 plus i, each of these lines gets rotated by some amount.
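A quick numerical check of the "rotate, don't resize" claim, as a Python sketch relying on Python's built-in complex powers: 1 half to the 2 plus i equals 1 fourth times a unit-length factor, and every term of the zeta sum at 2 plus i keeps the length 1 over n squared that it had at s equals 2.

```python
s = 2 + 1j

# (1/2)^(2+i) splits as (1/2)^2 * (1/2)^i; the second factor has absolute value 1.
z = 0.5 ** s
split = 0.5 ** 2 * 0.5 ** 1j
print(abs(z - split))   # essentially 0
print(abs(0.5 ** 1j))   # essentially 1
print(abs(z))           # essentially 0.25: the 1/4 is rotated, not resized

# The same holds for every term of the zeta sum: 1/n^(2+i) keeps length 1/n^2.
for n in range(1, 6):
    term = n ** -s
    print(n, abs(term), 1 / n ** 2)
```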

But importantly, the lengths of those lines won't change, so the sum still converges, it just does so in a spiral to some specific point on the complex plane. Here, let me show what it looks like when I vary the input s, represented with this yellow dot on the complex plane, where this spiral sum is always going to be showing the converging value for zeta of s. What this means is that zeta of s, defined as this infinite sum, is a perfectly reasonable complex function as long as the real part of the input is greater than 1, meaning the input s sits somewhere on this right half of the complex plane.
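Here is a small sketch of that spiral for s equals 2 plus i, in plain Python with no plotting; `zeta_partial_sums` is a hypothetical helper name. Each running total is one corner of the spiral, and the bound in the final comment comes from nothing more than the unchanged lengths 1 over n squared.

```python
def zeta_partial_sums(s: complex, terms: int) -> list:
    """Running partial sums of 1/n^s; plotted in order, they trace the spiral."""
    total = 0j
    sums = []
    for n in range(1, terms + 1):
        # Term of length 1/n^Re(s), pointing at angle -Im(s) * ln(n).
        total += n ** (-s)
        sums.append(total)
    return sums

s = 2 + 1j
sums = zeta_partial_sums(s, 20_000)
for N in (1, 2, 3, 4, 10, 100, 1_000, 20_000):
    print(N, sums[N - 1])

# Because Re(s) = 2 > 1, the leftover lengths after N terms add up to about 1/N,
# so going from 10,000 to 20,000 terms moves the point by at most about 5e-5.
print(abs(sums[-1] - sums[9_999]))
```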

Again, this is because it's the real part of s that determines the size of each number, while the imaginary part just dictates some rotation. So now what I want to do is visualize this function. It takes in inputs on the right half of the complex plane and spits out outputs somewhere else in the complex plane.

A super nice way to understand complex functions is to visualize them as transformations, meaning you look at every possible input to the function and just let it move over to the corresponding output. For example, let's take a moment and try to visualize something a little bit easier than the zeta function. Say f of s is equal to s squared.

When you plug in s equals 2, you get 4, so we'll end up moving that point at 2 over to the point at 4. When you plug in negative 1, you get 1, so the point over here at negative 1 is going to end up moving over to the point at 1. When you plug in i, by definition its square is negative 1, so it's going to move over here to negative 1.
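The same point moves can be written out in a couple of lines of Python. As an extra illustration (not from the video), the last loop checks where one whole vertical grid line ends up: s = 1 + iy lands on (1 − y²) + 2iy, which traces the parabola u = 1 − v²/4.

```python
def f(s: complex) -> complex:
    """f(s) = s^2, viewed as moving each point s of the plane to s^2."""
    return s * s

for s in (2, -1, 1j, 1 + 1j):
    print(f"{s} -> {f(s)}")

# A whole grid line moves too: the vertical line Re(s) = 1, i.e. s = 1 + iy,
# lands on (1 - y^2) + 2iy, which traces the parabola u = 1 - v^2 / 4.
for y in (-2.0, -1.0, 0.0, 1.0, 2.0):
    w = f(complex(1, y))
    assert abs(w.real - (1 - w.imag ** 2 / 4)) < 1e-12
```

This bending of straight grid lines into curves is exactly what the animation shows.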

Now I'm going to add on a more colorful grid, and this is just because things are about to start moving, and it's kind of nice to have something to distinguish grid lines during that movement. From here, I'll tell the computer to move every single point on this grid over to its corresponding output under the function f of s equals s squared. Here's what it looks like.

That can be a lot to take in, so I'll go ahead and play it again. And this time, focus on one of the marked points, and notice how it moves over to the point corresponding to its square. It can be a little complicated to see all of the points moving all at once, but the reward is that this gives us a very rich picture for what the complex function is actually doing, and it all happens in just two dimensions.

So, back to the zeta function. We have this infinite sum, which is a function of some complex number s, and we feel good and happy about plugging in values of s whose real part is greater than 1, and getting some meaningful output via the converging spiral sum. So to visualize this function, I'm going to take the portion of the grid sitting on the right side of the complex plane here, where the real part of numbers is greater than 1, and I'm going to tell the computer to move each point of this grid to the appropriate output.

It actually helps if I add a few more grid lines around the number 1, since that region gets stretched out by quite a bit. Alright, so first of all, let's all just appreciate how beautiful that is. I mean, damn, if that doesn't make you want to learn more about complex functions, you have no heart.

But also, this transformed grid is just begging to be extended a little bit. For example, let's highlight these lines here, which represent all of the complex numbers with imaginary part 1 or negative 1. After the transformation, these lines make such lovely arcs before they just abruptly stop.

Don't you want to just, you know, continue those arcs? In fact, you can imagine how some altered version of the function, with a definition that extends into this left half of the plane, might be able to complete this picture with something that's quite pretty. Well, this is exactly what mathematicians working with complex functions do.

They continue the function beyond the original domain where it was defined. Now, as soon as we branch over into inputs where the real part is 1 or less, this infinite sum that we originally used to define the function doesn't make sense anymore. You'll get nonsense, like adding 1 plus 2 plus 3 plus 4 on and on up to infinity.

But just looking at this transformed version of the right half of the plane, where the sum does make sense, it's just begging us to extend the set of points that we're considering as inputs. Even if that means defining the extended function in some way that doesn't necessarily use that sum. Of course, that leaves us with the question, how would you define that function on the rest of the plane?

You might think that you could extend it any number of ways. Maybe you define an extension that makes it so the point at, say, s equals negative 1 moves over to negative 1 twelfth. But maybe you squiggle on some extension that makes it land on any other value.

I mean, as soon as you open yourself up to the idea of defining the function differently for values outside that domain of convergence, that is, not based on this infinite sum, the world is your oyster, and you can have any number of extensions, right? Well, not exactly. I mean, yes, you can give any child a marker and have them extend these lines any which way, but if you add on the restriction that this new extended function has to have a derivative everywhere, it locks us into one and only one possible extension.

I know, I know, I said that you wouldn't need to know about derivatives for this video, and even if you do know calculus, maybe you have yet to learn how to interpret derivatives for complex functions. But luckily for us, there is a very nice geometric intuition that you can keep in mind for when I say a phrase like, has a derivative everywhere. Here, to show you what I mean, let's look back at that f of s equals s squared example.

Again, we think of this function as a transformation, moving every point s of the complex plane over to the point s squared. For those of you who know calculus, you know that you can take the derivative of this function at any given input, but there's an interesting property of that transformation that turns out to be related and almost equivalent to that fact. If you look at any two lines in the input space that intersect at some angle, and consider what they turn into after the transformation, they will still intersect each other at that same angle.

The lines might get curved, and that's okay, but the important part is that the angle at which they intersect remains unchanged, and this is true for any pair of lines that you choose. So when I say a function has a derivative everywhere, I want you to think about this angle-preserving property, that any time two lines intersect, the angle between them remains unchanged after the transformation. At a glance, this is easiest to appreciate by noticing how all of the curves that the grid lines turn into still intersect each other at right angles.

Complex functions that have a derivative everywhere are called analytic, so you can think of this term analytic as meaning angle-preserving. Admittedly, I'm lying to you a little here, but only a little bit. A slight caveat for those of you who want the full details is that at inputs where the derivative of a function is zero, instead of angles being preserved, they get multiplied by some integer.
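Both the angle-preserving property and its caveat can be checked numerically for f of s equals s squared. This Python sketch approximates each line near a point by a tiny segment (a finite-difference approximation, with the hypothetical helper `image_angle`) and measures the angle between the images of two such segments. Away from 0, where the derivative 2s is nonzero, a 0.7-radian crossing stays 0.7 radians; at s equals 0, where the derivative vanishes, it doubles, so for s squared the integer in question is 2.

```python
import cmath

def f(s: complex) -> complex:
    """The example map f(s) = s^2."""
    return s * s

def image_angle(point: complex, dir_a: complex, dir_b: complex, h: float = 1e-6) -> float:
    """Angle between the images under f of two tiny segments leaving `point`
    in the directions dir_a and dir_b (finite-difference approximation)."""
    da = f(point + h * dir_a) - f(point)
    db = f(point + h * dir_b) - f(point)
    return abs(cmath.phase(db / da))

a = 1 + 0j              # direction of a horizontal line
b = cmath.exp(0.7j)     # a line crossing it at 0.7 radians

# Away from 0, f'(s) = 2s is nonzero, so the crossing angle survives.
print(image_angle(1 + 2j, a, b))   # close to 0.7

# At s = 0, f'(0) = 0 and the angle gets multiplied by 2 instead.
print(image_angle(0j, a, b))       # close to 1.4
```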

But those points are by far the minority, and for almost all inputs to an analytic function, angles are preserved. So if when I say analytic, you think angle-preserving, I think that's a fine intuition to have. Now, if you think about it for a moment, and this is a point that I really want you to appreciate, this is a very restrictive property.

The angle between any pair of intersecting lines has to remain unchanged. And yet, pretty much any function out there that has a name turns out to be analytic. The field of complex analysis, which Riemann helped to establish in its modern form, is almost entirely about leveraging the properties of analytic functions to understand results and patterns in other fields of math and science.

The zeta function, defined by this infinite sum on the right half of the plane, is an analytic function. Notice how all of these curves that the grid lines turn into still intersect each other at right angles. So the surprising fact about complex functions is that if you want to extend an analytic function beyond the domain where it was originally defined, for example, extending this zeta function into the left half of the plane, then if you require that the new extended function still be analytic, that is, that it still preserves angles everywhere, it forces you into only one possible extension, if one exists at all.

It's kind of like an infinite continuous jigsaw puzzle, where this requirement of preserving angles locks you into one and only one choice for how to extend it. This process of extending an analytic function in the only way possible that's still analytic is called, as you may have guessed, analytic continuation. So that's how the full Riemann zeta function is defined.

For values of s on the right half of the plane, where the real part is greater than 1, we can plug them into this sum and see where it converges. And that convergence might look like some kind of spiral, since raising each of these terms to a complex power has the effect of rotating each one. Then for the rest of the plane, we know that there exists one and only one way to extend this definition so that the function will still be analytic, that is, so that it still preserves angles at every single point.

So we just say that by definition, the zeta function on the left half of the plane is whatever that extension happens to be. And that's a valid definition because there's only one possible analytic continuation. Notice, that's a very implicit definition.

It just says, use the solution of this jigsaw puzzle, which through more abstract derivation we know must exist, but it doesn't specify exactly how to solve it. Mathematicians have a pretty good grasp on what this extension looks like, but some important parts of it remain a mystery. A million dollar mystery, in fact.
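For one concrete handle on that extension, here is a Python sketch of a standard numerical route. It is an assumption on my part, not how the animations were made, and it only covers inputs on or to the right of the critical line, away from s equals 1. On the half-plane Re(s) > 0, the alternating series η(s) = 1 − 1/2^s + 1/3^s − 1/4^s + ⋯ converges and ζ(s) = η(s) / (1 − 2^(1−s)); Borwein's algorithm (2000) reweights that alternating sum so it converges quickly. The name `zeta_borwein` and the default n = 50 are illustrative.

```python
import math

def zeta_borwein(s: complex, n: int = 50) -> complex:
    """Approximate the analytically continued zeta(s); intended for Re(s) >= 1/2, s != 1.

    Assumed identities (standard, not from the video):
        eta(s)  = sum_{k>=0} (-1)^k / (k+1)^s      converges for Re(s) > 0
        zeta(s) = eta(s) / (1 - 2^(1-s))
    Borwein's weights (d_n - d_k) / d_n taper the alternating series; for
    moderate |Im(s)| the error shrinks roughly like (3 + sqrt(8))^(-n).
    """
    # d_k = n * sum_{i=0}^{k} (n+i-1)! 4^i / ((n-i)! (2i)!)
    d = []
    running = 0.0
    for i in range(n + 1):
        running += (math.factorial(n + i - 1) * 4 ** i
                    / (math.factorial(n - i) * math.factorial(2 * i)))
        d.append(n * running)
    total = sum((-1) ** k * (d[k] - d[n]) / (k + 1) ** s for k in range(n))
    eta = -total / d[n]
    return eta / (1 - 2 ** (1 - s))

print(zeta_borwein(2), math.pi ** 2 / 6)
print(zeta_borwein(0.5))
print(abs(zeta_borwein(0.5 + 14.134725141734693j)))
```

The first line should match pi squared over 6. The second gives a finite value near −1.46035 even though the original sum diverges at s equals 1 half. The third input, 1/2 + 14.134725141734693i, is, to the digits given, the first non-trivial zero on the critical line, so its output should be extremely close to 0.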

Let's actually take a moment and talk about the Riemann hypothesis, which is a million dollar problem. The places where this function equals zero turn out to be quite important, that is, which points get mapped onto the origin after the transformation. One thing we know about this extension is that the negative even numbers get mapped to zero.

These are commonly called the trivial zeros. The naming here stems from a long-standing tradition of mathematicians calling things trivial when they understand them quite well, even when the fact is not at all obvious from the outset. We also know that the rest of the points that get mapped to zero sit somewhere in this vertical strip of inputs whose real part is between 0 and 1, called the critical strip, and the specific placement of those non-trivial zeros encodes surprising information about prime numbers.

It's actually pretty interesting why this function carries so much information about primes, and I definitely think I'll make a video about that later on, but right now things are long enough, so I'll leave it unexplained. Riemann hypothesized that all of these non-trivial zeros sit right in the middle of the strip, on the line of numbers s whose real part is one half. This is called the critical line.

If that's true, it gives us a remarkably tight grasp on the pattern of prime numbers, as well as many other patterns in math that stem from this. Now, so far, when I've shown what the zeta function looks like, I've only shown what it does to the portion of the grid on the screen, and that kind of undersells its complexity. So if I were to highlight this critical line and apply the transformation, it might not seem to cross the origin at all.

However, here's what the transformed version of more and more of that line looks like. Notice how it's passing through the number zero many, many times. If you can prove that all of the non-trivial zeros sit somewhere on this line, the Clay Math Institute gives you one million dollars.

And you'd also be proving hundreds, if not thousands, of modern math results that have already been shown contingent on this hypothesis being true. Another thing we know about this extended function is that it maps the point negative one over to negative one twelfth. And if you plug this into the original sum, it looks like we're saying one plus two plus three plus four, on and on up to infinity, equals negative one twelfth.
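The continued function's values at 0 and at the negative integers have a known closed form in terms of Bernoulli numbers. This is a standard result the video does not derive: ζ(−n) = (−1)^n B_(n+1) / (n+1) for n = 0, 1, 2, and so on, with the convention B_1 = −1/2. Assuming that formula, this exact-arithmetic Python sketch reproduces both the negative 1 twelfth at s equals negative 1 and the trivial zeros at the negative even numbers. The helper names are illustrative.

```python
from fractions import Fraction
from math import comb

def bernoulli(m: int) -> list:
    """B_0..B_m from sum_{k=0}^{j} C(j+1, k) B_k = 0 (gives the convention B_1 = -1/2)."""
    B = [Fraction(1)]
    for j in range(1, m + 1):
        B.append(-sum(comb(j + 1, k) * B[k] for k in range(j)) / (j + 1))
    return B

def zeta_at_nonpositive_integer(n: int) -> Fraction:
    """Value of the continued zeta at s = -n, via zeta(-n) = (-1)^n B_{n+1} / (n + 1)."""
    return (-1) ** n * bernoulli(n + 1)[n + 1] / (n + 1)

for n in range(0, 7):
    print(f"zeta({-n}) = {zeta_at_nonpositive_integer(n)}")
```

Using `Fraction` keeps everything exact, so the zeros at negative even numbers come out as exactly 0 rather than as tiny floating-point residues.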

Now, it might seem disingenuous to still call this a sum, since the zeta function on the left half of the plane is not defined directly from this sum. Instead, it comes from analytically continuing the sum beyond the domain where it converges, that is, solving the jigsaw puzzle that began on the right half of the plane.

That said, you have to admit that the uniqueness of this analytic continuation, the fact that the jigsaw puzzle has only one solution, is very suggestive of some intrinsic connection between these extended values and the original sum.
