4. Foundations: Skinner

YaleCourses

Introduction to Psychology (PSYC 110)

Professor Bloom opens with a brief discussion of the value an...

Video Transcript:

Professor Paul Bloom: I actually want to begin by going back to Freud and hitting a couple of loose ends. There was a point in my lecture on Wednesday where I skipped over some parts. I said, "We don't have time for this" and I just whipped past it. And I couldn't sleep over the weekend. I've been tormented. I shouldn't have skipped that and I want to hit--Let me tell you why I skipped it. The discussion I skipped was the discussion of why we would have an unconscious at all. So, I was talking about the scientifically respectable

ideas of Freud and I want to talk about some new ideas about why there could be an unconscious. Now, the reason why I skipped it is I'm not sure this is the best way to look at the question. As we will learn throughout the course, by far the vast majority of what our brains do, the vast majority of what our minds do, is unconscious and we're unaware of it. So the right question to ask may not be, "Why are some things unconscious?" but rather, why is this tiny subset of mental life--why is this conscious? On

the other hand, these claims about the utility of unconsciousness, I think, are provocative and interesting. So I just wanted to quickly share them with you. So, the question is, from an evolutionary standpoint, "Why would an unconscious evolve?" And an answer that some psychologists and biologists have given is deception. So, most animals do some deception. And deception defined broadly is simply to act or be in some way that fools others into believing or thinking or responding to something that's false. There's physical examples of deception. When threatened, chimpanzees--their hair stands up on end and that makes them

look bigger to fool others into thinking they're more dangerous than they are. There's an angler fish at the bottom of the ocean that has a rod sticking up from the top of its head with a lure to capture other fish – to fool them into thinking that this is something edible so that they themselves are devoured. But humans, primates in general but particularly humans, are masters of deception. We use our minds and our behaviors and our actions continually to try to trick people into believing what's not true. We try to trick people, for instance, into

believing that we're tougher, smarter, sexier, more reliable, more trustworthy and so on, than we really are. And a large part of social psychology concerns the way in which we present ourselves to other people so as to make the maximally positive impression even when that impression isn't true. At the same time, though, we've also evolved very good lie detection mechanisms. So not only is there evolutionary pressure for me to lie to you, for me to persuade you for instance, that if we're going to have a--if you are threatening me don't threaten me, I am not the

sort of man you could screw around with. But there's evolutionary pressure for you to look and say, "No. You are the sort of man you could screw around with. I can tell." So how do you become a good liar? And here's where the unconscious comes in. The hypothesis is: the best lies are lies we tell ourselves. You're a better liar, more generally, if you believe the lie that you're telling. This could be illustrated with a story about Alfred Hitchcock. The story goes--He hated working with child actors but he often had to. And the story goes--He

was dealing with a child actor who simply could not cry. And, finally frustrated, Hitchcock went to the actor, leaned over, whispered in his ear, "Your parents have left you and they're never coming back." The kid burst into tears. Hitchcock said, "Roll ’em" and filmed the kid. And the kid, if you were to see him, you'd say, "That's--Boy, he's--he really looks as if he's sad" because he was. If I had a competition where I'd give $100,000 to the person who looks the most as if they are in pain, it is a very good tactic to take

a pen and jam it into your groin because you will look extremely persuasively as if you are in pain. If I want to persuade you that I love you, would never leave you, you can trust me with everything, it may be a superb tactic for me to believe it. And so, this account of the evolution of the unconscious is that certain motivations and goals, particularly sinister ones, are better made to be unconscious because if a person doesn't know they have them they will not give them away. And this is something I think we should return

to later on when we talk about social interaction and social relationships. One other thing on Freud--just a story of the falsification of Freud. I was taking my younger child home from a play date on Sunday and he asked me out of the blue, "Why can't you marry your mother or your father?" Now, that's actually a difficult question to ask--to answer for a child, but I tried my best to give him an answer. And then I said--then I thought back on the Freud lecture and so I asked him, "If you could marry anybody you want, who

would it be?" imagining he'd make explicit the Oedipal complex and name his mother. Instead, he paused for a moment and said, "I would marry a donkey and a big bag of peanuts." [laughter] Both his parents are psychologists and he hates these questions and at times he just screws around with us. [laughter] Okay. Last class I started with Freud and now I want to turn to Skinner. And the story of Skinner and science is somewhat different from the story of Freud. Freud developed and championed the theory of psychoanalysis by himself. It is as close as you

could find in science to a solitary invention. Obviously, he drew upon all sorts of sources and predecessors but psychoanalysis is identified as Freud's creation. Behaviorism is different. Behaviorism is a school of thought that was there long before Skinner, championed by psychologists like John Watson, for instance. Skinner came a bit late into this but the reason why we've heard of Skinner and why Skinner is so well known is that he packaged these notions. He expanded upon them; he publicized them; he developed them scientifically and presented them both to the scientific community and to the popular community. And

sociologically, in the 1960s and 1970s in the United States, behaviorism was incredibly well known and so was Skinner. He was the sort of person you would see on talk shows. His books were bestsellers. Now, at the core of behaviorism are three extremely radical and interesting views. The first is a strong emphasis on learning. The strong view of behaviorism is that everything you know, everything you are, is the result of experience. There's no real human nature. Rather, people are infinitely malleable. There's a wonderful quote from John Watson and in this quote John Watson is paraphrasing a famous

boast by the Jesuits. The Jesuits used to claim, "Give me a child until the age of seven and I'll show you the man," that they would take a child and turn him into anything they wanted. And Watson expanded on this boast: "Give me a dozen healthy infants, well-formed, and my own specified world to bring them up in and I'll guarantee to take any one at random and train him to become any type of specialist I might select--doctor, lawyer, artist, merchant-chief and, yes, even beggar-man and thief, regardless of his talents, penchants, tendencies, abilities, vocations and race

of his ancestors." Now, you could imagine--You could see a tremendous appeal in this view because Watson has, in a sense, an extremely egalitarian view. If there's no human nature, then there's no sense in which one group of humans by dint of their race or their sex could be better than another group. And Watson was explicit. None of those facts about people will ever make any difference. What matters to what you are is what you learn and how you're treated. And so, Watson claimed he could create anybody in any way simply by treating them

in a certain fashion. A second aspect of behaviorism was anti-mentalism. And what I mean by this is the behaviorists were obsessed with the idea of doing science and they felt, largely in reaction to Freud, that claims about internal mental states like desires, wishes, goals, emotions and so on, are unscientific. These invisible, vague things can never form the basis of a serious science. And so, the behaviorist manifesto would then be to develop a science without anything that's unobservable and instead use notions like stimulus and response and reinforcement and punishment and environment that refer to real world

and tangible events. Finally, behaviorists believed there were no interesting differences across species. A behaviorist might admit that a human can do things that a rat or pigeon couldn't but a behaviorist might just say, "Look. Those are just general associative powers that differ" or they may even deny it. They might say, "Humans and rats aren't different at all. It's just humans tend to live in a richer environment than rats." From that standpoint, from that theoretical standpoint, comes a methodological approach which is, if they're all the same then you could study human learning by studying nonhuman animals.

And that's a lot of what they did. Okay. I'm going to frame my introduction--my discussion of behaviorism in terms of the three main learning principles that they argue can explain all of human mental life, all of human behavior. And then, I want to turn to objections to behaviorism but these three principles are powerful and very interesting. The first is habituation. This is the very simplest form of learning. Technically, it's described as a decline in the tendency to respond to stimuli that are familiar due to repeated exposure. "Hey!" "Hey!" The sudden noise

startles but as it--as you hear it a second time it startles less. The third time is just me being goofy. It's just--It's--You get used to things. And this, of course, is common enough in everyday life. We get used to the ticking of a clock or to the noise of traffic but it's actually a very important form of learning because imagine life without it. Imagine life where you never got used to anything, where suddenly somebody steps forward and waves their hand and you'd go, "Whoa," and then they wave their hand again and you'd go, "Whoa," and you

keep--[laughter] And there's the loud ticking of a clock and you say, "Hmmm." And that's not the way animals or humans work. You get used to things. And it's actually critically important to get used to things because it's a useful adaptive mechanism to keep track of new events and objects. It's important to notice something when it's new because then you have to decide whether it's going to harm you, how to deal with it, to attend to it, but you can't keep on noticing it. And, in fact, you should stop noticing it after it's been in the

environment for long enough. So, this counts as learning because it happens through experience. It's a way to learn through experience, to change your way of thinking through experience. And also, it's useful because new and potentially harmful stimuli are noticed but when something has shown itself to be part of the environment you don't notice it anymore. The existence of habituation is important for many reasons. One thing it's important for is that clever developmental psychologists have used habituation as a way to study creatures who can't talk, like nonhuman animals and young babies. And when I talk on Wednesday about developmental

psychology I'll show different ways in which psychologists have used habituation to study the minds of young babies. The second sort of learning is known as classical conditioning. And what this is in a very general sense is the learning of an association between one stimulus and another stimulus, where "stimulus" is a technical term meaning an event in the environment, like a certain smell or sound or sight. It was discovered by Pavlov. This is Pavlov's famous dog and it's an example of scientific serendipity. Pavlov, when he started this research, had no interest at all in learning. He

was interested in saliva. And to get saliva he had to have dogs. And he had to attach something to dogs so that their saliva would pour out so he could study saliva. No idea why he wanted to study saliva, but he then discovered something. What he would do is he'd put food powder in the dog's mouth to generate saliva. But Pavlov observed that when somebody who typically gave the dog the food powder entered the room, the dog's saliva would start to come out. And later on if you--right before or right during you give the

dog some food – you ping a bell – the bell will cause the saliva to come forth. And, in fact, this is the apparatus that he used for his research. He developed the theory of classical conditioning by making a distinction between two sorts of conditioning, two sorts of stimulus-response relationships. One is unconditioned. An unconditioned relationship is when an unconditioned stimulus gives rise to an unconditioned response. And this is what you start off with. So, if somebody pokes you with a stick and you say, "Ouch," because it hurts, the poking and the "ouch" is an unconditioned

stimulus causing an unconditioned response. You didn't have to learn that. When Pavlov put food powder in the dog's mouth and saliva was generated, that's an unconditioned stimulus giving rise to an unconditioned response. But what happens through learning is that another association develops – that between the conditioned stimulus and the conditioned response. So when Pavlov, for instance--Well, when Pavlov, for instance, started before conditioning there was simply an unconditioned stimulus, the food in the mouth, and an unconditioned response, saliva. The bell was nothing. The bell was a neutral stimulus. But over and over again, if you put

the bell and the food together, pretty soon the bell will generate saliva. And now the bell--When--You start off with the unconditioned stimulus, unconditioned response. When the conditioned stimulus and the unconditioned stimulus are brought together over and over and over again, pretty soon the conditioned stimulus gives rise to the response. And now it's known as the conditioned stimulus giving rise to the conditioned response. This is discussed in detail in the textbook but I also--I'm going to give you--Don't panic if you don't get it quite now. I'm going to give you further and further examples. So, the

idea here is, repeated pairings of the unconditioned stimulus and the conditioned stimulus will give rise to the conditioned response. And there's a difference between reinforced trials and unreinforced trials. A reinforced trial is when the conditioned stimulus and the unconditioned stimulus go together. You're--and to put it in a crude way, you're teaching the dog that the bell goes with the food. An unreinforced trial is when you get the bell without the food. You're not teaching the dog this. And, in fact, once you teach an animal something, if you stop doing the teaching the response goes away and

this is known as extinction. But here's a graph. If you get--They really count the number of cubic centimeters of saliva. The dog is trained so that when the bell comes on--Actually, I misframed it. I'll try again. When the bell comes connected with food, there's a lot of saliva. An unreinforced trial is when the bell goes on but there's no food. So, it's--Imagine you're the dog. So, you get food in your mouth, "bell, food, bell, food," and now "bell." But next you get "bell, bell, bell." You give it up. You stop. You stop responding to the

bell. A weird thing which is discussed in the textbook is that if you wait a while and then you try it again with the bell after a couple of hours, the saliva comes back. This is known as spontaneous recovery. So, this all seems a very technical phenomenon related to animals and the like but it's easy to see how it generalizes and how it extends. One interesting notion is that of stimulus generalization. And stimulus generalization is the topic of one of your articles in The Norton Reader, the one by Watson, John Watson, the famous behaviorist, who reported

a bizarre experiment with a baby known as Little Albert. And here's the idea. Little Albert originally liked rats. In fact, I'm going to show you a movie of Little Albert originally liking rats. See. He's okay. No problem. Now, Watson did something interesting. As Little Albert was playing with the rat, "Oh, I like rats, oh," Watson went behind the baby--this is the--it's in the chapter--and banged the metal bar right here. The baby, "Aah," screamed, started to sob. Okay. What's the unconditioned stimulus? Somebody. The loud noise, the bar, the bang. What's the unconditioned response? Crying, sadness,

misery. And as a result of this, Little Albert grew afraid of the rat. So there--what would be the conditioned stimulus? The rat. What would be the conditioned response? Fear. Excellent. Moreover, this fear extended to other things. So, this is a very weird and unpersuasive clip. But the idea is--the clip is to make the point that the fear will extend to a rabbit, a white rabbit. So, the first part, Little Albert's fine with the white rabbit. The second part is after he's been conditioned and he's kind of freaked out with the white rabbit. The problem is

in the second part they're throwing the rabbit at him but now he's okay. [laughter] Is the mic on? Oh. This is fine. This is one of a long list of experiments that we can't do anymore. So, classical conditioning is more than a laboratory phenomenon. The findings of classical conditioning have been extended and replicated in all sorts of animals including crabs, fish, cockroaches and so on. And it's been argued to be an extension of--it's argued to underlie certain interesting aspects of human responses. So, I have some examples here. One example is fear. So, the Little Albert

idea--The Little Albert experiment, provides an illustration for how phobias could emerge. Some proportion of people in this room have phobias. Imagine you're afraid of dogs. Well, a possible story for the--for why you became afraid of dogs is that one day a dog came up and he was a neutral stimulus. No problem. And all of a sudden he bit you. Now the pain of a bite, being bit, and then the pain and fear of that is an unconditioned stimulus, unconditioned response. You're just born with that, "ow." But the presence of the dog there is a conditioned

stimulus and so you grew to be afraid of dogs. If you believe this, this also forms the basis for a theory of how you could make phobias go away. How do you make conditioned stimulus, conditioned response things go away? Well, what you do is you extinguish them. How do you extinguish them? Well, you show the thing that would cause you to have the fear without the unconditioned stimulus. Here's an illustration. It's a joke. Sorry. He's simultaneously confronting the fear of heights, snakes, and the dark because he's trapped in that thing and the logic

is--the logic of--the logic is not bad. He's stuck in there. Those are all his conditioned stimuli. But nothing bad happens so his fear goes away. The problem is that while he's stuck in there he has this screaming, horrific panic attack and then it makes his fear much worse. So, what they do now though, and we'll talk about this much later in the course when we talk about clinical psychology--but one cure for phobias does draw upon, in a more intelligent way, the behaviorist literature. So, the claim about a phobia is that there's a bad association

between, say, dogs and fear, or between airplanes or snakes and some bad response. So, what they do is what's called "systematic desensitization," which is they expose you to what causes you the fear but they relax you at the same time so you replace the aversive classically conditioned fear with something more positive. Traditionally, they used to teach people relaxation exercises but that proved too difficult. So nowadays they just pump you full of some drug to get you really happy and so you're really stoned out of your head, and you think, "This isn't so bad." It's more complicated

than that but the notion is you can use these associative tools perhaps to deal with questions about fear, phobias and how they go away. Hunger. We'll spend some time in this course discussing why we eat and when we eat. And one answer to why we eat and when we eat is that there's cues in the environment that are associated with eating. And these cues generate hunger. For those of you who are trying to quit smoking, you'll notice that there's time--or to quit drinking there's times of the day or certain activities that really make you want

to smoke or really make you want to drink. And from a behaviorist point of view this is because of the associative history of these things. More speculatively, classical conditioning has been argued to be implicated in the formation of sexual desire, including fetishes. So a behaviorist story about fetishes, for instance, is it's straightforward classical conditioning. Just as your lover's caress brings you to orgasm, your eyes happen to fall upon a shoe. Through the simple tools of classical conditioning then, the shoe becomes a conditioned stimulus giving rise to the conditioned response of sexual pleasure. This almost certainly

is not the right story but again, just as in phobias, some ideas of classical conditioning may play some role in determining what we like and what we don't like sexually. And in fact, one treatment for pedophiles and rapists involved controlled fantasies during masturbation to shift the association from domination and violence, for instance, to develop more positive associations with sexual pleasure. So the strong classical conditioning stories about fetishes and fears sound silly and extreme and they probably are but at the same time classical conditioning can be used at least to shape the focus of our desires

and of our interests. Final thought actually is--Oh, yeah. Okay. So, what do we think about classical conditioning? We talked about what habituation is for. What's classical conditioning for? Well, the traditional view is it's not for anything. It's just association. So, what happens is the UCS and the CS, the bell and the food, go together because they happen at the same time. And so classical conditioning should be the strongest when these two are simultaneous and the response to one is the same as the response to the other. This is actually no longer the mainstream view. The

mainstream view is now a little bit more interesting. It's that what happens in classical conditioning is preparation. What happens is you become sensitive to a cue that an event is about to happen and that allows you to prepare for the event. This makes certain predictions. It predicts that the best timing is when the conditioned stimulus, which is the signal, comes before the unconditioned stimulus, which is what you have to prepare for. And it says the conditioned response may be different from the unconditioned response. So, move away from food. Imagine a child who's being beaten by

his father. And when his father raises his hand he flinches. Well, that's classical conditioning. What happened in that case is he has learned that the raising of a hand is a signal that he is about to be hit and so he responds to that signal. His flinch is not the same response that one would give if one's hit. If you're hit, you don't flinch. If you're hit, you might feel pain or bounce back or something. Flinching is preparation for being hit. And, in general, the idea of what goes on in classical conditioning is that the

response is sort of a preparation. The conditioned response is a preparation for the unconditioned stimulus. Classical conditioning shows up all over the place. As a final exercise, and I had to think about it--Has anybody here seen the movie "A Clockwork Orange"? A lot of you. It's kind of a shocking movie and unpleasant and very violent but at its core one of the main themes is right out of Intro Psych. It's classical conditioning. And the main character, who is a violent murderer and rapist, is brought in by some psychologists for some therapy. And the therapy he gets

is classical conditioning. In particular, what happens is he is given a drug that makes him violently ill, extremely nauseous. And then his eyes are propped open and he's shown scenes of violence. As a result of this sort of conditioning, he then – when he experiences real world violence – he responds with nausea and shock; basically, training him to get away from these acts of violence. In this example--Take a moment. Don't say it aloud. Just take a moment. What's the unconditioned stimulus? Okay. Anybody, what's the unconditioned stimulus? Somebody just say it. The drug. What's the unconditioned

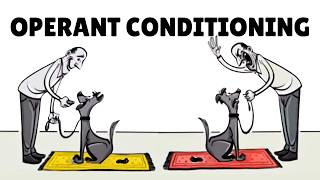

response? Nausea. What's the conditioned stimulus? Violence. What's the conditioned response? Perfect. The third and final type of learning is known as operant conditioning or instrumental conditioning. And this is the thing, this is the theory championed and developed most extensively by Skinner. What this is is learning the relationships between what you do and how successful or unsuccessful it is, learning what works and what doesn't. It's important. This is very different from classical conditioning and one way to see how this is different is that for classical conditioning you don't do anything. You could literally be strapped down and

be immobile and still pick up these connections; you make them in your mind. Instrumental conditioning is voluntary. You choose to do things, and by dint of your choices, some behaviors become more learned than others. So, the idea itself was developed in the nicest form by Thorndike, who explored how animals learn. Remember behaviorists were entirely comfortable studying animals and drawing extrapolations to other animals and to humans. So, he would put a cat in a puzzle box. And the trick to a puzzle box is there's a simple way to get out but you have to

kind of pull on something, some special lever, to make it pop open. And Thorndike noted that cats do not solve this problem through insight. They don't sit in the box for a while and mull it over and then figure out how to do it. Instead, what they do is they bounce all around doing different things and gradually get better and better at it. So, what they do is, the first time they might scratch at the bars, push at the ceiling, dig at the floor, howl, etc., etc. And one of their behaviors is pressing the lever.
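To make the trial-and-error idea concrete, here is a toy Python simulation of a cat in a puzzle box. It is a sketch under stated assumptions, not Thorndike's actual procedure: the five behaviors, their equal starting strengths, the `reward` increment for a lever press, and the `decay` factor applied to unrewarded behaviors are all hypothetical choices made for illustration. On each trial the simulated cat emits behaviors at random, in proportion to their current strength, until it happens to press the lever; escaping strengthens the lever press, and each unrewarded behavior is weakened a little.

```python
import random
from dataclasses import dataclass, field

# Hypothetical behaviors a cat might try in the puzzle box; only the lever opens it.
BEHAVIORS = ["scratch bars", "push ceiling", "dig floor", "howl", "press lever"]
ESCAPE = "press lever"


@dataclass
class PuzzleBoxRun:
    # Number of behaviors emitted before escaping, one entry per trial.
    attempts: list = field(default_factory=list)
    # Probability of pressing the lever before each trial, plus one final value.
    lever_probability: list = field(default_factory=list)


def simulate(trials: int = 30, reward: float = 1.0, decay: float = 0.9, seed: int = 0) -> PuzzleBoxRun:
    """Toy law-of-effect model: a rewarded behavior strengthens, unrewarded ones weaken."""
    rng = random.Random(seed)
    # Assumption: every behavior starts out equally likely.
    strength = {b: 1.0 for b in BEHAVIORS}
    run = PuzzleBoxRun()

    def lever_p() -> float:
        return strength[ESCAPE] / sum(strength.values())

    for _ in range(trials):
        run.lever_probability.append(lever_p())
        count = 0
        while True:
            # Emit one behavior at random, weighted by its current strength.
            behavior = rng.choices(BEHAVIORS, weights=[strength[b] for b in BEHAVIORS])[0]
            count += 1
            if behavior == ESCAPE:
                strength[ESCAPE] += reward  # getting out is the reward: strengthen the lever press
                break
            strength[behavior] *= decay  # no reward: this behavior becomes a bit less likely
        run.attempts.append(count)
    run.lever_probability.append(lever_p())
    return run


run = simulate()
print("attempts per trial:", run.attempts)
print(f"lever probability: {run.lever_probability[0]:.2f} -> {run.lever_probability[-1]:.2f}")
```

Because the lever press is the only way out, it is rewarded on every trial, so its probability rises from one trial to the next. With these made-up parameters the number of wasted behaviors per trial tends to shrink, though any single run is noisy.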

The lever gets them out of the box, but after more and more trials they stop scratching at the bars, pushing at the ceiling and so on. They just press the lever. And if you graph it, they gradually get better and better. They throw out all of these behaviors randomly. Some of them get reinforced and those are the ones that survive and others don't get reinforced and those are the ones that go extinct. And it might occur to some of you that this is analogous to the Darwinian theory of natural selection, where there's

a random assortment of mutations, and natural selection gives rise to a host of organisms, some of which survive and are fit and some of which aren't. And in fact, Skinner explicitly made the analogy from the natural selection of species to the natural selection of behavior. So this could be summarized as the law of effect: the tendency to perform an action is increased if the action is rewarded and weakened if it's not. And Skinner extended this more generally. So, to illustrate Skinnerian theory in operant conditioning I'll give an example of training a pig. So here is the idea.

You need to train a pig and you need to do so through operant conditioning. So one of the things you want to do is--The pig is going to do some things you like and some things you don't like. And so what you want to do, basically drawing upon the law of effect, is reinforce the pig for doing good things. Suppose you want the pig to walk forward. So, you reinforce the pig for walking forward and you punish the pig for walking backward. And if you do that over the fullness of time, your reinforcement and punishment

will give rise to a pig who walks forward. There's two--One technical distinction that people love to put on Intro Psych exams is the difference between positive reinforcement and negative reinforcement. Reinforcement is something that makes the behavior increase. Negative reinforcement is very different from punishment. Negative reinforcement is just a type of reward. The difference is that in positive reinforcement you give something rewarding; in negative reinforcement you take away something aversive. So, imagine the pig has a heavy collar and to reward the pig for walking forward you might remove the heavy collar. So, these are the basic techniques

to train an animal. But it's kind of silly because suppose you want your pig to dance. You don't just want your pig to walk forward. You want your pig to dance. Well, you can't adopt the policy of "I'm going to wait for this pig to dance and when it does I'm going to reinforce it" because it's going to take you a very long time. Similarly, if you're dealing with immature humans and you want your child to get you a beer, you can't just sit, wait for the kid to give you a beer and uncap the

bottle and say, "Excellent. Good. Hugs." You've got to work your way to it. And the act of working your way to it is known as shaping. So, here is how to get a pig to dance. You wait for the pig to do something that's halfway close to dancing, like stumbling, and you reward it. Then it does something else that's even closer to dancing and you reward it. And you keep rewarding it as it gets closer and closer. Here's how to get your child to bring you some beer. You say, "Johnny, could you go to the

kitchen and get me some beer?" And he walks to the kitchen and then he forgets why he's there and you run out there. "You're such a good kid. Congratulations. Hugs." And then you get him to--and then finally you get him to also open up the refrigerator and get the beer, open the door, get the--and in that way you can train creatures to do complicated things. Skinner had many examples of this. Skinner developed, in World War II, a pigeon-guided missile. It was never actually used but it was a great idea. And people, in fact--The history

of the military in the United States and other countries includes a lot of attempts to get animals like pigeons or dolphins to do interesting and deadly things through various training. More recreationally, Skinner was fond of teaching animals to play Ping-Pong. And again, you don't teach an animal to play Ping-Pong by waiting for it to play Ping-Pong and then rewarding it. Rather, you reward approximations to it. And basically, there are primary reinforcers. There are some things pigs naturally like, food for instance. There are some things pigs actually automatically don't like, like being hit or shocked. But

in the real world when dealing with humans, but even when dealing with animals, we don't actually always use primary reinforcers or punishers. What we often use are things like--for my dog, saying, "Good dog." Now, saying "Good dog" is not something your dog has been built, pre-wired, to find pleasurable. But what happens is you can do a two-step process. You can make "Good dog" positive through classical conditioning. You give the dog a treat and say, "Good dog." Now the phrase "good dog" will carry the rewarding quality. And you could use that rewarding quality in order

to train it. And in this way behaviorists have developed token economies where they get nonhuman animals to do interesting things for seemingly arbitrary rewards like poker chips. And in this way you can increase the utility and ease of training. Finally, in the examples we're giving, whenever the pig does something you like you reinforce it. But that's not how real life works. Real life for both humans and animals involves cases where the reinforcement doesn't happen all the time but actually happens according to different schedules. And so, there is the distinction between fixed versus

variable schedules and ratio versus interval schedules. And this is something you could print out to look at. I don't need to go over it in detail. A ratio schedule gives a reward after a certain number of responses. So, if every tenth time your dog brought you the newspaper you gave it hugs and treats, that's ratio. An interval schedule is based on time: the reward comes for the first response after a certain period has passed. So, if your dog gets a treat for the first dance it does after an hour has gone by, that would be an interval thing. And fixed versus variable speaks to whether you give a

reward on a fixed schedule, every fifth time, or variable, sometimes on the third time, sometimes on the seventh time, and so on. And these are--There are examples here and there's no need to go over them. It's easy enough to think of examples in real life. So, for example, a slot machine is variable ratio. It goes off after it's been hit a certain number of times. It doesn't matter how long it takes you for--to do it. It's the number of times you pull it down. But it's variable because it doesn't always go off on the thousandth

time. You don't know. It's unpredictable. The slot machine is a good example of a phenomena known as the partial reinforcement effect. And this is kind of neat. It makes sense when you hear it but it's the sort of finding that's been validated over and over again with animals and nonhumans. Here's the idea. Suppose you want to train somebody to do something and you want the training such that they'll keep on doing it even if you're not training them anymore, which is typically what you want. If you want that, the trick is don't reinforce it all

the time. Behaviors last longer if they're reinforced intermittently and this is known as "the partial reinforcement effect." Thinking of this psychologically, it's as if whenever you put something in a slot machine it gave you money, then all of a sudden it stopped. You keep on doing it a few times but then you say, "Fine. It doesn't work," but what if it gave you money one out of every hundred times? Now you keep on trying and because the reinforcement is intermittent you don't expect it as much and so your behavior will persist across often a huge

amount of time. Here's a good example. What's the very worst thing to do when your kid cries to go into bed with you and you don't want him to go into bed with you? Well, one--The worst thing to do is for any--Actually, for any form of discipline with a kid is to say, "No, absolutely not. No, no, no, no." "Okay." And then later on the kid's going to say, "I want to do it again" and you say no and the kid keeps asking because you've put it, well, put it as in a psychological way, not

the way the behaviorists would put it. The kid knows okay, he's not going to get it right away, he's going to keep on asking. And so typically, what you're doing inadvertently in those situations is you're exploiting the partial reinforcement effect. If I want my kid to do something, I should say yes one out of every ten times. Unfortunately, that's the evolution of nagging. Because you nag, you nag, you nag, the person says, "Fine, okay," and that reinforces it. If Skinner kept the focus on rats and pigeons and dogs, he would not have the impact that

he did but he argued that you could extend all of these notions to humans and to human behavior. So for an example, he argued that the prison system needs to be reformed because instead of focusing on notions of justice and retribution what we should do is focus instead on questions of reinforcing good behaviors and punishing bad ones. He argued for the notions of operant conditioning to be extended to everyday life and argued that people's lives would become fuller and more satisfying if they were controlled in a properly behaviorist way. Any questions about behaviorism? What are

your questions about behaviorism? [laughter] Yes. Student: [inaudible]--wouldn't there be extinction after a while? [inaudible] Professor Paul Bloom: Good question. The discussion was over using things like poker chips for reinforcement and the point is exactly right. Since the connection with the poker chips is established through classical conditioning, sooner or later by that logic the poker chips would lose their power to serve as reinforcers. You'd have to sort of start it up again, retrain again. If you have a dog and you say "Good dog" to reward the dog, by your logic, which is right, at some point

you might as well give the dog a treat along with the "Good dog." Otherwise, "Good dog" is not going to cut it anymore. Yes. Student: [inaudible] Professor Paul Bloom: As far as I know, Skinner and Skinnerian psychologists were never directly involved in the creation of prisons. On the other hand, the psychological theory of behaviorism has had a huge impact and I think a lot of people's ways of thinking about criminal justice and criminal law has been shaped by behaviorist principles. So for instance, institutions like mental institutions and some prisons have installed token economies where there's

rewards for good behavior, often poker chips of a sort. And then you could cash them in for other things. And, to some extent, these have been shaped by an adherence to behaviorist principles. Okay. So, here are the three general positions of behaviorism. (1) That there is no innate knowledge. All you need is learning. (2) That you could explain human psychology without mental notions like desires and goals. (3) And that these mechanisms apply across all domains and across all species. I think it's fair to say that right now just about everybody agrees all of these three

claims are mistaken. First, we know that it's not true that everything is learned. There is considerable evidence for different forms of innate knowledge and innate desires and we'll look--and we'll talk about it in detail when we look at case studies like language learning, the development of sexual preference, the developing understanding of material objects. There's a lot of debate over how much is innate and what the character of the built-in mental systems are but there's nobody who doubts nowadays that a considerable amount for humans and other animals is built-in. Is it true that talking about mental

states is unscientific? Nobody believes this anymore either. Science, particularly more advanced sciences like physics or chemistry, are all about unobservables. They're all about things you can't see. And it makes sense to explain complex and intelligent behavior in terms of internal mechanisms and internal representations. Once again, the computer revolution has served as an illustrative case study. If you have a computer that plays chess and you want to explain how the computer plays chess, it's impossible to do so without talking about the programs and mechanisms inside the computer. Is it true that animals need reinforcement and punishment

to learn? No, and there's several demonstrations at the time of Skinner suggesting that they don't. This is from a classic study by Tolman where rats were taught to run a maze. And what they found was the rats did fine. They learn to run a maze faster and faster when they're regularly rewarded but they also learn to run a maze faster and faster if they are not rewarded at all. So the reward helps, but the reward is in no sense necessary. And here's a more sophisticated illustration of the same point. Professor Paul Bloom: And this is

the sort of finding, an old finding from before most of you were born, that was a huge embarrassment for the Skinnerian theory, as it suggests that rats in fact had mental maps, an internal mechanism that they used to understand the world – entirely contrary to the behaviorist idea everything could be explained in terms of reinforcement and punishment. Finally, is it true that there's no animal-specific constraints for learning? And again, the answer seems to be "no." Animals, for instance, have natural responses. So, you could train a pigeon to peck for food but that's because pecking for

food is a very natural response. It's very difficult to train it to peck to escape a situation. You can train it to flap its wings to escape a situation but it's very difficult to get it to flap its wings for food. And the idea is they have sort of natural responses that these learning situations might exploit and might channel, but essentially, they do have certain natural ways of acting towards the world. We know that not all stimuli and responses are created equal. So, the Gray textbook has a very nice discussion of the Garcia effect. And

the Garcia effect goes like this. Does anybody here have any food aversions? I don't mean foods you don't like. I mean foods that really make you sick. Often food aversions in humans and other animals can be formed through a form of association. What happens is suppose you have the flu and you get very nauseous and then at the same point you eat some sashimi for the first time. The connection between being nauseous and eating a new food is very potent. And even if you know intellectually full well that the sashimi isn't why you became nauseous,

still you'll develop an aversion to this new food. When I was younger – when I was a teenager – I drank this Greek liqueur, ouzo, with beer. I didn't have the flu at the time but I became violently ill. And as a result I cannot abide the smell of that Greek liqueur. Now, thank God it didn't develop into an aversion to beer but-- [laughter] Small miracles. But the smell is very distinctive and for me--was new to me. And so, through the Garcia effect I developed a strong aversion. What's interesting though is the aversion is special

so if you take an animal and you give it a new food and then you give it a drug to make it nauseous it will avoid that food. But if you take an animal and you give it a new food and then you shock it very painfully it won't avoid the new food. And the idea is that a connection between what something tastes and getting sick is natural. We are hard wired to say, "Look. If I'm going to eat a new food and I'm going to get nauseous, I'm going to avoid that food." The Garcia

effect is that this is special to taste and nausea. It doesn't extend more generally. Finally, I talked about phobias and I'll return to phobias later on in this course. But the claim that people have formed their phobias through classical conditioning is almost always wrong. Instead, it turns out that there are certain phobias that we're specially evolved to have. So, both humans and chimpanzees, for instance, are particularly prone to develop fears of snakes. And when we talk about the emotions later on in the course we'll talk about this in more detail. But what seems likely is

the sort of phobias you're likely to have does not have much to do with your personal history but rather it has a lot to do with your evolutionary history. Finally, the other reading you're going to do for this part--section of the course is Chomsky's classic article, his "Review of Verbal Behavior." Chomsky is one of the most prominent intellectuals alive. He's still a professor at MIT, still publishes on language and thought, among other matters. And the excerpt you're going to read is from his "Review of Verbal Behavior." And this is one of the most influential intellectual

documents ever written in psychology because it took the entire discipline of behaviorism and, more than everything else, more than any other event, could be said to have destroyed it or ended it as a dominant intellectual endeavor. And Chomsky's argument is complicated and interesting, but the main sort of argument he had to make is--goes like this. When it comes to humans, the notions of reward and punishment and so on that Skinner tried to extend to humans are so vague it's not science anymore. And remember the discussion we had with regard to Freud. What Skinner--What Chomsky is

raising here is the concern of unfalsifiablity. So, here's the sort of example he would discuss. Skinner, in his book Verbal Behavior, talks about the question of why do we do things like talk to ourselves, imitate sounds, create art, give bad news to an enemy, fantasize about pleasant situations? And Skinner says that they all involve reinforcement; those are all reinforced behaviors. But Skinner doesn't literally mean that when we talk to ourselves somebody gives us food pellets. He doesn't literally mean even that when we talk to ourselves somebody pats us on the head and says, "Good man.

Perfect. I'm very proud." What he means, for instance, in this case is well, talking to yourself is self-reinforcing or giving bad news to an enemy is reinforcing because it makes your enemy feel bad. Well, Chomsky says the problem is not that that's wrong. That's all true. It's just so vague as to be useless. Skinner isn't saying anything more. To say giving bad news to an enemy is reinforcing because it makes the enemy feel bad doesn't say anything different from giving bad news to an enemy feels good because we like to give bad news to an

enemy. It's just putting it in more scientific terms. More generally, Chomsky suggests that the law of effect when applied to humans is either trivially true, trivially or uninterestingly true, or scientifically robust and obviously false. So, if you want to expand the notion of reward or reinforcement to anything, then it's true. So why did you come--those of you who are not freshmen--Oh, you--Why did you come? All of you, why did you come to Yale for a second semester? "Well, I repeated my action because the first semester was rewarding." Okay. What do you mean by that? Well,

you don't literally mean that somebody rewarded you, gave you pellets and stuff. What you mean is you chose to come there for the second semester. And there's nothing wrong with saying that but we shouldn't confuse it with science. And more generally, the problem is you can talk about what other people do in terms of reinforcement and punishment and operant conditioning and classical conditioning. But in order to do so, you have to use terms like "punishment" and "reward" and "reinforcement" in such a vague way that in the end you're not saying anything scientific. So, behaviorism as

a dominant intellectual field has faded, but it still leaves behind an important legacy and it still stands as one of the major contributions of twentieth century psychology. For one thing, it has given us a richer understanding of certain learning mechanisms, particularly with regard to nonhumans. Mechanisms like habituation, classical conditioning and operant conditioning are real; they can be scientifically studied; and they play an important role in the lives of animals and probably an important role in human lives as well. They just don't explain everything. Finally, and this is something I'm going to return to on Wednesday

actually, behaviorists have provided powerful techniques for training particularly for nonverbal creatures so this extends to animal trainers. But it also extends to people who want to teach young children and babies and also want to help populations like the severely autistic or the severely retarded. Many of these behaviorist techniques have proven to be quite useful. And in that regard, as well as in other regards, it stands as an important contribution.
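The lecture leaves the four reinforcement schedules (fixed ratio, variable ratio, fixed interval, variable interval) to a printout. As an illustration only, not part of the course materials, the following Python sketch decides which responses in a session would be rewarded under each schedule. The function name `reinforced_responses`, its parameters, and the choice to draw variable requirements uniformly around their average are assumptions made for this example. For interval schedules it follows the common textbook convention that the reward goes to the first response made after the interval has elapsed, which is a bit more specific than the dancing-dog example in the lecture.

```python
import random


def reinforced_responses(schedule, response_times, n=5, t=60.0, seed=None):
    """Return the indices of the responses that earn a reward (illustrative sketch).

    schedule       -- "FR", "VR", "FI" or "VI" (fixed/variable ratio/interval)
    response_times -- sorted times (seconds) at which the animal responds;
                      the session is assumed to start at time 0
    n              -- responses required (FR) or average requirement (VR)
    t              -- seconds to wait (FI) or average wait (VI)
    seed           -- optional seed so the variable schedules are repeatable
    """
    rng = random.Random(seed)
    rewarded = []

    if schedule in ("FR", "VR"):
        # Ratio schedules count responses; elapsed time is irrelevant.
        def requirement():
            # Assumption: VR draws a requirement uniformly from 1..2n-1 (mean n).
            return n if schedule == "FR" else rng.randint(1, 2 * n - 1)

        needed, count = requirement(), 0
        for i, _ in enumerate(response_times):
            count += 1
            if count >= needed:
                rewarded.append(i)
                needed, count = requirement(), 0
    elif schedule in ("FI", "VI"):
        # Interval schedules reward the first response made after the required
        # time has passed since the last reward (or since the session start).
        def wait():
            # Assumption: VI draws a wait uniformly from [0, 2t] (mean t).
            return t if schedule == "FI" else rng.uniform(0, 2 * t)

        needed, last = wait(), 0.0
        for i, time in enumerate(response_times):
            if time - last >= needed:
                rewarded.append(i)
                needed, last = wait(), time
    else:
        raise ValueError(f"unknown schedule: {schedule!r}")
    return rewarded
```

For instance, the slot machine from the lecture corresponds roughly to `reinforced_responses("VR", range(1000), n=100)`: payouts arrive after an unpredictable number of pulls, averaging one in a hundred, no matter how quickly you pull. The sketch only decides when rewards are delivered; it does not model extinction, so on its own it says nothing about the partial reinforcement effect.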
