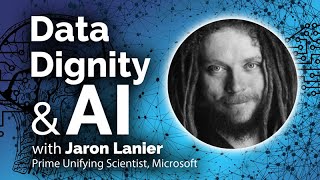

Jaron Lanier Looks into AI's Future | AI IRL

270.34k views · 4559 words

Bloomberg Originals

Jaron Lanier is among the original Silicon Valley utopians. This ultimate insider gives an unfiltere...

Video Transcript:

You feel relaxed? Yeah. You got to be like...

Jaron Lanier is among the OG Silicon Valley utopians who had grand visions of what the internet could be long before we had PCs, cell phones, or social media. He was the pioneer of virtual reality in the '80s, sold multiple companies to Google, Adobe, and Oracle, and now works at Microsoft. Over the years he's become a fierce critic of the industry he helped build.

"Because we're used to this idea that the internet should be mysterious. We don't know where things came from. But that's a really bad way of thinking about AI."

Yet he chooses to remain a part of it. So on this episode of AI IRL, Lanier, the ultimate insider, gives us his unfiltered look into the dark side of technology and what that means for AI's future. [Music]

Jaron Lanier, welcome to the show. Now, you are the Prime Unifying Scientist at Microsoft, although here speaking very much in a personal capacity. I am fascinated by the idea of being a prime unifying scientist. How does that work exactly?

The title is humorous. I report to the chief technology officer. The Office of the Chief Technology Officer combined with Prime Unifying Scientist spells OCTOPUS, and I have come to resemble one, or so my students tell me. I'm also very interested in their neurology; they have amazing nervous systems. So we thought it would be an appropriate title.

I wholeheartedly agree. And we have a laundry list of things to talk to you about, because you have a fascinating career that spans so many decades. I think it might be useful just to set the scene here. Forty years ago you were pioneering technologies at companies like Atari, and I'd love you to take us back to how you felt about the trajectory of the industry back then and how today compares with what you expected.

That is an interesting question. I don't think I've been quite asked that before. It presumes I remember those days.

Pretend we're in a time machine.

Well, starting in the late '70s and into the early '80s, I had a mentor named Marvin Minsky, for whom I worked as a young researcher, a teenager, and he was the principal author of the way we think about AI these days. A lot of the little tropes and stories and concerns, just the way it's thought about, come from Marvin. I hated it. I always thought, this is a terrible idea. AI is just a way for people to work together. It's just people in masks. We're just confusing things. Why are we trying to build this entity, this artificial god or something? Marvin loved arguing with me, because all of his other employees in the lab were totally in agreement with him. So the last time I saw him before he died, he said, "Jaron, can we have the argument?" And it was so great to be able to have it one last time. So I always thought, no, no, no, this whole idea is wrong, and that got me into this other way of thinking, which was supposed to represent the other thing, and I called it virtual reality. And so I had the first startup in virtual reality. I named it, and we ended up doing the first head-supported virtual reality headset.

Were you optimistic about where things were going?

I am even more optimistic now, but I think to be an optimist you have to have the courage to be a fearsome critic. It's the critic who believes things can be better. The critic is the true optimist, even if they don't like to admit it, even if they don't want to feel soft and squishy. The critic is the one who says this can be better, and that means optimism. It's the acquiescent people who say, "No, this is it." Those people are kind of useless. So I was critical then; I used to fight with Marvin about the idea of AI. Since then I have been very critical of the way we did social media. I have been very critical of a lot of things, and I remain so. It is the true face of optimism.

Let's talk about disinformation, its links to advertising, and its embedded nature in social media. Generative AI is disrupting a lot of things, but it still seems like advertising is inextricably linked to how companies will be able to monetize some of that technology. What are your thoughts on that?

Well, look, I'm not speaking for Microsoft, but I want to say to anybody who says, "Oh, I wish I didn't have to pay a monthly fee to use the highest-quality AI; I'll just use the free one": that fee is your freedom fee. It means that the mechanism that's paying for it is not the manipulation of you. There are really only two choices. If we're in a market economy, there has to be a customer, so either the customer is you or it's somebody who wants to manipulate you. Those are the choices. So we have to get to the point where we accept that to be participants in a market economy, we have to be full participants in a market economy, or else we subjugate ourselves to those who are. So we can't just expect things to be free. Yeah, I mean, I wish we could. I don't like spending money any more than anybody else, but the thing is, in a market economy, if you're not willing to pay for what you get, it means somebody else is getting some benefit from manipulating you. It's just logic. It's inescapable.

We're cruising somewhere near the realms of open source here, and there is some debate in AI about whether the push to open-source certain models could potentially exacerbate the risk of disinformation. I'd love to get your views on that.

Well, look, this is a somewhat complex topic, and it's a topic in which my opinions sometimes elicit unfriendly responses from my peers, but let me try to explain my thought on it. I think the open-source idea comes from a really good place. I think that people who believe in it believe that it makes things more open and democratic and honest and safe and all that. The problem with it is the mathematics of network effects. So let's say you have a bunch of people that share stuff for free. It might be music, it might be computer code, it could be all kinds of things, and you're saying, "Oh, we're being very communitarian here. We're sharing, share-alike. It's a giant barter system." The thing is that the exchange of all that free stuff is going to tend towards monopoly because of mathematics, and so then it'll become the greater glory of something like a Google or whatever it is. So instead of decentralization, you end up with hypercentralization, and then that hub is incentivized to keep certain things very secret and proprietary, like its algorithms or the derived data of how things correlate, which is actually very expensive to generate. And so I think this idea that opening things leads to decentralization is just mathematically false, and we have endless examples to demonstrate that. And yet it's still a widespread belief, because it feels so good to believe it. But it's just this thing that didn't work.

But I think part of the argument seems to be that there is this idea that AI systems, and in particular the large language models, are a black box, and we can't see how they're working, and therefore if we have an open-source model we can at least see how it's working.

Right. Okay, so there's a slightly different idea of opening up that I think would be to everyone's benefit, including the proprietors of the large models, the users, society at large, everybody. And that is, instead of releasing the code, which just tends to support these emergent monopolies (which is why some of them support doing that), what we should do is have provenance. So let me give you an example. Let's say you have an interaction with a chatbot, and the chatbot gets all weird with you. It says, "Oh, I love you. You need a divorce. We should be together." How do we prevent it? Well, if you say you want the AI to govern the AI, and you have this one AI looking at the first AI and saying, "Don't be all weird. Stop that, stop that, stop that," it gets hard. And the reason it gets hard is because of the limitations of language itself. This is an interesting problem that humanity has explored for many thousands of years. Think of the ancient stories of the little lamp with the genie: you rub the lamp, the genie comes out and grants you wishes, and if you're unlucky it's a sneaky genie, and no matter what you say, the words get twisted in the wrong way. This is exactly the problem with AI governing AI: words can always be twisted. Words are actually more ambiguous than we think they are. We go through life thinking words are precise, but they're not, which is actually the reason why large models work: they're a statistical thing in the first place, so they work very well with our statistical calculations.

But there's another way to do it that does work. Anytime you have an output from an AI, of course in some small way it relies on millions or billions of examples from humanity at large: billions of photos, billions of examples of text. But in any particular output, there'll be just a few, a small number, maybe a dozen. My hand is doing this because this is a little hump of statistical... yes, it's a distribution. I'm doing a little mathy thing here. All right, so you can track who were the most important people who input stuff that was relevant to your thing. So let's say it gets all weird, and then you say to the bot, "Why did you say that?" and the bot says, "Oh, well, I drew some things from some sexy fan fiction and from some soap operas." And then you say, "You know what? Don't use that stuff." Basically, it's knowing where the sources are that were mashed up to create the AI result. You see, the magic of AI as we know it is that it can take examples from people and combine them in coherent ways. You can have a recognizer for cats and a recognizer for hot air balloons and say, "Put a cat in a hot air balloon," and in order to satisfy both recognizers it'll randomly arrive at something that does both, and all of a sudden you have a cat in a hot air balloon. It's fantastic. But you can always go back. It's just that we don't, because we're used to this idea that the internet should be mysterious. We don't know where things came from. But that's a really bad way of thinking about AI.

And then, since you're a business-oriented channel, there's a lovely thing that happens: as soon as you can attribute who contributed to an AI result, then, A, you can incentivize people to put in new data that makes the AI better, and B, you can start to compensate people. Instead of saying, "Oh, we're just going to displace all these workers, and they're going to have to go on universal basic income," which sets a trajectory for what I think is a bad outcome. Whenever you have everything concentrated in some single-payer society, it becomes a temptation. You start with Bolsheviks, you end up with Stalinists, because the worst people want to control that central thing.

Let's talk about this, because you call it data dignity, which is the notion that if I'm putting data out there, I should be compensated, especially if it's being used to train algorithms. How does that work in practice?

Well, it doesn't right now. In order to do it, we have to calculate and present the provenance of which human sources were the most important to a given AI output, and we don't currently do that. We can, though. We can do it efficiently and effectively; it's just that we're not yet. It has to be a societal decision to shift to doing that. And then there are a lot of little psychodramas where people will say, "Well, I don't know if I'd want to get paid. I like just being out there," or whatever. I think there's a lot of room to adjust how this will work in detail, but the point is, I don't want to create more people who are just dependent on state payouts to survive. I want to create more, if you like, creative classes of people who are really good at providing fresh data that makes the models work better, so everybody benefits.

Back in the '90s you talked about how chatbots could at some point impact democracy and elections, and that was probably 30-odd years ago.

The good days. I was writing about this much earlier. In those days, instead of chatbots, we called them agents, which sounds scarier. The Matrix movies used the term to make these AI guys that look like secret agents, pretty effectively actually; they were pretty scary. But in the early days we called them agents: "There'll be these agents that'll help you in life." And I was like, no, they're going to be corrupted. It's going to be stupid. It doesn't make any sense. It's the wrong way to do it. This has always been apparent.

How do you feel about the chatbots that we have now as they relate to the risk to democracy?

We're going to find out very soon. I'm scared. I'm scared because the last round of social media getting into politics left a lot of people with various forms of fear and concern that have actually had the result of reducing certain levels of controls and corrections within the big tech companies. So in a sense we might be worse off. But in another sense, I feel the tech culture, or just the collective sensibility of the engineers who actually do everything, has matured a lot on the whole.

Do you trust the tech leaders behind the artificial intelligence models out there right now?

Well, I know most of them, and perhaps that might bias me, but I think we're in a pretty lucky place on that.

Do you think they learned from their mistakes, essentially?

I hope so. I mean, we haven't really had mistakes to learn from yet.

I mean, what about social media?

Well, no, social media is a giant one, but that precedes the big models. I mean, it is an AI. You can think of social media as being halfway to big models, in that its algorithms (I don't like the term "AI") direct people around social media, so you can think of it as an AI-driven thing. And that did not work out well for society on the whole. It works out really well for some people; it's a very complicated story.

But a lot of these companies are the same companies that have collectively made the mistakes, and we as users have either allowed those to happen or pushed back. And now it is a lot of those same companies or engineers or people that are making these new things. So I wonder, what have they learned? They had a chance...

What I think they should learn? Okay, so look, if you look at the business models of the tech titans, there are really two in the US that are true titans and that are dependent on the advertising business model, and that would be Meta and Alphabet, Google. I just want to point out that the tech titans that are not dependent on it (we do some of it, but we're not dependent on it) would be companies like Apple, Microsoft, Amazon. You can complain about us, you can complain about these other companies, and I hope you do. I think we should be accountable, so do it. But they don't spew the same kind of weird, creepy darkness into the world. But also, their market caps are smaller. The thing I want to say is that Meta and Google are undervalued because they have the wrong business model. They do a lot; they should actually be more successful. I believe this is a stupid business model, and an authoritarian-run platform is going to overtake us, which is exactly what's happening with TikTok. So why are we doing it? It works, kind of, but not maximally, in my view. So why are we doing it? It's just habit. It's momentum. It's the hassle of changing. But we've got to...

Well, one of the big issues that comes up in terms of everything from disinformation, democracy, social media, and AI is deepfakes, and it is very hard to argue that this hasn't got the potential to get much more serious as a result of AI and what we've seen with generative models over the last couple of years in particular. I'd love to get your view on whether there is a way we could prevent this from becoming the next problem.

Can I mention a small mea culpa on that? Way back in the '90s, some friends and I had a little machine vision startup company, and I think we made the first deepfakes.

So you're to blame.

I'm absolutely to blame. Yeah. And we actually used the very first deepfake system to block scenes of Minority Report. I actually brought that into the script, and that was based on a real early prototype of a deepfake. But okay, the answer to deepfakes is provenance, like what I was saying before. If you know where data came from, you no longer worry about deepfakes, because you can ask, "Where did this come from?" The provenance system has to be robust and not fakeable, but it should say, "Oh, yeah, well, this is a combination: this was somebody from Chinese military intelligence who combined this thing and that thing and that thing." And then, okay, great, get rid of that. Provenance is the only way to combat fraud. I actually think what should happen is that regulators should be involved. All of us, Microsoft, OpenAI, everybody, go in front of regulators all over the world and say, "Regulate us. This is important." So the question is how. If regulation means the genie approach, where you say we're going to have an AI judge whether the first AI was good or not, it becomes an infinite regress. If you say it's based on data provenance, all of a sudden you have an action. You're not using terms you can't define anymore. Everybody says AI has to be aligned with human interests, but what does that mean? It's a very squirrelly word. I worked on privacy for a long time. I helped start the thing that turned into the privacy framework in Europe, the GDPR, and I've got to say I'm still not sure we know what privacy is in the context of the internet. It's a very tricky concept.

So then that's where lawmakers come in and say, "This is how we should be regulating you." But my question to you is: do you think politicians are too scared to actually challenge technology?

This is a really good question. I feel like there was a thing that we in tech culture did for too long, where we'd go to Congress, or a parliament somewhere in a different country, and we'd browbeat the people there. We'd say, "Oh, you senators and congressmen, you don't understand tech. You're idiots. We're the smart ones. You can't say a thing." This always happened; it was this constant "You wouldn't believe what an idiot that senator was." This went on for years and years, and I think we browbeat them to the point where they became timid in a way that actually hurts us. And now everybody in AI of any scale is going and saying, "Actually, we kind of do want to be regulated. This is a place where regulation makes sense."

When does that relationship between big tech leaders and lawmakers become too cozy?

It's cozy already? I don't know.

I mean, that panel, that hearing with Sam Altman and people at the... it was a Senate hearing, wasn't it? It felt cozy at times, but not all the time, and there was this general rhetoric of "please regulate us."

Yeah, yeah. I think we want to be regulated because everybody can see it, especially if you think of this as being the troubles of social media times a thousand. We want to be regulated. We don't want to mess up society; we depend on society for our business. It is a little ambiguous. There's a kind of libertarian streak in tech culture too, that all regulation must be suspect, but that's just clearly not so. Regulation is the layer upon which we can do free enterprise. Without it, we don't have the order within which we can function. Otherwise it's just a kind of survival of the fittest, which leads to natural evolution, but very slowly. Markets are fast and creative, and you don't get that without a stable layer created by regulation.

You've called Sam Altman a colleague and friend.

Yeah, sure, of course.

And I wonder, have you spoken to him recently about your concerns?

Oh yeah, all the time. I won't speak for Sam, certainly. I am very comfortable working with people with whom I don't have total agreement, but we have more agreement than you might think. Sam wants to do this thing of a universal eye-scan-based cryptocurrency coin to reward people once AI does all the jobs. Obviously that's not within my frame of recommendation. I don't think that's a good idea. I think some criminal organization will take that over no matter how robust he tries to make it. Look at crypto: crypto is mathematically perfect, and then at the edge it's all criminals and fraud and incompetence.

You know, I am of the belief that big tech companies have become so important to society that it's really useful to demonstrate that you can have free speech inside of them, and people still buy the products and buy the stock, and it's not some giant catastrophe. I've tried to create an existence proof of that, where I can say things that are not official Microsoft. Look, I spend all day working on making Microsoft stuff better, and I really am proud that people want to buy our stuff and want to buy our stock. I like it. I like our customers; I like working with them. I like the idea of making something that somebody likes enough to pay you money for it. That, to me, is the market economy. I like that economy, and I enjoy participating in it. What I'd like to do is persuade my friends at some of the other tech companies that a little bit of speech in their situations might actually be healthy, might be good for them. I think it would really improve the business performance of companies like Google and Meta. They're notoriously closed off. They don't have people who speak, and I think they suffer for it. You might not think so, because they're big, successful companies, but I really think they could do more.

What would make you walk away?

I don't know. Of course you can always come up with some scenario. I don't know what it would be.

But do you have a line in the sand?

It would have to be very much based on the circumstances of the moment and the trade-offs. I don't think you can really draw a line in something like this, and it's very personal. And by the way, it's not only me; there are four or five other people at Microsoft who have public careers where they speak their minds, and I think it's been a successful model. It's worked for us. Do I agree with absolutely everything that happens at Microsoft? Of course not. Listen, it's a big thing; it's as big as a country, and so of course there are all kinds of things. I don't think being some sort of pure perfectionist is very functional. I don't think it helps anybody, although here in the Bay Area there are many people who attempt to be that way. But I really think you have to always try to find the balance, and it's never perfect.

Jaron, this has been a fascinating conversation. Thank you for taking the time. It's been brilliant.

Thanks for having me.

Thank you. [Music]
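Lanier's provenance proposal (attribute each AI output to the small number of human contributions that mattered most, then compensate those contributors, which he calls data dignity) can be illustrated with a toy sketch. It does not describe how Microsoft or any production system works. It assumes, hypothetically, that every training example carries a contributor name and an embedding vector, and it uses plain cosine-similarity nearest neighbors as a stand-in for a real influence-estimation method. The function names `attribute` and `split_payment` and the sample data are invented for illustration.

```python
import math
from collections import defaultdict

# ASSUMPTION (hypothetical data model): each training example is a
# (contributor, embedding) pair. Cosine-similarity nearest neighbours stand in
# for a real influence-estimation method; this is a toy, not a production design.


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def attribute(output_vec, corpus, k=3):
    """Map each contributor to a share of credit for one output.

    Only the k most similar examples count, mirroring the idea that a single
    output leans on a small number of sources. Shares are proportional to
    positive similarity and sum to 1 (empty dict if nothing is similar).
    """
    top = sorted(
        ((cosine(output_vec, vec), who) for who, vec in corpus),
        reverse=True,
    )[:k]
    # Non-positive similarity is not treated as evidence of influence.
    top = [(score, who) for score, who in top if score > 0]
    total = sum(score for score, _ in top)
    shares = defaultdict(float)
    for score, who in top:
        shares[who] += score / total
    return dict(shares)


def split_payment(amount_cents, shares):
    """Split an integer payment by share; rounding leftovers go to the top contributor."""
    payout = {who: int(amount_cents * share) for who, share in shares.items()}
    if payout:
        top_contributor = max(shares, key=shares.get)
        payout[top_contributor] += amount_cents - sum(payout.values())
    return payout


# Toy usage: two "documents" from alice, one from bob, one from carol.
corpus = [
    ("alice", [1.0, 0.0]),
    ("bob", [0.9, 0.1]),
    ("carol", [0.0, 1.0]),
    ("alice", [0.8, 0.2]),
]
shares = attribute([1.0, 0.0], corpus, k=3)
payout = split_payment(100, shares)
print(shares)
print(payout)
```

Nearest-neighbor similarity is only a crude proxy for influence. The sketch is meant to show the shape of the pipeline Lanier describes (find the few most relevant sources, normalize their weights, pay them out), not a recommended attribution method.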