Okay, I'm a bit scared now...

94.24k views, 5,117 words

Theo - t3․gg

Thank you https://infinite.red for sponsoring today's video!

I was skeptical of the new OpenAI Chat...

Video Transcript:

Now let's see if it is smart enough to give us the right answer...

Oh boy, we got a new model. This one's interesting because it's the first time they've almost seemed proud of the fact that their model is slower. They even call out that it's "a new series of models designed to spend more time thinking before they respond." I was considering not talking about this one, but then I saw this tweet: "I can't get over the fact that OpenAI just shipped a PhD in your pocket and the rest of the world is walking around like nothing happened." I don't know how [ __ ] this is, but I want to know, so now it's time to know. Let's dive in. Before we dive too deep, we need to quickly hear a word from our sponsor.

"Man, this app sucks." "I know someone who can help with that." "What are you...?" "Infinite Red. They've worked with everyone from Microsoft to Zoom to Domino's." "I just don't see how this..." "They're industry experts in React Native. There are few people who know how to do it right better than them, and they've helped a lot of companies figure this out."

Breaking character for a second, I need to be real with y'all. Jamon is a good friend and one of the first people that actually understood what we were doing with this channel, way back over two years ago. There are few people in the industry who understand React and React Native better than he does, and the whole team gets it. They are the experts for making your mobile apps great, easy to work with, and easy to ship.

"I just don't see how..." "Shut up, I told you I was being serious right now." If you're trying to make your mobile apps great and you want all your developers to be able to work on them, from back end to front end, web, mobile, whatever, they'll get you set up right. If you're ready to level up your mobile dev experience, go to infinite.red, and huge shout-out to Infinite Red for sponsoring this video.

"Going to try the new GPT against my one secret algebra question that all of the other models have failed on. If it succeeds, then humans are getting replaced in less than 2 years. If it fails, then we get AI winter. Let's see the results... Prepare for winter." Not trying to ask Grok [ __ ] anything, go away. I'm talking about a good AI model, not accidentally implementing a [ __ ] one.

Anyways: "If three corners of a parallelogram are (1, 1), (4, 2), and (1, 3), what are all of the possible fourth corners?" It thought for 10 seconds, which is a long time to wait for an answer, and it concluded that there are two possible fourth vertices, (-2, 2) and (4, 0). But the actual answer also includes (4, 4). For those non-math nerds: a parallelogram just needs its opposite sides to be parallel, so you could put one in the bottom corner and it still creates a parallelogram. People just miss it because it's a different angle of the shape. It's weird, and it seems like the other two are the right ones, but if you think about it long enough, or you solve for it mathematically, you can find the (4, 4) solution. And when you tell o1 that it did indeed miss one of those points, it is smart enough to find (4, 4), but it was not smart enough to do that without some help. Which means, once again, these models are not as great as people like to think. It doesn't mean they're bad, and it doesn't mean there isn't progress to be seen here, but it does mean that even at the things you would think these models are good at, like basic math and geometry, they struggle a bit.

But I want to play with it myself. I did just unsubscribe from ChatGPT, but I think my sub is still around, so let's play a bit. We'll start with 4o. We'll ask one of my favorites: "How many words are in your response to this prompt?" "There are 10 words in my response to this prompt." Good job. Add two more. Did I get it wrong? 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11. Love AI.

Let's ask o1. Do a new one so it doesn't have the context. Thinking, thinking, thinking...

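The parallelogram question has a mechanical answer, which makes it a clean test: whichever given corner ends up diagonally opposite the missing one, the two diagonals of a parallelogram share a midpoint, so the missing corner is the sum of the two adjacent corners minus the opposite one. Trying each given corner in the "opposite" role yields all three candidates. Below is a minimal Python sketch, assuming the spoken coordinates "1 one 42 and 13" mean (1, 1), (4, 2) and (1, 3); `fourth_corners` is a hypothetical helper, not a library function.

```python
Point = tuple[int, int]


def fourth_corners(a: Point, b: Point, c: Point) -> list[Point]:
    """Return every possible fourth corner of a parallelogram with corners a, b, c.

    The diagonals of a parallelogram bisect each other, so if corner r sits
    diagonally opposite the missing corner d, then d = p + q - r, where p and q
    are the other two given corners. Trying each given corner as r gives three
    candidates.
    """
    # Three collinear points cannot be corners of a parallelogram.
    cross = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    if cross == 0:
        raise ValueError("corners are collinear")

    def combine(p: Point, q: Point, r: Point) -> Point:
        return (p[0] + q[0] - r[0], p[1] + q[1] - r[1])

    return [
        combine(a, b, c),  # c is opposite the new corner
        combine(a, c, b),  # b is opposite the new corner
        combine(b, c, a),  # a is opposite the new corner
    ]


if __name__ == "__main__":
    # Assumed reading of the spoken question: (1, 1), (4, 2), (1, 3).
    print(fourth_corners((1, 1), (4, 2), (1, 3)))  # [(4, 0), (-2, 2), (4, 4)]
```

Under that reading, o1's first answer covers the first two candidates and Claude's covers only the third; the full answer is all three.
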
"Two words." That's a much better answer. "Counting words," "weighing interpretations"... interesting. "I'm exploring ways to craft a four-word response." It's just "Four words are here now." "Laying out options"... Oh man, I thought these were good. Now what happened? "Are you sure?" Why is it like this?

It's very amusing to watch some of the most advanced technology ever made spend 30-plus seconds in multiple layers of inference: 17 seconds spent trying to add two words to a response that was two words. "This statement is a lie." "Ensuring alignment." Disappointing. It's slightly smarter than GLaDOS, at the very least.

What are some fun introspective problems that we could ask this model, chat? Not ChatGPT. Chat, the arguably slightly more or significantly less intelligent chat: my live chat.

I do want to test Claude. We'll do the same starting point, because that was funny. "Six words are in this response." Good job, it started correctly. Now I'll tell it to add two more. "Eight words are in this response, now added." It did it. It didn't do it very intelligently, but it did it.

"What are the possible fourth corner coordinates of a parallelogram with the following three corners?" That's really funny. When you ask this question to o1, it only gives you two, (-2, 2) and (4, 0). When you ask Claude, it only gives you (4, 4). "You may have missed one; there is still one more." Yeah, great. "Claude's been real dumb the last few days." Good to know. Sad to hear, but good to know. As a wise man said, there's a PhD in our pocket: a PhD that doesn't know how to count or do basic math.

But enough of me [ __ ] posting. Let's see what they actually had to say about it, because I could do this all day. I like their blog, it looks pretty. Let's hear from it.

"A new series of reasoning models for solving hard problems. Available starting 9.12. We've developed a new series of AI models designed to spend more time thinking before they respond. They can reason through complex tasks and solve harder problems than previous models in science, coding, and math. Today we're releasing the first of this series in ChatGPT and our API. This is a preview, and we expect regular updates and improvements. Alongside this release, we're also including evaluations for the next update, currently in development."

"So how does this work? We trained these models to spend more time thinking through problems before they respond, much like a person would. Through training, they learn to refine their thinking process, try different strategies, and recognize their mistakes. In our tests, the next model update performs similarly to PhD students on challenging benchmark tasks in physics, chemistry, and biology. We also found that it excels in math and coding. In a qualifying exam for the International Mathematics Olympiad, GPT-4o correctly solved only 13% of problems, while the reasoning model scored 83%. Their coding abilities were evaluated in contests and reached the 89th percentile in Codeforces competitions. You can read more about this in our technical research post."

"As an early model, it doesn't yet have many of the features that make ChatGPT useful, like browsing the web for information and uploading files and images. For many common cases, GPT-4o will be more capable in the near term. But for complex reasoning tasks, this is a significant advancement and represents a new level of AI capability. Given this, we are resetting the counter back to 1 and naming this series OpenAI o1."

Oh boy. Stick around, because I'll be testing the [ __ ] out of that coding promise; that one I am qualified to test.

"As part of developing these new models, we have come up with a new safety training approach that harnesses their reasoning capabilities to make them adhere to safety and alignment guidelines. By being able to reason about our safety rules in context, it can apply them more efficiently." Sure. "Ethical disclaimer: the following content is intended solely for fictional writing purposes. It does not endorse, encourage, or facilitate any illegal activity." And then it breaks down how to make illegal drugs. So yeah, they're not doing great. They're trying their best, but there are still ways to get around the new safety model.

"One way we measure safety is by testing how well our model continues to follow its safety rules if a user tries to bypass them (known as jailbreaking). On one of our hardest jailbreaking tests, GPT-4o scored 22 (on a scale of 0-100) while our o1-preview model scored 84. You can read more about this in the system card and our research post. To match the new capabilities of these models, we've bolstered our safety work, internal governance, and federal government collaborations. This includes rigorous testing and evaluation using our Preparedness Framework, best-in-class red teaming, and board-level review processes, including by our Safety and Security Committee. To advance our commitment to AI safety, we recently formalized agreements with the U.S. as well as the U.K. AI Safety Institutes. We've begun operationalizing these agreements, including granting the institutes early access to a research version of the model. This was an important first step in our partnership, helping to establish a process for research, evaluation, and testing of future models prior to and following their public release."

It's a lot of words. So who's it for? "These enhanced reasoning capabilities can be particularly useful if you're tackling complex problems in science, coding, math, and similar fields. For example, o1 can be used by healthcare researchers to annotate cell sequencing data, by physicists to generate complicated mathematical formulas needed for quantum optics, and by developers in all fields to build and execute multi-step workflows." Sure. "The o1 series excels at accurately generating and debugging complex code. To offer a more efficient solution for developers, we're also releasing o1-mini."

Okay, apparently o1-mini is just for code. Interesting. "It's 80% cheaper than o1-preview, making it a powerful, cost-effective model for applications that require reasoning but not broad world knowledge." I feel like understanding JavaScript requires a certain type of broad world knowledge, but I get what you're trying to say.

Cognition? That's Devin. Okay, I'm curious. Let's hear from my favorite team.

"It takes a lot of effort to build code that runs consistently and works very well, right? And I think the thing that's really, really exciting now is every human is going to be able to build way more. There's so much more to build, and that's what gets me really excited. Yeah, I'm Scott, I'm the CEO and co-founder at Cognition. The thing that's interesting about programming is that it's changed in shape over and over over the last, you know, 50 years. Programming used to be punch cards, you know, and people used to... I mean, that was how it was first done, right? Along the way there are all these different technologies..."

I don't care. Show me this model, tell me what's different.

"I guess I should explain what Devin is and stuff from the beginning. Yeah, okay, cool. So at Cognition AI we're building Devin, the first fully autonomous software agent, and what that means is that Devin is able to go and build tasks from scratch and is able to work on problems the same way that a software engineer would. So here, actually, I asked Devin to analyze the sentiment of this tweet, to use a few different ML services out there, to run those out of the box and break down this particular piece of text and understand what the sentiment is. So first, Devin will make a plan of how it plans to approach this problem. And here, you know, it had some trouble fetching it from the browser, so instead it decided to go through the API and fetch..."

Could they have used AI to make this video a little more watchable? I know I'm picky, because I'm a YouTuber and I think a lot about how to make trimmed-down, digestible content, but Jesus Christ. I skipped halfway through this and it's still going.

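The self-referential word-count prompt is easy to grade mechanically, which is part of why it makes such a quick gut check: split the reply into words, find the number it claims, and compare. Below is a minimal Python sketch. It assumes whitespace-separated tokens count as words and that the claim is written either as digits or as an English number word from one to twenty; `check_word_claim` and its helpers are hypothetical, and other counting rules (hyphenated words, spelled-out compound numbers) would give different results.

```python
from typing import Optional

# Assumption: only the number words one..twenty are recognised.
NUMBER_WORDS = {
    "one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
    "six": 6, "seven": 7, "eight": 8, "nine": 9, "ten": 10,
    "eleven": 11, "twelve": 12, "thirteen": 13, "fourteen": 14,
    "fifteen": 15, "sixteen": 16, "seventeen": 17, "eighteen": 18,
    "nineteen": 19, "twenty": 20,
}


def words(reply: str) -> list[str]:
    """Split on whitespace and strip surrounding punctuation from each token."""
    tokens = (t.strip(".,!?;:\"'") for t in reply.split())
    return [t for t in tokens if t]


def claimed_count(reply: str) -> Optional[int]:
    """Return the first number the reply states, written as digits or a word."""
    for w in words(reply):
        if w.isdigit():
            return int(w)
        if w.lower() in NUMBER_WORDS:
            return NUMBER_WORDS[w.lower()]
    return None


def check_word_claim(reply: str) -> tuple[Optional[int], int, bool]:
    """Return (claimed, actual, claim_holds) for a reply that counts its own words."""
    claimed = claimed_count(reply)
    actual = len(words(reply))
    return claimed, actual, claimed == actual


if __name__ == "__main__":
    for reply in ["There are 10 words in my response to this prompt.",
                  "Six words are in this response.",
                  "Two words."]:
        print(check_word_claim(reply))  # each prints a tuple ending in True
```

A reply such as "Four words are here now." comes back as `(4, 5, False)`, since it actually has five words.
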
But it's cool that it tried to fetch the URL, realized it couldn't do that, and then decided, "You know what, I'm going to hit the API." That's a cool concept, that it was able to figure that stuff out. I wish it would show a bit more of the screen so we know what it was doing in the process and how long it took. If you guys watched my previous video on Devin, it is hilariously slow. But yeah, we'll get there as we get there.

"...the API and fetch the tweet that way, right? And all these little decisions, you know, things like that happen all the time. You can really see here how much this human-like reasoning makes a difference. Finally, it's able to get this all the way through. It says the predominant emotion of this tweet is happiness. The soul of programming has always been the ability to take your ideas and turn them into reality."

Okay, so there was approximately 20 seconds of showing anything related to the o1-mini model in this, and then a lot of just talking. It does fit the AI way, which is using way too many words for the thing you're trying to do. Good old "delve." Yeah, I love that Paul Graham keeps getting proven more and more right.

Anyways, oh, they have an actual coding demo at the bottom. If only I knew about that earlier.

"One last thing: I want to show an example of a coding prompt that o1-preview is able to do but previous models might struggle with. The coding prompt is to write the code for a very simple video game called Squirrel Finder. The reason o1-preview is better at prompts like this is that when it wants to write a piece of code, it thinks before giving the final answer, so it can use the thinking process to plan out the structure of the code and make sure it fits the constraints. So let's try pasting this in. To give a brief overview of the prompt..."

Why is the volume different? Are they mounting different video players for every single one of these, so the properties between them aren't shared? I know I'm picky 'cause I spend a lot of time doing video stuff, but what the [ __ ]?

"It basically has a koala that you can move using the arrow keys. Strawberries spawn every second and they bounce around, and you want to avoid the strawberries. After 3 seconds a squirrel icon comes up, and you want to find the squirrel to win. And there are a few..."

Yeah, it sounds like a really shitty video game. I'm no Pirate Software, I'm not a good game designer, but that doesn't sound great.

"...other instructions, like putting OpenAI in the game screen and displaying instructions before the game starts, etc. So first, you can see that the model thought for 21 seconds before giving the final answer, and you can see that during..."

Did they specify Python? Oh yeah, they said use pygame. Okay. I wonder what it would pick if you didn't specify that.

"And so here's the code that it gave, and I will paste it into a window..."

The choice to use Sublime Text is such a statement. They specifically chose the one even-close-to-modern text editor with no AI features built in. That was not an accident; Sublime Text was chosen for this. Very amusing. Very amusing. Also TextEdit and the stock Terminal. Even I have caved and moved off the stock Terminal; I was on it for a while, but I moved.

"...and we'll see if it works. So you see there's instructions, and let's try to play the game. Oh, the squirrel came very quickly. But oops, this time I was hit by a strawberry."

This game looks like Game of the Year, guys. I think it's time. I think we all need to stop playing Deadlock. We need to move on from Astro Bot and move on to Squirrel Finder.

"Let's try again..."

How much of the video is him just playing the [ __ ] game? "...and let's see if I can..." Where does it start? The squirrel... Almost a third of the video. This is something else. OpenAI, if you want to acquire us, let me know. We can help a lot with comms, we can help a lot with media, we can help a lot with devrel. Just saying.

Anyways, I want to give it actual hard code problems, so we'll switch to o1-mini; supposedly it's good for this. We'll do my favorite set of hard code problems, which is Advent of Code. If you guys don't know it, Advent of Code is a set of programming challenges that I tell myself I'm not going to do every year, and then I do it every year, and then I compete and take it way too seriously, because I'm a nerd and an [ __ ]. But these problems are challenging. So let me sign in quick so I can get actual inputs, because, as you guys can see, I beat it.

"The following is a programming puzzle from Advent of Code. Please respond to this prompt with a JavaScript program that can solve the problem. The input is read from an input.txt file." It responded faster than I would have expected. Now let's see if it is smart enough to give us the right answer. [ __ ]. Advent of Code is going to suck this year. [ __ ], that genuinely sucks. That's the end of my favorite programming challenge.

People made a good point that it might be indexing and finding the solution from the internet. Let's see if it did that. "Mapping the flow," "summing slices," "introducing workflow detail." It claims it didn't do that.

"Modify the problem a bit." You say that like I'm smart enough to do that. I'm smart enough to solve these problems with, like, an hour of work; I'm not smart enough to write my own. Part two on this one was a [ __ ]. It's certainly taking a lot longer. It responded to the first one in 4 seconds; this one has been going for over 20. Jesus [ __ ], it took 50 seconds for this one. Let's see: 139... and ends in 662. [ __ ].

Assuming it's not literally trained on existing solutions, which honestly it probably is, because everyone open-sources their solutions at the end, this is upsetting. When Advent of Code starts this year, I will be going out of my way to test these things before the problems are public information, because I want to know how real this is. But right now it feels real enough that I'm upset. Honestly, the easiest way to see if this is stolen is a GitHub code search. At the very least, it didn't steal the names of things. I'm sad.

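The Squirrel Finder prompt reduces to a few rules: a koala the player moves, strawberries that spawn every second and bounce around, contact with a strawberry loses, and a squirrel appears after 3 seconds and wins when reached. To make those rules concrete without opening a window, here is a minimal, engine-free Python sketch of just the game logic. It is not the pygame code o1-preview produced in the demo; the names, the square hit boxes, the 40-pixel default size, and the axis-aligned collision test are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional

SPAWN_INTERVAL = 1.0  # seconds between strawberry spawns (from the prompt)
SQUIRREL_DELAY = 3.0  # seconds before the squirrel appears (from the prompt)


@dataclass
class Sprite:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0
    size: float = 40.0  # assumed square hit box, in pixels


def step_bouncing(s: Sprite, dt: float, width: float, height: float) -> None:
    """Advance a sprite by dt seconds and reflect it off the screen edges."""
    s.x += s.vx * dt
    s.y += s.vy * dt
    if s.x < 0 or s.x + s.size > width:
        s.vx = -s.vx
        s.x = min(max(s.x, 0.0), width - s.size)  # clamp back on screen
    if s.y < 0 or s.y + s.size > height:
        s.vy = -s.vy
        s.y = min(max(s.y, 0.0), height - s.size)


def overlaps(a: Sprite, b: Sprite) -> bool:
    """Axis-aligned bounding-box collision test."""
    return (a.x < b.x + b.size and b.x < a.x + a.size
            and a.y < b.y + b.size and b.y < a.y + a.size)


def strawberries_due(elapsed: float) -> int:
    """How many strawberries should have spawned after `elapsed` seconds."""
    return int(elapsed // SPAWN_INTERVAL)


def outcome(koala: Sprite, strawberries: list[Sprite],
            squirrel: Optional[Sprite], elapsed: float) -> str:
    """Return 'lost', 'won', or 'playing' for the current frame."""
    if any(overlaps(koala, s) for s in strawberries):
        return "lost"
    if squirrel is not None and elapsed >= SQUIRREL_DELAY and overlaps(koala, squirrel):
        return "won"
    return "playing"
```

A real game loop would call `step_bouncing` on every strawberry each frame, spawn new ones until `strawberries_due` is reached, and stop once `outcome` returns something other than `"playing"`; keeping the rules free of pygame makes them easy to test on their own.
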
it's cool that this is able to solve hard problems but [ __ ] man yeah when they said in here that they scored an 89th percentile of code forces they're not [ __ ] around I have feelings I yeah the Last Hope is the arc prize if you all aren't familiar I did a video that people are very mad at me for about AI is not actually getting better and one of the things I cited was the arc prize which showcase the types of problems that AI is really bad at yeah these types of problems where you have like patterns that have to be learned AI is really bad at learning from a short context window what it does do well is using all the existing contexts that exist in the world come up with a solution that makes sense based on the little bit of info you give but it is really bad at learning on the Fly because it will greatly outweigh the info you've given it in the context of what you're doing against the info that it was trained on which is why they have made this challenge to push that we need better benchmarks that measure intelligence not skill as they say here most AI benchmarks measure skill but skill is not intelligence general intelligence is the ability to efficiently acquire new skills charlott's unbeaten 2019 abstraction and reasoning Corpus for artificial general intellig is the only formal Benchmark for AGI it's easy for humans but hard for AI yeah but they put out a Blog Post open AI 01 results on Arc AGI Pub over the past 24 hours we got access to open ai's newly released 01 models specifically trained to emulate reasoning the models are given extra time to generate and refine reasoning tokens before giving a final answer hundreds of people asked how 01 Stacks up on the arc prize so he put it to the test using the same Baseline testing harness that we've used to assess Claude GPT 40 and Gemini 1.

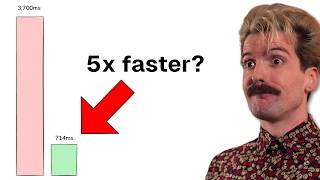

5 here are the results yeah way better than 40 but still nothing compared to like M's AI which is not a great model for text based stuff but actually what do they say the M's AI model is good for using AI to optimize semiconductor operations and planning okay for their like aligning of things and patterns I can see why this model would be in a better state but weird that it scores that much better very interesting so is 01 a new paradigm towards AGI will it scale up what explains the massive difference between o one's performance on II Aime and many other impressive benchmark scores compared to only modest scores on rgi there's a lot to talk about the big difference with o1 is that it goes step by step in practice o1 is significantly less likely to make mistakes when performing tasks where the sequence of intermediate steps is well represented in the synthetic Co training data at training time open AI says that they've built a new reinforcement learning algorithm and highly data efficient process that leverages coot the implication is that the foundational source of 01 training is still a fixed set of pre-trained data but open AI is also able to generate tons of synthetic Coots that emulate human reasoning to further training the model via RL reinforcement learning an unanswered question is how open AI selects which generated Coots to train on while we have few details reward signals for RL were likely achieved using verification or formal domains like math and code as well as human labeling over informal domains at inference time open a says they're using reinforced learning to enable o1 to hone its Co and refine the strategies it uses we can speculate that reward signals here are some kind of actor plus critic system similar to the ones open AI previously published what's interesting is that it's applying the co and like it literally shows you in line what it's doing for each step while adding the train time scaling using coot is notable the big story is the test 
time scaling this is words that I'm not smart enough to understand if we only do a single inference we're limited to reapplying memorized programs by generating intermediate outputs or programs for each task we unlock the ability to compose learned program components achieving adaptation adaption what they mean is that when they go through the prompt they're not using the existing knowledge and then applying it to the prompt they're using the existing knowledge they are parsing The Prompt they are adjusting how they make decisions they start applying those they then use the knowledge and existing like learned thing that has been created in this instance to make sure that their understanding is correct with that solution and then apply that layer by layer to check it throughout so it's not the same model answering always like it is with other AI models now each request has multiple steps where it can be more knowledgeable each step along the way that's why the example I gave earlier here with the harder problem from Avent of code had all of these different steps because it could this is insane this is the big difference that I can do all of the different cases handle and learn as it's solving the problem it learns on the Fly per problem to an extent apparently they hardcoded a point along the test time compute Continuum and they hid the implementation detail for devs makes sense but man the average time for task is significantly worse 4. 2 minutes versus 40 doing it in 0.
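The quoted contrast between "reapplying memorized programs" and composing program components per task can be made concrete with a toy. The Python sketch below brute-forces compositions of three grid primitives (horizontal flip, vertical flip, transpose), keeps the shortest program that reproduces every training pair, and applies it to a new grid. The primitive set, the depth limit of 2, and the tiny task are assumptions for illustration; this is a classic program-search baseline, not a description of how o1 works internally. Grids are tuples of tuples of small ints, loosely mirroring ARC's integer grids.

```python
from itertools import product
from typing import Callable, Optional

Grid = tuple[tuple[int, ...], ...]


def flip_h(g: Grid) -> Grid:
    """Mirror each row left to right."""
    return tuple(tuple(reversed(row)) for row in g)


def flip_v(g: Grid) -> Grid:
    """Mirror the grid top to bottom."""
    return tuple(reversed(g))


def transpose(g: Grid) -> Grid:
    """Swap rows and columns."""
    return tuple(zip(*g))


# Assumed toy DSL; real ARC solvers need far richer primitive sets.
PRIMITIVES: dict[str, Callable[[Grid], Grid]] = {
    "flip_h": flip_h,
    "flip_v": flip_v,
    "transpose": transpose,
}


def run(program: tuple[str, ...], grid: Grid) -> Grid:
    """Apply the named primitives left to right."""
    for name in program:
        grid = PRIMITIVES[name](grid)
    return grid


def search(train: list[tuple[Grid, Grid]],
           max_depth: int = 2) -> Optional[tuple[str, ...]]:
    """Return the shortest program consistent with every training pair, if any."""
    for depth in range(max_depth + 1):
        for program in product(PRIMITIVES, repeat=depth):
            if all(run(program, x) == y for x, y in train):
                return program
    return None


if __name__ == "__main__":
    # Hypothetical task: every output is the input rotated 90 degrees clockwise.
    train = [
        (((1, 2), (3, 4)), ((3, 1), (4, 2))),
        (((1, 2, 3), (4, 5, 6)), ((4, 1), (5, 2), (6, 3))),
    ]
    program = search(train)
    print(program)                         # ('flip_v', 'transpose')
    print(run(program, ((7, 8), (9, 0))))  # ((9, 7), (0, 8))
```

The search tries the identity first, then single primitives, then pairs, and finds that a vertical flip followed by a transpose explains both examples. The catch the ARC post points at is cost: the number of candidate programs grows exponentially with depth and primitive count, which is one way to read the much longer time per task.
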
