Unknown


Video Transcript:

So, just a few introductory points here. I wanted to mention that we are here in large part because of the work of the doctor and his lab. We are going to be talking about things related to RNA-seq, but in the context of issues that he and his collaborators have pointed out as important right now. The presenters are going to be Dr. Vladimir Golonka, the chief scientist at the Tauber Bioinformatics Research Center, who will be presenting together with Julia Panov, a PhD student, and both

of them will share both biological interpretation of research projects and some technical details. This work is supported by the Tauber Bioinformatics Research Center at the University of Haifa in Israel, and also by Pine Biotech. The data examples we will talk about are based on published datasets. We provide links to those datasets here, and you're welcome to read the publications and download the data, which is available. I also want to point out that some of these projects are prepared as educational projects and are available on our platform.

Our platform is, first of all, the website you're on right now, edu.t-bio.info. There you will also find some courses on the basics of data analysis, similar to what you're going to see here today. So, an overview of what we will cover, or hope to cover, today. First, next-generation sequencing data pre-processing, including trimming of technical sequences and removal of PCR duplicates. Second, processing of next-generation sequencing data for quantification of expression levels with conventional pipelines, and also identification

of isoforms. We will touch on the challenge of gene set enrichment analysis, meaning annotation of genes but also gene set enrichment. Finally, we will practice using some machine learning methods, including unsupervised and supervised analysis of expression data. So now I'm going to hand over the presentation to Julia, who will share her presentation about biological examples. Okay, you should be able to share your screen now. Okay, hi, can you see my screen? Yes? Okay, great. So hi everyone, as was mentioned, my name is Julia and I

use the platform in my research on neurodevelopmental disorders. I also collaborate with and assist other groups in using the platform in their research, in areas such as marine biology, evolutionary biology, and cancer studies. But I'm actually on the wrong slide, right? Yeah. Do you want me to show your slides? Okay, Julia, I have your slides here if you want. I'm trying to get this working, sorry. Okay, so my goal today, before we begin the hands-on part of the workshop, is to give you a taste of what we can

do with the T-BioInfo platform, and with bioinformatics in general. I will focus on one project that we have been working on. I will briefly tell you about the kind of data and the analysis we used to generate the results that I will present afterwards. Every step of the analysis you will hear about was done on the platform. So let me begin. Yeah, I'm on the wrong slide, I'm not in presentation mode. Wait. Oh, yes, sorry about this, but screen sharing is not working for me. Could you

do it for me and share the presentation, and I will just speak? Okay, can you see my screen? Yeah, okay, great. So maybe you can show the next slide. I will focus on one project about cell lines. I will tell you about the kind of data and the analysis we used to generate the results I present afterwards. So let me begin. The project we will be looking at is based on the analysis by the Gray group, and I hope you

had a chance to look at the publication. If you didn't, it's okay, I will go over it as well. The study included 70 breast cancer cell lines, and we used only the 49 of these cell lines that had all the molecular profiles. As you can see on the slide, we had gene expression data and RNA editing data. RNA-seq data is produced by sequencing the RNA molecules in the sample, and from this RNA sequencing we can get two kinds of data. We can get the gene expression data,

which we will go into in more detail, and you will have a chance to practice exactly how to produce it yourself. We can also generate an RNA editing table from the same data. For those who might have forgotten or don't know, RNA editing is a post-transcriptional modification of primary RNA molecules. As you can see on the slide, RNA molecules are sometimes edited by special enzymes, and there are different types of editing. There is a substitution of cytosine to uracil, which in the sequencing data we see as a

thymine. Another type of modification is a substitution of adenine to inosine, which sequencing reads as guanine. We studied these two modifications together with gene expression. RNA editing events can have very big effects on the protein translated afterwards: the protein can become nonfunctional, or the transcript can lose binding sites for microRNAs that it had in its wild-type state. The exact mechanisms and functions of editing events are not completely understood, but it has been shown that RNA editing plays a significant role in different types of cancer. So this is the kind of data we were looking at: gene expression and RNA editing.
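C-to-U editing is read as C>T, and A-to-I editing is read as A>G. Because of this, candidate editing sites show up as specific mismatches between the reference and the reads. The minimal Python sketch below illustrates that idea only. It assumes the read is already aligned base-to-base to the reference on the transcript strand, and the sequences are invented. Real editing callers also handle strand, base quality, coverage, and known SNPs, and this sketch ignores all of them.

```python
# Mismatch types expected for the two editing types, on the transcript strand:
# C-to-U editing is read as C>T, and A-to-I editing is read as A>G
# (inosine pairs like guanine during reverse transcription).
EDITING_TYPES = {("C", "T"): "C-to-U", ("A", "G"): "A-to-I"}

def candidate_editing_sites(reference: str, read: str):
    """List (position, editing type) for mismatches consistent with RNA editing.

    Assumes reference and read are already aligned base-to-base with no gaps.
    """
    if len(reference) != len(read):
        raise ValueError("sequences must be aligned and of equal length")
    sites = []
    for pos, (ref_base, read_base) in enumerate(zip(reference, read)):
        kind = EDITING_TYPES.get((ref_base, read_base))
        if kind is not None:
            sites.append((pos, kind))
    return sites

# Hypothetical aligned pair: A>G at 0, C>T at 4, and an A>C mismatch at 7.
print(candidate_editing_sites("ACGTCAGA", "GCGTTAGC"))
# [(0, 'A-to-I'), (4, 'C-to-U')]
```

The A>C mismatch at position 7 is ignored because it matches neither editing type.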

And the question is: why would someone be interested in a data-driven analysis that has no plan beforehand? We were not looking at specific genes or perturbed states; we were just looking at the data overall. What we actually wanted was to identify subtypes of breast cancer. As you know, breast cancer is a heterogeneous disease, and our understanding of its complexity is still evolving. On this slide you can see a kind of history of how people have, over time, been trying to identify

the subtypes of breast cancer. Recently, people have been trying to identify subtypes of cancer using what we call multi-omics analysis. This means combining different layers, such as RNA data, DNA data, and other data, in one analysis to find the unique characteristics of cancer subtypes. As you probably know, one of the models of cancer is cell lines. These cell lines represent subtypes or types of cancer. They are well-established cell lines originally taken from patients who underwent biopsies, and they are very useful models for studying cancer. As you

can see on the next slide, we can have genomic as well as pharmacological profiles of different cell lines. Based on these profiles, we can better find treatments for patients with similar genomic profiles. Of course, cell lines are not the best models of cancer. They lack some important features that tumors in patients have, namely the heterogeneity of cells inside the tumor and the stromal component. However, despite these limitations, cell lines continue to be very important research models and preclinical tools

in cancer biology. Understanding the characteristics of different cell lines can help us better understand the subtypes of cancer. Sometimes it also shows us that a cell line is not a good model for the type of cancer it was used to model. On the next slide you will see that our project was based on 49 such cell lines. We had a gene expression table, and as you can see on the slide, the gene expression table is a collection of all genes in the system across different samples,

with an expression profile for each gene. In the bottom table, the RNA editing table, you see chromosomal regions of the genome that have RNA editing events with high probability. As you will see on the next slide, we took these two tables and tried to find groups of genes and groups of RNA editing events that together have similar sample profiles. On the slide, for example, we could find a group of genes in yellow and a group of RNA editing events also in yellow, and they

would give us a clustering of samples that are very similar to each other. You can see the purple arrows pointing to the clustering of the samples, and those genes and RNA editing events would be somehow linked. Another case could be the genes and RNA editing events in green. They could give us a different separation of samples, or, for example, reveal an outlier sample that is somehow unique in its molecular characteristics. The goal of our analysis in this part of the project was exactly that:

to find samples that differ in their molecular characteristics from all the others. And this is actually what we were able to do: we identified one unique cell line that was very different from all the others. On the next slide you will see matrices of distances between samples, and you can see lines in blue on both of them. One is the matrix of distances between samples based on the gene expression table, and on the other side is the matrix

of distances between samples based on the RNA editing events. Both of these matrices separate only one cell line, HCC202. You can see that separation in blue: the distances between HCC202 and all the other cell lines are very high, and they are painted in blue for visual representation.
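As a rough illustration of how a sample-distance matrix can single out one outlier, here is a minimal Python sketch. It assumes Euclidean distance on a tiny made-up feature table. The sample names and values are hypothetical, and the actual study may have used another distance measure and thousands of genes or editing sites. The sketch flags the sample with the largest mean distance to all the others.

```python
import math

def distance_matrix(profiles):
    """Pairwise Euclidean distances between samples.

    profiles: dict mapping sample name -> list of feature values
    (e.g. expression levels of the same genes, in the same order).
    """
    names = list(profiles)
    dist = {}
    for a in names:
        for b in names:
            dist[a, b] = math.dist(profiles[a], profiles[b])
    return names, dist

def most_distant_sample(profiles):
    """Return the sample with the largest mean distance to all other samples."""
    names, dist = distance_matrix(profiles)

    def mean_distance(a):
        others = [dist[a, b] for b in names if b != a]
        return sum(others) / len(others)

    return max(names, key=mean_distance)

# Toy data (hypothetical): three similar samples and one outlier.
toy = {
    "line_A": [5.0, 3.1, 0.2],
    "line_B": [5.2, 2.9, 0.1],
    "line_C": [4.9, 3.0, 0.3],
    "outlier": [0.4, 9.8, 7.5],
}
print(most_distant_sample(toy))  # outlier
```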

Another way of showing this, on the next slide, is to look at a PCA. If you now took these genes and looked at a PCA based on their expression levels, you would see that the genes we chose are expressed only in this sample. The RNA editing events are also present only in this sample. So without designing our experiments to target specific genes, we were still able to choose a group of genes and a group of RNA editing events that clearly separate this sample from all others. Let's look a little at these genes. On one side, from the expression levels, we found olfactory receptors and microRNAs, and on the other

side we found Rab GTPases. Knowing these genes, can we now say anything about how this cell line differs from all the others? Well, we could form some hypotheses. Afterwards we could run more experiments or collect more data to validate them. One of the hypotheses we were considering goes like this. MicroRNAs are known to bind to gene transcripts and prevent the expression of these genes. MicroRNAs are also known to bind to the transcripts of Rab GTPases, which are very important transport molecules. When Rab

GTPase transcripts are edited, these binding sites are sometimes lost, and microRNAs can no longer bind to them. Because of that, we would see very high expression of transport molecules such as the Rab GTPases. Cancer studies have shown that olfactory receptors can contribute to malignancy or aggressiveness in cancer. So Rab GTPases, by trafficking olfactory receptors to nearby cells, could actually make the cancer cell line, or the tumor, much more aggressive. So this would be a hypothesis that could

be validated later with more targeted experiments. So this was a brief glimpse of what can be done with a data-driven bioinformatics approach. We hope you now understand a bit better why this approach can be helpful in biological studies and the life sciences. I hope you will enjoy the hands-on workshop. Thank you very much for your attention. Okay, thank you, Julia. Now I'm going to switch over to Vladimir. As was said before, Vladimir is the

chief scientist at the Tauber Bioinformatics Research Center. He has been working on the development of the platform and extending it for both research and education. [Music] Okay, okay. So I hope the screen is shared correctly; if there are any problems, please let me know. Before we start, let us check that all of you have access to your accounts on the T-BioInfo platform. Also check that you have all the files in the archive prepared for this workshop. Please let us know if anyone does not have these files, as

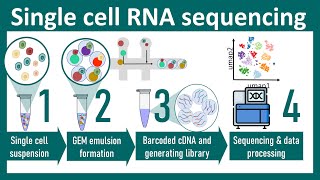

we'll use them later on in our practice. In any case, we begin with some theory and then proceed to practical analysis. Hopefully the theory will be relatively short. It concerns the basis of the RNA sequencing pipeline, I mean library preparation, which we need to understand in order to understand the main steps of the analysis. As you know perfectly well, everything starts with the genome, part of which codes for proteins: genes code for proteins. But proteins are not generated directly from the genome; there's an intermediate

step. Genes give rise to transcripts, messenger RNAs, and these messenger RNAs are then translated into proteins. All the cells of a given organism generally have the same genes, for example all the cells of one human, if we set aside cancer cells for a while. But these genes have different levels of activity, different expression levels, in different cells and tissues. So some genes may be present in the genome but give rise to no messenger RNAs, no transcripts, and hence no proteins; some can give rise to

a substantial amount of transcripts, and thus a substantial amount of protein. Roughly speaking, the sequencing process can be described as follows. We have all these transcripts. They are randomly broken into small pieces, and the sequencing machine reads these pieces letter by letter, nucleotide by nucleotide. The problem is that we do not know where each of these pieces came from. But we know the sequence of the human genome and the nucleotide sequences of all the genes, so we can map our short segments onto transcripts, or better

to say, onto genes. If no reads are mapped on a gene, for example gene B, this gene has zero or at least a low expression level. The more reads, the more short segments we have, the higher the expression level. But if we go deeper into the details, we'll see that this process has several intermediate steps. These steps might differ slightly between technologies, but generally they are the following. First, all the transcripts are shattered into small pieces; so we have shattering, and in fact we have

one copy of each segment. The more copies of a transcript we have in the sample, the more pieces from its gene we see after shattering. At the next step, adapters are ligated to these small pieces, the future reads. In fact, ligation does not succeed for every small piece, but adapters are ligated to a sufficient fraction of these short fragments. Then PCR amplification is performed, which generates multiple copies of each fragment with ligated adapters. For example, if there are six rounds of PCR amplification, well, if

there are two rounds of PCR amplification, we multiply by two twice and get four copies of each piece. If we have six rounds, we get 2 to the power 6, so 64 copies. The more rounds, the more copies we get of each segment. There is a slight complication: PCR efficiency differs between segments, so some pieces get more copies and others fewer. So we are really speaking about the average number of copies we gain at this step.
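The doubling arithmetic above is usually written as N = N0 (1 + e)^n. Here n is the number of cycles and e the per-cycle efficiency, where e = 1 means perfect doubling. The Python sketch below illustrates that simple exponential model only; it does not model any specific kit. The per-fragment efficiencies in the last lines are invented, to show how uneven efficiency spreads copy numbers around an average.

```python
def pcr_copies(cycles: int, efficiency: float = 1.0, start: float = 1.0) -> float:
    """Expected number of copies after PCR under a simple exponential model.

    Each cycle multiplies the amount of each fragment by (1 + efficiency);
    efficiency = 1.0 is perfect doubling.
    """
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    return start * (1.0 + efficiency) ** cycles

# Perfect doubling reproduces the numbers from the talk.
print(pcr_copies(2))  # 4.0
print(pcr_copies(6))  # 64.0

# Hypothetical per-fragment efficiencies: copy numbers now differ between
# fragments, so we can only speak about an average number of copies.
efficiencies = [0.9, 0.8, 1.0, 0.7]
copies = [pcr_copies(6, e) for e in efficiencies]
print(sum(copies) / len(copies))
```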

So please note that after this step there are indeed multiple copies of each segment. But sequencing does not take all the pieces; it reads only a relatively small fraction of them. Suppose the number of copies is relatively low, which is the case when the initial amount of RNA is sufficient and the library preparation is also good. Then we generally read only one copy of each short segment. The number of duplicates, that is, reads originating from the same small piece of an initial transcript, will then be well below 10%. If

we start with a lower amount of RNA, we need to perform more cycles of PCR amplification. So we get more copies of each segment, and the number of PCR duplicates increases. Moreover, the non-uniformity in the number of copies starts to play a role. After that, we can continue with our previous discussion. We simply map all these reads onto the initial genes and estimate expression levels just by counting the reads mapped to each gene. This logic explains the major steps of the analysis.
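Counting reads per gene, the last step just described, comes down to tallying mapping results. This minimal Python sketch assumes the mapping step has already assigned each read to one gene name, or None for an unmapped read. The read assignments are made up for illustration. Real quantification tools also deal with reads that map to several genes, which this sketch ignores.

```python
from collections import Counter
from typing import Iterable, Optional

def count_reads_per_gene(assignments: Iterable[Optional[str]],
                         genes: Iterable[str]) -> dict:
    """Count reads assigned to each gene; genes with no reads get 0.

    assignments: gene name for each mapped read, or None if the read did not map.
    """
    counts = Counter(g for g in assignments if g is not None)
    # Counter returns 0 for missing keys, so unexpressed genes show up as 0.
    return {gene: counts[gene] for gene in genes}

# Hypothetical mapping result for seven reads; no read maps to geneB.
reads = ["geneA", "geneA", "geneC", None, "geneA", "geneC", "geneA"]
print(count_reads_per_gene(reads, ["geneA", "geneB", "geneC"]))
# {'geneA': 4, 'geneB': 0, 'geneC': 2}
```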

We may start with pre-processing. If additional sequences, adapters, are ligated to our initial segments, these adapters may be removed before the analysis. Additionally, low-quality read ends are a standard effect, and they can also be trimmed to increase mapping quality. Then PCR duplicates can be removed from the analysis, that is, identical reads that are potentially copies of the same initial segment. These steps increase the quality of the resulting mapping. Then we map reads onto known genes and known transcripts, but at this stage there are different strategies. We may

focus just on estimating the expression levels of known genes. In this case we map all the reads to the set of known genes, known transcripts, known isoforms. We may also want to identify novel transcripts: novel isoforms of known genes, or completely novel transcripts. It's a rarer type of analysis, but it's not technically hard either. And, of course, there's a combined strategy. After the reads are mapped, we can proceed to quantification of expression levels. Still, just to understand how this can be performed technically, without going deep into the details of

mapping or estimation algorithms, let us get started with the T-BioInfo platform. Let us prepare a pipeline that performs all the jobs we've discussed. We won't run this pipeline, because sequencing data is generally large and processing takes a long time. It may take hours, or even several days if we have many samples. We'll just prepare the pipeline. To do that, we proceed to the platform (please let us know if there are any problems with it), and we go to the analysis related

to RNA sequencing data. So here it is; yes, there's an introduction. What can we choose here, what are the options? Today we're dealing with the human genome, so we select the set of genes for that genome; as you see, we select Homo sapiens. There are two types of reads, single-end and paired-end. For our discussion today we have paired-end data, and we need to... okay, I think I see it from here, great, thank you. So let's just try. Okay, and sequencing data is large,

so uploading new files can also be slow. For this short test, we just use links to files that are already inside the system. Somebody was just asking, so just to make sure: Sahil, is everybody on the same page, can we move forward? Okay, if you go back one slide. Okay, I'm just changing the screen. So, again, we are inside the platform. When we log in, we can press the Areas of Analysis link, and then we get sections for analysis of various

types of omics data and for various kinds of pre-processing. Here we go to the analysis of RNA sequencing data; here's the link, at the top left. To perform a standard analysis, we choose the type of sequencing data: single-end or paired-end. Currently it's paired-end (PE) in many cases. Then we select the genome, or set of genes, meaning the transcriptome we're working with. So: paired-end and Homo sapiens. Then we upload files, so we press the Upload Files and Add Groups button. Please let

me know if there are any problems with what's going on. For our case, when we use files that are already inside the system, we use URL Bulk Upload; here it is. Here we go to the files in the archive, where you have the main files. For example, if you want to start with just two samples, we use the .svl file with links to two samples. This is a very small file that contains only links to our data, not the data itself. Okay, colleagues, if you do not have these files, please let

us know, and we'll simply fix the problem. There was a question: what are the four drop-down options? Okay. First, we can deal with different types of experimental data. We can also work with model organisms, meaning organisms whose genome and transcriptome are known; for our organism, Homo sapiens, everything is known. So the first option is the data type we're dealing with. Typically for RNA sequencing, if we begin from the very beginning, we start with FASTQ files. Then comes the type of reads, single-end or paired-end. And if we're dealing with

model organisms, we just select the genomic and transcriptomic data related to this organism. So: organism and read type. Then, okay, we upload a file from our set. Just to clarify what we mean by our set: there is a button to download files on the page you're on, so you should have downloaded the zip file. That zip file contains multiple folders, and one of them is called main files; we will mainly work with main files. So you download the file and unzip it, and inside you see the folder

called main files. We will be using the files that have the extension .svl. Okay, so we select this file. Hopefully we'll get used to this quickly, as we'll create a number of pipelines today. The file is links to samples, and then we go further with our analysis. Here we're not going to do differential expression analysis or any other complicated analysis. For now we're focused on just estimating expression levels. So after pressing Define Groups, we simply say that we need no groups, no additional information, for this analysis: Cancel, No

Groups, and then we start creating our pipeline. The pipeline has to begin with Start, so again I select Start. Then a possible option is... okay, here again, let me just show it once more. Here we use Bulk Upload, as we're uploading only links to files, not the files themselves. Later on we'll use the main upload button, Add Files. For now, we use the Bulk Upload button to upload the file links to samples.svl. Then we press Define Groups, say that we have no groups, and go on. Okay, let's take a

look at whether there are any problems at this stage; if not, we'll go further. Okay. So we initialize our pipeline with the Start module. Then, to trim adapters and low-quality read ends, we can use, for example, the Trimmomatic module: we press on the module and then confirm by pressing Save. PCR duplicate removal can be performed with the PCR Clean module; the default technical option is perfectly fine. If we're going to map all our reads onto genes, onto the transcriptome, mapping can be performed with, for example, Bowtie or

BWA; let's take Bowtie, for example. Expression levels can then be quantified with the RSEM Expression Table module. Here we need to choose the units for expression level quantification. We'll discuss these units in a minute or two, but for now fragments per kilobase per million mapped reads, FPKM, will do. And that's all: this module will give us quantified expression levels. Here we can just finish our pipeline, save it, and give it a name, for example test pipeline or whatever you like.
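For reference, FPKM is usually defined as FPKM = (fragments mapped to the gene × 10^9) / (gene length in bases × total mapped fragments). Here is a minimal Python sketch of that formula. The counts and lengths are invented. It uses raw gene lengths, whereas RSEM works with effective lengths, so it will not reproduce the module's numbers exactly.

```python
def fpkm(counts: dict, lengths_bp: dict) -> dict:
    """Fragments Per Kilobase of transcript per Million mapped fragments.

    counts: fragments mapped to each gene
    lengths_bp: gene (transcript) length in bases
    Uses raw lengths; tools such as RSEM use effective lengths instead.
    """
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no mapped fragments")
    return {
        gene: counts[gene] * 1e9 / (lengths_bp[gene] * total)
        for gene in counts
    }

# Hypothetical numbers: 2 million mapped fragments in total.
counts = {"geneA": 1_000_000, "geneB": 1_000_000}
lengths = {"geneA": 1000, "geneB": 4000}
print(fpkm(counts, lengths))  # {'geneA': 500000.0, 'geneB': 125000.0}
```

Both genes receive the same number of fragments, but geneB is four times longer, so its FPKM is four times lower. This length normalization, together with scaling by library size, is what makes expression values comparable across genes and samples.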

And then, to run the pipeline... well, we won't do it now, as this pipeline takes a long time. Okay, so we won't run this pipeline now; we just looked at how it can be created. While we're trying to solve the problem, let me repeat all these steps. Some people are saying they are still making the pipeline, so please let me know when everything is okay. I'll just repeat all the steps, to show visually what we're doing. I recreate this pipeline: I start with Areas of Analysis, RNA sequencing,

select paired-end sequencing, select the genome, upload our file links to samples, and say that I need no groups for the analysis. Then Start, then, for example, adapter trimming. Say I selected a module I don't need, such as Trimmomatic, and want to remove it from the pipeline: I just press the right mouse button and remove it. Removing is done with the right mouse button. But I add Trimmomatic, I add PCR Clean, and this time I use, for example, Bowtie for mapping. After mapping, I need to go from mapped reads, from read positions, to expression level

quantification, which is performed by RSEM Expression Table, and that is all. Then the pipeline name. To start this pipeline I would press Run Pipeline, but I won't do it for now. Okay, is everything okay with this simple test? Okay. Anyway, it's just an example, and as you see, we can easily include different modules for each step of our analysis. I'm just going on in my order, because these are not essential things; I'm just giving several comments for people

who have already created this pipeline. There are different modules. For example, adding mapping on the genome plus the Cufflinks and Cuffmerge modules lets us add steps for identifying novel isoforms. Algorithmically it's not so simple. But all these modules, all these algorithms, are inside the platform, so usage is really simple: we add modules to the pipeline and wait for the result. More modules mean more execution time. But that's not a problem for most experiments, where the data is collected over weeks, months,

and sometimes even years, so a few additional days of analysis are often not essential. On the other hand, everyone definitely wants faster analysis. Vladimir? Yes, there are some questions that Sahil wants to ask on behalf of some people. [Music] Okay, so as I understood it, the question is what these buttons actually do. Could you explain in a little more detail what Trimmomatic and PCR Clean do, so that it's clear to everyone they're not just buttons on the screen? Okay, thank you very much for your question

and let us return to our standard scheme of RNA sequencing analysis, which follows the steps of experimental data preparation. First, we ligated adapters to our short sequences, and a standard pipeline may want to remove these sequences. This is done by the first module we added, Trimmomatic. Trimmomatic looks at the ends of reads and tries to identify these standard adapters. The module also has functionality to try to identify novel adapters that are not in its database. Then it trims, removing these adapters from the reads we have sequenced.
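To make the trimming idea concrete, here is a simplified Python sketch. It is not Trimmomatic's algorithm, which uses error-tolerant seed-and-extend adapter alignment and a sliding-window quality rule. Instead it assumes an exact adapter match, which may be cut off at the 3' end of the read, and a plain quality threshold for trailing bases. The adapter string in the usage lines is only illustrative.

```python
def trim_adapter(read: str, adapter: str, min_overlap: int = 3) -> str:
    """Remove a 3' adapter, allowing it to be cut off at the end of the read.

    First looks for the complete adapter inside the read; otherwise looks for
    the longest adapter prefix (at least min_overlap bases) ending the read.
    Exact matching only: real trimmers tolerate sequencing errors.
    """
    if min_overlap < 1:
        raise ValueError("min_overlap must be at least 1")
    pos = read.find(adapter)
    if pos != -1:
        return read[:pos]
    for k in range(min(len(adapter) - 1, len(read)), min_overlap - 1, -1):
        if read.endswith(adapter[:k]):
            return read[:-k]
    return read

def trim_trailing_low_quality(read: str, quals: list, threshold: int = 20):
    """Cut bases from the 3' end while their Phred quality is below threshold."""
    end = len(read)
    while end > 0 and quals[end - 1] < threshold:
        end -= 1
    return read[:end], quals[:end]

adapter = "AGATCGGAAGAGC"  # illustrative Illumina-style adapter prefix
print(trim_adapter("ACGTACGTAGATCGGAAGAGCTTT", adapter))  # ACGTACGT
print(trim_adapter("ACGTACGTTTAGATCG", adapter))          # ACGTACGTTT
print(trim_trailing_low_quality("ACGTAC", [30, 30, 30, 30, 10, 5]))
# ('ACGT', [30, 30, 30, 30])
```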

Then we discussed PCR duplicates, so our next step was to remove them. This is what the PCR Clean algorithm does. It looks for pairs, triples, quadruples, and so on of identical reads and keeps only one copy, which is how PCR duplicate removal works. The next step is mapping these short reads onto genes: for each short segment, we want to find out whether the read has a good position on some gene.
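Here is a minimal Python sketch of the duplicate-removal rule described above. It keeps one copy of each group of identical reads and reports the fraction of reads removed as duplicates. It assumes reads are compared by exact sequence. The actual PCR Clean module may use different criteria, so treat this as an illustration of the idea only.

```python
def remove_exact_duplicates(reads):
    """Keep the first occurrence of each identical read.

    Returns the deduplicated reads (original order preserved) and the
    fraction of input reads that were removed as duplicates.
    """
    seen = set()
    unique = []
    for seq in reads:
        if seq not in seen:
            seen.add(seq)
            unique.append(seq)
    removed = len(reads) - len(unique)
    fraction = removed / len(reads) if reads else 0.0
    return unique, fraction

reads = ["ACGTTG", "GGCATA", "ACGTTG", "TTACGA", "ACGTTG"]  # hypothetical reads
unique, dup_fraction = remove_exact_duplicates(reads)
print(unique)        # ['ACGTTG', 'GGCATA', 'TTACGA']
print(dup_fraction)  # 0.4
```

The function only needs hashable items, so passing (mate1, mate2) tuples would apply the same rule to paired-end reads.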

So after this stage we've got positions on the transcript set for each read. Some reads have a single position, some can be mapped to several locations, and for some reads we may fail to identify their origin at all. All this information comes from the mapping module, for example, since we are mapping on the transcriptomic data, Bowtie, BWA, or similar tools. The final step is to go from this mapping, from read counts, to the quantification of expression levels, and it's performed

by the RSEM Expression Table module. The algorithms behind these names are definitely not simple, especially for mapping and quantification, but the purpose of each module is just as I described. So now let us have a very short technical break, three or four minutes. After the break we'll discuss the standard units of quantification, and why we should go from raw read counts to more complex measures. So, just for a moment, just

for several minutes. Okay, can everyone hear me? Okay, during this break it would be helpful... I assume everybody had a chance to take a look at the first pipeline we created. Let me turn on my video here. Okay. So while we're taking this short break, I'm going to turn on the microphone for a participant. Sahil, please just check whether anyone has questions. [Music] Sorry, I could not hear that. Yeah, so you're asking about the quality of reads, and I think we can

touch on that subject. But in the meantime, what I want to do in this short break is collect all the questions, so that we can answer them one by one. We noted it down: question number one is the quality of raw FASTQ files before you start the mapping. [Music] ...by using this pipeline. Okay, about the isoforms, just a quick note: we will get to that, great question. And I think, as Julia showed at the beginning, we had a method to combine multiple sources

of omics data and identify relationships between them; I think we will get to that as well. Another question was about the logic of the steps: on what basis are they connected? Okay, so what is the logic behind selecting specific steps? There are different methods that perform each task. But generally, I hope we discussed the logic of experimental data preparation, and here each step of the analysis simply follows an experimental step.

In two or three minutes I'll explain that in reality several steps can be omitted; for example, pre-processing can be omitted. But generally this pipeline just works back from the reads we get out of the sequencing machine to the initial data. We added adapters, so we remove them. We potentially added PCR duplicates, hence we remove them. In this way we get back to the initial set of short sequences, the reads originating from the original transcripts. Then we try to map all these short sequences onto genes in order to understand which genes are associated with

many segments, which are the genes with high expression levels. We also see which genes have almost no short segments associated with them, which are the genes with low expression. Unfortunately, if we go much deeper into the details now, we won't finish even a short overview. These approaches have been studied and developed for, I think, at least fifteen years, and there are great algorithmic ideas behind them. But for now we're focused just on an overview of these steps. Okay, questions? Yes, and maybe you can quickly address those. So the

question number one was how to know the quality of raw FASTQ files before the mapping. Question number two was about the logic of the different steps of analysis. Question number three was about making correlations between multiple omics data. Question number four was about identification of isoforms. I know you probably don't want to address everything at the same time, but maybe you can just give an overview of those questions. Okay, so starting from the beginning: we can still use pre-processing steps and look at the output statistics of what we're doing with

these models provide us a statistics for example with the ratio of P shard of reads identified as PCR duplicates and has the reads which have removed we see the number of reads for which adapters has been have been treated but anyway we can there are ways currently not implemented as modules as we use them really to look just at mean base quality reported by the sequencing machine so fast queue files along with a nucleotide for each position contains an estimate of equality for this position and just look at a number of positions with high quality

and low quality to the number of breeds containing only high quality basis it's possible but another possibility is to get statistics after the mapping for example to get number of reads or percentage of reads successfully mapped on genes to get ratio to get proportion of reads that had a unique location with respect to genes and to make a conclusion about the quality of this fescue file based on these overall statistics so prime primarily number of PCR duplicates that were removed number of reads that were not mapped that were mapped uniquely and that were mapped simultaneously

and on different locations so this is a short well not complete answer to the first question so going further about well for example identification of novel isoforms when we try to identify novel isoforms we are looking at reads mapping them not on transcriptome but on genome or better to say on exotic segments of the genome and these reads can link one exam to another exam so reads can start inside one exam and have there are the end of this read in the Artic it's on so this read just generates a link between these exams and

we have a map of all possible links so of all exon exon links supported by reads having this map we can try to identify genes so combinations of exams corresponding to a fix gene or we can find reads well logically let me avoid explaining here the details well fairly well mapped on a part of a genome that is not currently identified identified as known coding region so it's the logic behind identification of novel isoform so i think these are just short answers to two of the four questions well let me avoid discussing links between multiple

omics types today as it's really along topic so Julia just mentioned that there are some in-house tools we have for these analysis for example the Association there are some publicly available tools for example for multi-omics clustering these tools are also collected certain tools are also collected and the platform so there are multi-omics analysis sections well it's these are just some possibilities some are tommix clustering some associations and a number of additional models but it's just a topic for another discussion unfortunately I'm afraid it will take long even to make an introduction here and there was

the fourth question so could you please remind it okay if there are more questions I can also maybe say that we will have a number of breaks further on and it would be probably helpful if we can move on with the material so that we can do some practical aspects of building the pipelines and understanding their logic and as we get to the next stage we can take some more questions there okay fine so apparently first I want to say that there are at least there are two main types of bizarre duplicates artificial posad replicates

which originate from these step of library preparation PCR amplification but there may also be natural TCR duplicates so while performing these pre-processing step removing of these are duplicates you definitely should keep in mind the details of your experiments primarily well as one of the major parameters the amount of RNA material you started with enhance the number of the share implication cycles you performed and if you're especially if you are dealing potentially with short sequences for example not genes but you have focused on micro rna's then these beasts are the shared applicant removal step should be

used with caution or even completely devoted there are some sources so here you may find links that state that these pre-processing steps mister duplicate removal adapter removal well generally improve the quality of the final expression level quantification this improvement is moderate so pre-processing can be even avoided with getting good results so let us currently try to discuss what can what measures for expression level quantification can be used and the first measure we already discussed is very simple it is number of reads mapped on these gene first we can work with just raw counts the total

number of reads mapped on a gene a slight problem here is that several that some reads in many cases amount of reads are mapped simultaneously on different locations so we may want to split this one count count associated with one read between all the locations to which this read has been mapped the simplest way is to split this one count uniformly but in many cases more complex statistical models can be used and for example these are same module we added to the pipeline uses a relatively complex bias and approach so the idea is that we

can perform this splitting of counts associated with the same read in a more sensible way but in any way if we're dealing with counts either raw or so processed so called expected counts after this splitting what we have we may have two genes and for one gene will have many reads mapped of this gene and for another gene we may have much lower of reads mapped on this gene can we say that well natural expression level of the first gene is for example expression level measured in the number of mRNA molecules associated with a gene
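The uniform splitting of multi-mapped reads described above is easy to sketch. The Python below is a minimal illustration under stated assumptions: alignments are given as a hypothetical mapping from read IDs to the genes each read hits, and the second function is a toy expectation-maximization reallocation (each shared read is split in proportion to the current abundance estimates) that only conveys the idea of a "more sensible" split. It is not RSEM's actual model, which accounts for much more, for example transcript lengths.

```python
from collections import defaultdict


def uniform_split_counts(read_alignments):
    """Each mapped read contributes one count in total, divided equally
    among all genes it maps to. read_alignments: {read_id: [gene, ...]}."""
    counts = defaultdict(float)
    for genes in read_alignments.values():
        if not genes:
            continue  # unmapped reads contribute nothing
        share = 1.0 / len(genes)
        for gene in genes:
            counts[gene] += share
    return dict(counts)


def em_split_counts(read_alignments, n_iter=100):
    """Toy EM (hypothetical helper): repeatedly split each multi-mapped read
    in proportion to current gene abundance estimates; lengths are ignored."""
    genes = sorted({g for gs in read_alignments.values() for g in gs})
    if not genes:
        return {}
    abundance = {g: 1.0 / len(genes) for g in genes}
    counts = {}
    for _ in range(n_iter):
        # E-step: expected number of reads assigned to each gene
        counts = {g: 0.0 for g in genes}
        for gs in read_alignments.values():
            if not gs:
                continue
            norm = sum(abundance[g] for g in gs)
            for g in gs:
                counts[g] += abundance[g] / norm
        # M-step: abundances are the normalized expected counts
        total = sum(counts.values())
        abundance = {g: c / total for g, c in counts.items()}
    return counts


if __name__ == "__main__":
    reads = {
        "r1": ["geneA"], "r2": ["geneA"],  # unique to geneA
        "r3": ["geneA", "geneB"],          # multi-mapped read
        "r4": ["geneB"],                   # unique to geneB
    }
    print(uniform_split_counts(reads))  # {'geneA': 2.5, 'geneB': 1.5}
    print(em_split_counts(reads))       # geneA close to 8/3, geneB close to 4/3
```

In the toy example, geneA has more unique reads, so the EM-style split hands it a larger share of the ambiguous read than the 50/50 uniform split does; in both cases the total still equals the number of mapped reads.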

Can we say that the first gene has a higher natural expression level, measured for example as the number of mRNA molecules associated with it, simply because it has more reads? Unfortunately, no, and the reason is very simple. If a gene is longer, then shattering the mRNA molecules associated with it into parts simply gives more pieces: longer mRNAs give more pieces than short mRNAs even when the number of molecules is the same. So to get a meaningful quantification, we should take the length of the gene into account and normalize with respect to it.

Another idea behind scaling, or normalization, is the following. Imagine that we sequence one sample and then sequence the same sample again with greater depth, generating twice as many reads. Generally we will then see a two-fold increase in the number of reads mapped to each gene, simply because we have more reads in our collection. But it is the same sample, and it is natural to expect the same estimates of expression levels. That is why we should also take sequencing depth into account, measured for example as the number of reads mapped to transcripts, and that is why we use another scaling factor, the total number of mapped reads. This gives a very standard unit for expression-level quantification, FPKM, fragments per kilobase per million mapped reads: the number of reads is scaled by the gene or transcript length and by the total number of reads. Another usual unit is TPM, transcripts per million. For a fixed sample, and only for a fixed sample, TPM values are simply proportional to FPKM values, but the coefficient of proportionality is chosen so that the TPMs of a given sample sum to exactly 1 million.

Let us briefly discuss the logic behind TPM, because, unlike FPKM, TPM has a very natural biological interpretation. Consider the following quantity: the number of reads mapped to one gene, multiplied by the mean read length and divided by the gene length. What is the sense behind this formula? The number of reads multiplied by the mean read length is the number of sequenced nucleotides associated with this gene. After dividing this product by the gene length, we instead get the number of molecules, the number of mRNAs associated with this gene or isoform that we sequenced. If we sum these quantities over all genes, we get the total number of sequenced mRNA molecules, and if we take the quantity for one gene and divide it by the total, we get the fraction of mRNAs, of transcripts, associated with the gene of interest among all sequenced molecules. Multiplying this fraction by 1 million simply scales it. So TPM tells us the following: in each million mRNA molecules in the sample, TPM is the number of molecules associated with the gene we are looking at. For example, if the TPM is 10, then out of each million molecules approximately 10 are associated with this gene.

Another question related to quantification of expression levels is scale. All these quantities, read counts, FPKM, and TPM, are measured in the so-called linear scale, the original scale. For many types of analysis these linear-scale values are log-transformed, that is, we take the logarithm. There are multiple reasons for this transformation. One of the main reasons is statistical: for the majority of genes the distribution of expression levels is log-normal, so after taking the logarithm we get a normal distribution, which is very convenient for analysis in terms of classical probability theory and mathematical statistics. Another reason is that, in biology, relative differences in expression level are more meaningful than absolute differences. If we say that the difference between expression levels is 100 TPM, it says almost nothing, but if we say that the expression level increased two-fold, three-fold, or ten-fold, it gives more information. Log scaling simply turns these relative differences into differences that can be handled with standard arithmetic means and differences: in log2 scale, a two-fold difference is simply a difference of 1 log2 unit, and a difference of 3 log2 units is equivalent to an 8-fold difference in the original scale. The problem with going to the logarithmic scale (frankly speaking, not an essential problem) is that the logarithm is defined only for numbers greater than 0, and for many genes the expression level is just 0; if we take the logarithm, we get minus infinity. That is why a small shift is introduced. The value of the shift may vary; one of the standard values is simply 1, but it's not the only option. In any case, differences in this shift affect only genes with low expression levels, and we should keep in mind that for lowly expressed genes the quantification is experimentally inexact, so we can't be sure that the expression level of a lowly expressed gene is measured precisely. That is why genes with low expression levels are in many cases simply removed from the analysis, but log scaling is also a standard step of the analysis.

So running the pipeline we just built simply gives us estimates of expression levels, in the units we set, for the samples we uploaded to the platform. To see the results and compare them for different types of pre-processing, with or without PCR duplicate removal and with or without adapter trimming, you may look (we won't do it now) at the files in the output files subfolder: there is a next-level subfolder for the RNA-seq comparison with an Excel file. For two samples, you have FPKM values without any pre-processing, with pre-processing that includes only adapter trimming and low-quality trimming, and with the full pre-processing we discussed. In short, if we compare the results for no pre-processing versus PCR duplicate removal, or for the other cases, we see that for the majority of genes the expression estimates are roughly the same. In this plot, the x-axis gives the expression level estimated with one type of pre-processing and the y-axis the estimate with the other type, and for the majority of genes we get the same or approximately the same results. But there are some transcripts for which the difference is really large. For example, the dot I'm pointing at seems not very far from the line x = y, but the difference is more than one unit, and because we are working in log2 scale, that is more than a two-fold difference.
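The FPKM and TPM definitions and the shifted log2 transform discussed above can be written out directly. This is a minimal Python sketch, assuming the count list covers all genes in the sample (so its sum stands in for the total number of mapped reads) and ignoring refinements such as effective transcript length that real quantifiers apply. The TPM function follows the molecule-count argument from the talk: reads times mean read length divided by gene length, rescaled to sum to one million. The mean read length cancels in that ratio, so it is only an optional argument here.

```python
import math


def fpkm(counts, lengths_bp):
    """Fragments (reads) per kilobase of gene length per million mapped reads."""
    total_mapped = sum(counts)
    # c / (length / 1e3) / (total / 1e6), written to avoid rounding error
    return [c * 1e9 / (length * total_mapped)
            for c, length in zip(counts, lengths_bp)]


def tpm(counts, lengths_bp, mean_read_length=100.0):
    """Transcripts per million, following the molecule-count argument:
    reads * mean read length / gene length ~ number of sequenced molecules."""
    molecules = [c * mean_read_length / length
                 for c, length in zip(counts, lengths_bp)]
    total = sum(molecules)
    return [1e6 * m / total for m in molecules]


def log2_shifted(values, shift=1.0):
    """log2(x + shift); the shift keeps zero expression finite."""
    return [math.log2(v + shift) for v in values]


counts = [100, 100, 0]
lengths = [1000, 2000, 1500]
print(fpkm(counts, lengths))    # [500000.0, 250000.0, 0.0]
print(tpm(counts, lengths))     # approximately [666666.7, 333333.3, 0.0]
print(log2_shifted([0, 1, 7]))  # [0.0, 1.0, 3.0]
```

The two genes have equal read counts, but the shorter one gets twice the FPKM and TPM, which is the length effect described above. Within the sample, TPM/FPKM is the same constant for every gene, and a difference of 3 in the shifted log2 values corresponds to an 8-fold difference in x + 1.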

The large-scale experiment gives us the general view, but for individual genes, the individual players, we need additional verification and validation if we want to work further with a small subset of genes or isoforms identified in this large-scale experiment. In any case, this analysis simply gives us huge tables, tens of thousands of numbers for each sample. If we map only to known transcripts, we have these numbers only for known transcripts. If we add modules for the identification of potential novel transcripts, which definitely need additional wet-lab validation, then we also have estimates for the potential novel transcripts; as you see here, these are the transcripts whose IDs begin with XLOC. But the question is what we can do further with this amount of data. These expression-level tables are not the end of the story; they are just the beginning, and now we'll start discussing what we can do with these data. So let us make a decision: do we need a break here? Yeah, please, a bathroom break or a brief break. Okay. Okay, so now we'll try to see what we can do with these tables. We'll use real tables for the cell line experimental data Julia briefly described; out of these tens of thousands of genes we removed... Okay, okay, so a break, a short break. Okay. Well, I just noticed that the microphone actually doesn't work that great when you take it far away. Is it? I know it's fine, but I think the farther you go from the computer... [Music] Okay, so let us know when we should start again. There was a request from the audience to see the speaker on video, especially when there are questions, so we are responding to those requests. Okay, so let's start again; please continue.

Okay, so now we'll try to see what we can do with these expression tables, and we'll start with a very simple analysis. We have about 50 cell lines, all originating from patients with breast cancer, and it's probable that all these lines are similar to each other, as we are dealing with cell lines associated with the same pathology. But how can we know whether it's true or not? It's really impossible to go through all these numbers by eye, and our visualization stops at a dimension of two or three: with two attributes we can visualize our data as dots in a plane, and three attributes let us associate samples with dots in 3D space, but we have thousands, tens of thousands, of genes, so this visualization looks impossible. However, we can try to find an optimal plane that preserves as much information as possible and still gives us an opportunity for visual inspection of the data.

So now I'll try to explain what I mean using a very simple example, and then we'll return to the presentation. What you see on your screen is a 2D projection of a 3D object. If we choose the plane of projection poorly, we have a very poor understanding of the object we're dealing with: it's the same object, but we cannot understand what it is from this projection. Changing the plane of projection gives us a better and better view of the object; for example, in this projection you can really understand what the object is. Okay. If there is any problem with the video, please let us know. I think it is working. It's working? Okay, great. A very similar thing can be applied to the data we're dealing with: we can try to find an optimal plane, or an optimal 3D space, or optimal planes, that help us visualize the data we have.

So let us test what I'm talking about: let us create and run our pipeline and inspect the result. We'll be working with unsupervised analysis, since in this analysis we know nothing about the data underlying our samples; we start at least with the assumption that all samples are similar, as they originated from the same pathology. To perform this simple kind of analysis, we select the unsupervised analysis section and upload an expression table for the analysis: the file cell lines expression data.txt. We'll take a look inside this file in a minute or two. So we select the cell lines expression data file; it's among the main files in the folder we are working with today. Here it is, great. We select this file, cell lines expression data.txt, and press the Open button, and the file is uploaded to the system. Unlike the previous analysis, here we really transfer the file from your computer to the system, so it may take several seconds; please let us know in case of any problem. So again, we add the file cell lines expression data, and that's it. Then we go on: Continue, Continue, Start. Our principal component analysis module is PC draw, since we want plots as output. The default options of PC draw are okay here; if you need an explanation of these options, you can just point at the info icons to see short comments. Here we ask for a visualization of the first three principal components, and we need no scaling or centering. The transpose option tells which information goes by rows and which by columns; in our case, columns are samples and rows are gene or isoform expression values. So here we are; that's the end of our pipeline. For the pipeline name, say PC draw, or any name. After the name is saved, simply run the pipeline by pressing the Run pipeline button. That's it: the pipeline is starting, which may take several seconds. Once the pipeline has started, you can monitor its progress here, in My Pipelines, which reports the results and everything else for all pipelines you previously ran. Again, clicking the name brings us to the screen with the pipeline progress.
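Finding an "optimal plane" is what principal component analysis does: it finds orthogonal directions of maximal variance and projects each sample onto them. The sketch below is not the PC draw module; it is a minimal NumPy version, assuming a genes-by-samples table like the one described (samples in columns, hence the transpose flag). It always centers each gene, as the standard definition of PCA requires, and applies no scaling. The function name and the toy data are hypothetical.

```python
import numpy as np


def pca_scores(expr, samples_in_columns=True, n_components=3):
    """Coordinates of each sample on the first principal components.

    expr: 2-D array of (log2) expression values.
    samples_in_columns: True for a genes x samples table, which is then
    transposed so that rows are samples and columns are features.
    """
    X = np.asarray(expr, dtype=float)
    if samples_in_columns:
        X = X.T
    Xc = X - X.mean(axis=0)           # center every feature (gene)
    U, S, _ = np.linalg.svd(Xc, full_matrices=False)
    k = min(n_components, S.size)
    scores = U[:, :k] * S[:k]         # projections onto the principal axes
    explained = S**2 / np.sum(S**2)   # fraction of variance per component
    return scores, explained[:k]


# Toy genes x samples table: samples 1-2 and 3-4 form two groups.
expr = np.array([
    [0.0, 0.0, 10.0, 10.0],  # gene 1
    [1.0, 1.0, 11.0, 11.0],  # gene 2
    [5.0, 5.0, 5.0, 5.0],    # gene 3 (constant, carries no information)
])
scores, explained = pca_scores(expr)
print(np.round(scores[:, 0], 3))  # PC1 separates the two groups
print(np.round(explained, 3))
```

Plotting the first two columns of `scores` against each other gives the PC1 versus PC2 plane; columns 1 and 3, or 2 and 3, give the other planes produced when three components are requested. The sign of each component is arbitrary, so only the relative positions of samples matter.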

While the pipeline runs, let us take a look at the file we uploaded, to understand what we have put into the system. It is a very simple file in which, for each sample (each cell line) and for each of about 7,000 genes, we have an expression value. Expression here is measured in log scale, log2 FPKM: we use FPKM units with log2 scaling. So initially each cell line is a point in a space with close to 7,000 dimensions. Well, I reload the page to see the progress; for me it's almost complete. To understand the picture simply, we project all this data onto a 2D plane. If you reload the page, you'll see the progress. It won't take too long, but it may require several tens of seconds or a couple of minutes to complete; in this platform mode you will also get an email notification after the pipeline is finished. Okay, for me it's done, so let us wait a bit more; reloading updates the progress indicator, and after the pipeline is completed you see the 100% done label in the top right corner. If your pipeline is completed, you may take a look at the output. The main output of the pipeline is stored in the Download pipeline output files folder; in the left panel you see Download pipeline output files, and these are the main output files. Going in here, we have, for example, a PDF file with PC plots: this is the visualization we wanted. I download this file and open it. Each dot is associated with one sample. We started our discussion from the fact that all cell lines originated from patients with the same pathology, and we wanted to see whether all cell lines are similar. However, here we see at least two groups of samples; we definitely see a clear separation of samples into two groups, and some may see an additional separation into two groups in the left or in the right part. At the same time, we know that there are multiple subtypes of breast cancer, and we may hypothesize that this separation is related to the subdivision into subtypes. The authors of the study that produced the data we are working with classified all the samples into four types of breast cancer: luminal subtype, basal subtype, claudin-low subtype, and normal-like subtype. So in fact we have information about the clinical subtype of each sample, and it would be interesting to see where the subtypes are located in these plots. We could describe the picture, but a better way is to generate it. In the same main files folder, we have an additional file, expression data marked: it is the same file, but each sample is marked by its subgroup. So let us generate the PCA plot again, but upload this file, cell lines expression data marked, to get different colors for dots associated with different subtypes. The analysis is the same, but the picture will include information about the predefined classes. To do this, we create one more pipeline, the same one we used, in unsupervised analysis. In this unsupervised analysis we upload the file, but this time we upload the file expression data marked. [Music] We upload the file expression data marked; that's it. So again, the only difference is that here we have predefined classes for samples, and we'll see where these classes are located in the planes. All the following steps are the same, as it's a simple analysis: we upload the data and then just repeat the steps we did: Continue, PC draw, for example with the default parameters, give a name to the pipeline, and run it.

Okay, maybe this is a good time to see if everybody is following along and whether there are any questions. There is one: do the gene names or the data need to be in this particular format, with samples versus ...? Did you get that? Oh, could you please repeat the question, because the audio is a bit worse than I want it to be. Yes, I understand: the question is about formatting the input file for the PCA, how you have to organize your transcripts and samples. A very good question. Here we have multiple options. First, for principal component analysis, transcript or gene IDs are simply ignored: in this type of analysis we have features, but feature names are not essential. Then, we can arrange samples by columns and features by rows, or vice versa. Let me show it on the screen; I upload any file. All we need here is to tell the system what information goes by rows and what goes by columns: in our case, when samples are columns, we set transpose to yes, and in the opposite case we simply set transpose to no. If we have additional information about classes, groups, or something like that, we simply add a line marked by a keyword, here the keyword groups, with the name of the group for each sample. So again, both options are possible for what goes by rows and what goes by columns. Okay, are there any other questions?

Meanwhile, I've got a question for you. If we look at the previous PCA plot, here it is on my screen, and remember that there are four subtypes: what's your idea of how these subtypes would be located? Two on the left and two on the right, or something different? Can we make a prediction looking at this picture? Just a gut feeling? Can you be the voice of the feeling? So what does the voice of the feeling say? Frankly speaking, I also have no hypothesis looking at this figure, but anyway, let's look at the correct answer. Hopefully the pipeline is completed for you as well; please let me know if I'm wrong. For the next pipeline, we'll simply have a look at the new plots we have here. What we see is that the luminal subtype is very well separated from the three other subtypes in the PC plot, but those three subtypes are relatively close to each other in the first plane, principal component 1 versus 2. We set the number of principal components to be drawn to three; that is why we also have planes for principal components 1 and 3 and for 2 and 3. In the next plane you see that the claudin-low subtype is also well separated from basal and normal-like, but the basal and normal-like subtypes look similar. Anyway, please pay attention to the fact that initially we knew nothing about the subtypes, but this analysis gave us an unsupervised yet very clear, very definite separation into at least two groups. But this is visual inspection, and visual inspection can hardly tell us what a good, acceptable number of clusters, of groups, is for a given set of samples. Here we see at least two, but if we knew nothing about the true subclasses, we could not set the number of subclasses, 2, 3, 4, 5, and so on, based just on visual inspection.

So let us create one more pipeline for unsupervised analysis of the data, in the same section, to perform formal clustering, in our case hierarchical clustering of our samples. Again we go to the unsupervised analysis section and upload the file expression data. This time we do not need marks, we don't need information about subtypes or classes; that's why we upload the file cell lines expression data again. Again it may take some time. We start creating the pipeline, and this time we want to perform clustering, for example using the hclust hierarchical clustering module. Here it is; let me know if everybody is here. Here we'll set slightly different options: for example, we'll set the expected number of clusters to 4; if you prefer 5, please keep 5, absolutely not a problem. Let us change the linkage type, for example to ward.D2. This is more important, because linkage type is an essential parameter of hierarchical clustering. So the linkage type is changed to, for example, ward.D2; it's definitely not the only possible option. And that's all. Again, transpose simply tells which information goes by columns and which by rows. That's it. We give the pipeline any name and start it. Great.

While it's running, let's briefly discuss what this pipeline does. Initially we know nothing about the number of groups, so we look at our samples, here illustrated by dots in a plane, and associate one group with each individual sample. So initially, in the bottom-up approach of hierarchical clustering, the number of potential clusters, potential groups, equals the number of samples. Then we simply unite the two closest samples into one group and treat them as an inseparable meta-sample, or quasi-sample: for us it is now one meta-sample instead of two individual samples, one dot instead of two. Then we continue the same process: we look for the two samples or meta-samples that are closest to each other and unite them into one group, so at each step the number of groups is reduced by one. But here, whereas initially we needed to set the distance between individual samples, now we need to define the distance between meta-samples, that is, the distance when at least one argument is not an individual sample but a group of samples, a quasi-sample. This distance can be defined in several very different ways: the minimal distance between samples from the first group and samples from the second group, the maximal distance, the mean distance, or a more complex definition. Which formula is used to define the distance between these quasi-groups is determined by the linkage type. The process goes on and on; at each step we reduce the number of groups by one, and we finish with one group that unites all the samples. In this visualization it's hard to understand the logic of how the process goes on, but there is a standard visualization for the output of hierarchical clustering, the so-called dendrogram.

So let us have a look at the progress of our pipeline and inspect what's going on there. Mine is done; please let me know if yours is done as well. If it is, and we want to inspect our results visually, we have a PDF file for cell lines expression data from hclust, and here you see the results. The samples, in our case breast cancer cell lines, are on the line at the bottom, and step by step these cell lines are united into groups. The height at which two subgroups are united shows the measure of dissimilarity between these two groups: if grouping is performed at a low height, the united groups are similar; if we unite two groups at a large height, the groups are not similar at all. So this figure clearly shows that we have two well-defined clusters, and potentially both clusters can be further divided into two subgroups. If you take a close look at this figure, you'll see that these groups coincide with the subtypes we have: for example, one of our subtypes, the normal-like subtype, is located in the rectangle marked in green. To cut this tree into two groups, we select the cut height at the level of 300 to 400. If we cut the tree at a lower height, we get four groups, and if we keep reducing the cutoff height, we get more groups. But please take a look at what the next separation will be if we reduce the height to approximately 200: you'll see that the HCC202 cell line is identified as a separate cluster, a separate outlier, the same cell line that was identified in the analysis explained by Julia. Anyway, four clusters are seen really clearly in this figure, and it's no wonder that the luminal subtype is subdivided into two classes here, as it indeed includes two second-level subtypes, luminal A and luminal B. We obtained this division into clusters in an unsupervised manner, looking at all genes after a very simple filtration that excluded genes with consistently low expression levels.
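The bottom-up procedure described above can be sketched in plain Python. This is a minimal illustration, not the platform's hclust module: it uses Euclidean distance, implements only the three linkage rules named in the talk (minimal, maximal, and mean distance), and does not reproduce ward.D2. The function names and the toy points are hypothetical. For these linkages, stopping the merging when a chosen number of groups remains is equivalent to cutting the dendrogram at the corresponding height.

```python
import math
from itertools import combinations


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Linkage rules: how far apart two groups are, given all pairwise
# distances between their members.
LINKAGES = {
    "single": min,                            # minimal distance
    "complete": max,                          # maximal distance
    "average": lambda ds: sum(ds) / len(ds),  # mean distance
}


def agglomerate(samples, n_clusters=1, linkage="average"):
    """Bottom-up hierarchical clustering.

    Starts with one group per sample and repeatedly merges the closest
    pair of groups until n_clusters groups remain. Returns the final
    groups (lists of sample indices) and the merge history as
    (group_a, group_b, height) tuples, i.e. what a dendrogram draws.
    """
    link = LINKAGES[linkage]
    groups = [[i] for i in range(len(samples))]
    merges = []
    while len(groups) > max(n_clusters, 1):
        best = None
        for a, b in combinations(range(len(groups)), 2):
            d = link([euclidean(samples[i], samples[j])
                      for i in groups[a] for j in groups[b]])
            if best is None or d < best[0]:
                best = (d, a, b)
        height, a, b = best
        merges.append((groups[a], groups[b], height))
        merged = groups[a] + groups[b]
        groups = [g for k, g in enumerate(groups) if k not in (a, b)]
        groups.append(merged)
    return groups, merges


points = [(0, 0), (0, 1), (10, 10), (10, 11), (30, 30)]
groups, merges = agglomerate(points, n_clusters=2)
print(sorted(sorted(g) for g in groups))  # [[0, 1, 2, 3], [4]]
for a, b, h in merges:
    print(a, b, round(h, 2))
```

With these toy points, the isolated fifth point stays in its own group when two groups are requested, much like an outlier splitting off as its own branch; the merge heights printed at each step are the heights at which a dendrogram would join the corresponding branches.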

so we can we get more information looking at much lower number of genes it seems that we lose many information limiting our focus on just a few genes but at the same time if we analyze large of genes the majority of genes the majority of transcripts simply introduce the noise so there are only some genes related to the question of interest and reducing the number of genes were looking at can potentially improve signal-to-noise ratio indeed we'll lose some information but still we can keep much information and greatly reduce the level of noise for example let

us look at what can be done with a similar type of analysis if we limit our attention to 15 genes out of our initial set. So let us create two pipelines similar to the ones we just created: the same types of analysis, but limited to 15 genes. Again, we're in the unsupervised analysis section. First, for the PCA analysis, we upload the "cell lines 15 genes marked" file in order to see the complete figure. Here it is; it's small, there are only 15 genes, not 7,000. So, PCA draw analysis.
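What the PCA step computes can be sketched with NumPy's SVD. This is a minimal sketch under stated assumptions, not the platform's module: it assumes the input is a samples × genes matrix that is already log-transformed, centers each gene, and returns each sample's PC1/PC2 coordinates plus the fraction of variance those two components explain. The 20 × 15 matrix below is hypothetical simulated data with two groups that differ in five genes.

```python
import numpy as np


def pca_2d(X):
    """Project samples (rows of X) onto the first two principal components.

    X is a samples x genes matrix, assumed already log-transformed.
    Returns (scores, explained): scores has shape (n_samples, 2) and
    explained holds the fraction of total variance captured by PC1 and PC2.
    """
    Xc = X - X.mean(axis=0)                        # center each gene
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    scores = U[:, :2] * S[:2]                      # sample coordinates on PC1/PC2
    explained = (S ** 2) / np.sum(S ** 2)          # variance fraction per component
    return scores, explained[:2]


# Hypothetical example: 20 samples x 15 genes, two groups differing in 5 genes.
rng = np.random.default_rng(42)
X = rng.normal(8.0, 0.5, size=(20, 15))
X[:10, :5] += 3.0
scores, explained = pca_2d(X)
print("PC1/PC2 variance fractions:", np.round(explained, 3))
print("PC1 sign, group 1:", np.sign(scores[:10, 0]))
print("PC1 sign, group 2:", np.sign(scores[10:, 0]))
```

With few informative genes, the group difference dominates PC1, so the two groups fall on opposite sides of the PC plane.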

You see, PCA draw analysis is the first pipeline we're going to create. The second pipeline, which we create in a very similar way, is the hierarchical clustering pipeline. In this case, we upload the non-marked file, "cell lines 15 genes", and again perform the same steps: we upload this small file and create a hierarchical clustering pipeline, for example with 4 clusters. Again, you can keep any other number of clusters, as it does not affect the dendrogram we get. And again,

the linkage type, the algorithm for computing the distance between clusters of samples, is set to Ward.D2. We save it, complete this hierarchical clustering pipeline, and run it. Okay, we'll soon have the results for these pipelines. But in any case, if we look at these tables, we'll see that (just a moment, let me show you) in these tables the selected genes are identified by their Ensembl IDs. That information is hardly interpretable, since only a few of us remember the association of specific genes with their Ensembl IDs. So if we

want to get more meaningful information about the genes, such as gene names, let us run a third pipeline and then gather the results. The next pipeline we're going to run is in the Utilities section: we simply want to know which genes we are working with. So we upload our file, "cell lines 15 genes", and use the annotation module, which looks up the database and adds the standard gene symbol and GO categories to each gene in our table. So that's it: annotation, for example with the

default categories. Okay, so let me just repeat all the steps. The question is how to recover gene symbols and GO categories given, for example, Ensembl gene IDs. Technically, it can be done simply on the platform by creating a pipeline in the Utilities section: we upload a file with the genes for which we want to know gene symbols and annotations (just the file we're working with) and create a pipeline with the annotation module. We specify, for example, that gene or transcript IDs are located in column one of a table

that has a header, and that we want to add information including GO categories. So it is a very simple pipeline, that's it. Okay, let us look at the results and at what we get; some pipelines may already be completed for you and some not yet. In any case, for these 15 genes we have a much clearer separation of groups on the PCA plane. This is the figure you get out of the PCA analysis, and indeed we see that going to a lower number of genes, well, to the subset of genes

that we can call a gene signature, gives us a clearer picture of what's going on. Just a moment. And if we look at the results of the hierarchical clustering you obtained (okay, perfect), if you download the results of the hierarchical clustering, you'll see the dendrogram I have here, with additional marks. I just draw your attention to the fact that these 15 genes indeed give us a clustering into four clusters that perfectly coincides with the division into four subtypes. Reducing the number of genes, with an increase in the signal-to-noise ratio or an analog of

this signal-to-noise ratio, gives us a result perfectly concordant with what we're dealing with. So that is hierarchical clustering. And finally, we're waiting for the results of the annotation, which will also give us gene symbols and the other data types. But this is the result of the unsupervised analysis. Definitely, there are multiple other methods for unsupervised analysis; for example, for clustering, a well-known method is k-means. There are other clustering methods that may produce results different from hierarchical clustering and that may require different input. For

example, for k-means we do not have a clear visualization with a dendrogram, and we have to predefine K, the number of clusters or groups, in advance. But this initial analysis, linked with clinical or phenotypic data, gives us a separation of our samples into groups, and then we have another task: given this separation of samples into groups, how do we identify the group for new samples? For example, using our data set as a learning set, we identified that breast cancer patients can be divided into subgroups; so how do we understand what is

the subgroup for a new patient? That is the question for supervised analysis. So probably at this point we could have a short break, if you are a bit tired. And finally, in our final part, before a short hands-on practice, we'll briefly discuss the analysis in a supervised problem statement, when we initially have the division into subgroups that we could identify using unsupervised analysis. Okay, so, as I understand, in about 15 minutes. Okay, so in this case we can simply try a simple example, which will show you that indeed these

methods of analysis can simply be used to analyze a data set you initially know nothing about. What I'm talking about is the following: in the set of files you have, there's a subfolder, "hands-on". What is the data in this file? We took two additional projects, both dealing with breast cancer. In one project the samples were human samples, biopsy samples or tissues taken during surgery, and the other data set originated from a patient-derived xenograft (PDX) project. So human

breast cancer was implanted into mice and then taken out again from the non-human, mouse microenvironment. It's clear that the microenvironment in humans and in mice should be different, and thus the samples should also be different. We took the same 15 genes to make the analysis faster, but the samples here are intermixed: some samples are associated with humans, some with mice. So, could you divide this set into a human set and a mouse set? Probably not naming each one, but telling which samples belong to the first group and which samples belong to

the second, using the automatic modules we've discussed. This is to show that this analysis is really simple, in spite of the fact that the amount of information is relatively large: dozens of samples and 15 genes. So the task is to separate this dataset into two really different subsets, mouse-derived and human-derived samples. Is the task clear? As Sahil is walking around there, he'll let us know if everybody is following and if everybody has seen the file. It's in the original zipped folder that you

downloaded. There is a file in the "hands-on" folder, the only file there, with the intermixed datasets, human-derived and mouse-derived. Okay. And people who are just joining online, you can also let us know in the chat if you are following along or if you are having any issues. In the meantime, I also want to mention, Vladimir, that somebody is requesting clarification on the steps for the annotation pipeline, so if at some point we can review that again, that would help. Sure, sure. So probably a good option

is to give several minutes to implement this hands-on task and then return to the annotation pipeline. Okay, if you've started your pipelines and are just waiting for the results, please let me know, and we can briefly discuss the annotation and then go through some additional topics very briefly. Okay, so again, regarding annotation: here we're talking about a very simple annotation, taking Ensembl gene or transcript IDs and adding information that is easier for manual interpretation, I mean gene symbols, so just standard gene names, and Gene Ontology terms: cellular localization,

Gene Ontology functions, and so on. To perform this pipeline, all we need is the set of Ensembl gene or transcript identifiers; in our case, these identifiers are located in the first column of the table. So we simply upload this file, this one for example, and specify that we need to add information for the identifiers stored in the first column of the table, that the table has a header (so the first row is simply a header), and that we want to include Gene Ontology data in

the output. That is all. The output of this pipeline (I hope you also have it) includes a table marked with the "out" suffix: it is just the initial table, with gene symbols added on the right. So here, for these Ensembl genes, gene symbols are added, and you see that our subset of 15 genes includes, for example, the very well-known epithelial cell adhesion molecule, EPCAM. Then GO terms are associated with each gene; for example, in the case of

this well-known adhesion molecule, the GO terms include plasma membrane, lateral plasma membrane, integral component of membrane, and others. For another gene, for example, the GO terms include wound healing as a biological process. Along with gene symbols, here we see a somewhat fuller description, the full gene name, for example collagen type VI, and so on. So it's a very simple annotation, limited to adding gene symbols and Gene Ontology annotation. So if you are ready with the hands-on task, we can discuss the results and then briefly discuss just a couple more topics.
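The three settings of the annotation step (which column holds the IDs, whether the first row is a header, whether to include GO data) can be mirrored in a small Python sketch. This is only an illustration of the table join, not the platform's annotation module: `ANNOTATION_DB` is a hypothetical in-memory stand-in for the real database, `ENSG_PLACEHOLDER_1` is a placeholder rather than a real Ensembl ID, the appended column names are invented, and the GO terms listed are the ones mentioned for EPCAM in the talk.

```python
import csv
import io

# Hypothetical stand-in for the annotation database (placeholder ID).
ANNOTATION_DB = {
    "ENSG_PLACEHOLDER_1": {
        "symbol": "EPCAM",
        "name": "epithelial cell adhesion molecule",
        "go": ["plasma membrane", "lateral plasma membrane",
               "integral component of membrane"],
    },
}


def annotate_table(text, id_column=0, has_header=True, include_go=True, sep="\t"):
    """Append gene symbol, full gene name and (optionally) GO terms to each row.

    Unknown IDs get "NA"; the column names added here are hypothetical.
    """
    rows = list(csv.reader(io.StringIO(text), delimiter=sep))
    header, body = (rows[0], rows[1:]) if has_header else (None, rows)
    extra = ["gene_symbol", "gene_name"] + (["go_terms"] if include_go else [])
    out = [header + extra] if header is not None else []
    for row in body:
        gene_id = row[id_column].split(".")[0]   # drop a version suffix such as ".12"
        info = ANNOTATION_DB.get(gene_id)
        added = [info["symbol"], info["name"]] if info else ["NA", "NA"]
        if include_go:
            added.append("; ".join(info["go"]) if info else "NA")
        out.append(row + added)
    return out


table = "gene_id\tS1\tS2\nENSG_PLACEHOLDER_1\t5.2\t7.1\nENSG_UNKNOWN\t1.0\t0.5\n"
for r in annotate_table(table):
    print("\t".join(r))
```

As in the talk, the original table is kept intact and the annotation columns are simply appended on the right.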

Vladimir, there is also a question here: after this hands-on work, will you go over a standard differential expression pipeline with full annotation? Exactly, exactly. So again, I'm asking Sahil, as the voice from there: what approaches did you test to separate the data set into two subsets, and what results did you get? Any ideas? You're also welcome to write your ideas in the chat, and we'll announce them here. Okay, so both options we discussed, principal component analysis and hierarchical clustering analysis, can solve the problem.
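The talk also named k-means as a clustering method that needs K fixed in advance; in this hands-on task K = 2 is known (human-derived vs mouse-derived), so k-means is a natural third option. The presenters used PCA and hierarchical clustering; the sketch below swaps in k-means (Lloyd's algorithm with a deterministic farthest-point initialization) purely as an illustration. The data are hypothetical: 12 simulated samples named `S1` to `S12`, where odd-numbered samples come from one source and even-numbered samples from the other.

```python
import numpy as np


def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's k-means with farthest-point initialization.

    X is a samples x genes matrix. Returns (labels, centers).
    """
    rng = np.random.default_rng(seed)
    centers = [X[rng.integers(len(X))]]              # first center: a random sample
    while len(centers) < k:                          # next: the sample farthest from chosen centers
        d2 = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(d2)])
    centers = np.array(centers)
    for _ in range(n_iter):
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)                   # assign each sample to its nearest center
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])          # recompute centers as cluster means
        if np.allclose(new, centers):
            break
        centers = new
    return labels, centers


# Hypothetical intermixed data: 12 samples x 15 genes.
names = [f"S{i}" for i in range(1, 13)]
rng = np.random.default_rng(1)
X = rng.normal(5.0, 0.4, size=(12, 15))
X[0::2, :8] += 3.0        # rows 0, 2, 4, ... are S1, S3, S5, ... (odd-numbered)
labels, _ = kmeans(X, k=2)
for j in range(2):
    print("cluster", j, [n for n, lab in zip(names, labels) if lab == j])
```

Unlike hierarchical clustering, this gives no dendrogram, only one cluster ID per sample, and the answer depends on the K chosen up front.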

Here, for example, if we perform hierarchical clustering on this file, I'll show you the result with two clusters in the output, and we'll see what's happening. Principal component analysis can also potentially solve this task, but its output takes a bit longer to interpret. So initially our groups are completely intermixed. Okay, an answer from the audience: somebody said PCA with two groups. Exactly, PCA gives two groups. Let me just take a look. Okay, for example, while we are waiting for the result, I'll run

this pipeline, and it's really fast. So what we have for hierarchical clustering as output: here we see two well-defined groups, and the answer to this simple task is the following. All samples marked with odd numbers belong to one group, and all samples marked with even numbers belong to the second group. This separation arises because one subset is human-derived and the other subset is mouse-derived. In the human-derived subset, all the samples belong to the same subtype; that's why the merge height is relatively

low, while in the mouse-derived subset, the PDX subset, there were two types of breast cancer, and these two types are defined by the clusters that can be obtained by cutting the tree at a height of, for example, 30. So indeed, again, hierarchical clustering gives us a clear separation, and if we look at the results of the principal component analysis once we get them (it will take just a few seconds), we'll also see this clear separation. For hierarchical clustering, we have not only the plots but also additional information. For example, we

have a file with the suffix "clusters only" (a .txt file). Just a moment; what is the content of this file? I'll open it in Excel for a simpler view. For each sample, this file gives a cluster identifier. We set the number of clusters equal to two, so the cut-off height was selected in such a way that the resulting number of clusters is two; if we set it to three, four, etc., we'll have that number of clusters here. Finding the samples united in one cluster is very simple: we

just look at samples marked with the same cluster ID. And on the PC plane we indeed also have a similar separation into two groups: one group at the bottom left and the other at the top right. So that is the separation. Having the groups, we can think about the next problems, and one of them is to find genes that have different expression in the subgroups we're dealing with. So first, we need to understand what it means for a gene to have different expression in the

two compared classes, the two compared subgroups. First, we may look at mean expression levels: differential expression means that average expression levels are different. But look at the example figures to the right of this slide: you definitely see no differential expression in the upper case and evident differential expression in the lower case, where in the blue group of samples the expression level is evidently much higher than in the red group. At the same time, the difference between the means in the upper and lower cases is exactly the same: one in log2 scale, so a two-fold

difference in the initial scale. That is why fold change, the difference between means, cannot be used as the only characteristic of differential expression: we also need to consider the statistical significance of this differential expression, obtained using one of the standard or non-standard statistical tests. In the simplest case, it is Student's test, the so-called t-test, which compares the difference between average expression levels to the variation of expression levels within each group. More complex approaches, which are better for small sets of samples, use more complex statistical