Liquid AI's Ramin Hasani on liquid neural networks, AI advancement, the race to AGI & more! | E1928


This Week in Startups

(0:00) Liquid AI CEO and co-founder Ramin Hasani joins Jason

(1:09) Liquid AI's mission and concept ...

Video Transcript:

if you have AGI as you said like you can solve the energy problem you can solve if once you solve the energy problem like what I mean you are basically the most valuable company on Earth you know think about that I mean if you can solve economy like if you can solve uh politics basically the structure of governments you know this is the thing that we hoping to get and there's a race to getting there do you think everybody gets there at the same time like AI feels no you don't you feel some people will

get to AGI first first yes yes this week in startups is brought to you by LinkedIn Jobs a business is only as strong as its people and every hire matters go to linkedin.com/twist to post your first job for free terms and conditions apply Eppo experimentation is how generation-defining companies win accelerate your experimentation velocity with Eppo visit geteppo.com/twist and Attio the radically new CRM for the next era of companies head to attio.com/twist to get 15% off for your first year all right everybody welcome back to this week in startups we got a great

guest for you today with a great idea Ramin Hasani is the CEO and co-founder of Liquid AI and uh we're going to hear all about what Liquid AI is doing in a moment but they're kind of headed in a new direction uh trying to make smaller and more efficient language models welcome to the program Ramin thank you for having me uh maybe you know just uh by way of introduction here explain to me what the mission of uh Liquid AI is and then let's get into you know sort of language models and you know the

size of models and making them more efficient yeah definitely so I started a company to design basically like from first principles systems that we can understand from scratch on a completely new base for artificial intelligence that is rooted in biology and physics so we started looking into brains and see how we can get Inspirations from there to design kind of a new math that we can understand and we can scale basically and that that kind of became kind of a liquid neural network technology that I invented during my PhD program okay liquid neural network what

does it mean compared to say a traditional AI model large language model so what's the difference what is a liquid neural network let's explain that and is that the term you came up with or is this an industry term yes that's something that I came up with so believe it or not like about seven years ago I started looking into the brain of a little worm the worm is called C. elegans the worm is one of the like in the tree of evolution is one of our fathers okay okay so it's basically nervous systems and

you know cellular kind of organization and everything is evolved from this animal this worm has already won um four Nobel prizes for us because it shares 75% of its genes with humans so and its entire genome is actually sequenced is one of the only animals on Earth we have actually two animals now that its entire nervous system is mapped that means like we know exactly anatomically like how each part of the nervous system is actually connected to each other right so I thought another nice behavior of this biological organism is the fact that

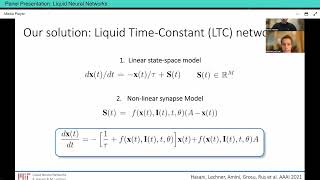

its nervous system is differentiable what does that mean today's AI systems as as you know them they are basically a set of neurons in a layerwise architecture next to each other and they're connected through synapses or weights of the neural network and they become like a giant neural network that can do what ChatGPT can do today right we scale those kind of neural networks into this kind of regime now neural networks the the way we train these systems on massive amount of data is with a technology called backpropagation okay backpropagation of errors
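For readers following along in text, backpropagation of errors can be illustrated with a toy example. The sketch below is not from the episode: the two-weight network, the names `forward`, `backward`, and `loss`, and all the numbers are made up for illustration. It only shows the idea that an output error is passed backwards through each step using the chain rule, which works when every step has a derivative.

```python
import math

def forward(w1, w2, x):
    """Tiny two-step network: hidden = tanh(w1 * x), output = w2 * hidden."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y

def loss(w1, w2, x, target):
    """Squared error between the network output and the target."""
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2

def backward(w1, w2, x, target):
    """Propagate the output error back to both weights with the chain rule."""
    h, y = forward(w1, w2, x)
    d_y = y - target                # derivative of the loss w.r.t. the output
    d_w2 = d_y * h                  # output = w2 * h
    d_h = d_y * w2                  # error passed back to the hidden unit
    d_w1 = d_h * (1.0 - h * h) * x  # tanh'(z) = 1 - tanh(z)^2
    return d_w1, d_w2

# One gradient-descent step on illustrative values.
w1, w2, x, target = 0.3, -0.7, 1.2, 0.5
g1, g2 = backward(w1, w2, x, target)
new_w1, new_w2 = w1 - 0.1 * g1, w2 - 0.1 * g2
print(loss(w1, w2, x, target), "->", loss(new_w1, new_w2, x, target))
```

The printed loss should shrink after the step. A spiking neuron, by contrast, has no smooth derivative at the moment it fires, which is why this kind of error propagation does not carry over directly.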

the underlying mathematics of the systems is differentiable that means you can propagate errors without interruption inside the neural network inside this huge kind of gigantic kind of functional form of neural networks okay this property doesn't exist in the human brain in the human brain neurons spike so you have seen like uh I don't know EEG kind of signals and stuff like you can see that there are spiking neural networks okay spikes we haven't understood yet like from nervous systems we don't know why uh spikes work we have no idea we still don't know what's

the purpose of the spike I mean some people say they translate an analog to digital kind of conversion to propagate information much faster we know a little bit about the learning theory around that like Geoffrey Hinton is actually like was working on some forward algorithms you know non-backpropagation-based kind of methods and stuff so there are local kind of learning rules and stuff that that we figured out but we there is still so much that we don't know how the brain actually does learning but when we go back into animals on until we

arrive at this worm we don't have any nervous system that doesn't Spike so that was that that's why I like this worm because you know the nervous system is something that is very similar to the mathematics that we design artificial intelligence with so I started learn like basically modeling the behavior of cells inside this worm and then this system became a new type of Learning System this Learning System is flexible in its Behavior meaning that when you train it on data the system still stays adaptable to incoming inputs this is not the case with artificial

intelligence systems when you train them they become kind of a fixed system so when you train the weights of a neural network let's say in the in the case of let's say GPT-4 GPT-4 has 1.8 trillion parameters each parameter this corresponds to 1.8 trillion weights in the system these weights of the system are already trained and they're fixed now that they're fixed now you can it's now become an intelligent system you can input information in there and then take output information but the system is fixed liquid neural networks on the other hand they're

not fixed they're systems that you can have they they can they can stay adaptable to the input incoming inputs that's the major kind of difference between the two and that's an advantage because it will make the uh answers more dynamic or more real time what's the advantage to or more robust okay let me cut to the chase right now because I know you're busy and everyone is hiring right now and you know it's a lot of competition for the best candidates right every position counts Market starting to come back you need to get the perfect

person you want a bar raiser in your organization somebody who will raise the bar for the entire team and LinkedIn is giving you your first job posting for free to go find that bar raiser linkedin.com/twist and if you want to build a great company you're going to need a great team it's as simple as that LinkedIn Jobs is here to make it quick and easy to hire these elite team members and I know it's crazy right LinkedIn has more than a billion users we all watched this happen when it was tens of millions then

hundreds of millions and now a billion people using the service this means that you're going to get access to active and passive job Seekers active job Seekers they're out there looking passive job Seekers they got a job but it's not as good as the job you're offering them so you want to get in front of both of those people maybe somebody got laid off wasn't their fault and they're an ideal candidate get that active job seeker and Linkedin also knows that small businesses are wearing so many hats right now and you might not have the

time or resources to devote to hiring so LinkedIn makes it automatic for you go post an open job role you get that purple hiring ring on your profile you start posting interesting content and you watch the qualified candidates they just roll in and guess what first one's on us call to action very simple linkedin.com/twist linkedin.com/twist that'll get you your first job posting for free on your boy J-Cal terms and conditions do apply a demonstration would be great here because this all sounds quite theoretical so maybe we could walk through your product demo

or uh your PowerPoint on how this all works yeah definitely definitely so we are talking about still the science of things like how this became uh Liquid AI and uh this is all based on a worm what worm is it it's a worm called C. elegans it's a 2 mm long worm it's it's a very very tiny worm got it but it's a very popular worm let me show you what would happen when you train a liquid neural network versus a typical neural network okay and for those of you not watching you can go to

this week in startups on YouTube and find this episode just look at the recent videos tab yes what I'm showing is this is basically a dashboard of an autonomous driving system it's a neural network what I'm showing here in the middle you see layers of neural networks stacked uh to each other and then they receive camera inputs and they make a final decision like they they make a driving decision basically now this system has been trained on massive amount of kind of uh driving data this is just the lane keeping task because we've done

that at MIT uh during our our research basically so what we what we see here this this is actually an actual car that is getting uh driven by by this neural network in the camera on the top left what you see is the camera view and um on the bottom left what you see is an attention map of this neural network that means where is this neural network paying attention to when it is taking driving decisions this neural network has 500,000 parameters it's a rather small neural network okay now as you see there

is also a little bit of noise on top of the image you know like on the on the camera you see like we put a little bit of noise so that we can disturb and see how robust the decision making of this system is okay and as we see in a typical kind of neural network that you see in the middle I put all of these dots that are glowing they are basically single neurons that are getting activated and deactivated basically it is very hard to say what this what what this neural

network is doing right because this there's a lot of them and there's a lot of 500,000 parameters how can I actually say what each individual of this systems is doing you know in this task but again if in an abstract way if I bring it back to this image on the bottom left what you see is the attention map the lighter regions are the regions where the network is paying attention to when it's taking a driving decision got it and that would be the road I guess exactly it has to be the road yeah it has

to be the road but it is basically like outside of the road in this case as you see the attention is kind of outside and is kind of affected by the noise that we put at the input you know so that's why it is not that much um reliable this is how a typical artificial neural network works now let me change that to a liquid neural network all we did we switched the parameter heavy part of this neural network we kept the eyes of the network which is this conv kind of layers as you

see like convolutional layers basically but we replace basically the parameter heavy part of the system with 19 neurons 19 liquid neurons neurons that are modeled after the worm's brain and then we basically you know like the synapses also like the connectivity you know it looks like a little bit more scattered it's kind of recurrent kind of connections like you can see a lot of kind of unstructured kind of connections in this system but this system has 19 neurons and around 1,000 parameters as opposed to the previous system that I showed you

that had 500,000 parameters the system is much less yeah this is much smaller and does that make it more accurate or does it make it faster decisions or both or we just don't know both actually so now let's look at the bottom left again like the attention map of the system as you see in the attention map now the focus is on the road and on the sides of the road so the system without any prior it actually figured out like how how to perform decision-making with without being like you know like disturbed by anything else
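The "liquid" behavior described earlier in the conversation, where a trained system stays adaptable to incoming inputs, can be sketched with a single neuron. This is a rough illustration that assumes the liquid time-constant (LTC) form from the published research, dx/dt = -(1/tau + f) * x + f * A. The episode itself does not give equations, and the function names, the sigmoid gate, and all parameter values here are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def ltc_step(x, inp, tau=1.0, w=2.0, b=0.0, A=1.0, dt=0.01):
    """One Euler step of an assumed LTC-style neuron.

    The input-dependent gate f appears in both the decay term and the drive
    term, so the input changes how fast the state moves, not only where it goes.
    """
    f = sigmoid(w * inp + b)               # gate value; w and b stay fixed after training
    dx = -(1.0 / tau + f) * x + f * A
    return x + dt * dx

def effective_time_constant(inp, tau=1.0, w=2.0, b=0.0):
    """Time constant the neuron effectively has for a given input."""
    f = sigmoid(w * inp + b)
    return tau / (1.0 + tau * f)

# Same fixed parameters, different inputs, different dynamics.
for inp in (-5.0, 0.0, 5.0):
    print(inp, round(effective_time_constant(inp), 3))
```

In this sketch the weights never change after training, yet the neuron responds faster or slower depending on what it is currently seeing, which is one way to read the "stays adaptable" claim.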

now not only this system is very much smaller than a Transformer architecture but it's also it can give you basically much more robust representation very similar to how biological systems perform decision making so net net a worm is a better driver than a human brain is that what you're telling us that seems counterintuitive aren't human brains better than worm brains um and is this because the silicon that these things are run on in the cameras um aren't able to process fast enough in real time like a human brain so actually a worm brain might be

a little bit simpler and easier to run on today's silicon is that is that what I'm reading into this as uh I mean yeah to some extent but but the the fact that these are just modeled after how nervous systems perform computation in the brain of the worm mhm now we can take those mathematical inspirations and then build machine learning systems that are not just like they're not just mimicking to be a worm or anything they're just like basically the fundamentals of computation in nervous systems now the reason why I told you the worm in

the tree of evolution is one of our fathers is the fact that these principles actually scale that means if if nature actually evolved worms into humans we can take these Inspirations from neural computations and even go beyond that you know so there's an opportunity to build AI systems powered by how nature designed nervous systems okay so the worm system is less robust and narrower than a humans but you could scale it up and if a worm had a billion neurons or a million neurons I don't know how many it has actually the worm has 302

neurons it's a very tiny worm okay so it's got 302 how many neurons does a human have human has 100 billions of neurons got it okay so there's a big gap between those two but the worms are um simpler and easier to define or easier to emulate than a human because humans are much more complex with 300 billion yes yes we can understand this worm we can understand the brain of this worm much better than we can understand the brain of a human being because we still have a lot of questions even we don't understand

mice we still don't fully understand monkeys we don't understand small fruit fly you know yeah so that's why we need to start from somewhere so I wanted to take a step back and start as a as a you know computer scientist basically wanted to see like how these kind of systems how how can we look at the origin of these nervous systems and where can we find basically principles that we can at least confirm that exist in biology and then now take these systems and build new type of learning systems okay got it okay keep

an inspiration basically I understand yes okay so this is pretty trippy but I'm I think I'm following so let's keep going yes yes so then since then we managed to drive cars autonomously like with this small nervous systems we showed that you can fly drones with them okay you can recently United States Air Force actually showed that you can also fly um um full drone F-16 jets with them these type of worm-inspired systems can actually you know do a lot more than just you know like navigating cars but they so it might not

be able to handle the existential crisis or making a season of The Sopranos and something complex like that in Creative but it might be able to do something incredibly simple and basic like stay in the middle of this road you know dead center or you know keep this drone in the sky not crashing into something exactly that's what we thought at the beginning right that this is going to be the property of this Learning System but then we started to see that you can also do much more complex kind of tasks way better than how

artificial intelligence systems performing that for example what predictive models for financial markets predictive models for biological signals let's say like if you want to predict the mortality rate of uh people in ICU based on their biomarkers you know and then if you want to do predictive tasks like that you can see that these models are really good at doing that in principle we figured out that this type of new type of technology is really good at modeling time series data it could be video data it could be audio data it could be text it

could be user behavior it could be financial time series medical time series so it is basically a general purpose computer the type of models that we developed these type of models we've applied them and we checked it that the last seven years actually we have seen that these systems are really good at performing these kind of sequential decision making processes right and that became basically the point where we thought that okay so now it's time to start um maybe making larger and larger systems off of these general purpose computers that we can um you know

like we can change the spectrum of how AI is done today because today we are working with a base called Transformer architecture right we're building we're basically changing that Transformers and GPTs generative pre-trained Transformers into a new foundation which is called liquid foundation models which is called LFMs basically so it's a new thing that is coming basically okay and a time series just so people know when you say time series it's very simple it's the series of a similar data point but over time so uh perfect would be a stock price over every minute on

the stock exchange or as you talked about driving it would be the steering wheels alignment or the speed of the vehicle over every second or millisecond you're that's a Time series and these are particularly good at studying a Time series is what you're saying yes yes and also this this podcast you know the audio signal that you're hearing is basically a Time series the video that you're obser you're seeing is also a Time series so all video data I mean in some sense if you think about it that's a Time series You Know audio it

is a time series video is a time series but then language is a little bit different than that language is also a kind of sequential kind of data but it's not the time element is different it's basically just a sequence of words words coming after each other so you could also technically apply liquid neural networks to those kind of problems as well are you tired of slow A/B testing I'm sure you are do you have any trouble trusting your experiment results I know I do sometimes well get ready to 10x your experiment velocity with Eppo

that's Eppo whether you're a scrappy startup a tech giant or anybody in between their feature management platform will turn your risky launches into clearcut experiments data teams of course love Eppo and so will your product growth and machine learning teams the executives are going to love it too cuz they're going to love the results and the discipline that comes from defining really important product experiments and then executing on them really well because it gives data teams better coordination and faster innovation online marketplace Inventa has cut down experiment time by 60% and ClickUp the

project management company has cut down analyst time by over 12 hours and Eppo's cloud-based system is all about empowerment you get instant access to your experiments from anywhere in the world boosting flexibility and teamwork and you get a system that grows with your needs easily scaling up to handle more tests as your business grows with Eppo the daunting becomes doable I love that so your team can rely on the experiment results and make faster decisions here's your call to action experimentation is how generation-defining companies win accelerate your experimentation velocity with Eppo visit geteppo.com

twist just visit geteppo.com/twist and let's get some experiments running let's get that product market fit and thanks to Eppo for supporting independent media like this week in startups and all the startups who are listening well done all complex uh where are you at in terms of this being theory versus execution so we see ChatGPT-4 we see FSD 12 where is your company at in terms of you know commercializing this and did did this all come out of MIT I heard you mentioned MIT earlier so you went to MIT you studied this and uh you know

this jet fighter that you know was AI based is that your software or they they also studied this so explain to us where you're at with this company um and maybe some demos of the product yeah yeah definitely definitely so we started exactly maybe one year ago one year and three days ago actually the company so the company has four co-founders is uh myself and all MIT people so we it's myself it's Mathias Lechner who is our CTO we we have actually invented co-invented the technology uh together and then we have Alexander Amini another PhD

student from MIT and um he's graduated now and then the director of Computer Science and Artificial Intelligence Lab at MIT who is Daniela Rus basically is also a co-founder of our team we've started this company on this new technology because we've seen a lot of like you know that our lab at MIT was focused on real world applications of AI you know like we really wanted to design AI systems that can go into the real world and solve real world problems you know and that's why like we always had our AI systems deployed in the

society like they were always deployed in an environment doing a task you know this would be an an autonomous car this could be also an you know manipulation of a robotic arm you know this could be any kind of task a humanoid robot kind of control do they refer to that as CSAIL at um CSAIL yes at MIT the Computer Science and Artificial Intelligence Laboratory which is known um correct me if I'm wrong for a lot of robotics that we see in the world absolutely so the Roomba and some of those projects came out of that

yeah 100% yes yes yes so a lot of the fingerprints on robotics come out of this yes MIT's CSAIL lab and you were part of that now exactly um where are you at in terms of providing this as like a product are you is there an API are people starting to use this are you yeah how old is the company how much have you raised tell me a little bit about you know now that we got the background on the science behind this and the science is worms we get it super interesting let's talk about the

application and like making the startup reality because going from theoretical and spinning something out of a university and then making it reality that's a jump that very few companies are able to make so explain to me where you're at with that big jump yeah definitely definitely so we started last year 30th of March the company it's very fresh like has been like 12 months now we raised a substantial amount of kind of seed money I think we we first had a seed round of $5 million at $50 million valuation and then we actually did like

a seed two basically and um that seed two was also um I think eventually became $37 million and so overall we raised like $42 million in in seed value at a $300 million valuation the reason for raising the money was basically building the superstar team which is one of the things that we have like because if you're building something completely different than 99% of the companies because every company in the generative AI space and AI space is working on top of a technology called Transformers now you're changing that foundation so you need to have

like people like-minded people from all over the world I actually gathered them from mostly from MIT and Stanford and some of the students of Yoshua Bengio as well so we gathered like this team of people brilliant people they have all invented the new technology for efficient uh uh alternatives to machine learning systems people that have worked on explainability of AI systems like we have like all sort of kind of capabilities in the team with the purpose of wrapping basically this technology of ours like building on top of the core technology which is liquid

neural networks for Enterprise kind of solutions with a horizontal kind of look to the market so we basically going after verticals because as I told you it's a generalist system I can solve financial problems for banks for large Banks I can solve also problems in the space of Biotech I can solve problems in the space of autonomy right so it's a horizontal play now as a startup it's always like the weird way to actually go after all I want to solve all of them but we talking boiling the ocean problem so yeah are you a

platform that you're going to provide an API to people or going to go after one of these verticals I guess is the question everybody has yes yes so we are building an AI infrastructure in which you can train fine tune and play around and use liquid foundation models this product is an enterprise facing product it comes with a developer package where we actually give it to enterprises enterprises basically can use this technology and actually enjoy its performance they can see the efficiency of the models mostly you can develop models on the edge we have

today language models that run on a Raspberry Pi Raspberry Pi just so people know is the smallest computing unit essentially in the open source hardware community these Raspberry Pis go for 10 bucks 25 bucks it has a certain amount of power to it so you're telling me you're going to be running this on this neural network on a Raspberry Pi which is like running it on like a thumb drive basically exactly people can imagine that yeah that's like one of the beauties of the technology so the technology can be running on a very very tiny

they're very energy efficient you know depending on their they can be small but they can be very powerful now in terms of like how we are going to market and how we are actually commercial how we are managing to be the AI platform for all the verticals we have established some contracts across the globe actually with with some of the system integrators in the world so in Europe we have a contract with Capgemini which is one of the largest system integrators actually in Europe in Japan we are working with ITOCHU CTC which is basically

the Accenture of Japan you know in uh United States we we're signing up with EY and conversations with Accenture basically so the the target is that system integrators would take the platform as basically being able to integrate it in the verticals that they're interested in so you don't have to worry about the commercialization of this you have to provide the people who do commercialization and license to them so this seems incredibly disruptive um if you are able to do this for a fraction of the cost um what does this do uh and the

fraction of the hardware if you're successful what does this do to Nvidia what does this do to OpenAI you know they're putting together you know billions of dollars tens of billions of dollars in supercomputers to train these models you're claiming you're going to be able to do this because it's with the worm brain and it's a much more efficient process with a fraction of the hardware model the hardware footprint so you know head-to-head what's going to happen to you know big iron in AI if you're successful yeah definitely so there are two costs on

developing AI systems one cost is like designing the AI systems and the other cost is basically usage of AI systems right like you can now now my AI is inference basically right so now on inference side as I told you we can be between 10 to 1,000 times more efficient than the models that are available today that's basically the the the basically the energy footprint of the models okay on the training side we can be between 10 to 20 times more efficient than the Transformer models that means if I train let's say a 10 billion

parameter liquid model it's going to cost me depending on how much information it can process which we call context lengths right depending on the context lengths that they have it can be between 10 to 20 times much more efficient to actually develop this kind of system so that means instead of requiring 10 billions of dollar like 10 billion dollar basically to to develop um GPT-4-quality models you would need a fraction of that basically yeah maybe 500 million or something or 100 million a serious fraction what does that mean for you know somebody like open

AI Microsoft somebody's cloud computing platforms that are they building all this extra hardware and focused on the wrong problem you know that hardware is not the problem it's the architecture and the framework and the paradigm under which they're building this and they're just building under a much less efficient paradigm is that your claim here I would say you know the beauty of the Transformer architecture and what OpenAI and everybody else is after is the fact that these systems scale really nicely you can scale them into larger amounts of data and also larger model

sizes so what motivates the community on a generative AI is the fact that the larger you make the systems the more powerful they become now if you look at if you look at where we are today with the state of the art we have a Claude uh Claude Opus basically which is the the most powerful model I expect this model to be in the order of like three to five times bigger than GPT-4 that means this model is I would say in the range of maybe 10 trillion parameter model ah they now they haven't released

Claude Anthropic hasn't released what that model is but it is number one on Hugging Face now with the Elo ratings number one 100% it's a number one kind of performing kind of AI system in the world right now okay like there's now Anthropic is talking about 10x-ing the size of the models every year that goes forward that means we can expect by the end of next year to have a 100 trillion parameter Transformer model the reason why we why they're doing that is because when the models are actually getting larger they become better and

better and maybe maybe we can get into AGI and generalized kind of AI systems by enlarging kind of the architecture and the focus is just that there are two companies in the world that I think the absolute focus of the companies are building AI this is OpenAI and Anthropic right now so there are kind of gutsy moves like like what we are doing basically we're basically changing the fundamental architecture we're building new scaling laws basically on top of this thing the scaling laws let's see if we can make liquid neural networks also scale that

means if I have 1 trillion parameter liquid model it might actually be as performant as a 50 or 20 billion parameter 20 trillion parameter Transformer model the other way of it is also true if I have a 100 trillion parameter liquid model it might be better than a 20x larger Transformer based model so that means these are basically the kind of moves that we want to make I mean so far if you're successful when will anthropic move over to your platform do you think or are you a competitor to them do you think I think

I mean right now like we are we are going through um another fundraising like series a of liquid and I think after this round we are basically um getting prepared to actually train very very large models so these models are going to be I mean after the release of those models probably by the end of the year I would say then um the the community is going to see like that there are alternative kind of models that they can come in and disrupt the way Transformers are actually disrupting and they can scale the way Transformers

scale basically what Hardware are you going to use what platform are you using right now we are using Nvidia GPUs as well like it's very similar it's just that the number the amount of GPUs that we consume is about 10 to 20 times less than how got it 5% 10% of them what they're using startups and small businesses listen up you want a CRM that neatly organizes all your customer data so that you can avoid missed opportunities and you can deliver a personalized service rigid CRMs can't adapt to your fast growing needs and that's where

Attio comes in Attio delivers the goods it's a custom CRM that's flexible and deeply intuitive Attio is built for the modern company headed into the next era of businesses it connects your data sources adjusts easily to your specific setup and suits any business approach whether it's self-serve or sales-driven Attio automatically enriches all your contacts think about that you might be missing a first name a last name an email an address all of that stuff it's going to your emails and calendars it's going to enrich those contacts and it's going to give you powerful reports it's

also going to let you quickly build Zapier style automations If This Then That type automations the next generation deserves more than a one-size-fits-all CRM join ElevenLabs Replicate Modal and more and get ready to scale your startup to the next level head to attio.com/twist and you'll get 15% off your first year that's attio.com/twist and so talk to me about data because it does seem like this is the next big shoe to drop licensing data balkanization of data hey maybe Reddit is available to Gemini but not OpenAI Twitter now is you

know closing up access or x.com is closed up access for people um and it and the New York Times is in a lawsuit with OpenAI which obviously trained on their data without permission how do you see all of this resolving itself because obviously people are rightfully saying hey I own this data I have the archive of the New York Times or I'm Disney I own this archive of IP from Star Wars to Marvel or I'm an author and I have these books how how do you see all this shaping up in the in the

coming years because is that going to be the limitation the the data you have access to or is it going to be synthetic data rules the day and you're going to be able to just make your own data to train on how do you see all this unfolding yeah definitely I believe like at the end of the day I think the data providers they should be incentivized to provide their data and they should know they should know that their data their data is being used basically like you you need to have a payment scheme basically

for people that you're using their data in your in your system be in your mind how would that work do you have any ideas we haven't we haven't gotten there yet like I think I think this would be like a challenge to to to think about but at the moment what we're trying to do is basically the way everybody does like we're basically purchasing data right like you're basically paying for the data that you use in order to be able to you know like to leg you believe this is a good idea because

it will keep people making data so journalists artists writers thinkers you believe hey this is a a a fair deal here some sort of licensing arrangement where they get paid some reasonable fee to train your models or train Claude's models or OpenAI's models or Google's models yeah 100% the reason being say for example a content creator on on YouTube right so if people come and and look at their content basically you know like and they get inspired to build something off of that you see so AI is also like basically doing the same thing

right there it's looking at the data that is basically available and it's getting inspired by that data if it's not directly the copy of that data right and that that scheme of how we are doing it through like let's say social media kind of channels right it has to happen like very similar ways that we can we can incentivize users of social medias or users of AI or providers of data for AI systems to also like have this understanding of this is basically the same thing the same kind of scheme can actually apply here there

might be analogies here but again like you really have to be systematically going after this problem which is um one of the one of the main main main issues like as we're thinking about scaling our company it does feel like it's fair if somebody's put a lot of work into it that if an AI was built on top of the New York Times corpus that yes they would have permission to do that because it is something that you could partner with the New York Times as opposed to OpenAI and build this with them

and monetize it with them and it's their opportunity to create an AI based on the New York Times data not you know OpenAI's or Gemini's everybody should have the ability to opt into these things so I feel like that's a pretty smart approach that you're taking um how long before people will be able to use your platform and swap out Gemini or swap out Claude or swap out OpenAI for yours for look a so I mean the first the first batch of products that are coming is basically already in use with some of

the clients is a developer package as I told you for solving AI problems like this could be let's say like you have a predictive task where you have like video data from surgical kind of processes and at the output you want to predict basically what phase of surgery we are in for example that's a kind of case study where a developer can take our package and then basically use our system in that kind of real world application to solve that task this is already ready and it's available to some of the Enterprises through

our system integrator contracts and through directly with some of them we are already working like in the financial sector in the medical sector in the healthcare and biotech we have been like very active and automotive okay this is already available what's your definition of AGI how do you determine that uh a system is generally artificially intelligent do you have uh or I mean you must have heard a million of these different ones when you're at MIT and there's a big debate around it but what what do you think I think for AGI I

think that I I just want to stick to something that we can actually still understand and talk about for example a system that is beyond human capable can perform Beyond human capabilities given the same resources that means if I'm provided that the same kind of resources is provided to the to the human and to it to to the AI system the AI system is being able to perform that task better or orders of magnitude better than humans got it so given the same resources we both have access to the internet we both have Broadband can

I beat this system at chess no okay but a new game that just came out today could it beat me I guess is the question exactly and the AGI can exist in a in the virtual world as well like as you were mentioning this these are possibilities that are inside a virtual kind of existence basically existing in an internet kind of system but uh in real world you need to have also embodiment so that's why a lot a lot of work is actually going towards you know like like the humanoid kind of

movements of robots like we're building humanoid kind of robots and OpenAI Figure I mean the the new works that are going on like there are so so many I mean at MIT there are many many people working on humanoid kind of research also like other types of AI systems that you can integrate in the society you know yeah so the the point is you know there's virtual we know that those are creeping up like getting an answer to a legal question or making a marketing plan or writing something you know and obviously chess

and verticalized games Go it's crushing humans but it's got to be able to translate into the real world and if it's going to be doing picking strawberries we're going to need a robotic arm we're going to need computer vision but all those things seem to be aligning so a robot we had a company called Root AI which I think actually had some of its um origins at MIT as well with the robotic hands being able to pick strawberries in the real world better than a human faster pick the right ones not crush them put them

in a box I think we're kind of there today we're pretty close to it for those kind of applications yeah but think about think about for application of play uh I want I want to have like a robotic soccer team or a basketball team you know like can can we have like those kind of things right that's a level of fine motor skill probably not yes yeah so when do you think we hit AGI in your definition that it's able to beat a human uh at any task could be basketball could be cooking I think

I think the the next two to 5 years is going to be very very exciting and I think we're going to see like uh leaps in in performance of these models as the size of the models are growing I would say um we might actually see uh you know first versions of it like very soon I would say maybe after 100 trillions of parameters this is where in terms of number of capacity in terms of number of parameters we would be equivalent to a human kind of the amount that is available to what is that

two more boosts of 10x so we have like two more boosts of Anthropic training there Claude 4 and 5 probably so yeah somewhere around Claude 5 or ChatGPT 6 something in that range of jumps two more jumps which might take another you said two to five years we get some what feels like smarter than any human on the planet I that was mine like smarter than any human on the planet able to beat any human on the planet any test now robotics might be hard because you do have some physical fine motor skills that

basketball and soccer seem out there but you know to work in a factory or to cook maybe it does work pretty quickly um yeah how do you think about Job destruction societal changes you know this is always something that folks in your career and coming at an MIT you know debate late at night when you're having drinks or whatever you're vibing whatever The Vibes are what do you when you're sort of off duty talking with people who are building this stuff what do you how do you think about retiring a whole swath of jobs that

are arduous and painful but that also do provide meaning and purpose to some degree or employment generally for humans working in a factory picking strawberries writing marketing copy all the stuff seems to be at risk so how do you think about job destruction and what's the back channel on that is it coming fast and furious or do you think we're going to be able to manage it as a species in a society I think we can manage it like any technology that comes in I would say it's going to be disruptive like you can

you can think about like the evolution of technology in all the things that are in their hands and and it changed the the the type of the jobs that you would be actually having but it's not going to like replace because right now you can use these systems as an assistant in some sense I think I think the this AI revolution this one in particular is helping us to evolve into better versions of ourselves like every kind of application that today you see in generative AI enables is like in the productivity space right

so it's increased productivity we can do things faster we can build things faster because of AI and I feel like this is going to be the trend you know and we're going to frame basically AI systems for basically helping us to become the better versions of ourselves and get things done faster for me the moment that I'm dreaming of happening is the fact that when AIs can actually solve new physics and new mathematics new science right like if AI can can discover can discover new I I want I want to give an AI system basically

the Einstein's equation Maxwell's equation and the theory of everything that uh you know uh cosmologists are working on if I want to give them there and tell the AI system hey continue from here and go figure out what's what's the next thing that is going to happen now if you solve physics then you can solve the basically the you know the way we built uh structures like the way we do science if you solve mathematics you can solve the economy of the world you know if you solve uh humanitarian sciences like the conflicts that we

would have you know we might actually have ai helping governments basically solve conflicts you know there might be so many use cases of AI enabling like new opportunities for work but this is how I see AI helping us as an assistant as an as an elevator of of of the way we live yeah this is I think the most positive spin on it which is hey yeah you might get rid of some arduous jobs just like we got rid of being a phone operator like people used to have that job people used to work in

the mail room I remember when I started my career in the 90s working in the mail room or being a bike messenger was like a major career like you there were many jobs that you could do and you get paid really well bike messengers got paid a sick amount of money in New York to run documents back and forth for law firms from Wall Street to Midtown and they don't exist anymore for all intents and purposes uh you don't have to run documents because you obviously the fax machine and email changed that forever but

yeah you're right like you know what if we could actually solve existential problems or you know science problems around clean energy around farming around calories around health you know maybe we just live with massive abundance and I think that's where people have to keep in mind there was like this uh short-term look at it on the Jon Stewart show I don't know if you saw that trending what was your take on the Jon Stewart take that like oh my God we're just doing job destruction here I got a little cameo in there because I

was interviewing Brian from Airbnb and he was talking about like hey we just don't we're not going to need a bunch of customer support people answering repetitive questions which I don't know if that's a great career or not I don't know if people and there's some people who love being in customers work they like interacting with people but maybe it's not a great job uh long term I think we just get better choices like as as a as a species like you you would basically have a choice to to interact with more with humans right

and because let's say for a customer support job right what why a person would be interested in that job I would say the human aspect of it right I like I like to talk to people I like to interact with humans you can do that in the in presence of AI just in a different way it might actually be less involved than than how you have to do it or you're forced to basically do it like for for that kind of human interaction I would say Ai and and intelligence in general is giving us choice

choice is like what is an important kind of element uh uh of of of human civilization as well like like the way the way the way we evolve actually became like this kind of uh um uh the the the most powerful species in the in the world is by the fact that we have a lot of choice like choices integrated in our in our society and the ability to have choice I think again as I always say like I'm going back to this of course AI would have like like you know like downsides and upsides

it's not like all green and everything yeah but I think that the right version of AI is going to be extremely useful what what do I mean by the right version of AI one of the things that is concerning is making these today's AI systems larger and larger as black boxes if you don't understand what you're doing with a system that system is no matter how much control like you're losing control basically you're not you're not going to have like a lot of control in the system the fact that everybody like Anthropic is actually putting

like 20% of their workforce on on on explainability right so explain what this means for people who don't understand because this is a topic that I think is super important and underreported on understanding what the machine is doing it's hard for people to believe that people don't actually understand what the neural networks are doing so take a minute to explain this to folks yeah definitely so let's let's first define like what do I mean by explanation okay what do I mean when I say I can explain a system okay I tell you the

equation that I think most of your your audience would actually be be able to relate to e is equal to mc² that's Einstein's equation right may I ask you this like do you think this equation is explainable that means what that means like if I have a mass I have an object and I know the mass of this object yep and if we know that if this object is moving with the speed of light yep then you can compute the energy that it would dissipate at that confr yeah you can explain this yes

you can explain it it's explainable across time like it's basically like at any given point in time if I just give you this equation this is called a physics equation or physical model mhm okay this is the best type of modeling framework that scientists have ever designed a physical model is a model that is completely 100% explainable and it explains a kind of reality that you can relate to right on the other in reality that's it exactly on the other side of the spectrum you have statistical models I said physical models and now

we have statistical models statistical models do not 100% explain the behavior of a system but they observe data and from data they infer what is basically the construct of this uh topic that I'm modeling let's say ChatGPT ChatGPT is a statistical model okay it's guessing the next word it's figuring out what the next thing in this thread should be just by observing data right yes because e is equal to mc² it doesn't need data anymore it's explainable you just need to plug in your data and it will always give you

like the answer you know but if you were to say the quick brown fox jumped over the lazy dog this is something a probabilistic kind of thing you know like you have to see whether do I this is a statistical model okay ChatGPT and systems like that are statistical models now now scale these statistical models into billions of parameters as well this becomes today's AI system right today's AI systems are black boxes because of the fact that we cannot really understand why if there is an input coming in and an output is getting generated

why this output is getting generated there is no explanation to why this input output the to a source exactly what's the source material explain your work is yes or show your work is what people tend to do in PhDs right and and in graduate school you have to show your work how did you come to this conclusion you can't just solve the math equation you got to show us how you solved it so we get an idea of that and and in these neural networks people have not been doing that exactly and now we are

basically hopelessly basically trying there's a term called mechanistics mechanistic interpretability mechanistic interpretability tries to point into a part of a system a gigantic system and tries to say based on these this interaction here I suspect that this this this method is basically doing what you know this part of the system is is um uh you know responsible for biases in my system or something now in the middle of these two spectrums that I plotted for you okay so I told you there's a statistical models and physical models in the middle there is a set of

models which we call causal models okay okay causation yeah exactly that means like X implies Y and if X implies Y then what you know like basically like more structure into the the way you're designing learning systems what I understood from the liquid neural network kind of thing actually I proved theories around this thing like in my PhD thesis that liquid neural networks are dynamic causal models they are one step ahead of the statistical models that means you can understand to some extent the behavior of what goes in and comes out and you can

explain a little bit about the cause and effect of tasks inside the system not 100% but to a really good extent compared to this statistical because they're simpler they're more basic exactly they're more basic and the math itself is kind of tractable the math itself is like something that you can you can um you know you as as a as an as a technical person you you would you would be able to understand the machine now when I was telling you that we want to design the mission of liquid AI basically is to design AI

systems that we can understand and efficiently deploy in our society because we understand the math behind our systems it's not like a Transformer architecture that I just take it and scale it because it scales it it gives rise to like very nice kind of capabilities as a black box but now we are designing systems that are kind of white boxes that at every step of the of of the go we have a lot more control into how this how how these AI systems are doing decision making yeah exactly exactly and this is

where like I think there are some weird incentives to take the time to slow down if you're OpenAI Anthropic or Gemini you're working on some big project to slow down and say hey we we don't want to make this model bigger until we understand it a little bit better there's a perverse incentive here in capitalism and in this race to see who can get to AGI first or who can monetize this first and get their next version out OpenAI 5 6 7 you know Claude version 4 5 6 Gemini whatever is there not an incentive to

not slow down and not understand it um like why put Engineers if you're putting 20% of them why not put 0% of them on explanations and you know explainability why do explainability when you could just you know put more servers on it get more data and and beat everybody else that that's the perverse issue here right the the alignment of incentives but I know I know what's their incentive like let's say what's the capital for AGI the the the the market cap of AGI is 6 yeah I mean it would be the market cap of

human existence the world that's it the world that's the market cap so that's it so that's where these companies are heading at you know so they the the market like if you have AGI as you said like you can solve the energy problem you can solve if once you solve the energy problem like what I mean you are basically the most valuable company on Earth you know like think about that I mean if you can solve economy like if you can solve uh politics basically the structure of governments you know this is the thing that we

hoping to get and there's a race to getting there do you think everybody gets there at the same time like AGI feels no you don't you feel some people will get to AGI first first yes yes of course like a lot of people have I mean of course like I would say OpenAI and Anthropic would be the first bets that I would say both of them I don't know one first but I think they have a head start and they have uh a lot of kind of information in house to to to get there I don't know about Google

I don't know where where their where their um priorities are but I think the two companies are focused on really scaling AI systems into more and more kind of uh powerful uh uh beings I would say at this point I think it's it's going to be um Anthropic and OpenAI but they both have the they're both taking the approach that you can just use their system to build whatever you want on top of it so of course if it's open it's not open but it's available to people to pay for it so then

if there was the ability to I don't know figure out which stock's going to go up you might have a thousand developers realize Claude and OpenAI are great at this I'm going to make the best trader in the world to go trade stocks that's true but that's why that's why the release of those kind of huge models is still it by itself is actually like a huge challenge I would say today we haven't seen those kind of systems yet but the systems that are coming in the next two years as I was saying those

systems even the release of those systems to public it has to be a roll out it has to be like a trial and error like we really have to see how what's the reaction we internally like these systems getting massively tested you know like it's not like they're just the today they get it and then tomorrow they enable it to to to everybody to get access to right like you need to do a lot of testing of the system and see the capabilities how how they come about do you think that's why there was that chaos

at OpenAI is that maybe they felt like that next version was getting close and that's why there was sort of chaos because there was that whole sort of speculation like maybe they did feel like this thing was getting you know AGI-ish let's say do you think there I I don't know I I I seriously don't know like because I mean it's um it's all behind behind closed doors I I I I really just don't know the only thing I would say is like it might look like more of a more of a conflict like

just just on mission I would say yeah as as opposed to like how um if if the AGI has been achieved or not you know this is the open source models seem to be doing pretty strong as well do you think open source wins the day or do you think open source can keep up with the closed systems or no the the unfortunate answer is no because the closed models are usually like a lot of resources are going a lot of concentrated resources is thrown at closed source models that's like that's just a simple allocation

kind of task you know just think about like resource allocation like the massive concentration of resources and in the hand of like OpenAI and Nvidia itself like you know Google Microsoft like all of these companies right so that alone also slows down open source open source is going to always play the catch up and some the gap between the closed source capabilities and open source actually grows as well you know that's also another thing so I don't think the gap is shrinking so unless there's going to be an open source move on you

know like a more Facebook you know to their credit is moving you know has moved to open source models Apple's doing open source models so it's going to be really interesting to see if either of these can catch heat delayed open source think about it how Llama 2 Llama 2 came out delayed open source is again the same story right so Llama 2 came out as a commercial license first right and then they decided to open source now let's see how Llama 3 is getting uh released so it is it is important also like to

think about timing on the open source like moves you know it is true that some companies are just putting out like for example Mistral also played like an amazing role in the open source kind of community right like they they they put a model out but then immediately they they put the more powerful models like behind the pay wall right got it so you have to you always have to think about like what what is happening in the game and I would say the unfortunate truth is the fact that closed source models are winning amazing

all right so uh I think you're hiring and things are going pretty well for the firm if people want to join the firm where can they learn more and uh come join the liquid team yeah liquid.ai basically like there's a get involved uh section where you can today we have like around 25 uh smartest people on earth I would say it's a really crazy concentration of people we have people with um Olympiad medalists on the team like we have people that are solving literally like really complex problems for us we have on the team like

inventors of um uh very important AI technologies and uh we have uh good philosophers also in house we have uh Joscha Bach also like was part of our organization and um you know like uh it's it's it's always like it's a privilege for myself to work with such an amazing team of talent because this is this has been the power of Liquid AI we have been like very good at uh bringing in like key players into into the space to build like something from scratch a kind of white box kind of intelligence and then

hopefully scale it into something that is meaningful and again we are we are obviously hiring as well and uh well continued success with it and thanks for sharing this crazy vision and uh you know be thoughtful about releasing this stuff let's not end the world let's make life awesome for everybody and we'll see you all next time on This Week in Startups bye-bye great job so much
