How I Built an AI Agent to Create Faceless YouTube Videos (No-Code)


Simon Scrapes | AI Agents & Automation

🚀 The simplest method to produce professional faceless videos at scale, 100% automated with N8N. Ho...

Video Transcript:

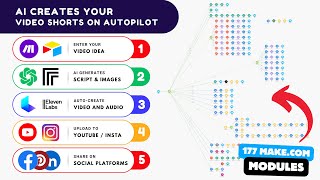

I guarantee this is the simplest way right now to use n8n to create your own faceless videos with image-to-motion. Faceless video is thriving, and today I'm going to show you the quickest and simplest method for doing it really cheaply. I'm going to jump straight into an example output so you can see exactly what you'll be able to achieve by the end of this. It's not perfect, but it's pretty phenomenal for the amount of time put in.

"From tiny microbes to civilizations, this is the story of us. Picture Earth 300,000 years ago. Our ancestors take their first steps, walking upright across the African savannah. They discover fire, crafting tools and painting stories on cave walls. But we weren't content to stay put. Like unstoppable explorers, we spread across the globe, from the..."

I'll pause it there. Like I said, it's not perfect, but you can see the potential in this. We haven't included things like captions yet, which would be a great improvement, and getting consistency across images is obviously important. But we've got motion images as well as voice, so the fundamentals are there, and this is a super simple way to do it.

We're going to start with the different tools we're using and give an overview of each. Most of you probably know ElevenLabs; it's the leader in realistic AI voice platforms. To get started, just sign up for an account and go into the app. They have some really cool features on this platform. One of them, which we'll cover separately, is voice cloning: creating a clone of your own voice, which is scarily good. We're not going to use that today; we're just going to use one of the voices ElevenLabs has already created. If we go into Voices here, you can see I've got one of my own, but there are also a bunch of different voices we can hear an example of:

"The world is round, and the place which may seem like the end may also be the beginning."

It's quite artificial and feels a little bit AI-generated, but they've got a lot of different voices, so you can test out different ones and find the one that suits your videos. So we're using ElevenLabs for the audio.

The other tool we're using is Leonardo.ai. I would describe Leonardo.ai as a Midjourney alternative that's accessible via API. It's an image generator, but the reason we're using it today is a really cool feature they call Motion, which is effectively image-to-video. I've seen a lot of faceless videos that are just made up of still images with text overlaid as captions, and they're not quite good enough to pass as not AI-generated. If we create motion from those images, we'll be able to achieve much cooler results, much closer to the paid platforms for faceless video generation, and that's what we're trying to achieve through Leonardo. Obviously we're working with n8n, so being able to access this via API is super important to us, because then we can do it all in one flow without leaving our n8n environment.

Finally, to achieve this result, we're going to go to our n8n environment. We've got a trigger here, which is an upload of our audio. What we're doing is deciding on a script beforehand, which we'll run through in a minute, generating that audio in ElevenLabs, and downloading it. We then want to generate timestamps for the script, because we need to match our audio to our visuals. The way we do that is to decide that this many words of the script take this amount of time to read, and therefore we allocate that amount of time on screen to match the voice. What we're trying to achieve is that the words and the visuals match, so when an image changes, we want the words to match the image on screen.

Once we've generated the timestamps, we're able to generate images from script prompts that match the visuals you'd expect to see with the script, using Leonardo. Those are set at, I think, between two and four seconds, so we want to change images regularly but also try to match the frequency at which the audio changes. Then, as part of the image generation, we want to put motion into those images: we take an image and make it into a video, effectively, or a motion image, so it looks closer to a video. We'll download all of that, and the final tool we're going to use is Shotstack, which we're using to merge all of our assets into a single video.

Here's what Shotstack looks like on the homepage: "scale your video creation". Effectively it's an API-accessible video editor. Again, we chose it because it's a simple solution we can use quickly to get faceless videos up, and it's fairly well priced and accessible via API, so we can do it directly from our flow.

I'm going to jump into the n8n flow now and go through exactly what we're doing at each stage. I've actually come back while recording this and updated the trigger. Before, I said we'd upload the audio downloaded from ElevenLabs, but I thought I'd make it much simpler: we can generate a script right here from the input, then generate the audio and pass it directly into the timestamp generation. We're going to run through those steps now.

We're putting in an idea here, and this could be any idea. It could be a history of time, but here we're doing a high school story about a computer science teacher teaching her students about artificial intelligence and the way it's developed over time, so something very simple and related to what I'm working on. We then have an LLM chain that takes that idea and creates an engaging script. We've told it to be one minute long and to consider incorporating elements such as storytelling and relatable content, stuff that makes it really interesting. I've also added a key point around capitals and exclamation marks. Then we just do some formatting: we remove all the line breaks from the text so it's easier to process in ElevenLabs.

We're then going to hit the first big stage, which is the audio generation. We said we were going to use ElevenLabs for this, so I'm first going to open up ElevenLabs and show you where to grab the voices from. This is what your dashboard will look like in ElevenLabs. If you hit Voices on the left-hand side, you'll have a bunch of voices that are recommended to you. If you go up here to Library, you have all the community-made voices that would be really good to use for these kinds of things. For this one we're going to do Narrative and Story, but you can have conversational, characters, animation, all of it. So let's go to Narrative and Story and listen to one of the voices here:

"Here we have a calm, well-spoken narrator, full of intrigue and wonder, for nature, science, mystery..."

You get a good idea of what that voice is like, and you can go through and test out a bunch of these. We're just going to pick this one. On the right-hand side, which you can't quite see because my screen's split (there we go), we've got View. If we go into View, it will also recommend a bunch of similar voices that we can test. But if we're happy with that voice, we can either add it to our voices or go to View, and what we need for the API call is the voice ID. So we're going to add it to our voices. I go to View here (you could have done this on the other page as well), and just below, though you can't see it on screen, there's an ID button. If you click that, you'll be able to copy and paste the ID. I've just copied it, so now we have a voice that we want to use.

We'll go back to our audio generation and do the first stage. We go into the HTTP request, and you can see I've done an example here. The URL we're posting the text, or the script, to is the ElevenLabs text-to-speech endpoint. The part at the end is actually the voice ID, so we're going to replace it now with the British voice we just heard. You'll want to set up your own API key: within ElevenLabs you can go to the API settings and create one. If I jump in here, it's one of the predefined credential types. If you go to ElevenLabs API, all you need to do is put the key into this xi-api-key field, with no other fields. We're then going to send a header, Content-Type: application/json, because we're sending JSON data with the text in it, and that's our speech being sent. Then in the body parameters we just put text, and grab json.text from the previous stage. So we're going to run that now:

"Meet Miss Rodriguez, a computer science teacher..."

You can hear that it's produced the script in the ElevenLabs voice we chose and returned it as a file.

The next key stage is turning that generated voice file into a timestamped script. The reason we do that is because we want to match our images to our audio; it wouldn't make sense if the images didn't reflect the audio or didn't change at the same time. We're going to use the OpenAI Whisper model here, and here is the endpoint. It takes in this file and posts it to the audio transcriptions endpoint, so all we're doing at this point is transcribing the audio. There are a few different parameters. We put in our OpenAI credentials here; actually we're using OpenRouter instead, which allows us to access multiple models, not just OpenAI. We send the body as form data, telling it we want to use the Whisper model, which is the OpenAI transcription model, and finally we input the data file, the binary file of the audio we want to transcribe.

The next stage is super simple: all we're doing is prompting an LLM to create image prompts for each scene. Based on the response it receives from OpenAI Whisper, it assesses the timestamps and what is said in each timestamp, and this can get really granular. It will produce an image every two to four seconds, to keep the images consistent and to make sure we have lots of images showing, not just a static image with too much text. So we're trying to get the right balance here between image swapping and dynamic images. We've told it an important part here, which is to make sure the scenes span the entire video, so don't leave any blank time between scenes. Often, if you don't give it this instruction, it will produce images for one scene and then leave gaps, or not produce one at the end, or not produce one at the start, and that causes errors. So this just helps us avoid that. What we've done is pass in the JSON object from our transcript, turned into a string so that it's easy for the LLM to process and digest.

We're going to come back to the workflow, and I'll show you exactly what those inputs and outputs look like. I've just realized this node is called "generate timestamps", which is actually not what it's generating; it's generating our image prompts, so I'm just going to update that here, live. Yeah: "imagine you're an image generation prompt producer", so it's creating our prompts. You can see that on a previous run I've split out the prompts so we can see what they look like in the output. We have a start time, an end time, and a duration for each time slot, as well as a prompt that we're going to create an image for by sending it to Leonardo next. So we've got "a modern classroom with a large projection screen displaying 'Journey into AI', with dramatic lighting and students looking engaged". It's not the most perfect prompt, but it's a basic prompt that we can send to a model in Leonardo to produce an image for us.

At this point, if you're finding the content valuable, I'd love it if you could go down below, hit the like button, and subscribe. It really helps me reach more people, and I'd love to produce more content like this if you find it valuable.

What we're going to do next is the next big stage, which is our image generation. We've already generated the audio; now we're going to generate the images to go with the audio, and the final stage will be stitching those together. We've chosen Leonardo as the image generator. Leonardo allows us to access multiple models, like Flux, through one platform, so it's an easy-to-use platform, and you can change the model ID. If I go into the HTTP request here, you can see it receives all the prompts, the start times, and the end times. For each prompt we're generating an image, and the output here is our generation ID, which we're going to use to pull the images and download them. It also shows us our API credit cost.

Inside here we're calling the generations endpoint to generate images, using our Leonardo header auth; we'll show you how to get that in a second. We're assigning a height and a width, 1280 high and 720 wide, which produces a Shorts-style video. That's what we're looking to produce, because we're doing 60-second Shorts, so we're using the Shorts format. We pass in the prompt, and then finally we get to choose which Leonardo model ID we use. You can go to the Leonardo docs, at docs.leonardo.ai, which is the API documentation, and you can see under image generation that you can use any of these models. We decided to use Flux. It gives you examples under each, and for Flux, for example, we can scroll down and see that to use the Speed, or Flux Schnell, model we need this model ID. We copy that, take it back into here, and insert it. You can see we were already using Flux, but that is the model ID there, so you're able to change it if you find that another model produces better images for you.

Once we do that, we just need to wait. Obviously these things take time, and we can only generate so many images at once, so we wait 30 seconds before retrieving the output. Images are fairly quick, so we just need to wait 30 seconds for our 24 images to be generated. We then call the generations endpoint, passing in the generation ID we received from the previous step. You can't see it on the left-hand side here because I've pinned the data and we're just looking at an example run. We're actually doing a GET request, so this is incorrect, and we don't need to pass a body. We only need to pass a body in a POST request; if we're not sending any data and we're just retrieving it, we just need a GET request. So we're retrieving the generated images, and you can see it returns the URLs of our images here. We'll open one as an example. This is actually the one we were looking at the prompt for: it's got "Journey into AI" on the screen at the front of a classroom, exactly as we prompted it. Good.

With these done, I'm going to go back out to the canvas. The next big phase is turning those images into motion images. This is a really, really cool thing you can do through Leonardo; it's effectively image-to-motion. Here we've gone to app.leonardo.ai and clicked on Motion, and you can see exactly what we're doing. People are able to upload an image (we've just created a bunch of images that we're going to do this to), and Leonardo returns a motion image. You can see this guy was just an image, and now it's showing him walking along the street. We've got Jesus at the beach, a lion's mane mushroom, a cat driving a car, all sorts. So we can create really cool animated shots, and this levels up our static images to look like they were actually shot as video. You'll see at the end that the video quality is much better than static images with some text overlaid and some audio.

We're going to come back to our n8n environment and see what was generated when we generated the motion. We've done the image generations, and we're now calling a different endpoint to generate these motion images: generations-motion-svd. All of these have the same base URL up to v1, I believe. We're posting our image data using a JSON body, with the image IDs from the images we generated, so we drag the ID over here, and for each image we generate a motion. We're using a motion strength of three. We can play around with this in Leonardo.ai; I think it's a value from one to five, if I remember correctly, and it changes the image output. And we're happy for it to be a public image that others can see as well. This again outputs a generation ID and our API credit cost. You can see that this is significantly higher: this was 45, and image generation was four, I think, so it's about ten times higher, but it creates really high-quality images.

In another video I'm going to explore the different options for image-to-motion, so if you're looking for more of these faceless video creation systems and automations, please subscribe below, because I'll do a review of the different models that help generate motion from images.

We then obviously wait a period of time. This takes longer, so we're now waiting five minutes before requesting our motion generations, and we can see at the URL one of our motions, generated for the first image. Let's look at the ones we downloaded. The next HTTP request takes those generated motions and, like we did with the images, downloads the MP4 files. We've opened one here, and you can see that the output is a motion image just four seconds long, because we're switching frames quite quickly. It's a motion file that we're going to piece together with the audio.

So that's step three completed. We've generated the audio, we've generated the images, and we've turned those images into video. We now need to piece those together, which is the final step in creating an actual motion video. We've got everything working up to here, so we take it to the final stage, using our final tool, Shotstack, which is effectively an API-accessible video editor. If we go into the HTTP request for Shotstack, all we're doing is combining the audio we generated earlier with the visuals we generated earlier, and also inputting the timestamps.

If we have a quick look here, we've got this api.shotstack.io endpoint, and what we're doing is rendering a video. This is just a standard video and a standard endpoint; we don't need to customize it in any way, because we're just combining audio and visuals. We put in our header auth; if you head to Shotstack and set up an account, you'll be able to access your own API key in your settings. I won't run through that here. We then make a POST request with the different elements of the JSON. This looks more complicated than it is: we're sending a few different values that form a timeline and an output that we want created as an MP4 video with dimensions of 720 by 1280, which, as we discussed before, are the Shorts dimensions. That just tells Shotstack how to produce the output.

As for the inputs we're adding, the first is the audio of the script from ElevenLabs that we defined earlier, so we pull that audio in here. Then there's the larger part, which looks confusing but is relatively simple; you can see on the right-hand side what it actually is. We've pulled the information from our aggregate node, and we're saying: for each item in this list (because we've combined all of our motion images), we're going to add a video. We pull the video from the URL, this URL here, and the start time is pulled from earlier, where we generated our image prompts. If we go back to the node down here on the left, we've got "generate images using Leonardo"... oh no, not that one, sorry, all the way back here to "generate image prompts". All you're pulling from "generate image prompts" is the start time and end time, and that helps Shotstack understand the different clips and compile the video. So on the right-hand side, all we've done is map a list of items. I wouldn't worry too much about this code; you can copy it exactly as it is. It pulls the dynamic inputs from our named nodes, and you can see it's pulling the image source, the start time, and the length, which is what lets Shotstack produce the video.

We've then got another node that just waits one minute for the Shotstack video to be created, and finally we send a request to Shotstack with the ID from the response, just to see whether it's ready.

And it looks like we've got some results. We've polled Shotstack for the response, and it says we're on the free trial plan. What that means, by the way, is that until you upgrade to a paid plan, your videos will have a Shotstack watermark. So we can produce as many videos as we want for free, but they will have a watermark on them, which makes it really good for testing. It's given us all the different details of the video and what it's compiled for us. Super cool. All we need to do then is go to the URL from here, so json.response.
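The instruction in the image-prompt step (scenes must span the entire video, with no blank time between them) can also be checked in code before any image credits are spent. The following is a minimal sketch, not the workflow's actual code: the field names `start` and `end`, the function name, and the tolerance are assumptions for illustration, and the 2 to 4 second bounds come from the scene length mentioned in the transcript.

```python
from typing import Dict, List


def find_scene_problems(
    scenes: List[Dict[str, float]],
    audio_length: float,
    min_len: float = 2.0,
    max_len: float = 4.0,
    tol: float = 0.01,
) -> List[str]:
    """Return human-readable problems; an empty list means full coverage.

    Each scene is a dict with hypothetical keys "start" and "end" (seconds).
    """
    problems: List[str] = []
    cursor = 0.0  # end of the time range covered so far
    ordered = sorted(scenes, key=lambda s: s["start"])
    for i, scene in enumerate(ordered):
        start, end = scene["start"], scene["end"]
        # Blank time between the previous scene (or t=0) and this one.
        if start - cursor > tol:
            problems.append(f"gap from {cursor:.2f}s to {start:.2f}s")
        # Scene length outside the intended 2 to 4 second window.
        length = end - start
        if not (min_len - tol <= length <= max_len + tol):
            problems.append(f"scene {i} lasts {length:.2f}s")
        cursor = max(cursor, end)
    # Blank time after the last scene.
    if audio_length - cursor > tol:
        problems.append(f"gap from {cursor:.2f}s to {audio_length:.2f}s")
    return problems


if __name__ == "__main__":
    scenes = [{"start": 0.0, "end": 3.0}, {"start": 4.0, "end": 7.0}]
    for problem in find_scene_problems(scenes, audio_length=8.0):
        print(problem)
```

This sketch only reports gaps and out-of-range lengths; overlapping scenes pass silently. If the check fails, one option is to re-run the LLM step rather than patching the timings by hand.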

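The Shotstack request body described in the transcript (one audio track, a list of clips each with a source URL, a start time and a length, and a 720 by 1280 MP4 output) might be assembled roughly as below. This is a sketch under stated assumptions rather than the code shown in the video: it uses the `timeline` / `tracks` / `clips` / `output` layout of the Shotstack Edit API as I understand it, attaches the narration via `soundtrack` (the video may attach it differently), assumes the audio is already hosted at a URL, and the input keys `url`, `start`, and `end` are hypothetical.

```python
import json
from typing import Any, Dict, List


def build_shotstack_edit(
    audio_url: str,
    clips: List[Dict[str, Any]],
    width: int = 720,
    height: int = 1280,
) -> Dict[str, Any]:
    """Build a render request body from motion clips and their timings.

    Each item in `clips` uses hypothetical keys: "url" (MP4 location),
    "start" and "end" (seconds, taken from the image-prompt step).
    """
    video_clips = [
        {
            "asset": {"type": "video", "src": clip["url"]},
            "start": clip["start"],
            # Shotstack clips take a length, so derive it from start/end.
            "length": round(clip["end"] - clip["start"], 3),
        }
        for clip in clips
    ]
    return {
        "timeline": {
            "soundtrack": {"src": audio_url},  # assumption: narration as soundtrack
            "tracks": [{"clips": video_clips}],
        },
        "output": {
            "format": "mp4",
            "size": {"width": width, "height": height},  # Shorts dimensions
        },
    }


if __name__ == "__main__":
    edit = build_shotstack_edit(
        "https://example.com/narration.mp3",
        [
            {"url": "https://example.com/scene-1.mp4", "start": 0.0, "end": 3.5},
            {"url": "https://example.com/scene-2.mp4", "start": 3.5, "end": 7.0},
        ],
    )
    print(json.dumps(edit, indent=2))
```

The function only builds the dictionary; sending it is left to the HTTP request node (or any HTTP client) with the API key header from your Shotstack account.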