Bioinformatics Pipelines for Beginners

9.93k views, 7601 words

OGGY INFORMATICS

In this video, I discuss what bioinformatics pipelines are, the common steps involved in building th...

Video Transcript:

Hello and welcome back to the channel. In today's video we're going to be talking all about bioinformatics pipelines. We'll cover what they are and what they're used for, the common steps in a bioinformatics pipeline, and the different ways you can build them. Finally, I'll guide you through an example of how you can build your own. So without further ado, let's get into the video.

So firstly, what is a bioinformatics pipeline? Put simply, it's a series of orchestrated data processing steps where each step builds on the previous one. In a bioinformatics analysis context, these steps might include initial raw data pre-processing, data analysis, statistical tests, and finally result interpretation and visualization.

But why do we need a bioinformatics pipeline? The scale of data we handle in bioinformatics is vast. We're often dealing with entire genomes or proteomes, or the complex interactions between them, and processing that volume of data by hand is neither feasible nor efficient. That's where pipelines come in. They let us automate the process and handle the massive influx of data in a systematic, step-by-step manner.

Besides handling large data, another significant advantage of pipelines is the reproducibility they offer. In research it's crucial that our results are reproducible, meaning other researchers can follow the same steps and reach the same conclusions. Pipelines document each step of the data processing journey, so our work is transparent and others can reproduce it accurately. To summarize, a bioinformatics pipeline is an organized, automated system that lets us process large-scale biological data and achieve reproducible results.

Now that we understand what a bioinformatics pipeline is and why it's used, let's look at the typical steps you might find in one. We'll use a genomic sequencing pipeline as our guiding example. The steps vary depending on the type of data and the goals of your project, but usually we start with data collection.

Step one is data collection. The first thing we need to do is collect our raw data. In a genomic sequencing pipeline, this data usually comes from a sequencing machine and consists of short DNA sequences, often referred to as reads.

Step two is usually data pre-processing. Once we have our raw data, it's time for some cleaning. This stage includes quality control, where we check the quality of our reads and remove or correct any low-quality ones. Another critical step is read mapping, where we align our reads to a reference genome.

Step three is data analysis. With our pre-processed data in hand, we can move on to the main analysis. In our genomic sequencing example, this stage includes variant calling.

Step four is interpretation and visualization. After identifying variants, the next step is variant annotation, where we add information to each variant about its potential effects. Finally, we often want to visualize our results to aid interpretation. This could be a plot showing the distribution of variants across the genome, or a table summarizing the potential effects of each variant.

So as we move through a bioinformatics pipeline, our data is transformed from raw reads into meaningful insights about genomic variation. The specific steps in your pipeline might vary depending on your project's needs, but the overall structure will likely stay consistent across projects.

You might be wondering why we call this process a pipeline. Consider a water pipeline. At the start you have a source, which is like our raw data. As the water flows through the pipeline, it passes through various stages, such as filters, pressure regulators and branching pipes. Each performs a specific function so that the water reaches its final destination in the desired state. In a similar way, our raw biological data enters the bioinformatics pipeline and flows through distinct stages of processing, and each stage refines and enriches the data. By the time the data has flowed through the entire pipeline, what began as a raw and somewhat chaotic flood of information has been harnessed and turned into a steady stream of valuable, actionable insights.

Right, now let's talk about how to actually build a bioinformatics pipeline. There are a few different ways, so let's start with the basics. A bioinformatics pipeline can often start with simple scripting languages you might already know, like Python, R or Bash. At its core, a pipeline is a sequence of tasks where each task processes data and passes it on to the next, and each task can be performed by a script. For instance, you might have a Python script that pre-processes your data, an R script that performs a statistical analysis, and another Python script that visualizes the results. You can then call all of these scripts from a Bash script that orchestrates the pipeline. The great thing about this approach is that it uses the coding skills you already have, so there's no need to learn a complex new tool before you can start building your pipeline.

One thing to note is that with this method it's important to design your scripts to be command-line friendly. What does this mean? Each script should take its input directly from the command line and produce an output that can be passed automatically to the next step. You can do this by saving the output to a file that the next script reads from. In some cases you can instead pipe the output directly from one script to another inside your Bash script. Either way, your pipeline runs smoothly from one step to the next. If you're unsure how linking scripts together actually works, don't worry. At the end of this video I'll take you through an example where you build your own simple pipeline this way.

This approach is very powerful, but it has limitations too. As your tasks become more complex and your data grows, a set of linked scripts may no longer be enough. That's where more advanced tools come in, which we'll talk about next.

The next way to build bioinformatics pipelines is with a workflow management system. As our tasks grow in complexity and our datasets grow in size, we need tools designed to tackle those challenges. Workflow management systems are built specifically for managing complex, data-intensive computational pipelines, and they can dramatically streamline how a pipeline is created and executed. The most common ones in bioinformatics are Nextflow and Snakemake.

So why might you choose these tools over your familiar, comfortable set of linked scripts? The advantages are numerous. Firstly, they're modular. Think of each task in a bioinformatics pipeline as a building block with a specific function, like quality control or data analysis, that can be reused across projects. In workflow management systems these blocks are called modules. Instead of writing a new script for every project, you write a module once and plug it into any pipeline that needs that task. This saves you valuable time and gives you a standard, consistent approach to each task, which is crucial in bioinformatics.

Secondly, they handle large datasets with ease. As you may well know, bioinformatics deals with large and complex datasets. These systems are designed with such data in mind and manage the flow of data efficiently from one step to the next.

Thirdly, workflow management systems are built with error handling in mind. If something goes wrong, your pipeline can fail gracefully, which saves you from the frustration of unpredictable crashes.

Another major advantage is reproducibility.
This is really important in bioinformatics. These systems require you to define each step of your process explicitly, so anyone can replicate your pipeline and get the same results. They also provide extra features for tracking your pipeline's performance. I personally use Nextflow a lot, and I really like the summary reports you can get from it. These include nice visualizations of things like CPU usage, RAM and runtime for each step, which help you optimize the pipeline and manage resources efficiently. Plus, many of these systems come with pre-made pipelines for common bioinformatics tasks that you can use as a starting point for your own work. Say you wanted to run a whole genome sequencing analysis. You wouldn't have to build the pipeline from scratch. You could use one of the pre-built pipelines as is, or take it as a starting point and customize it with any extra features you need.

One thing to remember, though, is that these systems have a steep learning curve. They can seem intimidating, especially if you're new to bioinformatics, but don't let that put you off. If you're going to build pipelines regularly, I do recommend learning one of them. In the long run they save you a lot of time and help you manage your data and projects more efficiently.

Moving on, the third and final approach to building bioinformatics pipelines is a graphical user interface, or GUI. A GUI like Galaxy offers a visual, intuitive way to construct and manage bioinformatics pipelines. For people who are new to coding or find it a bit intimidating, GUIs provide a user-friendly alternative. This can be useful for wet lab biologists who aren't as confident with code. In Galaxy you can drag and drop components to create a pipeline. It's an open-source platform where you can create, run and share bioinformatics workflows. It gives you access to numerous bioinformatics tools that you can use directly through the interface, without writing any code yourself. You select the tool you want, provide the input, and Galaxy executes the task for you. It's a bit like having a team of mathematicians working behind the scenes, running commands based on your instructions. Galaxy also has built-in data handling, so you can work with large datasets with ease. One of Galaxy's great features is reproducibility: it tracks every step you take, so you or anyone else can replicate the workflow exactly. That said, while GUIs like Galaxy can be incredibly useful, they might not offer as much flexibility or control as scripting or a workflow management system. They're fantastic for getting started and for standard tasks, but for more complicated or customized pipelines you may find other methods more suitable.

So, in conclusion, the method you choose for building a bioinformatics pipeline depends on several factors: your coding skills, the complexity of your tasks, and the resources at your disposal. Whatever method you choose, the key is to understand your data, define your tasks accurately, and make sure your workflows are reproducible.

Okay, in the second half of this video I'm going to take you through building your own simple pipeline using the first method I described, which is linking together command-line scripts within a Bash script. The Bash script acts as a kind of container that holds all your other scripts. The example I'm going to show you is very simple, and probably not something you'd actually run in practice. I'm doing that on purpose, because I want something easy to understand. The focus here is on how to build the pipeline, not on the bioinformatics going on inside it.

In this example we'll build a pipeline with three steps. First, it downloads a gene expression dataset. Second, it calculates the mean expression of each gene across all the samples. Finally, it visualizes the results with a histogram. That means we'll create four scripts: one for each of the three steps, plus a Bash script that holds them all. So we'll have an R script to download the gene expression data, another R script to calculate the mean expression for each gene, a Python script to create the histogram, and the Bash script that runs all of the above.

You might notice that the first two scripts are both R scripts, and wonder why we can't just use a single R script to download the data and calculate the mean expression. The answer is that we can. I'm using two separate scripts because it's best practice when building these pipelines to split different functionality into different scripts. When I first started building pipelines with linked scripts, I tried to pack as much as I could into a single script. I'd have an R script that downloaded the data, did some pre-processing and input validation, ran all sorts of statistical tests and manipulations, and then did the visualization at the end. That script would be hundreds of lines long and almost impossible to debug. So easier debugging and cleaner code are one reason.

There are other reasons too. One of the main ones is that separate scripts make your code more reusable. Earlier I said workflow management systems are modular, and this is the same idea. By giving each task its own script, we make the pipeline modular, so we can take a script and reuse it in another pipeline. Say we had another pipeline that downloaded gene expression data in the same way but had a different downstream process. We could reuse this first script in that new pipeline. If we had combined the download and the mean calculation into one script, we couldn't reuse it, because the new pipeline does something different in the second step. So if you're going to be building a lot of pipelines, keeping your code modular is just good practice.

Okay, now I'm going to take you through each of those four scripts. To recap: an R script to download the data, a second R script to calculate the mean expression, a Python script to plot the output, and the Bash script that acts as the container for all the others. Those are the four scripts in this scripts folder here. I'll link my GitHub in the description, so if you want to run this pipeline yourself or just look at the code, you can find it there.

Let's start with the Bash script. As I said, this script contains all our other scripts, so it's the main pipeline script. We've called it run pipeline, and like any Bash script it has the file extension .sh. I'm going to assume you have minimal experience building pipelines, so I'll try to explain everything as well as I can.

The first thing at the top is what's called a shebang line. Don't worry that it's shown in red; that's just a setting in my text editor. Any file that is going to be executable has a shebang line. What do I mean by executable? It means you run the script directly as a command-line program. I'm in the terminal down here, and if I list what's in this folder, you can see our four scripts. If I run the pipeline script by typing dot slash followed by the script's name, which is how you run a Bash script this way, I'm running it as an executable. Any file you call directly from the command line like that needs a shebang line at the top. The shebang line tells the operating system that this is a script and what it should use to run it. In this case it says: this is a script, use Bash to run it. If I quickly show you my Python file, you'll see the same kind of line saying use Python, and in our R files it says use R. So just make sure that line is at the top of any file you're going to run as an executable.

Next, we set some variables. These highlighted lines set two variables from command-line arguments, because this Bash script takes two arguments. The first is a directory, which is where the pipeline's output is saved. The second is a dataset ID: a GEO (Gene Expression Omnibus) ID for the dataset our pipeline is going to download. So, to recap, we have the shebang line, and then two variables whose values come from the command line.

Let me show you what that looks like. When we run the pipeline, we type dot slash and the name of the file, as I mentioned, and then we supply two arguments: an output directory and a dataset ID. Whenever a Bash script, or any script, takes command-line arguments, you put a space between the file name and the first argument, a space between the first and second arguments, and so on. When we run this, the shell sees the file to run followed by two command-line arguments.

Now if I show you the bottom of the script, this is where we run the three scripts: the R script to download the data, the R script to calculate the mean expression, and the Python script to make the plot. Each of these scripts takes its own command-line arguments. Just as we pass arguments to the Bash script when we run the pipeline, the Bash script passes some of those variables on to our R and Python scripts.

Okay, we're now going to run the pipeline, but first I'm going to activate a conda environment. Conda is a package manager, and I'll talk more about best practices for managing packages in pipelines at the end of this video. For this simple example I've just used a conda environment. It's a way of keeping all the packages you use in a project together in an isolated environment. Packages and programs come in many different versions, and if you use different ones you might get different results. For example, if you run a pipeline with Python 3 instead of Python 2, you might get errors or a different result, and there are tons of examples of this happening. As I mentioned, reproducibility is very important in bioinformatics, so we need to keep track of all the packages and software versions we use. So I'll activate this conda environment, and you can tell it's active because its name now appears at the start of the prompt.

Now let's run the pipeline. First comes the script itself: dot slash and then our Bash script. Second is the folder where we want the pipeline output to go. In my case that's one level up from the current directory, and let's call it pipeline out. Then comes the second argument, the ID of the GEO dataset. I'll explain what that means later on, but I think it's best to show you the pipeline working before we go through the other scripts. Once you've typed that, press Enter, and you should see a lot of output in the terminal as it works through the different steps. You'll also see up here that it has created a new pipeline out folder, which is the folder we set as our output directory. As you can see, it worked. We've got three outputs: the expression data, the mean expression, and this histogram. This is not a complex pipeline by any means, but again, the point is to show you how to build pipelines and how they work.

Now I think the best next step is to go through the rest of the scripts, starting with the download data script. In our Bash script we call it with Rscript. To call an R script from the command line you put Rscript in front of it, and to call a Python script you put python in front of it. So the syntax is Rscript, then the name of your script (here, the download data .R script), and then any command-line arguments. Remember, our scripts have to be command-line scripts so they can take input from a previous step or from somewhere on the file system.

So let's look at download data. As I mentioned, there's a shebang line at the top telling the operating system this is an R script that should be run with Rscript. I won't go into too much detail, but at a high level, here's what it does. First it loads some libraries. Then it checks that the command-line arguments have been supplied. We need two arguments here, the output directory and the dataset ID, so the script checks that both were provided and throws this error if they weren't. Error handling is another important part of building pipelines, and again we'll talk about it near the end. Next, it takes the arguments from the command line and stores them in variables we can use in the rest of the code. It then calls getGEO, a function from the GEOquery library that pulls data directly from the Gene Expression Omnibus. All you have to provide is the dataset ID as a string, and it pulls that gene expression set into your code. Then we check whether the download worked. If you provide a dataset ID that doesn't exist, it will probably throw an error, so if the download fails we send a message back to the user, which again helps with debugging. In our case, we then take the first dataset, round the expression values, and write them to a CSV called expressiondata.csv. If we look in our pipeline out folder over here, we can see our expressiondata.csv file. So that's what the first script does.

Before we look at the second script, let me go back to our Bash script. The second script is another R script, called calculate mean expression, and it calculates the mean expression. It takes one argument, the output directory, which is the same one we supplied to the Bash script and to the previous R script. So let's open calculate mean expression. Again, there's a shebang line at the start telling the operating system this is an R script. It loads the packages, checks that a command-line argument was provided, and stores that argument in the outdir variable. It then reads in the expression data, which we created in the first R script. Finally, it calculates the mean across each row and writes the result to a new file called meanexpression.csv, which again is here in our pipeline out folder.

Okay, the last piece is the Python script. In the Bash script we write python, then the name of our Python file, then any command-line arguments the script requires. Again, we supply the output directory argument. Here's what it looks like. There's a shebang line at the start, and then it imports the required packages. It checks that we've supplied a command-line argument and stores that argument in a variable, which it then uses to read in the data. Here you can see it reading the meanexpression.csv file from the output directory, which is our pipeline out folder. It then plots the mean expression and adds some labels. Finally, it saves the plot to the output directory as the mean expression histogram .png file, which is this file right here.

So again, that's a very simple pipeline, but I hope you now have an idea of how these work. Just one thing before we move on. If I list the files in this folder, you can see that the run pipeline Bash file is shown in green. The color itself isn't important, but it's that color because the file is executable. When you first create this Bash script, it isn't executable by default. Remember, we said an executable file is one you can run directly from the command line: instead of running it line by line in a terminal, you run the whole file at once, which is how we run our run pipeline script. But the first time you create the Bash file, it won't be executable, so I want to draw your attention to this part over here. If you're on Linux and you want to list the files in a directory, you use ls.
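On Linux, a newly created script is normally made executable in the terminal with `chmod +x`. As a minimal sketch, the same permission change can be made in Python with the standard `os` and `stat` modules. The sketch also refuses to mark a file that has no shebang line, because without one the operating system doesn't know which interpreter to use when the file is run as `./script`. Any file name used with it, such as `run_pipeline.sh`, is hypothetical and based on the "run pipeline" script in the walkthrough.

```python
import os
import stat
from pathlib import Path


def make_executable(script: Path) -> None:
    """Add execute permission for user, group and others, like `chmod a+x`."""
    # Refuse files without a shebang line: when run as ./script, the OS would
    # not know which interpreter (bash, Rscript, python) should execute it.
    with script.open("rb") as handle:
        if handle.read(2) != b"#!":
            raise ValueError(f"{script} has no shebang line")
    mode = script.stat().st_mode
    script.chmod(mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)


def is_executable(script: Path) -> bool:
    """Return True if the current user is allowed to execute the file."""
    return os.access(script, os.X_OK)
```

For example, `make_executable(Path("run_pipeline.sh"))` (hypothetical file name) starts from typical `rw-r--r--` permissions and leaves the file as `rwxr-xr-x`, which is what `ls -l` would then report.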
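In the walkthrough, the second step is an R script. It checks for an output-directory argument, reads the expression table written by the download step, and writes the mean of each row to a new file. Below is a minimal Python sketch of the same command-line-friendly pattern; it is not the author's R code. It makes two hypothetical assumptions: the input CSV has a header row, a gene identifier in the first column, and one numeric column per sample; and the file names are the ones heard in the transcript (expressiondata.csv, meanexpression.csv), which may not match the real repository.

```python
import csv
import sys
from pathlib import Path

# Hypothetical file names, taken from the transcript; the real scripts may differ.
INPUT_NAME = "expressiondata.csv"
OUTPUT_NAME = "meanexpression.csv"


def calculate_mean_expression(outdir: Path) -> Path:
    """Read the expression table from outdir and write one mean per gene (row)."""
    in_path = outdir / INPUT_NAME
    out_path = outdir / OUTPUT_NAME
    with in_path.open(newline="") as src, out_path.open("w", newline="") as dst:
        reader = csv.reader(src)
        writer = csv.writer(dst)
        next(reader)  # skip the header row (gene ID column + sample columns)
        writer.writerow(["gene", "mean_expression"])
        for row in reader:
            if len(row) < 2:
                continue  # skip rows that have no sample values
            values = [float(v) for v in row[1:]]
            writer.writerow([row[0], sum(values) / len(values)])
    return out_path


def main(argv: list[str]) -> int:
    """Command-line entry point: expects exactly one argument, the output directory."""
    # Fail early with a clear message, like the argument checks in the R scripts.
    if len(argv) != 2:
        print(f"usage: {argv[0]} <output_directory>", file=sys.stderr)
        return 1
    outdir = Path(argv[1])
    if not (outdir / INPUT_NAME).exists():
        print(f"error: {outdir / INPUT_NAME} not found; run the download step first",
              file=sys.stderr)
        return 1
    print(f"wrote {calculate_mean_expression(outdir)}")
    return 0
```

Saved as a standalone script, this would end with `if __name__ == "__main__": sys.exit(main(sys.argv))`. The Bash script would then call it as `python calculate_mean_expression.py "$outdir"` (hypothetical names). The non-zero return value on bad input lets the calling script notice that the step failed.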
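The Bash script in the walkthrough runs each step in order. It passes the output directory to every step and the dataset ID to the download step. The same orchestration idea can be sketched in Python with `subprocess.run`. This is an illustrative alternative, not the author's run pipeline script. The step commands and script names (such as download_data.R) are hypothetical, and so is creating the output directory up front. `check=True` stops the run at the first failing step, similar to what `set -e` gives you in Bash.

```python
import subprocess
import sys
from pathlib import Path


def build_steps(outdir: str, dataset_id: str) -> list[list[str]]:
    """Hypothetical commands mirroring the three steps in the walkthrough."""
    return [
        ["Rscript", "download_data.R", outdir, dataset_id],
        ["Rscript", "calculate_mean_expression.R", outdir],
        # Use the current Python interpreter for the plotting step.
        [sys.executable, "plot_histogram.py", outdir],
    ]


def run_pipeline(steps: list[list[str]], outdir: str) -> None:
    """Run each step in order; raise CalledProcessError at the first failure."""
    Path(outdir).mkdir(parents=True, exist_ok=True)  # assumption: created up front
    for number, command in enumerate(steps, start=1):
        print(f"[step {number}/{len(steps)}] {' '.join(command)}", flush=True)
        # check=True raises if the step exits non-zero, so later steps never
        # run on missing or partial output from an earlier one.
        subprocess.run(command, check=True)


def main(argv: list[str]) -> int:
    """Command-line entry point: <output_directory> <geo_dataset_id>."""
    if len(argv) != 3:
        print(f"usage: {argv[0]} <output_directory> <geo_dataset_id>", file=sys.stderr)
        return 1
    outdir, dataset_id = argv[1], argv[2]
    try:
        run_pipeline(build_steps(outdir, dataset_id), outdir)
    except subprocess.CalledProcessError as err:
        print(f"pipeline stopped: {err}", file=sys.stderr)
        return err.returncode
    except FileNotFoundError as err:
        print(f"pipeline stopped: command not found: {err.filename}", file=sys.stderr)
        return 127
    return 0
```

Each step only communicates with the next through files in the output directory, so any step can be swapped out or reused in another pipeline. That is the modularity argument made in the walkthrough.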

Related Videos

28:19

5 Steps to Transitioning Into Bioinformati...

OGGY INFORMATICS

26,308 views

14:22

Transcriptomics 1: analyzing RNA-seq data ...

OmicsLogic

39,900 views

16:26

Introduction to Graph Theory: A Computer S...

Reducible

564,037 views

13:30

Learning BIOINFORMATICS in 2023 - What I w...

Genomics With Georgia

19,458 views

19:15

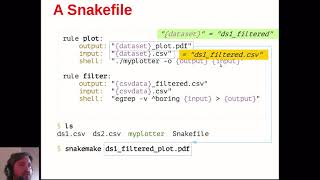

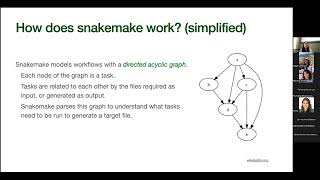

Introducing Snakemake

Edinburgh Genomics Training

14,630 views

1:44

Top Recommended Bioinformatics Projects 2...

Future Omics Bioinformatics made easy

1,727 views

17:40

Five steps for getting started with bioinf...

OMGenomics

88,523 views

8:13

Bioinformatics for Beginners

OMGenomics

26,907 views

50:46

An introduction to snakemake: a tool for a...

CompBio Skills Seminar UC Berkeley

1,251 views

17:50

4 things you MUST do before STARTING your ...

Genomics With Georgia

6,654 views

20:36

bioinformatics ROADMAP + Q&A

agenomicsphd

48,271 views

13:51

Wrapping a bioinformatics tool in Docker -...

Joshua Orvis

335 views

21:33

Bioinformatics project ideas

OMGenomics

39,285 views

48:41

Bioinformatics - Snakemake Workflow Manage...

Alex Soupir

8,386 views

1:16:29

Simple RNA-Seq Pipeline - Nextflow Worksho...

Nextflow

11,563 views

15:14

nf-core Nextflow Tools: Ultimate Guide to ...

BioinformaticsCopilot

804 views

22:36

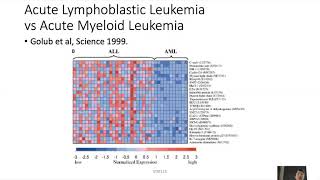

2020 STAT115 Lect1.1 Bioinfo History

Xiaole Shirley Liu

46,257 views

10:02

Bioinformatics in Python: Intro

rebelScience

151,119 views

56:23

Genes and geography -- a bioinformatics pr...

OMGenomics

29,747 views

30:12

An introduction to Snakemake tutorial for ...

Riffomonas Project

17,088 views