AI Vector Search in Oracle

313 views11881 WordsCopy TextShare

Jignesh Patel

AI Vector Search in Oracle Database is a new, transformative way to intelligently, efficiently, and ...

Video Transcript:

all right let's get started for today uh we have an amazing speaker Shashank chaan who's been uh at Oracle for a while he is uh VP there and is leading their inmemory and AI products today he's going to talk about some exciting new additions to Oracle which is adding support for Vector databases and as we know you can be a database company today if you don't support vectors natively so really looking forward to seeing what uh Shashank has to say on this topic so with that keep the intro short and sweet and Shashank the floor

is yours all right hey thank you thank you so much for having me I really wish I could have come in person but we we chose you know Veterans Day and for for the the the lectur and fortunately my kids are off so I wasn't able to come in person but okay so um yes seon um I'm gonna you know spent about 15 minutes going over through some overview of vector search I suspect many of you are with this already but it's good to have that that introduction this is our Safe Harbor statement making sure

that if I say something I shouldn't have said then I'm not liable saying it um okay so let's get started here so first off what is Vector search right Vector search is this new technology that really enhances information retrieval by allowing you to map your queries in natural language to the relevant data in your database and it does this based on semantics and not the precise matches that you're familiar with when you're doing a a database search so it's doing it based off on semantics or the underlying meaning of the content and using vectors to

measure that similarity so let's go through like an example here right on the left hand side you have this unstructured content you have video you have documents you have pictures and those unstructured content go through an embedding model that basically generates a vector numbers and those numbers are called Dimensions those Dimensions captured the important features of that data they overall the vector represents the semantic content the underlying meaning of that image that document whatever not the actual words in the document or the pixels in the image right so here's a u a real world example

where you know in in the real world in Enterprise world right we have customers that have support incident files these are documents people find bugs and in this support incident file you have all sorts of information like the representative name her email address the issue being a laptop the severity issue so forth all of these attributes can conceptual conceptually mapped to attributes in the vector so the laptop represents the product and it has some value of 33 the severity one attribute has a severity feature and it has another value and so you have this sort

of mapping from the unstructured content this document to a vector and now you can do some math on that Vector to determine if this support support instant file is similar to another support incident file that's being recorded so let's take a look at that um the main similarity the main excuse me operation on vectors is the mathematical distance between them so you know everybody's familiar with the distance between two points in a in a your plane here this is just multi-dimensional vectors right and so it's the same concept it's just the ukian algorithm it's the

pyam THM that you guys are familiar with but there are many different ways to compute the distance there's ukian there's cosine there's Hamming there's Manhattan there's Su of which we'll all we support all of those in our in our solution but let's go back to this example with this support incident file so let's suppose that the support incident vectors are collapsed into two Dimensions right let me move this I cannot see this there we go um so in this view I have uh two support incident files for laptops running slowly and you'll notice that they're

position close together and they're position close together indicating that they're similar to each other versus a support incident file for a desktop that's crashing would be much further away from the laptop incidents and so fundamentally again the closer these two vectors they are are the more similar their underlying content is so it's it's as simple as that now similarity property is what poers AI Vector search so let's look at a typical pipeline you begin with a question okay you're searching for something image document text something that question that you're searching for gets embedded into a

vector using an embedding model and there are thousands of embedding models out there um and talk about you know which ones should be chosen and how you determine that but you choose an embedding model you create a vector and now what you're doing is you're searching for vectors that are similar to your vector that you're searching for in your database in your Corpus of vectors here and remember those vectors map to do documents content that you have in your Corpus so when you're doing is you're going to do that mathematical distance computation with your qu

Vector with all the other vectors and you find the close closest vectors the top K matches top five top 10 whatever the top 10 top five closest vectors then correspond to the the documents right the top K matches the top K documents okay so that's this the the basic semantic search pipeline involving vectors now why is Oracle adding AI Vector search aren't there dozens of vector databases out there already and the very simple answer to that is we believe that you should run vector to search where your business data lives and I'll go through an

examples why that's important so let's suppose you know on the left hand side here you have your business database let's let's say it's Oracle database okay that's all your operational data resides over there and on the right hand side is you have a vector database like pine cone or weate or something like that now to perform semantic search on that Vector database one solution is you have to continuously send that data over to your Vector database so in our example we use that support incident document right You' have to send that document over it would

have to be vectorized and then the vector would be stored in the vector database but you can't stop there you need to also send more data your business data like the product data or the customer data these are all tables that are sitting in your business database and the reason why you need to send that is because you can't really predict the question that's going to be asked the question not just going to be hey find me documents similar to this document it's going to be something like find me documents similar to this document and

the product is equal to laptop and the customer is in this region this you know in Asia or um North America so that data has to be sent along uh with the uh with the support instant data in order to actually perform your search now the problem with this is that can cause data staleness it adds complexity it can com compromise security you got two different security policies on two different systems if you change the product table that has to get reflected down the vector database and so on so hopefully that's very clear that we

believe that hey don't don't push your data out of your operational database to Vector database instead bring the vector workload to your operational database okay so now we get to um you know our solution essentially um we think it's a better approach as I mentioned we want to add Vector search to your business database and so three main things that we have here we have a new Vector data type in our database in Oracle that allows you to basically store your vectors right you have new SQL syntax and functions that allow you to express that

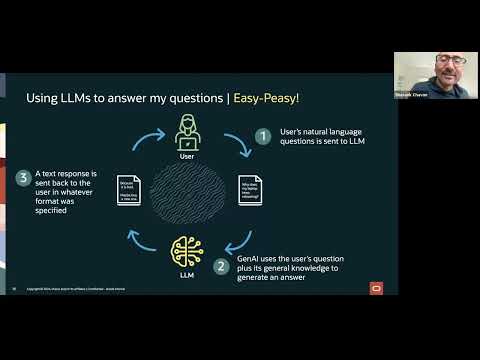

similarity search that you want to do and and you'll see some examples how easy it is to do so and then new Vector indexes to perform your search very very fast such that it is an approximate search but highly accurate no need to move and synchronize your data no need to manage multiple products and so forth okay so before I get into more details I want to spend just one more time on background and how Vector search uh is related to gener generative AI in general so with the power gener of AI you can use

Vector search to interact with business data using natural language so let's go through an example here you can use your large language model to answer your questions really easy to do here I am a user there's my large language model I ask this question right and my question is why does my laptop keep rebooting now if you ask this to the large language model it's going to use its general knowledge that was trained from the internet to try to answer this question and so what is it going to give you well it's GNA say because

it is bad maybe buy a new one right now why does it give that kind of answer I'm just this is by the way this is all just an example not real example but an example but it gives you an answer that's not specific to your question because again it wasn't trained on data that reflects your your situation your question your product you know your environment so that's an example of what why would it give me an answer like that now the issue again is those longings are trained on General Knowledge from the internet they're

based off of a frozen you know past snapshot maybe like 2 three years ago the large language mods as you guys all know can hallucinate so instead of just saying I don't know it will just give you an answer that it thinks it knows it's completely wrong and so in general they can provide very poor quality responses to your questions so we can do better with Vector search you can augment your questions your prompts with the private databased content that you have again in your operational database right where your data you know lands in um

you don't have to train your large language models on that sensitive Enterprise data that you have so let's look at an example and this of course is called rag which I think many of you have heard about with people augmented generation so let's look at this example again now you got your user saying why does my laptop keep rebooting um again that question gets embedded into a vector you perform a vector search on that and you retrieve all of that private content that relates to the question that you were asking okay so it pulls out

those incident reports related to your question but not just that we can add again all of that operational data that we have the product type the customer location all this information that we can conclude based off of The Prompt and based off of the vector search that we can combine together now and then send all of that to the large language model as a much more richer augmented prompt and the response now goes back to the user and this becomes more interesting it says Hey the issues with the firmware controlling the fan apply this operating

system update etc etc and it can base that again because of this private content that you have sitting in the Corpus in your database okay all right so now that's 15 minutes quickly of background information and now we're going to spend the rest of our time kind of talking about our solution and getting under the hood here so the the main highlights of Oracle AI Vector search are the following number one is allow you to generate Vector embeddings from unstructured data so we're going to talk about how you can generate those vectors either outside of

the database or inside of the database number two is we have a new Vector data type as I mentioned that you can store your vectors in alongside your business data in the same table right it's just another column next to your product column or your customer column um index we allow you to build approximate Vector indexes on your vector columns and as you'll note you you'll find out that if you don't have an index that's fine you're going to get very accurate responses because it visits every single Vector to do that distance computation but the

downside as you can imagine is it is very expensive distance computation is not de despite having you know Hardware resources to to optimize that fourth thing is the search perform AI Vector search on Vector columns using SQL so you know we start off with SQL as the source of how you want to go ahead and and perform semantic search very easily everybody knows SQL all database even if you're a database administrator you can perform this semantic search and and and have generative AI support in your Enterprise company in like five minutes it's that it's that

simple to do um but also we don't just support SQL you can support right your favorite language right Python and node.js whatever you want and the last thing is we integrate with Mission critical Enterprise capabilities so again Oracle database has been around for 40 50 years um it's the number one database for a reason right it's got a ton a ton of features um which people frankly customers have a hard time leaving um you know so we want to make sure that Vector search is inte into all those features all right let's get into it

so generating vectors so we offer three ways to generate vectors the first is you can pre-create those Vector embeddings you can load vectors from external files into the database directly into a vector column at however you want so however which way you generate those vectors no problem we can load them in if they're in a paret file we can load them in if they're in some Excel spreadsheet we can load them in number two you can use an external embedding service so you can make a call out an API call out from the database to

your favorite embedding model provider uh using this API that we have here so you can go to hugging face you can go to cohere you can go to open a whatever you feel like generate a vector number three is kind of the most interesting where you can use a database resonant embedding mod to generate your vectors so what that means is within the database itself we allow you to load a model think of the model bytes into the database and use this it's called Onyx open neural network um uh uh exchange I think it is

um to basically uh evaluate open source embedding models within the database so let's talk about that so first there's the external embedding provider you can just provide your credentials open a credentials and you see right here you can call it udle to embedding with your unstructured content some parameters to describe your uh credentials and there you go you got your vector now the in database embeddings here is you call this function called load Onyx model now as I mentioned these are basically open- Source models you can get them from hugging face for example you can

look in the leadership board you can find the top uh models out there you can find out which ones are open source and then you can download them in and you can load them directly into the the database okay so I gave it a name this is the the binary representing the open source embedding model and now to generate a vector it's just a new SQL function that we have so in this example select Vector embedding the name of the model and applying it to the unstructured content and that's it from this table now if

we look under the hood what is what's actually happening was I mentioned the embedding model has to first get converted into this Onyx format so we have a tool that allows you to do that now what's included in there is information like the tokenizer so we require the tokenizer because it helps us determine in fact how do we want to chunk up our data before we you know if you want to generate vectors per chunk the tokenizer helps us uh do that the third thing is now the Onyx model byes are loaded into the database

as a blob so we store this like a like a column in a table with a blob type okay now when Vector embedding gets called that blob gets retrieved from this table it's basically a dictionary table gets retrieved from the table and an onyx runtime session is created an onyx runtime station is basically just think of it as a um like you know Onyx is just like a an interpreted engine that's basically evaluating bite code to to generate your vector so it's creating that session to be able to then evaluate the the um the the

bites that you have inside of your your uh embeding moment the fifth thing now is currently we're limited to text based models because there's some security concerns with Onyx and notably this open CV Library open CV is used for image pre-processing um and so that's our current limitation right now but that's going to be removed in upcoming release okay question question y can you hear me okay yes I can hear you just fine great when you load these Onyx models two parts to the question the first part is do you uh send each record to

the embedding function one at a time and is the executable loaded in a some sort of a VM container that runs outside the main Oracle process or it is running in process great question uh so number one is you can support single single tons if you will single queries or you can support batch queries so we can send a single unstructured content we can send a a batch set of content so for example if you are creating a table insert as select so I'm going to select all my instructed content type them into Vector embedding

and insert those vectors into another table that will be done as batches um number two is in we are running everything within the database itself so it's in the database instance itself it's using all of the parallel query uh process workers that we have as part of the database it's not sitting outside of the database now that end you can do it outside of the database if you want that's you know you can run a python with uh with the Onyx runtime uh uh library and you can generate your vectors yourself separately uh we do

it ins the database as a feature so that you can say hey my data stays encrypted it stays secure all within the database you never actually leave the confines of the database aside at all and it is just one of the Alternatives as I mentioned you no you might get better faster performance by sending all of that unstructured content off to um open AI run them in a GPU generate those vectors quick you know generate those vectors quickly and then send them back in that's that's that's one solution but it has it you know it

has tradeoffs that's all it does it but the Onyx model then it is running inside the database it's just running as a UDF essentially something akin to that and you're not doing any other checks on it right so you're just taking taking that code and effectively running it through your UDF framework would be my guess that's that that's that's a good way of thinking about it's exactly right so it's just it's just we don't you know there we take the onx library as is we make some changes as I mentioned to be able to support

uh you know the the types of models that allow that is what tokenizer coming into the bottle bites and that's pretty much it and I know you mentioned that the open CB and other libraries that might come in they could be unsaved so would you run that in a sandbox container for the embedding yeah so all of this EXA good question all of this first has to go through our legal right proove that we can bring in these thirdparty libraries they all have to go through fuzzy testing security testing like all the the whole nine

um once we have approval to use any of these third party libraries then we can incorporate we we've gone through that process for the rest of the the Onyx Library as a whole okay and then for libraries that are approved do they run still as a separate process outside in the sandbox or at that point they just deemed to be safe and they can run exactly process they're deemed to be safe and they run within the within the database within the same database processes wonderful last question related to that do you have to worry about

memory management and stuff like that because some of these libraries could do a massive memory allocation do you absolutely so the memory management so basically we we hijack if you will memory management thread allocation all of that stuff is hijacked so that it runs with Oracle memory management components Oracle thread Library support so there is a little bit of trickery that happens in order to hijack that code path but that's how we make it where maybe that answers your other question that's why we make it it's safer if you will when we do this so

we have boundaries in terms of how much you can allocate right if things go beyond that boundary then it errors out great thank you yep all right okay second thing now store vectors right so we talked about this Vector data type um you'll see here we have a column called instant V has a data type Vector we make that a first class data type and you'll see that we have two attributes here SE in this case the 768 for the dimensions the number of dimensions and Float 32 for the dimension format both of these are

optional so in other words you could just say Vector without specifying anything else now the reason why we support optional is because we want the we don't want the schema to have to change in the event that you decide to say hey I want to use a different embedding model I want I don't want my application to have to change just because you decide to go to a different embedding model so that's why we we are very flexible with our data type we just say Vector we don't aot you don't have to specify the attributes

and everything will will work just fine if you specify these attributes then it's just more performant we say hey I know that my Vector is a fixed width of this size it has a every Dimension is a 32-bit float I can optimize accordingly based off for that um to insert vectors you know just you know you you this is the format that we support for a um for a textu representation of a vector uh you can select from the vector and you'll get back this textual representation of the vector internally we store the vector in

a binary representation which I think I'm going to talk about shortly um in addition to that now we also support different types of vectors so we support a binary Vector we support sparse vectors so I'll describe that very quickly here a binary vector vectors binary vectors are vectors where each Dimension is represented by a single bit zero or one and the vector itself then gets packed into arrays of of U and eight right eight Dimensions packed into a bite essentially and there are many different models that support this coher uh Jenna they all support these

binary vectors um so here you know we would just say Vector the number of Dimensions 1024 bits and binary representation lots of advantages of course you can get 32x reduction right you go from a 32-bit float to a single bit that's pretty impressive the computations are going to be really fast um as you can imagine now when you're dealing with bits it's basically bit Vector operations xor pop Talent so they're really really really really fast the most surprising thing for me is the accuracy so the accuracy is about 90% or higher of compared to float

32 accuracy so that's pretty impressive what this is from coh here cooh here says this I think they even say 97% if I'm not mistaken so pretty pretty impressive um and great compression great performance with them now sparse vectors are kind of somewhat the opposite they are very large number of Dimensions but only a few of them have nonzero Dimension values so they're very very sparse you can think of these sparse vectors being 64k 128k Dimensions but maybe you know less than a percent uh of those dimensions are actually have non-zero values and they're generated

by the sparse encoding models sped bm25 Etc now what these models are used for are primarily for document search or text search and the dimensions of those sparse vectors they map to keywords in a vocabulary so imagine you have a vocabulary of 30,000 tokens okay so essentially your vector is 30,000 dimensions and if your document contains any of those words in that vocabulary then that bit gets lit up saying yes I have this you know I I have this word I have this word and it's not just a bit so it's actually a 32-bit float

because it's a little bit more complex than that it's not just do I have it or not it's more like is the word that I have similar to the word that's in the vocabulary and how similar it is is how the the dimension is is how the dimension value is encoded so significantly faster Vector search compared to dense representation now as you can imagine um the if you have a sparse Vector float 32 30,000 a naive implementation is just going to densify it and so you're going to have 30,32 bit floats which is dumb right

like what you really want to do is compress out all of those zero dimensions and just keep the nonzero dimensions and so that's what we we do here in this example when you want to insert you say hey I have 30,000 Dimensions but these are the positions of the nonzero dimensions and these are the nonzero Dimension value themselves and this is essentially how we store it on dis as well okay so some a slide now a little bit more on under the hood of our Vector data type so number one is we store these vectors

as wobs within the database so you know in order to come we came out with this uh I should take a step back AI Vector search came out in May of this year and it was essentially designed about six months before that so all of what I'm talking about here has been in the works for about a year and so in order to be able to deliver it at that speed we have to leverage all of the underlying technologies that we have you know to begin with today so blobs has been around you know for

a long time so vectors are basically stored as blobs now blobs are inline into rows up to 8 kilobytes inline means there's no separate segment that we have to a blob that requires an indirect pointer axis to get to it in most cases that Vector is sitting inlined into your row with all of your other column values so it's a direct access to get to them now as vectors get larger and larger if they indeed get larger and larger behind above 8K then we have to have very fast ways of accessing those blobs because again

it's an indirect access the third thing is as you can imagine the vector bite format that we have the vector format just say the in the it's bite representation is very flexible and because we want to make this you know flexible for the future right so in other words it should be able supp any number of Dimensions right could be way more than 64,000 if we wanted it to uh any type of Dimension format right now you got in eights you know 32bit floats um you can also have at some point float 16 or you

and then we have binary of course already so different types of Dimension formats will be able to support and in addition to that we have various different metadata Fields now the metadata fields are used for many different reasons one could be from a performance standpoint so I can look at this Vector I can pre-process it and store some metadata associated with that pre-processed representation of the vector such that when I do a search I leverage those metadata fields to make my search faster um another way of looking at metadata is just more information about the

vector for example um what if we wanted to remember what embedding model this Vector was generated from that's a way that you can express uh more information within the vector itself another thing that we looked at surprisingly you'll find out that a columnar representation of vectors is a pretty interesting option um the reason why columnar representation could be good is just think about um all of the vectors uh excuse me all of the dimension values for a particular um Dimension are likely to be very similar to each other right they may be off by a

bit here and there but very similar so they might many of them may be positive many may be negative Etc so you can to get better compression by by leveraging the um the similarity across Dimension values if you represent your vectors in the column of representation um that's an option as I mentioned it's not something that we necessarily do but we we've have looked into this and we do support this in some cases bite level compression of vectors also support so this is another surprising thing if you put a bite level compressor like Z standard

on some of the Benchmark vectors out there they give you pretty good compression 30% compression um which is you know pretty surprising um I think generally it's not that won't be the case but uh I did I talked to the was it Colette I think is his name who created Z standard U this was about six months ago so and he had mentioned it the time that they were looking into optimizing these standard for vectors further so I thought that was interesting and quantization of vectors is also coming soon so we'll we'll talk about that

in a bit but quantization is just a way of of representing the vector in a smaller you know smaller representation for example 32-bit float into an in8 but the trade-off of course is you sacrifice on on quality doing your search all right um so now let's get to indexing vectors so as I mentioned Vector indexes are really important because they will make your search a 100 times maybe a thousand times faster and that's not no exaggeration there um while still preserving pretty pretty high accuracy in your search so we have two types of indexes and

I'm going to talk about this one at a high level first the neighbor graph index basically maps to something called an hnsw index you may have heard of this before hnsw index and it's an inmemory graph index where the vertices represent vectors and the edges represent similarity so Vector one is very similar to Vector 2 but Vector one is very dissimilar to Vector 9 now the benefits of of uh this nebor graph index are one it's very efficient it's probably the best in its class right now um it's very accurate and it's very fast the

only downside with it is it in memory only now there are some hybrids uh hybrid Solutions uh with hnsw um that uh that are applicable but again everything has has tradeoffs here um if you look at the neighbor graph index a little bit more closely or this hnsw index a little more closely it is a a hierarchy of graphs where each layer is essentially a neighbor graph as I mentioned the vertices have edges and the edges represent similarity and as you go uh from the lowest layer to the higher layers you see this decaying fraction

of vertices you have more vertices here in the lowest layer in fact you have all of the vertices in the lowest layer and as you go up the layers it's like a zoomed out picture if you will imagine you're looking at a map deep down into the bay area for example and then you're zooming out to uh California and you zoom out to United States and you zoom out to the world that's essentially what this graph looks like and so similarly when you do a search you stop you start excuse me at the topmost layer

so here's your query Vector I'm trying to find similar vectors to this query Vector so I begin my search at some random point in this layer and I find the closest Vector that this qu Vector matches to and then I dive down deep into the next layer which is deeper now it has more connections and again I find the nearest neighbor and drill down further to the next layer and again I find the nearest neighbor and drill down further to the last layer so once I'm at the lowest layer where I have all of my

vertices all my connections I now limit my search just to that neighborhood of where I landed on and it becomes a greedy algorithm at this point where there is a stopping condition that you will hit which which may not find the most precise the highest you know the most similar Vector but it is the most approximate right and the fastest way of getting to it okay so now going in a little bit deeper um what is the you know how do we actually what do our structures look like in memory so we have our memory

resonant structure so we have the graph itself and you can just think of the graph in terms of the data structure just think of it as a table where you're indexing on some vert vertex ID and the vertex ID maps to all of the neighbors all the neighbor vertex IDs for that vertex ID so a source to all of its targets um that's kind of the representation in memory for the graph and we have the vectors themselves that are laid out in memory and we have what we call our row IDs that are laid out

in memory now in Oracle a row ID is a identifier to the row in on dis so for this vector vector zero vertex ID Z here's its vector and vertex ID zero this is its row ID to get to the row on dis where that Vector resides in its table okay and this is as you can imagine it's really important because if I do my search on the graph and I say okay I I found these top five ver uh uh vectors now I need to pull the attributes for those vectors I need to get

the customer ID that represents that Vector the product ID so I got to go to the row IDs fetch the vector fetch the the attributes and bring them back out now in addition to this we have some dis resonance structures so we have what's called a row row ID viid table the row ID viid table is a mapping from the row ID to the vi ID the vertex ID okay VI ID stands for vertex ID which is the which is its value on in the graph and memory um and of course you have your base

table your base table as I mentioned is the table that has your vector column and all these other attributes in it now the reason why we need this variety of the table I'll talk about shortly is when you do when have attribute filtering so what attribute filtering means is like I have some filters on my base table so let's say for ex for the sake of argument that this is a products table and the vector column represents a a vector of the product an image let's say now I may have a filter that says where

product uh category equals toys okay so what we're going to do is we're going to run the filter first on the base table with with the product category column and say Hey I want only those rows where category equals tce great now I got let's say out of 10 rows I got five rows that match that category now I want to go to the row ID VI ID table and say for those five rows get me those five IDs the vector the the vector IDs and basically I represent that as a bit map okay just

think as a simple bit map um and now that bit map that that bit map gets used when I'm doing my search in the graph that says hey you know this vertex right here that you want to go to well the bit map says it's false so you're not allowed to look at this Vector you go look at its neighbors and so it's a filter now on the graph itself and that's how we can basically perform attribute filtering on the hssw graph by first evaluating the filters on the base table and and I'll show that

in in just a moment any questions on this I know this is a little deep so I want to make sure I address any questions here I have a a quick question uh I guess first part of that is how big are the row IDs are these like 32 64 bytes the what's the space that it takes in represent these are these are six bytes or eight bytes that's the size of these roties got it and then the second part is does the query Optimizer consider joint optimization between attribute filtering and hsw yes yes it

does yes it does so that's that's that's the beauty behind it because the optimizer play an integral integral part of the uh of the overall performance right in the plan that we generate here got it so how does it have any idea about the selectivity of the hnsw uh a prior uh say say that one more time Jes how does it know how to guess the selectivity of using the great question so the selectivity comes from the filters right so we start off with the selectivity on the filter column so let's say that selectivity is

you know 1% or let's say it's less it's very selective okay so it's very selective we might choose we might choose this pre-filtering solution where you evaluate the filters first on the base table join and then perform the search on the graph but if it's not very selective If the product you know let's say 99% of the rows are all poys right so in that scenario what we would do is do the scan on the hnsw graph first and then we would get the top 10 top 100 matches whatever it is and then evaluate the

filter on those 100 matches to reduce our set down so that we get the the right results so that's how the you you have to gather table stats first and then you want to run your your queries got it okay great that sense okay sure all right let's keep going so so we went into detail about what this neighbor graph looks like um some more detail here is Persistence of that graph so we use checkpointing to persist that hsw graph to the dis so if you look at this example here's your current instance we have

our vectors and arities in memory um now we also create this checkpoint table and what that is is every layer of this graph gets serialized and persisted into dis like that as a blob again okay so it gets persisted there and now if this instance was to go down when a new instance comes up or the same instance comes back up we can then load the the checkpoint the the checkpointed graph back into memory and we populate the vectors from the from the the base table and the r viid table so in other words a

checkpointed image doesn't contain the vectors themselves because that's just you know duplicated work the vectors are already sitting in the base table they're already persisting there we don't want the checkpointed uh image to have that so the graph comes in and then from the graph we essentially rebuild or repopulate these tables um and so that allows us to be able to very quickly bring that index back into the instance in the event of a shutdown or a crash question is this checkpoint sync with the transactional checkpoint and the log or do you if you have

to recover this instance does it sync it back up to the time of the crash from the transaction yes or this is independent yes good good question so when it comes back up okay so we we haven't gotten to transactions yet and I I'll I'll talk about that in a moment but essentially the the the short answer is yes it syncs up with any transactions that have happened since then um but this the long answer is the solution might be that it rebuilds the graph from scratch so that's the the worst case scenario the better

case scenario is is that it leverages the transactions that we've loged and then we apply them as part of our search so that we can search the graph and we search the log of changes together and get a final top K I'm going show I'm going to show you that in just a moment right here when we get to transactions um okay so uh I'll skip this in the interest of time this is just very simply we we can build an index on partitions of your table which is really important because if you have a

query with a predicate and the predicate gets you to a certain partition why scan a global um index just just stand the local index uh for your for your vector index so I'm going to skip through this this is basically describing uh our second index called an IVF index but for the in the interest of time I'm going to go ahead and skip this um we can if that question comes up later I can certainly talk about it okay so now we want to talk about searching vectors so we have a new SQL function called

Vector distance it's a very simple function it takes in two vectors and it takes in the distance metric that you want to apply so ukian Hamming Manhattan whatever it is out from it comes a 32bit float representing the distance between these two vectors so super simple here's what the query would look like so let's say I'm saying find the top 10 matching support incidents well I select from this support instance table order by Vector distance so that means a compute the vector distance for uh your your search vector and all of the vectors in that

table in that column and order those rows by the distances that you computed then fetch the first 10 rows only that's how you get your top 10 matches okay so really simple super easy to use um if we look at this now in terms of what the plan looks like so now let's look at that little bit more deeper so again before I get to that same query Vector distance fet for 10 rows only so if you look at the query plan okay I don't know if any of you seen an oracle query Plan before

this might look a little bit complicated but if you look at this plan it's got five operators in here and let's start with the lowest operator the vector index hnsw scan this operator is responsible for doing the scan on the hnsw graph and out from it comes row IDs okay the the row those that you fetched from this index scan and what you're doing here is you're fetching the properties from the base table so you see here I want to say select ID that means ID is a column in this table alongside your vector column

so you're going to fetch out that ID and you're also sorting all of the vectors by distance and you stop when you get to 10 matches that's what this filter is that's what the plan shows okay now the reality is all of what I just said happens here in the vector index row so so in other words you do your you perform your Graph Search you get the top 10 matches and just for those top 10 matches you're going to go to disc to get the ID column and everything else flows up as a pass

through to get up there okay hopefully that makes sense I'm G to show you slightly more complicated uh graph in just a moment now here's one where you have a filter find a top 10 matching support instance that were filed within the last seven days so same thing except now you see this wear Clause where incident date is greater than CIS date minus 7 order by Vector distance fch first 10 rows only okay now it gets interesting the optimizer picks the best access plan based on the filter selectivity so this goes to your question Jette

if it's very selective highly selective we go through what's called a pre-filter time which is what I described earlier you apply the filter on the base table first then you do the vector search if it's low let's go low first low selectivity then applies a post filter plan in the post filter plan you're going to evaluate the graph uh the search on the graph first and then you apply filters after for the passing ones if it's medium selectivity we do something what's called INF filter and it's really it's very similar to a post filter and

that we're evaluating the filters as we are scanning the graph okay not after we've done the graph and gotten a top 10 result as we're doing it so let's go through an example of what that looks like here so for that same query okay the same query here with the wear Clause everything else is the same we get a slightly more complicated plan and this is a prefilter plan so let's look at what that means first so pre-filter plan we have the same operator Vector index hsw scan but it says prefilter now under that operator

is a child this child view here is doing the filter evaluation and that filter evaluation is a join between the table the support instance table and if you remember that row ID viid table I have okay so why it's doing this the first thing is the filters you see the filters here instant date greater than CIS date at operator 8 so it does a table scan on that support instance table all of those passing rows are joined with the row ID VI ID table so out from it comes a bit map that says these are

the vids these are the vert IDs that you can only look at you cannot look at any other vertex IDs when you're performing the graph scan got it so then we apply the search on the graph scan and out from it comes the top K runs okay any questions on that jesan team I think that makes sense so in the hnsw index for each node you keep you record the viid yes for each each Vector we record the vid for correct got it do you have anything else besides the vector data and the vi in

the nodes in the graph index in the in the graph index we do um and it's a it's a feature that's coming out where you can you can maintain additional column values in there as well so the benefit there now is you can imagine I don't need to do this filter on the base table I can just go on makes sense yeah that makes sense right and that that is what Vector databases can only do today right Vector databases are basically a denormalized representation of your steem with those columns alongside now but as you know

we know that's not how normal you know normally schemas are normalized if you go an existing system today you're G to have a separate table for everything so we need to make sure it works for that for that type of schema thank you okay so that's prefilter now I'm going to show you INF filter really quickly so INF filter is a much more condensed plan where you also have again the vector index hssw row source and it says INF filter here and in that view all you have is this table access by index row ID

so what that means is as I am scanning I'm going through the graph every Vector I get to that's a candidate I I now get its row ID I look up that row ID I go to the table and then I apply this filter and out from it comes a true or false right if it's true great I can cons I can keep that Vector as in my candidat list if it's false I skip it and I move on I don't I don't consider it for so that's what we call an infil plan it's very

very efficient um based off of the selectivity right in that Medium selectivity range all right so um lastly I think here I think this is lastly here is joins similarity search over joins so again here's find top 10 matching support instance for a laptop reported by customers in guadalahara gets a little bit more interesting but again it's your standard query you got to join between these two tables I have my wear cloths between those two tables and I keep my order by V distance fetch first 10 rows only so you can see how how simple

and clean it is you know it's if you no SQL you write SQL only thing you need to do is add this order by Vector distance to be able to incorporate Vector search into your into your queries um this point just simply says the central capability as Enterprise data is normalized anybody can kind of learn this now another differentiator that we have here is you can specify what we call a Target accuracy so what that means is I can say when I'm doing the search how accurate of a search do I want this to be

when I use the index and the accuracy can be specified in a percent for example I want 95% that means out of the 100 rows that I get using the index 95 of them should be the same as if you were to do an exact search without an index that's what accuracy means okay so if I say 5% that means out of the 100 rows I should at least have five of them that in that golden set if I didn't use the index now what other Solutions other uh Vector databases do is they they expose

the lowlevel parameters in other words for each of those indexes I talked about this hsw index and I didn't talk about the IVF index but there's something called IVF index they have parameters that can control how greedy of a search you should do um and you have to know how to manipulate them how to change them so we we kind of control that for you we you just specify the percentage and then underneath it we map it to those parameters and do guarantee do you guarantee that percentage would be met or is it best effort

great question that's why it says Target so we say Target accuracy because we cannot guarantee it right but but what we do uh Jes is and I don't know if I mentioned it here um let me go through this and I and I'll answer your question just a moment the target accuracy percentages are mapped to the lowlevel index parameters I mentioned that so for example if I'm dealing with an IVF index an IVF index basically clusters your vectors into multiple clusters and then the index only visits certain clusters in order to approximate it search so

if I use an IVF index with 90% accuracy that might say hey visit 31 clusters okay out of your thousand clusters visit 31 of them um but if I had like a 5% accuracy it might say only visit two of those clusters when you're doing your search now what we do is this we map we have a table as I mentioned those percentages are mapped to parameters 10% 20% 30% and that table gets bootstrapped on index creation so when that index gets created we at that point based off of the vectors that we've seen we

decide what how to map 10% to en probes 20% to en probes and so on so that when you say 90% accuracy we know how aggressive or non-aggressive we should be now the table can morph over time as we see queries or as new vectors gets inserted and so that speaks to what you're saying Jes is at some point point if you keep adding more vectors and more vectors and maybe there's some skew in there the mapping could be completely wrong right and so we have you know want to periodically revisit this table and in

the background evaluate how accurate is it still and if it's no longer accurate we have a couple of options option one is we rebuild the index right option two is we just simply change the the mapping the table when saying n31 clusters for 90% we might say visit 50 clusters if you really want to get 90% accuracy so that's the that's the overall design now the implementation is where you know where it gets interesting but that's the overall idea behind the the the accuracy solution any questions okay I am Jes we have a up to

what time is that you want I'm we should wrap up in about like 5 10 minutes 5 10 minutes okay perfect okay so um this is the integrate section um bottom line is okay so actually we're getting into transactions so let me actually talk about transactions that's quite interesting and let's talk about for hsw supp okay so the way transactions work is we Journal DML changes so as you build your index once the index is built as new vectors come in we Journal those changes keeping the hnsw graph as a readon structure and by the

way what I'm describing to you right now is what's in product today um coming very very soon it's going to be a different solution so I'll talk about that just a bit so that's what we do is we Journal those changes into a separate table think of it as a as a faired journal table we have an inmemory private Journal that pracs those changes for active transactions but then we flush those changes to a shared Journal table when that transaction is committed now the queries will cons consult those journals along with the graph in order

to give us transactionally consistent results and I'm going to show you this uh example uh right here so let's look at this suppose we have our hnsw index it's built right we we created our index done now we have this private inmemory journal and we have an Onis shared Journal table so now as new vectors so let's say remember new vectors are coming in and I mentioned they get journaled and they get flushed into the shared Journal now when you do a search what you're G to First do is you're going to scan for delet

anything that was deleted if there is a a transaction in in an active transaction that deleted some row we need to know what that is similarly if it was uh in the SP Journal we need to know what it is so based on those delet deleted rows we get a set of deleted vertex IDs right now we can apply the search on our hnsw graph skipping those vectors those rows that were deleted right and from that list we get a top K let's say top 10 okay we get top 10 vectors coming out from this

one now similarly we need to scan for new inserts is both the private journal and the shared Journal so we again do those scans and we get a topk from that and now finally we just merge the two top 10 from here top 10 from here 20 coming in final top 10 out right so this is very simple very straightforward it works on our a a rack cluster which is a cluster of nodes because again we're just journaling the changes into table so the tradeoff is slower performance right after some period of time the vectors

pile up in these journals and so you're spending more of your time doing this scan on the journal than you are on the hsw ground and so that's where I don't know if I have a slide on it I probably do I I'll get to that slide in just a bit in terms of what's coming that's where we have an A a better solution Where You Are incrementally refreshing this graph based off of the changes in the journal so that we don't let changes pile up in the journal after some period of time those changes

will get reflected into this graph and then all the queries go on to this new snapshot of the graph okay does that make sense any questions on on transactional support all good okay great all right so now I'm going to skip through a bunch of stuff so that we can get through um this this slide deck here so Advanced features um main thing here that I want to convey is that the entire generative AI pipeline is supported in the database itself and so that means you know from your data sources being able to load the

documents convert documents from you know let's say whatever Json to text or PDF to text uh Punk those documents into pieces uh separately vectorize each of those chunks with embedding models we support within the database perform the search um perform a rag with large language models all of this is basically supported uh natively within the database and we have the apis that allows you to call out to different you know large language model providers or embedding model providers and so forth um many of you may be familiar with linkchain or llama index these are just

third-party Frameworks that allows you to very quickly build these generative AI applications and uh and we're integrated with those as well um I'm going to quickly go to this slide that just talks about hybrid Vector indexes this is an interesting index where we support a hybrid of both the text index and a vector index and the reason why you want this is because it just gives you even more accuracy and the way it works is you start with your data store you're pulling in files that you want to index and you're going to have a

separate you have your plain text that you go through a tokenizer and you build a separate text index those of you familiar with text index basically there's a a posting list for tokens to documents that's your text index and similarly you take that plain text and you throw it through a vectorizer and you generate your vector index so we have both the text index and a vector index and we call that a hybrid index and when you do a search the search can either go through just the text index just the vector index or a

hybrid search where it goes through both of them and then scores the results accordingly by merging those results together so I won't go into more details behind that I'll just leave it at that um coming soon just to kind of get through all of this um I mentioned incremental refreshes of hnsw graph supporting more models um Distributing the graph across the cluster of nodes imagine a custom distance function so you can write a function in JavaScript for example and then evaluate distance between vectors using that custom function we talked about the included columns and Vector

indexes and what about gpus so I don't think I have enough time to kind of talk about that um this was what going to be my next section about this but we do have this early evaluation PC where we are building the vector index on a GPU uh using nvidia's uh Rapids Library where they can build something called the camra index which is very similar to an hnsw graph but in that kagra index we are basically enabling ourselves to offload the index creation to a GPU and then reincorporate that index back into uh CPU memory

for hnsw graph and all this is available online by the way because we gave a presentation of this recently at at a conference um so because we don't have enough time to go through it and I apologize um I'm just going to skip through this and kind of conclude here uh these are some performance numbers which I can skip through unless you want to talk about it um lastly how to get started so you can just do a search on the AI Vector search you'll find all the information I kind of talked about um on

this web page already um you can also go to these QR codes if you're interested in trying this out for yourself for free um so this is you know really cool you can go to live Labs or you can download uh a container for Oracle database and you can evaluate it for free yourself um and that I'll end jigesh so I want to make sure I give enough time for questions here wonderful that's sounds great we have time for a couple questions so let me just see if the room has some questions yep go for

it you want to come up over here and speak up can you hear me from there I can kind yeah I think I can hear you but if you want come up go for so first of all thank you for the amazing talk I have a very high level question I feel like reg and like AI Vector search is very popular with data companies these days days I know datab braks also has one what is the differentiation for Oracle is it like tailoring to build features for your specific clients that have been with you for

a long time is it like adding a lot of like more generalized features and trying to attract like newer startups like making it easier to have a geni pipeline what's the focus here great great that's a great question so the the main thing to be the main thing is the value that we add to customers that are running Oracle database today where all of their business data resides right their data is already sitting in Oracle and so the value ad that we have is allowing you to perform effective search on that data right find that

search your business data we Oracle is we we consider what we call conversed database meaning you want to do a spatial workload a graph workload a text workload you can do all of that on one database on the same data that's the main thing the other things though are our differentiat like we talked about so I mentioned this target accuracy databases don't I don't know if anybody that has something like that to kind of simplify things to make it more easy to perform your search um doing more complex queries like join um most vect databases

I think all of them require you to have those included columns in a normalized represent excuse me denormalized representation so that's not how data is normally stored you normally have Separate Tables for your data for your scha and so to be able to join across them using SQL in a very easy way where the optimizer chooses the most efficient plan that is a major differential I would say you know compared to other databases and the third thing I would say is oh sorry go ahead I'll as about the third thing is simply we do support

generating those vectors within the database itself which is a a novelty so a lot of the customers whom we talk to they're in these air gapped environments the very secure bank think Bank uh banks for example Banks cannot risk sending their data outside of the confines of the bank okay so they're in these air gapped environments everything has to run on their servers so in that scenario uh being able to generate vectors and perform that similar search directly within the database is a is a pretty cool feature great we'll take one more question so you're

Co for that yeah so so I just have a question one of the main advantages of having like this vector search functionality within the Oracle databases that allows you to create the vectors from like the data but you mentioned mostly using like unstructured data what if I had like a table that there was a specific way I wanted to transform like The Columns of the table into like a string that could be vectorized does yes is that pipeline like supported that's a a great question so um that is indeed what a lot of our customers

are kind of asking us they're saying Hey how do I do semantic search on relational data now the the way that this would work there's many things you can you can take those columns and basically build a document out of them and use a standard off-the-shelf embedding model if you did that you'll find that your accuracy is not going to be that grain okay so that's because those models aren't trained on relational data they're trained on more you know textual data um now to your point uh is there a pipeline that supports taking column values

and encoding them into vectors right so we don't have we don't have that today as a feature that you can do but we are building something like that out as we speak right now so the idea is you know let's say you have a column that as in in an an enumeration of colors for example for vehicles and it only has like 10 colors so now you can imagine a dimension value that only has you know 10 possible values in it or 10 bits in it and you can build a vector from all of your

column values based off those dimensions and now you can perform search against it it's a little more complicated that but that's that's we are we are looking into that that's the idea yeah and sort of kind of related question like a main advantage of having like another use case for having a lot of uh embedding vectors is to use them for like lat Downstream machine learning task such as like classification and clustering you see an advantage of Oracle adding like those features on the like in-house Vector information absolutely and it's it's it's actually already implemented

that's a great great question so a lot of the all of the machine learning models and functionality that we support in the database now supports Vector types as well wonderful let's give Shashank another round of applause hey and Shan thank you so much uh if you could send me the slides that would be great and we'll post that and the video of the website so uh thank you awesome awesome man thank you so much thank you guys

Related Videos

1:14:20

Evolution of the Storage Engine for Spanne...

Jignesh Patel

2,255 views

43:31

What is a vector database? Why are they cr...

Pinecone

25,952 views

26:35

Use AI Vector Search to Build GenAI Apps w...

Oracle

5,636 views

1:00:54

Oracle's talk on JSON Relational duality

Jignesh Patel

328 views

55:03

ChatGPT & LLM Ethics: History, Architectur...

Understanding Machine Learning

10 views

48:13

A Fun & Absurd Introduction to Vector Data...

GOTO Conferences

15,517 views

48:21

Developer Coaching - Build Smarter Apps wi...

Oracle Developers

1,092 views

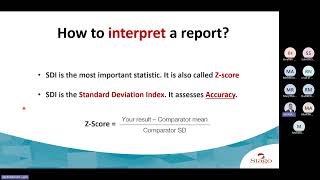

57:21

External Quality Assessment - Why & How?

Laith Abudawod

18 views

56:59

Jiahui Liu: The Black-Box Simulation Barri...

CMU Cylab Crypto Seminar

62 views

54:56

Live Webinar #9: Event Stream Processing w...

RisingWave

143 views

1:01:26

Google Tag Manager Tutorial for Beginners ...

Analytics Mania - Google Analytics & Tag Manager

255,154 views

46:34

Vector Search and Generative AI for Oracle...

Oracle Developers

3,519 views

1:03:16

Amazon Redshift: re-innovating cloud analy...

Jignesh Patel

333 views

1:22:18

Building Production RAG Over Complex Docum...

Databricks

17,053 views

56:49

Fast and Precise Business and Semantic Dat...

Oracle Developers

8,091 views

1:10:47

The Rise of Data Streaming Platforms

Jignesh Patel

536 views

42:35

Hybrid Search RAG With Langchain And Pinec...

Krish Naik

38,381 views

43:02

SQL Extension for Continuous Processiong i...

Jignesh Patel

280 views

1:00:10

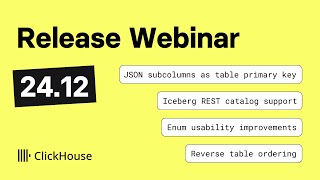

ClickHouse v24.12 Release Webinar

ClickHouse

907 views

55:21

The Data Mix - Season 3 Episode 2 - Rosann...

The Data Mix

6,585 views