OpenAI’s Deep Research Team on Why Reinforcement Learning is the Future for AI Agents


Sequoia Capital

OpenAI’s Isa Fulford and Josh Tobin discuss how the company’s newest agent, Deep Research, represent...

Video Transcript:

A lesson that I've seen people learn over and over again in this field is that we think we can do things that are smarter than what the models do by writing it ourselves, but as the field progresses, the models come up with better solutions to things than humans do. Probably the number one lesson of machine learning is that you get what you optimize for. So if you're able to set up the system such that you can optimize directly for the outcome that you're looking for, the results are going to be much, much better than if you try to glue together models that are not optimized end to end for the tasks that you're trying to have them do. So my long-term guidance is that reinforcement learning tuning on top of models is probably going to be a critical part of how the most powerful agents get built.

[Music]

We're excited to welcome Isa Fulford and Josh Tobin, who lead the Deep Research product at OpenAI. Deep Research launched three weeks ago and has quickly become a hit product, used by many tech luminaries like the Collisons for everything from industry analysis to medical research to birthday party planning. Deep Research was trained using end-to-end reinforcement learning on hard browsing and reasoning tasks, and it is the second product in a series of agent launches from OpenAI, the first being Operator. We talked to Isa and Josh about everything from Deep Research's use cases, to how the technology works under the hood, to what we should expect in future agent launches from OpenAI. Isa and Josh, welcome to the show.

Thank you.

Thank you so much for joining us.

Excited to be here. Thank you for having us.

So maybe let's start with: what is Deep Research? Tell us about the origin story and what this product is doing.

Deep Research is an agent that is able to search many online websites, and it can create very comprehensive reports. It can do tasks that would take humans many hours to complete. It's in ChatGPT, and it takes about 5 to 30 minutes to answer you, so it's able to do much more in-depth research and answer your questions with much more detail and more specific sources than a regular ChatGPT response would. It's one of the first agents that we've released. We released Operator pretty recently as well, so Deep Research is the second agent, and we'll release many more in the future.

What's the origin story behind Deep Research? When did you choose to do this, what was the inspiration, and how many people work on it? What did it take to bring this to fruition?

Good question.

This is before my time.

Yeah. So I think maybe around a year ago, we were seeing a lot of success internally with this new reasoning paradigm, training models to think before responding. We were focusing a lot on math and science domains, but I think the other thing that this new reasoning-model regime unlocks is the ability to do longer-horizon tasks that involve agentic abilities. And we thought: a lot of people do tasks that require a lot of online research or a lot of external context, that involve a lot of reasoning and discriminating between sources, and you have to be quite creative to do those kinds of things. I think we finally had models, or a way of training models, that would allow us to tackle some of those tasks. So we decided to start by training models to do browsing tasks, using the same methods that we used to train reasoning models, but on more real-world tasks.
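The way a browsing agent of this kind operates, as the team describes it later in the conversation (think about the request, search, read, decide what to look up next, then write a report with citations), can be sketched as a simple loop. This is a minimal illustration under stated assumptions, not OpenAI's implementation: `FAKE_WEB`, `search`, `State`, `scripted_policy`, and `run_agent` are all hypothetical names, the "web" is an offline dictionary, and the policy is a hand-written stub standing in for the decisions Deep Research learns through end-to-end training.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical offline "web" (URL -> page text) standing in for a live browsing tool.
FAKE_WEB = {
    "https://example.com/rumors": "rumors say the next model will launch this fall according to the automaker",
    "https://example.com/history": "the automaker tends to refresh each model every four years",
    "https://example.com/recipes": "banana bread needs very ripe bananas",
}


def search(query: str) -> list[str]:
    """Hypothetical search tool: URLs whose text contains every word of the query."""
    terms = set(query.lower().split())
    return [url for url, text in FAKE_WEB.items() if terms <= set(text.split())]


@dataclass
class State:
    question: str
    queries: list[str] = field(default_factory=list)
    notes: dict[str, str] = field(default_factory=dict)  # url -> text read so far


# A policy looks at the state and returns the next query, or None to stop and write.
Policy = Callable[[State], Optional[str]]


def scripted_policy(state: State) -> Optional[str]:
    """Hand-written stand-in (an assumption) for decisions a trained model would make."""
    if not state.queries:
        return "next launch"
    read_so_far = " ".join(state.notes.values())
    # React to what was read: a mention of the automaker prompts a follow-up search.
    if "automaker" in read_so_far and "automaker refresh" not in state.queries:
        return "automaker refresh"
    return None


def run_agent(question: str, policy: Policy, max_steps: int = 5) -> str:
    state = State(question)
    for _ in range(max_steps):  # step budget, loosely like a time limit on research
        query = policy(state)
        if query is None:  # the policy judges it has enough to answer
            break
        state.queries.append(query)
        for url in search(query):
            if url not in state.notes:  # read each page only once
                state.notes[url] = FAKE_WEB[url]
    # Minimal "report": one line per source read, each citing the URL it came from.
    lines = [f"Q: {state.question}"]
    lines += [f"- {text} [{url}]" for url, text in state.notes.items()]
    return "\n".join(lines)


print(run_agent("When will the next model come out?", scripted_policy))
```

The point of the sketch is where the `policy` sits: everything outside it is plumbing, and the interesting behavior (choosing follow-up searches based on what was just read) lives in that one function. In a hand-built pipeline a human writes it; in the approach discussed here, it is what end-to-end training optimizes.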

Was it your idea? And Josh, how did you get involved?

At first it was me and Yash Patil, who is at OpenAI working on a similar project that will be released at some point, which we're very excited about. We built the original version, and then Thomas Dimson joined too. He's one of those people who is just an amazing engineer: he'll dive into anything and get loads of things done. So it was very fun.

And I joined more recently. I rejoined OpenAI about six months ago from my startup. I was at OpenAI in the early days, and when I rejoined I was looking around at the projects and got very interested in some of our agentic efforts, including this one, and got involved through that.

Amazing. Well, tell us a little about who you built it for.

It's really for anyone who does knowledge work as part of their day-to-day job, or really as part of their life. We're seeing a lot of the usage come from people using it for work, doing research as part of their jobs: understanding markets, companies, real estate, a lot of scientific research. We've seen a lot of medical examples as well. And one of the things we're really excited about is that this style of "I just need to go out and spend many hours doing a bunch of web searches and collating a bunch of information" is not just a work thing; it's also useful for shopping and travel. So we're excited for the Plus launch, so that more people will be able to try Deep Research, and maybe we'll see some new use cases as well.

It's definitely one of the products I've used the most over the last couple of weeks. It's been amazing.

Using it for work?

For work, definitely, and also for fun.

What are you using it for?

Oh, for me? Oh my goodness. So I was thinking about buying a new car, and I was trying to figure out when the next model was going to be released. There are all these speculative blog posts, there are patents from the manufacturer, and so I asked Deep Research: can you break down all the gossip about this car, and then all of the facts about what this automaker has done before? It put together an amazing report that told me to maybe wait a couple of months, because it should come out this year, likely in the next few months.

Yeah, one of the things that's really cool about it is that it's not just for going broad and gathering all of the information about a topic; it's also really good at finding very obscure, weird facts on the internet. If you have something very specific you want to know that might not turn up on the first page of search results, it's good at that kind of thing too.

What are some of the surprising use cases that you've seen?

I think the thing I've been most surprised by is how many people are using it for coding, which wasn't really a use case I'd considered. But I've seen a lot of people on Twitter, and in the various places where we get feedback, using it for coding and code search, and also for finding the latest documentation on a certain package and helping them write a script. I'm kind of embarrassed that we didn't think of that as a use case, because for ChatGPT users it seems so obvious, but it's impressive how well it works.

How do you think the balance of business versus individual use cases will evolve over time? You mentioned the Plus launch that's happening. In a year's time, or two years' time, would you guess this is mostly a business tool or mostly a consumer tool?

Hopefully both. I think it's a pretty general capability, and it's something that we do both at work and in our personal lives, so I'm excited about both.

I think the magic of it is that it just saves people a lot of time. If there's something that might have taken you hours, or in some cases we've heard days, people can just put it in here and get 90% of what they would have come up with on their own. I tend to think there are more tasks like that in business than in personal life, but for sure it's going to be part of people's lives in both.

It's really become the majority of my ChatGPT usage; I just always pick Deep Research over the normal mode. So what are you seeing in terms of consumer use cases, and what are you excited about?

I think a lot of shopping and travel recommendations. I've personally used the model a lot; I've been using it for months to do these kinds of things. We were in Japan for the launch of Deep Research, so it was very helpful in finding restaurants with very specific requirements, and finding things that I wouldn't necessarily have found.

Yeah, and I've found it's the kind of thing where, if you're shopping for something expensive, or you're planning a special trip, something you want to spend a lot of time thinking about, I might go and spend hours and hours trying to read everything on the internet about this one product I'm interested in buying, scouring all the reviews and the forums and so on. Deep Research can put together something like that very quickly, so it's really useful for that kind of thing.

The model is also very good at instruction following. So if you have a query with many different parts or many different questions, say you want the information about the product, but you also want comparisons to all other products, and you also want information from reviews on Reddit or something like that, you can give loads of different requirements and it will do all of them for you.

Yeah. Another tip is to just ask it to format the answer in a table. It will usually do that anyway, but it's really helpful to have a table with a bunch of citations for all the categories of things that you want to research.

There are also some features that hopefully we'll get into the product at some point. The underlying model is able to embed images, so it can find images of the products. And, this is not a consumer use case, but it's able to create graphs as well and then embed those in its response, so hopefully that will come to ChatGPT soon too.

A nerdy consumer use case.

Yeah. And speaking of nerdy consumer use cases, personalized education is also a really interesting one. If there's a topic that you've been meaning to learn about, if you need to brush up on your biology, or you want to learn about some world event, it's really good at that. You put in all the information about what you feel you don't understand and what aspects you want it to research, and it'll put together a nice report for you.

One of my friends is considering starting a CPG company, and he's been using it so much: to find similar products, to see if specific names or domains are already taken, for market sizing, all of these different things. He'll share the reports with me and I'll read them, so it's been pretty fun to see.

Another fun use case is that it's really good at finding a single obscure fact on the internet. If there's an obscure TV show, say, and you want to find one particular episode of it, it'll go deep and find the one reference to it on the web.

Oh yeah.

my my brother's friend's dad had this very specific fact um it was about some Austrian General who was in power during a certain a death of someone during a battle like a very Niche question and apparently chat gbt had previously answered it wrong and he was very sure that it was wrong so he went to the public library and found a record and found that it was wrong and so then um deep research was able to get it right so we sent it to him and he was he was excited um what is the rough

mental model for you know what deep research is excellent at today and uh you know where should people be using the O Series of models where should where should they be using deep research what deep research really excels at is if you have a sort of detailed description of what you want and in order to get the best possible answer requires reading a lot of the internet um if you have kind of like more of a a vag question um it'll help you kind of clarify what you want but it's I mean it's it's really

at its best when there's like a specific set of information that you're looking for and I think it's it's very good at synthesizing information at encounters it's very good at finding um specific like hard to find information um but it's maybe less and it can make kind of some new insights I guess from what it from what it encounters but I don't think it's NE it's not making new scientific discoveries yet um and then I think using the O Series model for for me if I'm asking for something to do with coding usually it doesn't

require knowledge outside of what the model already knows from it like pre-training so you would usually use 01 Pro or o1 for coding or3 mini high and so deep research are a great example of where some of the new product directions for open AI are going I'm curious how to the extent you can share how does it work the model that powers deep research um is a fine-tune version of 03 which is our most advanced um reasoning model and we specifically trained it on um hard browsing tasks that we collected as well as um reason

other reasoning tasks and so it also has access to a browsing tool and python tool so through training um end to end on those tasks it learned like strategies to to solve them um and the resulting resulting models good at online search and Analysis yeah and like intuitively the way you can think about it is um you make this sort of this request ideally a detailed request about what you want the model thinks hard about that um it searches for information it pulls that information and it reads it um it understands how it relates to

that request and then decides um what to search for next in order to get kind of closer to the final answer that you want um and it's trained to do a good job of pulling together um all of those all that information into a nice tidy report um with citations that point back the original information that I found yeah I think what's new about deep research as an agent at capability is that because we have the ability to train end to end there are a lot of things that uh that you have to do in

the process of doing research that you couldn't really predict beforehand so I don't think it's possible to write some kind of language model program or script that would be as flexible as what the model's able to learn through training where where it's actually reacting to live web information and based on something it sees it has to make it change its strategy and um things like that so we actually see it doing pretty creative searches um you you can read the The Chain of Thought summary and I'm sure you can see sometimes it it's very um

very smart about how it how it comes up with the next thing to look for so John Carlson had a tweet that went somewhat viral you know how much of the magic of deep research is you know real time access to web content and how much of the magic is in kind of Chain of Thought uh can can you maybe shed some light on that um I think it's definitely a combination I think you can see that because there are other sear products that don't um necessarily they weren't trained ENT and so um won't be

as flexible in responding to um you responding to information and accounts won't be as creative about how to solve specific problems because they weren't specifically trained for that purpose um so it's definitely a combination I mean it's a fine tun version of 03 O3 is a very smart and Powerful Model A lot of the analysis capability um is also from the underlying 03 model training um but so I think it's definitely a combination before open AI um was working at a startup and uh we were uh dabbling and building agents um kind of the way

that I see most people describe building agents on on uh on the internet um which is essentially you know you uh you construct this graph of operations and some of the nodes in that graph are language models um and so you can the language model can decide what to do next but the overarching logic of the you know sequence of steps that H uh that happen is defined by a human and um what we found is that it's really it's like powerful way of building things to get quickly to a prototype but um it falls

down pretty quickly in the real world because it's very hard to anticipate all the scenarios um that the model might face and think about all the different branches of the path that you might want to take um in addition to that the um models often are not the best decision makers um at nodes and that graph because they weren't trained to do to make those decisions they were trained to do things that look similar to that um and so I think the the uh the the thing that's really powerful about this model um is that

it's trained directly end to end to solve the kinds of tasks that uh that users are using it to solve so you don't have to set up a graph or make those node like decisions in the back on the architecture on the back end it's all driven by the model itself yeah can you say more about this because you know it seems like that's one of the very opinionated decisions that you've made and clearly it's worked um there's so many companies that are building on your API um kind of prompting uh to you know to

you know solve specific tasks for specific users do you think all a lot of those applications would be better served by kind of having you know trained models end to end for their specific workflows I think if you have a very specific workflow that is quite predictable it makes a lot of sense to do something um like just described but if you have something that um has a lot of edge cases or um it needs to be be quite flexible then I think something similar to deep research is probably a better approach yeah I I

think like the guidance I give people is um the the one thing that you don't want to bake into the model is like kind of hard and fast rules um like if you have you know a database that you don't want the model to touch or something like that it's it's better to encode that in in human written logic but I think it's kind of like a a lesson that I've seen people learn over and over again in this field is like um you know we we think that we can do things that are smarter

than what the models do by writing it ourselves but uh in reality like usually the mo like as the field progresses the model come up with um better solutions to things than humans do and um and uh also like you know um the like probably like number one lesson of machine learning is like you get what you optimize for and so um if you if you're able to set up the system such that you can optimize directly for the outcome that you're looking for um the results are going to be much much better than if

you sort of try to glue together models that are not optimized end to end for for the the tasks that you're trying to have them do so my like like long-term guidance is that um you know I think like reinforcement learning um tuning on top of models is probably going to be a critical part of how the most powerful agents get built what were the biggest technical challenges along the way to to making this work I well I mean maybe I can say as like an observer um rather than someone who was involved in this

from the beginning but it seems like kind of one of the uh the things that um that Isa and the rest of the team worked really really hard on and was kind of like one of the hidden keys to success was like um making really high quality data sets um it's uh you know another one of those like age-old lessons in machine learning that people keep relearning but the the quality of the data that you put into the model is is probably the biggest determining factor in the quality of the model that you get on

the other side and then have someone like um Edward Edward son who's other um person who works on the project who just any data set he will optimize so that's a secret to success find your Edward yes great great machine learning uh uh model training how do you make sure that it's right yeah so that's obviously a core part of this model and product is that we want it to be users to be able to trust the outputs so part of that is we have um citations and so um users are able to see where

the model is um citing information from and we during training that's something that we actually like try and make sure is um correct but it's still possible for the model to make mistakes or hallucinate or trust a source that maybe isn't the most um trust worthy source of information so that's definitely an active area where we're want to continue improving the model how should we think about this together with you know 03 and operator and other different releases like does this use operator do do these all build on top of each other or are they

all kind of a series of different applications of o03 uh today these are pretty disconnected um but you can kind of um you can imagine kind of where we're going with this uh right which is like um the the ultimate agent that um people will have access to at some point in the future should be able to do um you know not just web search or using a computer or any of the other types of actions that you'd want like kind of a a human assistant to do but should be able to fuse all these

things in a more natural way any other design decisions that you know you've taken that are maybe not obvious at first glance I think one of them is the the clarification flow so if you've used deep research the model will ask you questions before starting its research and usually chat gbt maybe will ask you a question at the end of its response but it usually doesn't have such um uh that kind of behavior upfront um and that was intentional because um you will get the best response from the research model if the prompt is very

well specified and detailed and think that it's not the natural user Behavior to give all of the information in um the first prompt so we wanted to make sure that if you're going to wait five minutes 30 minutes that your response is as detailed and satisfactory so um we added this additional step to make sure that the user provides all the detail that we would need and I've actually seen a bunch of of people on Twitter are saying that they have this flow or that they will talk to 01 or 01 Pro or to help

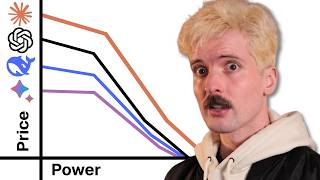

um make their prompt more detailed and then once they're happy with the prompt then they'll send it to deep research which is interesting um so people are finding their own own workflow so how to use this so there's been three different deep research products launched in the last few months tell us a little about what makes you guys special and how we should think about it and they're all called Deep research right they're research yeah not a lot of naming creativity in this field um I I think people should uh should try all them for

themselves and get a feel I think uh I think the the difference in like quality um I think they they all have pros and cons but I think the the difference will be clear um but like what that comes down to is just the way that this model was built um and the the um sort of the effort that went into um constructing the data sets and then the the engine that we have with the O Series mod models um which allows us to just um optimize models uh to make things that are like really

smart and really high quality we had the 01 team on the podcast last year and we were joking that open I is not that good at naming things I will say this is your best named product deep researches at least it describes what it does I guess yeah so I'm curious to hear a little about where you want to go from here you have deep research today what do you think it looks like a year from now and what maybe are complimentary things you want to build along the way well excited to expand the data

sources that the model has access to we've trained a model that's generally very good at browsing public information but um it should also be able to to search private data as well and then I think just pushing the capabilities um further so it could be better at browsing it could be better at analysis and then and then thinking about how this fits into our agent road map more broadly um like I think the the recipe here is um something that's going to scale to a pretty wide range of use cases um things that are uh

can surprise people how well they work um but this idea of you take a a state-of-the-art reasoning model you give it access to the same tools um that that humans can use to uh to do their jobs or to go about their daily lives and then you optimize directly for the kinds of outcomes that um that you're looking that uh you want the agent to be able to do um that recipe there's like really nothing stopping that recipe from scaling to more and more complex tasks um so I feel like yeah AGI is like an

operational problem now um and uh I think yeah um a lot of things to come in that general formula I'm so Sam had a pretty striking quote of deep research will take over a single DIN percentage of all economically viable tasks valuable tasks in the world how should we think about that I think of it as like um it's deep research is not capable of uh doing all of what you do um but it is capable of saving you like hours or sometimes in some cases days at a time um and so I I think

like uh what we're hopefully relatively close to is um deep research in the agents that we build next and the Agents that we build on top of it um giving you you know 1 5 10 25% of your time back depending on the type of work that you do I mean I think you've already automated 80% of what I do so it's definitely on the higher end for me we just need to start writing checks I guess yeah are there entire job categories that you think are kind of more at risk is the wrong word

but like more in the in the strike zone for what deep research is exceptional so for example I'm thinking Consulting uh but like Are there specific categories that you think are more in strikes on yeah I used to be consultant I don't think any jobs are at risk like I I don't really think of this as like a labor replacement kind of thing um at all like it's uh but for these types of knowledge work jobs where like where you are spending a lot of your time kind of looking through information and making conclusions I

I think it's uh it's going to give people superpowers yeah I'm very excited about a lot of the medical use cases just the ability to um find all of the literature or all of the recent cases um for a certain condition I think I've already seen a lot of doctors posting about this or like they've reached out to us and said oh we used it for this thing we used it to help find um a clinical trial for this patient or something like that so just people who are already so busy just saving some time

or um it's maybe something that they wouldn't have had time to do so and now they they are able to have that information for them yeah and I think the like the impact of that is like maybe a little bit more profound than it sounds on the surface right it's not just like it's not just like uh you know getting 5% of your time back but it's um the the type of that might have taken you four hours or eight hours to do um now you can do for you know um a chat gbt subscription

and 5 minutes and so like what types of things would you do if you had infinite time that now maybe you can do like many many copies of so like you know you should should you do uh research on every single possible startup that you could invest in instead of just the ones that you have time to meet with things like that or on the consumer side one thing that I'm thinking of is you know the the working mom that's too busy to plan a birthday party for the for her like now it's now it's

doable so it's I agree with you it's way more important than 5% of your time it's all the things you couldn't do before exactly what does this change about education and the way we should learn and you know what what will you be teaching your kids now that we're in a world of agents in deep research yeah education's been like one of the top few things that people use it for um I think it's I mean this is true for atrib generally it's it's like a if uh like learning things by talking to um an

AI system that is able to like personalize uh the information that it gives you based on what you tell it or uh maybe in the future what it knows about you um feels like a much more efficient way to learn and a much more engaging way to learn than uh like reading textbooks we have some lightning round questions all right okay your favorite deep research use case I'll say yeah like personalized education just like learning about anything I want to learn about i' I've already mentioned this but I think a lot of the personal stories

that people have shared about finding information about a diagnosis that they received or someone in that family received have been really great to see okay we saw a few application categories breakout last year so for example coding being being an obvious one what application categories do you think will break out this year I mean clearly agents agents I was gonna say too okay 2025 is the year of the agent I think so yeah and then how do you think about what piece of content that you should recommend people reading to read to learn more about

agents or where the state of AI is going could be an author too training data this podcast not I think it's it's like um it's so hard to keep up with the state-of-the-art in AI um I think the like the general advice I have for people is like um pick one or two subtopics that you're really interested in and go like cu cre a list of people who are we think are saying interesting things about it and like how to find those one or two things you're interested in um maybe actually that's a good deep

research use case like you know go go uh go use it to F like go deep on things that you want to learn more about this this is a bit old now but I think a few years ago I watched the I think it's called like foundations of RL or something like this from pel and it's um it's it's a few years old but I think that it was a good introduction to reinforcement Landing so yeah would definitely second any any content by uh Peter AAL my grad school adviser yeah oh yeah yeah okay reinforcement

learning is it you know it kind of went through a peak and then felt like it was in a little bit of adrum again is speaking again is is that the right read on what's happening with RL it's so back yeah so back yeah why why now because everything else is working like uh I think if you um maybe people have been following the field for a while we'll remember the Y laon cake analog analogy if you're building a cake um then most of the cake is the cake and then there's a little bit of

frosting and then there's a few cherries on top and the analogy was that like unsupervised learning there's the cake supervised learning is the frosting and uh reinforcement learning is the cherries on top when we in the field were working on reinforcement learning back in you know 2015 2016 it's kind of like I think uh uh Yan lon's analogy which I think in retrospect is probably correct is that we were like trying to add the cherries before we had the cake um but now we have language models that are pre-trained on massive amounts of data and

are incredibly capable um we know how to uh how to you know do uh supervised fine tuning on those language models to make them good at instruction following and like generally doing the things that people want them to do and so now that that works really well it's like very ripe to uh to tune those models for any kind of use case that you can define a reward function for great okay so from this lightning we got agents will be you know the breakout category in 2025 and reinforcement learning is so back I love it
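The RL point made above, that a capable model can be tuned for any use case you can define a reward function for, and the earlier lesson that "you get what you optimize for", can be illustrated with the simplest policy-gradient method. This is a minimal sketch under stated assumptions, not the method OpenAI used for Deep Research (the conversation does not say which algorithm that was): REINFORCE is a textbook stand-in chosen for brevity, and the three strategies, their success probabilities, the learning rate, and the baseline rate are all made-up illustrative values.

```python
import math
import random

# Hypothetical toy task: on each episode the agent picks one of three research strategies.
STRATEGIES = ["answer from memory", "one quick search", "search until sources agree"]
# Made-up chance that each strategy yields an answer judged correct (illustration only).
SUCCESS_PROB = [0.2, 0.5, 0.9]


def reward(action: int, rng: random.Random) -> float:
    """Outcome-based reward: 1.0 if the final answer is judged correct, else 0.0."""
    return 1.0 if rng.random() < SUCCESS_PROB[action] else 0.0


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps: int = 2000, lr: float = 0.1, seed: int = 0) -> list[float]:
    """REINFORCE with a running-average baseline on a softmax policy over strategies."""
    rng = random.Random(seed)
    logits = [0.0] * len(STRATEGIES)  # start from a uniform policy
    baseline = 0.0
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(range(len(probs)), weights=probs)[0]
        r = reward(action, rng)
        advantage = r - baseline
        # Gradient of log probs[action] w.r.t. logits[k] is (1 if k == action else 0) - probs[k].
        for k in range(len(logits)):
            indicator = 1.0 if k == action else 0.0
            logits[k] += lr * advantage * (indicator - probs[k])
        baseline += 0.01 * (r - baseline)  # slow-moving estimate of the expected reward
    return softmax(logits)


for name, p in zip(STRATEGIES, train()):
    print(f"{name}: {p:.2f}")
```

Nothing in the loop tells the policy which strategy is "right"; it only sees the outcome reward, and probability mass shifts toward whatever earns it. That is the sense in which the reward function, not hand-written logic, ends up defining the behavior.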

Thank you guys so much for joining us. We loved this conversation. Congratulations on an incredible product; we can't wait to see what comes next.

Thank you.

Thank you.

[Music]
