Debate on AI & Mind - Searle & Boden (1984)

Philosophy Overdose

John Searle and Margaret Boden discuss a few philosophical issues regarding artificial intelligence ...

Video Transcript:

Good evening. It isn't hard to think that there's something unique, and indeed mysterious, about the race of man. For a start, there don't seem to be others like us around. Still, large items of belief or faith that support the perception of our uniqueness and mystery have given way. The rot set in, I suppose, when we learned from Galileo that we don't have the prime position in the universe, at its center. We then turned out, or so it seems, not to be the children of God. To come up to date, no doubt what most supports

our pretensions to uniqueness and mystery is the belief that our mental powers of understanding and creativity, and our mental capabilities generally, are our special preserve: we alone have the physical equipment, the brains, which are the means to understanding and the like. There's now an intellectual growth industry whose tendency it is to remove our claim to distinction, partly by way of a new conception of mind or mentality itself. The industry is called artificial intelligence, and it is inspired by extraordinary developments in computer science which, some would argue, are taking us into a new culture. Many who work in artificial

intelligence are confident that they have a model of the mind, a model of us, which is more precise, fertile, and enlightening than anything from philosophers, from Freud, from benighted psychologists who attempt to reduce mentality to behavior, or from neuroscientists present or future. It's certainly not a model that makes us special. There is no doubting the confidence of the new industry, the confidence of, may I say it, the artificial intelligentsia. Computers themselves are not the main interest of those who work in artificial intelligence, who seek to bring the mind out of its obscurity. They're not that

much concerned with the hardware. They're quick to say that intelligence or creativity or understanding or consciousness are not to be seen, at bottom, in terms of mechanisms and boxes from IBM, or in terms of our brains, or in terms of any other physical structures or materials, dead or alive, constructed or evolved. The hardware, including the neural hardware in our heads, doesn't matter; it's the software that counts. A bit more precisely, it is programs that count. Very roughly, they are the rules on which computers run. They are the rules, if the proponents of artificial intelligence are

right, on which we run. One question which arises for us tonight, then, is whether minds are just computer programs of certain kinds, or rather just programs, since certainly they don't need to be on computers. Can it be that the mind is yet less than a ghost in the machine: just, as they say, an abstract sort of thing? Is it true, then, that computers have mental states? That we shall find out about our minds by studying theirs? That we shall find out about our consciousness, intelligence, creativity, presumably our desires and passions, by studying theirs? That

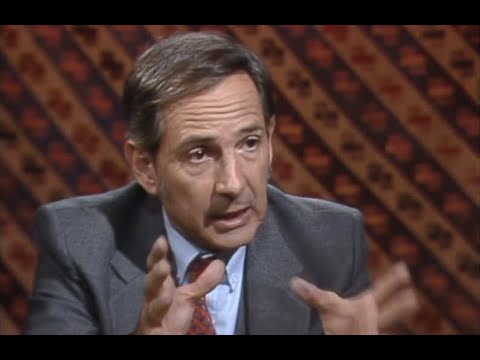

our future is perhaps a bit uncertain, since the computers of the future will have what we have and a whole lot more of it? Professor Margaret Boden of the University of Sussex is, as I trust she won't mind my saying, the doyenne of artificial intelligence in these islands. She has lately been elected a member of the British Academy. Her books include Artificial Intelligence and Natural Man, which surveyed its subject most fully, and Minds and Mechanisms, which collects together some of her many papers. Professor John Searle of the Department of Philosophy of the University of California

at Berkeley has, among his present attainments, this year's Reith Lectures for the BBC, and among his attainments of the past a first-class row. It was caused by his article "Minds, Brains, and Programs", which was first published in a professional journal along with 27 replies, including many from the world's leading proponents of artificial intelligence. It was, and is, his contention that there is no reason to suppose that computers have, or ever could have, thoughts or feelings. He has perhaps further doubts about artificial intelligence: he allows that a myth has grown up, the myth of the computer, but

he believes that soon enough it will give way to what, I'm sure, he calls good sense. Could we begin, Professor Boden, with some of your views? I think it would perhaps be useful to have an idea of what you take a program to be.

Oh, a program, as you said, is just a set of rules, basically for moving symbols about. But an AI program is a very different sort of program from the ones that we're normally used to, because an AI program is one where there is a rich representation of the world that the

system is thinking about, inside the program if you like. And this is why AI is of interest to psychologists, and also, I think, to philosophers of mind: because it's concentrating on what goes on in between the input, the perception if you like, and the output, the behavior. It's concentrating precisely on something which is in the same place, if you like, in the function of the organism as the mind is: something which the behaviorists, for instance, totally ignored.

So programs, then, are at least fundamentally sets of rules, but very rich sets of rules. Is it true in virtue

of programs that consciousness might truly be ascribed to future conceivable computers? I think you've said that they might properly be ascribed concepts. Do you stick to that?

I think that they might properly be ascribed concepts, yes. But I think there's a difficulty with words like consciousness, or even purpose and intelligence, freedom, any of these words. And that's basically not a factual difficulty, because I don't see any reason in principle to doubt that, in fact, you could have a computer system which had the

same sorts of events going on in it, computational events, which we have in our minds when we, for instance, make a free choice. I think it's a moral difficulty. I think that once you agree to take the scare quotes off words like purpose and intelligence and freedom, if you're prepared to say that a particular computer program or robot really is free, for example, then what you're saying is that it's part of your moral universe, and that, all things being equal, you've got to respect its purposes, unless they clash too much with

your own. Now, I think that's a very strong moral thing to say. I personally wouldn't want to see the day on which we said that. I would want to keep a distinction between the purposes of naturally evolved creatures, including chickens and cows, and the "purposes", in quotes, of artificially created creatures such as computer programs. But I think I should say that that's my view; it certainly isn't a view that would be shared by everybody in AI. I think most people in AI would say that, since I've agreed that in principle there's no factual difference here,

they would want to go the whole way and say that in principle there could be a computer which was really conscious, which really had purposes, which was really intelligent.

So we think and have beliefs, and computers "think", in inverted commas, and "have beliefs", in inverted commas. Is the difference between those two contentions only one of a moral character? Did I understand you?

Well, at the moment it's a difference of fact. I mean, even the most powerful and impressive AI programs that we've got today hardly begin to scratch the surface

of the sort of power and complexity that the human mind has got. So there's no question whatsoever that you can't use these terms, except in a very weak sense, about today's programs. Again, I personally believe that that will always be true. I don't actually believe that in practice we will ever build a computer like HAL in the film 2001: A Space Odyssey. I don't think it will happen in practice. Again, other people would disagree with me, but I think that in principle it's possible.

John Searle, you have strong views on the subject of artificial

intelligence, and I think those views focus on one argument.

Yeah.

And it's something about a Chinese room.

Well, let me tell you...

I wonder if you would tell me what it aims to prove, and then tell me what it is.

Okay. I like to talk about particular programs and the sort of thing that's claimed for them. So let's take as an example the program that the Yale artificial intelligence lab has for understanding stories, that's "understanding" in quotes. The way it works is this: you give the computer a story. These tend to be rather

simple-minded stories. Here's a story: "A man went into a restaurant and ordered a hamburger. When they brought him the hamburger, it was burned to a crisp, so the man stormed out of the restaurant without paying the bill." Now you ask the computer, "Did the man eat the hamburger?", and lo and behold, the computer says, "No, he didn't eat the hamburger." Now, that's an interesting result, because, you remember, it didn't say in the story whether or not the guy ate the hamburger. One up for the computer. Okay. Now, that's presumably doing something we

could do. It's passing the test that we would expect a child to pass for understanding a story. Okay, how does it work? Well, the computer has in its data bank, in addition to the story, a representation of how things go on in restaurants, called a restaurant script, and it matches the story and the question against the restaurant script before it gives the answer. Now, what are we going to make of this? Well, what I call the strong AI people, and I'm really not sure Maggie is one of them,

but the people who are sort of the gung-ho fanatics in AI, say this proves that the computer literally understands the story. Now, I think I can demonstrate conclusively that it proves no such thing, and in fact that the computer doesn't understand the story.

Is that understanding without inverted commas? No quotes here, honestly, John?

Understanding, I mean. And to their credit, a lot of people in AI insist, "We are using these words in their literal sense." Simon and Newell say the understanding we attribute to the computer is the same understanding we attribute to human beings. Okay, what

I ask you to imagine is this. I like to do it in the first person, and have everybody do it from their own point of view. Imagine I'm locked in a room, and I've got a huge box full of Chinese symbols. I don't know any Chinese; they're just so many squiggle-squiggle signs to me. Now they bring in another box full of Chinese symbols, and they give me a bunch of rules in English for matching these symbols. So it says: take a squiggle-squiggle sign out of box number one and go and put it next

to a squoggle-squoggle sign out of box number two. Just to jump ahead a second, that's called a computational rule over formally specified elements. Okay, now let's suppose they give me another little batch of Chinese symbols, together with some more rules in English for shuffling these symbols around, only this time I give back some Chinese symbols through the window to the chaps who are giving me all this stuff. So here I am in my room with my three bunches of Chinese symbols. I'm shuffling symbols around; symbols are coming in and

I'm shuffling them back out. Now imagine that, unknown to me, the guys who gave me the first box call it a restaurant script. The second box they call a story about a restaurant. The third bunch of symbols they call questions about the story. The rules in English they call the program. The stuff that I give them back through the window they call answers to the questions about the story. And me, they call the computer. Now let's suppose that after a while they get so good at writing the program, and I get so good

at shuffling symbols, that my answers to the questions are indistinguishable from those of native Chinese speakers. So the story comes in; I don't know what it means, it's just a bunch of symbols. But the question follows: "Did the guy in the restaurant eat the egg foo young?" And I give back the symbol that means "No, the guy didn't eat the egg foo young." Now, I've passed the test for understanding the story, but I don't understand a word of Chinese.

I think that is the Turing test.

Well, Alan Turing, a great British mathematical genius, invented the test for determining

whether or not we should attribute understanding to the computer, and the test was: could the computer fool an expert? So let's suppose that I can fool the experts: my answers to the questions are as good as those of native Chinese speakers. Imagine they even give me a question of the form "Do you understand Chinese?", and I give them back answers that mean, in Chinese, "Of course I understand Chinese. Why do you guys keep asking me these stupid questions? Can't you see I'm passing the Turing test?" Now here's the punch line. This is the point

of the whole analysis: I do not understand a word of Chinese, and if I don't understand Chinese in this example, neither does any computer, because the computer has exactly what I have. The computer has that and nothing more: it has a set of formal rules for manipulating symbols. It takes symbols as input, it manipulates the symbols, it gives symbols as output. And if I don't understand Chinese, which I obviously don't, there's no way any other digital computer could understand Chinese, or anything else, because all it has is uninterpreted formal symbols. Or just to put this

again in one last sentence: what the computer has with the programs, and Maggie described this very well, is a syntax and not a semantics. What it's got is a set of formal rules for manipulating uninterpreted formal symbols. But what human beings have is not just a bunch of symbols: we know what the symbols mean. You give me a story in English and I know what it means.

So what the computer has is rules for putting terms together, but no rules specifying what the terms stand for?

Something like that. And furthermore, it couldn't,

because suppose you tried to give a computer an interpretation of the story. So you feed in more symbols that say, in Chinese, that the symbol for egg foo young means a certain kind of egg dish made by putting a whole lot of soy sauce in when you cook the eggs. Well, there's no way that the computer can know any of that, because what it gets is just more symbols. That is, any effort to give an interpretation of the symbols results only in more symbols, and that's by definition what a program is.

Right. Professor Boden,

that argument, as you know, has enraged a lot of people. I wonder if it enrages you.

It enrages me a bit, too. You shifted, John, from talking about a specific example, the Yale program with the restaurant script and the hamburger, to talking about any computer. I think that was a bit naughty, because if you stay talking just about Schank's program, I entirely agree with you: these things do just shift symbols around; they've got no sense of what a restaurant is, or a hamburger, or anything else. I entirely agree. But then I think there

are two things that you would need to add to that sort of program to get a program which was much nearer to having genuine meaning, genuine intentionality. One is, as you said yourself, you've got to have some semantics plugged in. Now, how are you going to do that? I agree you cannot do it, in principle, purely by adding more formal rules, which by definition aren't interpreted in terms of things in the real world. And I think that you've got to have some causal relationship built into the computer, just as it's built into our brain, into

our retina, whatever, in order to establish the semantics, in order to establish the reference. And you've not only got to have sense organs, if you like, taking information in, in causal commerce with the world; you've also got to have the thing manipulating things, so that if the egg foo young has a certain chemical in it, it will spit it out, and so forth, as we do. Now, if you say that that reply isn't really very clear, because there are all sorts of questions, all sorts of problems you could raise

about whether I really have plugged semantics in, I would say you're absolutely right. But then I would say we don't understand clearly, from the philosophical point of view, what semantics is in the human case. We don't understand what it is for you or me to refer to that glass. So, to be fair, we shouldn't ask for a clearer account of semantics in the AI case than we ask for in the human case. That's the first point I'd make. The second point is that I also think there's a little bit of sleight of hand

when you talk in this dismissive way about shuffling symbols: you have us imagine this man with the table where he looks up squiggle-squiggle, and he looks across and finds that squoggle-squoggle goes in this box and out of the window. Even the programs that Yale have at the moment, and, as I said before, those are absolutely minute and paltry compared with anything that would have genuine psychological interest in explaining what understanding might be, even his programs are much, much more complex than that. And not only much more complex, but they

involve a wide range of sorts of internal representations which represent, which model if you like, and we'll put all the scare quotes on, okay, things like the beliefs, desires, and intentions of the person who's listening to the story and emotionally reacting to it, and of the various characters in the story. And anything which is going to be at all a candidate for being the same sort of thing as a human mind has got to have all that. So I entirely agree that his program doesn't have any sort of semantics, but I'd like to know what you'd

say about the programs of people like David Marr or Geoffrey Hinton, which are basically visual programs. And the point of interest about them here is that they are built in what are called dedicated machines. That's to say, they're built in machines which, like the retina of our eye, have special little bits in them which react to the light coming in in a certain way, so that they can suss out what the three-dimensional shape of something like this is, given that they're only getting a two-dimensional image in. Now, what would you say about that?

Let's take

both of these points. First of all, the point about complexity. It seems to me my argument has nothing to do with the simplicity or the complexity of the program, because the point is a perfectly general point. The point is that, however simple or complex the program is, in order that it be a computer program it has to be defined purely formally. The whole appeal of artificial intelligence, and indeed the power of computer science, is that one and the same program can be instantiated in an indefinite range of different kinds of hardware, precisely because it

is defined purely formally. So I would say, as far as the Chinese room is concerned, make the program as complicated as you like, put in as many representations of as many facts about the world as you like, and the argument remains exactly the same. I still don't know any Chinese, because in the way that the system is set up I have no way of attaching any meaning to the elements in the program, to the symbols that the program operates over, regardless of how complex the program is. So I think the issue of complexity is

a red herring. But I don't think that's your main argument. Your main argument, if I understand you correctly, is that it's causation plus the program that gives us the mind. Now, I already want to say that is an abandonment of AI as it's traditionally conceived, because now we're not saying the mind is a program, that mind is to brain as program is to hardware. What we're saying is it's program plus causation. So let me consider that, if I may, for a couple of minutes. Is it causation on the way into the computer, or causation on the

way out? The way Maggie had it, and I listened very carefully, is both, and I think that's a more powerful... I mean, that's the argument I'd like to challenge, because that's a more powerful argument.

So if you had a computer with a good program attached to a robot, right, and it was causally affected by the environment, and in turn acted causally so that it behaved in the right way, then on Professor Boden's view it would understand, or "understand" in inverted commas, and on your view it would be nowhere near it.

That's right.

Let me go through what I think would be the strongest version of Maggie's case. Imagine a robot, and it's got television cameras as its eyes, and it's got a motor apparatus attached to the computer in its skull. And it takes in visual stimuli, and let it use the Marr program to process the visual stimuli; it goes through the primal sketch and all of those things, and then it has motor output based on the set of interfaces between the sensory input and the motor output. In short, the way

that we've described it is much like the way a lot of people who believe in the computer theory of the brain describe the way that our brain mediates sensory inputs and motor outputs. Okay, I want to say the Chinese room applies exactly to that case. Imagine that in this robot there's a big room in the robot's skull, and I am in that room, and what comes into me from these television cameras are a lot of Chinese symbols, and I have no idea where they come from. I'm just paid to sit

in this room and follow the instructions and shuffle the symbols around. Now, I then pass back certain symbols, or put them in the appropriate slots and push the appropriate buttons, and, unknown to me, what I'm doing is mediating the sensory input to a motor output. But all the same, what I have is what any digital computer has: formal symbols, uninterpreted. And you can give them any causation you like; as long as there's no mental representation of that causal relationship, they give me no way of attaching any meaning to the symbols. So let's take an actual

case here. Here I am, and I am the robot's brain now, or, if you like, its homunculus, and the robot eats egg foo young. At this point a symbol comes into me that means egg foo young. Unless I have some way of knowing the causal history of that symbol, that is, unless I have some mental representation of the causal relationship, I still don't learn the meanings of any words. That is, as long as the form of causation isn't mental causation, as long as it's just ordinary physical causation, it won't

give me the meanings of any symbols.

Meanings seem to be the crux here.

Yes. I mean, can I make two responses to that, John? The first is that you've weakened your homunculus in the story that you just told us. You aren't the robot; you aren't even the robot's brain; you are a subsystem in the robot's brain. Now, there are lots of subsystems in our brain, lots of distinguishable psychological processes, which are used, for example, in understanding a sentence, or even in working out what the syntax of the sentence is. Now, nobody

would want to claim, I think, that one of those processes actually understood what syntax was, in the sense in which you and I, as human beings, understand it. So you cannot cheat by cutting off the semantics, by ignoring the fact that there are these causal relationships with the outside world in this robot, and having a truncated homunculus where you've deliberately got rid of the semantics. That's the first thing I'd say. And the second thing I'd say is that when we talk about causation here, it's very important to realize

that there are two sorts of causation that we need. One, which we need to get the meaning in, is the plugging into the world, the causal commerce with the world, in the way that we just talked about: what you might call pure physical causation. Light comes into my eyes and makes things happen on my retina, and this body in the world touches that and moves in physical space. Okay. But in between, there is psychological causation, what you call mental causation. Now, I would want to say that that psychological causation is best

understood as a series of computations, computations which ultimately are anchored in processes with a genuine causal semantics, if you like, of the sort that I've been mentioning. But when Marr, for example, talks about vision, he doesn't just talk about the way in which the light affects the cells in the retina. He then goes on, as you know, to talk about a number of different levels of representation in the visual system, which are needed even just to work out something so simple as what the three-dimensional shape of that glass is. And each of those levels of

representation is defined by him very carefully in terms of a set of symbols which might conceivably have been different, each of which is defined in terms of the more primitive symbols at the level below. And ultimately, of course, you've got the level at which the eye is actually functioning as a physical transducer; namely, you've got a plugging-in of your semantics causally into the world. So there's physical causation in that sense, which is crucial, but we mustn't forget that there's also mental, or, I would prefer to say, computational causation.

The crucial point

in my analysis is that, since the formal computer program has no semantic or mental content by definition, any artificial system that is supposed to give it mental content will have to do something which is equivalent to what the human brain does, because that's what the human brain does. But on the way that you've described it... and incidentally, in my version of the example, I was supposed to be the whole brain. That is, it isn't that there are a whole lot of other brains in there; I am the entire brain. I do

all the Marr processing as well as other sorts of processing. Now, on that way of describing it, there is no way that the causation plus the symbols could produce any mental content, because there's nothing in the system that performs functions equivalent to those of the human brain. Now let me take these two propositions, because they are crucial to the argument. We got one out at the beginning, namely, syntax is not semantics: the syntax of the program by itself doesn't give us semantics. Now, the second proposition, which is implicit in my account, is: brains do it,

brains cause minds. Now, if you take those two together, then we know that the way that the brain does it can't be solely by manipulating syntax, because we know that the syntax isn't enough by itself. Now, what you've suggested is, well, let's add causation to the syntax, and that way we'll get what the brain does. But the answer to that is that the only way it would do it would be if you added something that was more than what any digital computer has, namely something that was biochemically the functional equivalent of the human brain.

Well,

I think at this point I have to say that we'll come to your answer in just a few moments, when we come back.

John, you were saying before that, certainly, programs aren't sufficient for mentality, to wrap it up rather quickly, but, on top of that, that there's some limit, some restraint, such that only certain sorts of things could conceivably have consciousness or mentality. And I think, Margaret, you were keen to suggest that he was mistaken about that.

Because of the way John put it: he talked about systems having the functional equivalent of

the biochemical nature of the brain, and I think that that could easily be taken as a mystification, even if a materialist mystification. Of course it's true that if the visual pigments that you find in eyes were the only chemicals in the universe capable of responding to light, then only brains could support vision. Certainly that's true. And there may, as a matter of empirical fact, be certain sorts of chemical interchanges going on in our brains which can't have any sort of functional equivalent anywhere else. But I see absolutely no reason whatsoever

to believe this. I mean, the important point is not what the biochemical nature of the brain is, but what the functions are which that biochemistry is subserving, is supporting: what are the computational functions it's supporting? And I see no reason whatsoever to expect that there's something special or magic about protoplasm such that there are certain computational functions which, in principle, only it can perform.

There's an interesting way in which I think your remark begs the question, by saying that what the brain does is perform computational functions. So let's go over this. There are

two propositions that form the basis of my argument. Both of them seem to me to be obviously true, and what I'm really trying to do is work out their consequences. One is: syntax is not the same as semantics; you don't get semantics from syntax alone. The second is: just as a matter of biological fact, brains cause minds; that is, specific brain processes cause mental states. Now, if you put those two together, you don't get the result, and I certainly didn't mean to imply, that only brains could cause minds, that the

system would have to have a brain like ours to have a mind. But you do get the following result, and it's a trivial consequence of the proposition that brains cause minds: anything else that was capable of causing mental states would have to have powers, specific powers, equivalent to those of the brain. Now, maybe Martians do it by having a lot of green slime in their heads, or maybe you could do it with silicon chips, but you know that if the brain does it, then anything else that did it

would have to have powers equal to the brain's. Now, if you go back to the first proposition, namely that syntax is not enough, you know that the way that the brain does it can't be solely computational, because you know that all the computation does is operate over formal symbols. You furthermore know, and this is another logical consequence, that if you built an artifact, a robot or something else, that did it, you would have to have more than just the formal syntactical processes; you'd have to have something that duplicated the powers of the brain. Now it becomes a

question: well, what kinds of systems would do that? Now, here's where I really part company with AI. The strong AI position is: any hardware whatever that had the right program. And I gather you want to add to that: any hardware whatever that had the right program together with the right causal relations to the external world. Okay, but that would have the consequence, for example... let's suppose we build one hell of a big computer out of old beer cans. You can make computers out of anything: you can make them out of men sitting on high

stools, or you can make them out of water running through pipes. So let's build a computer out of old Watney's cans. I don't know if we're supposed to mention particular labels, but anyway, old beer cans.

You've done it.

I've done it. Okay. So we have millions, billions of old beer cans banging together, and they're powered by windmills, and we've got the right inputs: we have transducers that transduce photons, so we've got a perception of light. Now, I want to say it just seems to me incredible that this system of beer cans

the biochemical properties of the human brain. And I want to say, now here's my main objection to AI: AI doesn't say, "For all we know, beer cans might have thoughts and feelings." What AI says is, if they've got the right organization, they must have thoughts and feelings, because all there is to having thoughts and feelings is instantiating the right program.

Professor Boden, are you committed to the view that a pile of old beer cans with the right causal connections and the right program must have a mind, or whatever?

Well, in principle, in as strong a sense as any computer could be said to have a mind. I mean, I already said earlier in the program that I personally wouldn't want to say that it did, for basically moral reasons. But, as I said, in my view it isn't a factual impossibility. But can I pass, actually, John, from the strong AI position, which we've been talking about, and which actually isn't really my position anyway, speaking personally, to the weak AI position? You see, I personally am not primarily interested

in computers I'm interested in the human mind I'm interested in how it works how it relates to the brain and what the hell goes on up here right and I'm interested in AI because I think it gives us a way far and away the most promising way that I can see at the moment of thinking about these things and of asking a new set of questions using some of the concepts that computer science has given us and of suggesting hypotheses which are much richer much more precise much more complex than any we've

had before and which also have their own dynamism I mean you can see what their results are by putting them into a computer and letting the thing run and I think from the point of view of theoretical psychology this is very interesting I've already mentioned that I think that some of the work in low-level vision in AI has been psychologically very useful not that it's given us the answers about just what's going on even about that but that it's given us some very interesting ways of asking new questions and there's already been I think

some genuine advance there but let's get off that topic because somebody might say well you know who's really interested in how you get from 2D to 3D it's pretty boring let's take something like I don't know creativity which is one of the things that people always bring up as what they regard as an obvious counterexample you know you couldn't possibly come to understand human creativity better by thinking about it in this sort of way well I'd like to make two points there first of all there's no alternative because it seems

to me there are two theories in quotes about creativity at the moment neither of which is at all helpful one is basically that it's divine I mean Bernard Levin the theater critic actually said so in two pieces in The Times a couple of years ago when the play Amadeus about Mozart was running he actually claimed creativity is divine and couldn't possibly be explained by human psychology the other is that well it's not divine but it's sort of superhuman it's something special it's intuition it's something which perhaps all of us have but

some of us have to a much greater degree Mozart for example but it's unanalyzable it's special and again it's not something which science never mind computers could explain now that seems to me to be defeatist I mean it may be true but we'd better look a bit harder before we decide that it's true and I'd like to ask you know how intuition works what it means to say that something was found out by intuition and just take one example just for you to kick around I'm sure you'll be happy to

a program written by a man called Lenat I pick it not because it's a very very impressive program but because it's the most impressive program so far in this sort of area it's a program which explores mathematical ideas it doesn't just do sums according to preset rules it actually kicks around the ideas in its mind so to speak changes them transforms and combines them and asks itself from time to time have I got anything here which looks as though it might be interesting and if it finds something which it thinks might be interesting

in terms of the criteria it has of mathematical interestingness then it concentrates on that and tries to prove theorems and so on to cut a very long story short and omitting all the technicalities this thing AM has come up with a large number of ideas which it labels as being interesting which mathematicians also would agree are interesting things like prime numbers and square roots and so on it's even come up with one theorem which was a new theorem in the theory of numbers nobody in the whole history of mathematics had ever thought of this

before certainly passing the Turing test it's passing the Turing test now and it's doing this as I say by having rules which are if you like rules for exploring and I think basically that's what creativity is it's a question of exploring the structures in your mind putting some of the concepts together changing some of the constraints on the structures that are in your mind not necessarily consciously and this is what's churning underneath in our minds underneath what we call intuition well it seems to me we've now got several pieces on the board

I'm not quite sure where your final position on strong AI that is the view that computers literally have thoughts and feelings is but I want to insist that even in this case of the theorem proving program if it really is a formal program I mean we've actually had these theorem proving programs for quite a long time now it would not be a case of actually having thoughts it would be a case of simulating the production of proofs I mean the actual prints but I think it

was the creative production okay let's talk about creativity A it wasn't a theorem prover in the ordinary sense but B I'm not interested for the moment in the question of whether or not you can say that that program is really creative what I'm interested in is whether or not we can understand human creativity better by thinking about it in terms of this analogy okay and that seems to be a factual question I mean this seems to be an empirical question and I'll translate it as precisely as I can and that

is are the principles on which the human brain operates that is to say those principles that are crucial for such things as creativity do they fundamentally involve some digital elements that is let's grant for the sake of argument that strong AI is false that the computer doesn't literally have thoughts and feelings now then what good is it in the study of human psychology well one line would be and it's one that I would agree with it's a very useful tool it's a useful tool in studying human beings in the same way

that it's a useful tool in studying avalanches or patterns of rain in the Midlands or the economy of South American countries it's just a very useful tool but there's a stronger view which I think you hold and that is we have very good reason to believe that even if the program isn't sufficient for having mental states it's nonetheless necessary for having mental states so that we can study creativity by studying machines which while they don't literally have thoughts and feelings nonetheless do something analogous to producing human creativity now let me tell you why I'm a little

bit suspicious about that first of all there's a long history of identifying whatever is most puzzling to us about the mind and the brain with the latest technology nowadays we're told the brain is a digital computer when I was a boy we were always told it was a telephone switchboard what else could it be and if you go back to Freud Freud always identifies the brain or analogizes the brain to electromagnetic and hydraulic devices Sherrington identified the brain with telegraph systems Leibniz compared the brain to a mill

and I'm told there were some Greeks who thought the brain functioned like a catapult some brains do so we ought to be suspicious of fixing our model of the brain on whatever is the latest technology and the real question is this it seems to me that I haven't seen a shred of evidence to show that psychology is best understood on the computational metaphor regardless of the truth or falsity of strong AI the claim that the key to understanding psychology is to see the operation of the human mind on digital principles

seems to me to have been totally unsupported when you said that of course you agree that the computer is a useful tool for thinking about the mind just like it's a useful tool for thinking about hurricanes or whatever now I suppose what you meant by that was it's a useful tool because it gives you precision and it can work out the results of your theories for you quickly and so on I mean that's not to be sneezed at no other psychological method has got it agreed but I certainly would want to claim and I'm sure

you dispute that it gives us more than this in the case of psychology though not in the case of hurricanes namely that some of the concepts that were developed in computer science in order to build these machines to do vaguely interesting things are the best that we've got at the moment the best the most promising concepts that we've got at the moment for thinking about what goes on in the mind I think they're not the best set of tools and I want to suggest some better tools and I think in fact one of the pernicious

things about AI even though it is a useful tool one of the pernicious things is it distracts attention from what really are the fundamental problems in psychology now I believe the basic notion in understanding the operation of the human mind is the intentionality of the mind that is the ability of mental states and events to represent objects and states of affairs in the world so I'm thinking not just of belief and desire and hope and fear but also of perception and voluntary action now this feature of all of these things namely that they

refer beyond themselves which philosophers and psychologists call intentionality that feature seems to me the fundamental feature in understanding human psychology and human behavior it's no exaggeration to say at least I think it's no exaggeration to say that intentionality is as fundamental to psychology as atomic theory is to physics or the theory of tectonic plates is to geology now here's the problem with AI instead of addressing intentionality on its own terms what it does is address a formal analog and it gets us to think that what we really ought to be studying is

not for example consciousness notice there's very little about consciousness in AI and very little about it in contemporary psychology partly because they've bought this information processing model you have said very little about consciousness in this discussion but conscious and unconscious forms of intentionality are the fundamental notions that we're going to have to use in explaining human behavior now consciousness and intentionality are themselves grounded in the brain they're grounded in the neurophysiology and the great illusion that AI palms off on us an illusion that's been persistent in the social sciences throughout this century is that in

addition to the actual functioning of the brain to produce mental states and in addition to the mental states themselves in all of their tediousness and glory that is actual conscious and unconscious mental states there's got to be some intermediate realm for a long time it was supposed to be behavior and now it's supposed to be some computer program that's supposed to mediate between the neurophysiology between the level of the hardware the level of the plumbing and the level of the mental phenomena themselves so I want to say that our basic notion for understanding

human beings is the one we've known all along not the idea of a formal computational process operating over some abstract formal symbols there's no reason to suppose that that's how human beings work the way they actually work as far as we know anything about it is first they've got brains they've got these neurophysiological engines and those brains produce mental states which themselves function causally by way of their intentionality I quite agree that from the philosophical point of view the basic notion of psychology is intentionality and I would say not consciousness intentionality although of course

any adequate theoretical psychology has got to be able to deal with consciousness so I entirely agree with that and I would use that as a stick to beat those sorts of psychology like for instance behaviorism you know which have had no place at all for that but I want to say a few things about that first of all intentionality isn't the same thing as consciousness for example the experiments no I agree on that we agree on that there are forms of intentionality that aren't conscious that's the first thing the second thing is that really

nearly all theoretical psychologists including Freud took consciousness for granted at the philosophical level and after taking it for granted at the philosophical level some of them went on to ignore it at the empirical theoretical level too some of them didn't but certainly it's not true that theoretical psychology is only concerned with consciousness also it's not true that AI or computational psychology influenced by AI is the only form of psychology that has taken that philosophical fact if you like or that philosophical domain for granted no one

says it's the only branch of psychology my point okay now let me say something more positive than that I think now obviously we disagree one of the reasons one of the specific reasons why I find AI of philosophical and psychological interest is that it seems to me to be a promising way of thinking precisely about intentionality yes the way that it does it and this is really one of the consequences of the Chinese room argument is that we can now show this the way it does it is by changing the subject instead of taking

intentionality on its own terms what it does is try to construct a formal analog of intentionality but we don't know it on its own terms but we do indeed well I've just published a philosophical account of it controversial there isn't a non-controversial account of anything in this field I mean so that's hardly an objection the point however is this in the case of AI what we have is the illusion that we don't have to study intentionality on its own terms that is to say either in terms of its own logical and psychological structure on the one

hand or its physiological underpinnings on the other what we can study is this purely formal analog which we study completely abstractly well I would like one example of a flourishing or a historical form of theoretical psychology which has done better Freudian psychology for example uses intentionality throughout now it assumes intentionality what Freud does is very interesting and incidentally I'm certainly no fan of Freud and what I think you're pointing to is the general impoverishment of psychology as a discipline that's one thing I entirely agree with but from William James through Freud just to

mention two prominent examples much of the best work that's been done on human psychology uses straightforward notions of intentionality James and Freud don't use the jargon Freud often talks as if he's doing some kind of hydraulics of the mind he uses all these electromagnetic and hydraulic metaphors but when you actually get to the clinical practice in Freud the explanations are intentionalistic yes but you see I quite agree with that and if you asked me to name one psychologist who's closest to AI of course not counting the very recent ones who know about

it I would pick Freud precisely because Freud was concerned with the transformation of symbols he was concerned with the relationship of meanings with the way in which symbolic meanings come together and are compared and the way in which a memory is structured and so on all his stuff about parapraxes slips of the tongue all this stuff seems to me in principle to make very good computational sense so I think it's a very strong example the idea that Freud and AI are both talking about symbols rests on a very deep pun I might almost say

it's a Freudian pun because when Freud talks about symbols he means real symbols where something actually stands for something else now the problem with AI is and here we're back to the Chinese room argument there's no way that the symbols in the program can stand for anything they're strictly uninterpreted formal symbols so the idea that both Freud and AI are concerned with symbols is a pun on the word symbol because in the AI sense of symbol namely an uninterpreted formal counter they're not symbols at all well when Rutherford said the

atom was a planetary system he didn't mean that the thing in the middle was emitting light but there you go again with the metaphor of course metaphors are often useful the difficulty is at some point we want to know what is literally true now I want to say as far as we know anything about it Freud has got one thing whatever his other faults are literally right namely human beings are symbolic animals they use some things to stand for other things that is not captured in a well he's got

it right but he's stating it and we all agree that it's true at an intuitive level what he hasn't provided and what I think with the possible exception of your good self no philosopher since has provided is a universally accepted clear complete account at the philosophical level of just what intentionality is waiting for but there is a further difficulty here you apparently recognize that there is some phenomenon which you think Professor Searle hasn't quite got right you do recognize its existence indeed now whether or not it's yet clarified I take it the

dispute between you is whether or not it can in fact be explained in terms of programs and computer simulation and I'm the idea that's got to be universally acceptable well I don't know that I'd want to say it quite as strongly as that at the very I'd certainly be prepared to say that I think it's likely that we'll develop our understanding of it better by thinking about it in these terms but that's another promissory note this is full of them yeah but what we're interested in is

what do we know what can we find out and what is the best way to proceed now I am saying and I think you agree with me the best way to proceed must involve the examination of intentionality yes and you also agree with me no program has intentionality yes but I also say that no atom is really a solar system we don't need it to be a solar system because what we do is study the atom on its own terms and precisely what I'm recommending is we'd better

start studying intentionality on its own terms and not in terms of some currently fashionable mechanical analogy so I really don't see any basis for a disagreement between us if you really agree with me that intentionality is the subject matter of psychology there's no way AI can deal with intentionality except by analogy well science moves by analogy and sometimes it moves forward and sometimes it moves forward by making literal investigations of the phenomena at hand I like your analogy with Rutherford because if we'd just tried to investigate the atom by looking at the solar system we

wouldn't have got very far at some point you have to actually look at the atom now I am saying if you want to investigate intentionality you're not likely to get very far just by doing simulations and analogs you'd better look at the phenomenon itself yes but let's look at the thing just slightly differently we have this fundamental feature of our mental life which you both appear to agree on that is intentionality taking something I mean one might speak of it I suppose in terms of aboutness or directedness as indeed Professor

Searle does now we clearly have that feature and he says computers don't have it or programs don't have it and you tend to come back and say well nonetheless they are rather useful ways of approaching the solution of that problem and it keeps sounding a little bit as if you think they come a bit closer to taking something to stand for something else or come a bit closer to directing themselves onto an object well I don't think they do when they're just programs that are rules for manipulating formal patterns which don't have any causal plugging

into the world but I think that when they do have a causal plugging into the world and there are already a few examples of this then they do really begin to have something which is interestingly closer to intentionality and I also think it seems to me to be a very good bet at least from the methodological point of view that the human mind is such that overlying the basic causal grounding in the transducers and the voluntary action and so on and so forth there are mental processes going on and

the best way that we currently have of thinking about just what those mental processes might be specifically and how they work and in what order they work and so forth is as computations similar in significant ways to the sorts of computations that you have even in current formal programs like for instance Schank's programs to respond to that it seems to me I am really having some trouble getting your theory into focus because I thought you agreed in the beer can phase of our argument that if the beer cans have the right causal relations

and the right input and output then they would have to have all the intentionality that human beings have I thought that was agreed on and your only objection to that was you wouldn't feel morally about beer cans the way you feel about other people and I wouldn't feel morally about them either because I think it's obvious that they don't have any conscious thoughts and feelings or unconscious thoughts and feelings okay now it seems to me your position's weaker than in the beer can phase because now it seems to me you're saying well we sort

of get the best available way of studying intentionality by doing this but that is not really the same thesis indeed it doesn't look like it's consistent that isn't my thesis but I thought you accepted it in the beer can phase of our discussion but now then the second objection I wanted to make is not just that the position is inconsistent but that it begs the question precisely to say the best way of studying it is to study formal computer analogs that's what's in dispute what I'm saying is no there are

two better ways of studying it one is to study the phenomenon itself picking up your example Rutherford would have made a mistake if he'd stuck to looking at the solar system instead of investigating atoms yes so study intentionality itself and secondly another way to study it is to study how it actually works in the brain what its actual causal basis in the brain is but now if you've got those two if you've actually got a study of intentionality on its own terms and you've got a study of its causal basis in the brain then what would be the grounds for

saying the best way to study intentionality would be to study itself well the point is of course Rutherford would have got nowhere if he'd only looked at the planetary system what he did was to look at the atom but in looking at the atom he made use of the concepts he already had which referred to the planetary system so that's the analogy so it's nice to have an analogy to start with and then once we get started we can forget about the analogy I mean it seems to me a very important

point to make of course you must look at the brain of course and one of the interesting things about Marr for example whom we've mentioned is that he makes a concerted attempt I mean never mind whether it's successful he makes a concerted and serious attempt to link what he says with what's known and what might be hypothesized about neurophysiology at a very detailed level now it seems to me what you have to ask about the brain is not just what happens on the causal physical level if you like between this neuron and that but what

mental processes what psychological processes I would like to say what computational processes but anyway what functions are these causal processes serving that's what we have to ask and that is not a question which can be posed at the purely physical level it's a question which is posed at the psychological level and it's a question where again I can only repeat what I said before it seems to me that a very promising way of posing those very questions is by making use of some of the concepts that have been given to us by computer science

and AI well let me just respond to that briefly by advancing another research project one way to start off on any serious research project is to ask yourself well what do you know so far so that we can then use that as a springboard into the unknown well two things we know so far are we have brains and they produce mental states and we have mental states with intentionality and they are responsible for our behavior now it seems to me that right there gives you a research program it tells you okay if you want

to understand psychology you're going to have at least two levels to work on the brain how does it work in the plumbing and intentionality how does it work at the level of mental states whether conscious or unconscious now if somebody then says aha but you should devote most of your effort to a third level that the best way to use your expression here to study this is neither to get into the brain nor to study the intentionality but to study this intermediate level of the program I would say there isn't any reason to suppose

that that promissory note is the one that's going to have the biggest payoff now of course it's possible that anything might develop but if we're just looking at what we now know and what is the most intelligent way to place our resources it seems to me we're much better off proceeding to study the matter at hand namely intentionality and its basis in the brain well we're betting on different horses thank you Professor Boden thank you Professor Searle good night
