Chat with SQL and Tabular Databases using LLM Agents (DON'T USE RAG!)

102.28k views8879 WordsCopy TextShare

Farzad Roozitalab (AI RoundTable)

In this video, together we will go through all the steps necessary to design a ChatBot APP to intera...

Video Transcript:

hello everyone and welcome to another video from llm 0200 series as you requested I'm here with a video to show you how to perform rag with various databases but I want to take you much further than that because rag with databases is not the most effective way that you can interact with them and extract information from them so I created this sub series called Advanced rack series and it is going to contain three videos where I will focus on Rag and Q&A with various databases and I will show you how to convert Excel files CSA

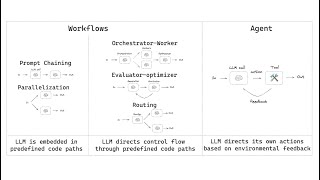

files SQL databases and text files into a SQL database a vector database or a graph database and use large language models to interact with them a few days ago I shared with you this schema on our YouTube Community Channel this is a little bit modified but I will go through all the details so when you want to interact with your databases there are technically two different ways that you can Implement your project the first one is to implement a rack project and the second one as I'm calling it uh Q&A is to implement a Q&A

project there is a fundamental difference between these two when we Implement a rack project we have an embedding model in our pipeline that is going to convert the users question to vectors then we will use that Vector to perform a vector search over the content of our Vector database then we retrieve the most relevant content and we pass it to a large language model to to get the final result and it doesn't matter if we are performing the vector search on a graph database or a vector database but on the other side when I talk

about a Q&A pipeline it means that I have an llm agent that takes the user's question but instead of converting it to vectors it converts it to a query that our database understands then it uses that query on our database to get the results then it passes the results to a large language models and it gives us the answer so with that explanation you probably understood that that is going to lead to a very different performance and behavior with these two approaches so when we use a rag pipeline we are technically looking for some sort

of a semantic relationship between our question and the content of the vector database but when we use an llm agent we are using the exact query that an expert would use to extract the information that we are looking from the database so we expect to get much more accurate and precise answers from our database and we can also ask much more complex questions from our databases so my main goal in this sub series is to give you all these techniques and tools that allows you to implement whichever you want based on your Project's specification and

needs so I divided these techniques into different groups as you can see uh they are distinguished using different colors so we have the blue Group which is the one that I'm going to focus in this video we have the green group and the yellow one and the purple one so for the purple one we already have a video on the channel so uh I'm going to skip that one it is called rag GPT feel free to check it out but in this video I want to show you how to use SQL llm agents and interact

with SQL databases that are created from CSV files Excel files and SQL databases in the next video I will show you how to use llm agents Knowledge Graph and graph database to interact with CSV files and Excel files I will also show you how to perform rag with CSV files and Excel files using graph database and in the third video I will show you how to to use Knowledge Graph llms embedding models and graph database to interact with documents so that is going to be somehow the twin project of rag GPT since in rag GPT

we were also dealing with documents but this time we are going to use Knowledge Graph and graph databases and keep it in mind that Knowledge Graph is going to open up a whole new word for you because Knowledge Graph allows you to leverage the knowledge within your database or the knowledge shared among different databases so as soon as you are able to build and construct the knowledge graph from your databases then you can start asking much more complex questions uh than you would be able to ask from a normal rack pipeline or in some cases

from a Q&A pipeline that is directly connected to a SQL database so Knowledge Graph is just in my opin in the next level of where this whole domain is uh moving toward and it's going to uh address some of the most challenging uh tasks in the domain for instance in the second video I will also show you a project from Microsoft in which they have designed a medical chatbot using a Knowledge Graph so then you can start asking questions about uh how many patients uh were diagnosed with that spefic specific issue or these type of

questions which a pipeline would never be able to give you the answer for that question so I'm going to go through these three projects in this sub series the first two are already uploaded on the GitHub repository and you can go through the repository although the repository is different from uh LM 0200 I will show you quickly the repository after the presentation and the third one I will upload it in a couple of days so the first project is Q&A and rag with SQL and tabular data in this project I'm going to use Microsoft Azure

you can also use open AI I will use Excel files CSV files and SQL data I will also use SQ light and Lang chain so I mentioned that I'm going to use an llm agent the llm agent the SQL agent is uh from Lang chain this is the scheme of that agent and this is the reference for Lang chain website again as you can see the main difference between using an llm agent to perform uh that Q&A that I mentioned on a SQL database versus having a rack pipeline is between these two steps embedding and

Vector search we don't have it here but instead we pass the question to a large language model that large language model is going to create the query then it's going to use it on our SQL database and then it passes the results to another large language model and and we are going to get the answer and everything that happens here is going to happen automatically and on top of that if something goes wrong for instance if something needs to be modified in the query the agent does it automatically for us so it has the capability

to fix itself and try again until it reaches to the proper result to show you how to perform rag on tabular data I will cover two different techniques in this video I will cover the one at the top which is to treat each row of a tabular data as a chunk of a vector database so to give you an example this is the data set of uh the Titanic data set from Kagel the first row has survived zero P class Tre name Mr oven Etc so one way to perform rag with a tabular database is

to create a string from each row so I have a string here which contains the column name which is survived with its value which is zero the next column name is p class with its value which is three and then we have the name Mr oven Etc through the end then I will store this then I will convert this string to vector and I will store both this string and the vector in a vector database so I can perform Vector search on that so in case my data set has 30 rows there will be 30

chunks in my Vector database this is one way to do it and I will show you how to do it in this video in the next video I will show you how to perform Vector search on a specific column so if I have for instance a column here that is called description and that column gives a comprehensive description of each specific row I can only convert that specific column into vectors and then we store the information in for instance in the next video in a graph database and we perform rag only on that a specific

Vector column so these are the two ways that I'm going to cover rag for tabular data there might be other ways uh that uh you can think of but in general again when you want to deal with data sets tabular data sets databases Etc the best way is actually to use LM agents to perform a uh to query those databases using the languages that those databases understand that would be the most effective way to interact with them this is the schema of the project that we will develop in this video and as you can see

I provided the description on the top here so the redlines are the data preparation pipeline for SQL database which allows us to perform Q&A as you can see our chatot is interacting with a SQL database or with a vector database so to create the SQL database I can convert a SQL database Excel file or CSV files into a SQL database which is the red line I can also upload a CSV file and Excel file while using the chatbot which automatically will be converted in a into a SQL database the green lines are for data preparation

pipeline for a vector database so I will show you how to convert a CSV file or Excel file into a vector database the yellow lines are the chat pipeline for interacting with a SQL agent and a SQL database so as soon as the user asks a question that question is going to be passed to a SQL agent that agent is going to automatically generate the query uh use the query on our database get the results pass the results to a large language model and what we get in the output is the answer to our question

and the blue lines are the chat pipeline for interacting with the embedding model llm and a vector database to perform rag on our t data so the user asks a question we pass that question to a to an embedding model we get the vectors we perform the vector search on our Vector database we retrieve the most relevant content we pass those contents along with the system role along with the user's question to a large language model and then we will get the answer from it so now let me show you quickly the GitHub repository uh

the databases and data sets that I'm going to use uh in this project and then uh we will jump on the code and we will see how this whole thing works so this is the GitHub repository that I mentioned earlier it is called Advanced R Series so it is different from llm 0200 as you can see two projects are already uploaded you have access to both they are both functional and you can immediately uh clone the repository and start using them and for this project I'm also going to use Lang chain uh SQL agent I

showed you the link on the presentation I will also include the link in the description and also you can find the link in the GitHub repository read me file I will use three different data sets the first one is diabetus data set from kaggle the second one is breast cancer data set from kaggle and finally I'm going to use uh the shinuk database uh I I'm using them because they are very well-known databases and data sets and they are very easy uh to use in your project but in general as soon as you understand how

the whole project works it is very easy to just fed your own data set or databases and start interacting with them so let me open up the project this is the project schema it is exactly uh the same as I used to uh build in the previous videos but there is one new folder here so from this video I Decided uh to change the way that I explain uh the concepts so first I will go through all the knowledge that we need uh for implementing the project and for running the project then after we understood

uh everything I will go through the project schema and I will show you how to implement it so during the past videos I received a lot of questions about how I can use your projects with open AI models because as you know I use azure open AI so I provided you with this notebook to First verify that you have the right access to your open AI models GPT model and embedding model in case of this specific project the second step is when you want to refactor the project to use open AI all you need to

do is first verify your connections second go through the functions like the places that I'm getting the response from one of those models and in case it is needed to be switched to a new function just switch the function and get the results and pass it to the rest of the pipeline because the rest of the pipeline is going to be exactly the same for me and you if uh you are using open AI directly the only difference would be the way that I interact with my open AI models versus the way that you would

interact with your uh open AI models so if I run this notebook you can see that it is now going through GPT 3.5 and it successfully generated the response from my model and it went through uh my embedding model and it successfully generated the embeddings for this single board another question that I received a lot is how you can pass these uh credentials to your project so all you need to do is to create aend file in your project so I have three let's say variables here so all you need to do is just pass

these variables in front of uh the proper names as you can see here I am getting uh the environment variable with these names so these are the names that I'm using in my environment and then you can easily start using the project because it will automatically get those variables from yourm file and it's going to inject it into the project uh pipeline uh itself so the this is another uh question that I received a lot I hope now you have a good understanding of how to refactor the project now let me uh go through the

pipeline itself so I have as I showed you three different type of data I have uh I have two Excel F two CSV files cancer and diabetes you can also uh add Excel files the pipeline is going to automatically detect the type of your data and it's going to load it uh properly I don't have an Excel file in the project but the code is already able to handle it then I have the Titanic data I have two versions Titanic small and Titanic to show you uh how it works to upload a CSV file while

we are using the chatbot then I also have the shinuk uh SQL data so all you need to do is is to just copy paste uh the information that is given on that web page that I showed you into a folder like this and then uh you are good to go you can easily convert this to a SQL database and start interacting with it I also have this chroma which I will remove it here because we are going to generate it automatically so step one is to prepare our SQL database and our Vector database because

if you remember in our pipeline we had two different databases that would allow our chat B to interact with them a SQL database and a vector database so right now I have these ones the offline data sets and databases on top and I need to convert them to one of these two so let's go through the red Pipeline and first I want to convert my SQL data the shinuk uh database to a SQL database using SQ light so the description says to prepare the SQL database from a do SQL file copy the file into data/

SQL so I have already uh my folder here and then open up a terminal if you are using Linux make sure that you have skite installed on your operating system if you're are using Windows again double check and make sure you have it I already have it so all I need to do is to create a SQL database in my data folder so it is now going to open up a SQL database for me in the next step I will go through all the data in MySQL file and I will copy it in my cql

database so this is the example that I'm going to use with the shinuk SQ light data here we go so it just created this SQL database here and it contains all the information that I'm looking for just to make sure that actually the information was successfully stored in my database you can also run an example so I know there is a table in my uh SQL database called artist and if I just print uh the top 10 value you will see that these are some information within that table so now I know that my SQL

database was created and it also contains the information that I'm looking for in The Next Step you need to create a SQL database from your CSP files and Excel files so you just put your CSV files and Excel files into this folder data/ CSV _ uh xlsx and in the terminal from the project folder execute this python module so I will go through the necessary uh like information that you would need to understand how this module works but for now first let's quickly create our data set all right so I forgot to change the module

name actually uh it is now changed because I also added the vector database module so in case I run this it is going to go through my CSV and Excel files as you can see it detected two different data sets and it's going to create a SQL database that contains all my data sets so my SQL database right now is going to contains two tables one with the name of cancer and the the other one with the name of diabetes and if I open up my data folder you will see that a new database was

created here called CSV XLS xsql database. DB this is step two I just updated the file for step 3 which is to create a vector database for our CSV and Excel files so right now the module is pointed to this folder for upload you can change the uh folder that you would like your module to Point At You can uh change the databases that you would like to be prepared uh for rag but right now if I execute this code it is going to go through Titanic a small and it's going to create a vector

database from the content of my Titanic data set so again I will uh show you in a moment what is happening uh behind the scene for each one of these commands so right now I have my Vector database I have my CSV xlsx cql database and I have my SQL database from my uh shinook SQL data so that was a step one in a step two I want to make sure that I have have the right access and connection to my SQL database so I will quickly uh create a path for my SQL DB this

is going to be pointed at this one and I will just perform a simple query the one that we just saw on uh terminal this time with another table uh called employee on my database so as you can see these are uh the tables that are available on my SQL database and this is the top 10 values of that a specific table itself so again just to avoid any uh confusion make sure that you have the right access to your SQL database in the next step I want to start querying my database so remember I

told you there are two different ways that we can interact with a database One is using Q&A so so I want to use an llm agent that is able to ask questions in the right way from my SQL database the second one was to perform rag so I will show you how to perform rag uh with a SQL database and a tabular data in a moment but right now let's start asking questions from our SQL databases so I need some libraries for this task I want SQL database from langin Community I'm using pip R root

for uh managing my directories and paths and I'm just turning the warnings off for this notebook I give the database folder again it is sitting on my data SQL database and I just create an instance from that database next let's again verify that we have the right access to our database perfect so I have the right access I can uh query my database and I can get the results so next also I want to make sure that I have access to my environment variables because these are going to be used for interacting with the GPT

model and our embedding model perfect so the environment variables are loaded and uh we now can actually read one of the envir environment variables uh from for our GPT model in the next step Let's test our GPT model model perfect so the GPT was uh able uh to give us the answer I have the right connection to my model in the next step I want to create a SQL query chain and start interacting with my database so to create a SQL query chain this is where I'm creating it I need the large language model that

is going to be used in that chain and I need my database so we just created an instance of our database here and I'm going to create an instance of my large language model here so as soon as I pass those two to this function here create SQL query chain and I ask a question that chain is going to give me the query that can be used on that database so if I ask how many employees are there it's going to look for the employees table and it's going to check the number of rows in

the employees table so this is the query that can be used on our SQL database to get the answer for this specific question if we run that query using DB run. response we can see that uh our response is valid it can be used for getting the results from the query and also if we print the chain uh we we can see that uh it is giving us the system role that L chain provided behind the scene for that chain for that large language model it says you are a skq light expert uh given an

input question first create uh a synthetically correct sqi query to run Etc and also it tells the agent what format to use for giving us the answer so in the next step I want to add one more element to the chain which is to query the SQL database tool because here we use the database itself but I want the the the chain to be able to uh execute the query uh as well so again we are going to create SQL query chain with the llm and the database and now I will also create an instance

with my database for execute query from from query SQL database tool class from Lang chain community so again in the previous step we saw that our chain was able to give us a query a valid query that actually can be used on our database in this step we just Implement one more element into that chain which is going to execute that query automatically so if I create the chain uh I will not go through the steps for creating a chain this is uh how Lang chain can be used for creating chains it is a very

user friendly uh process you just need to provide the steps one after another using this here so you can see that if I ask the same question the previous time it gave us this answer which we then use to get the answer manually this time it also gives us the answer so our chain now contained two separate steps in the next step we create the whole chain with an llm agent to perform the whole process for us so it's going to go through the question it's going to create the query it's going to get the

results and it's going to also give us the results in a userfriendly manner because if I get this result just eight it is not nice to print it like this in our uh chat bot but instead I want a userfriendly response that I can actually show uh to my user so again we are going to create uh the chain we start by creating an answer prompt we provide the structure that we want uh the agent to use we create the chain from our answer prompt the way that the query is going to be processed and

also the way that the answer is going to be processed and then we will ask a question again how many employees are there so now now it gave us this nice answer there are eight employees and I can print it in a chat bot for my user so this is the chain that we just created you can go through the chain you can modify the variables one thing that I mentioned in the presentation is in case we use an agent that agent is also able to fix itself if necessary so up to this point we

were able to get the results from the database and print uh the answer in a nice format but actually if we want to implement an agent on top of this chain we can wrap the whole process again we can uh go through create a SQL agent we can use an agent type so it's going to use openai llm we can create an agent and then we can start asking a question so now this time our agent has access to a large language model to the database and it is also going to fix itself in case

something goes wrong so if I ask this question you can see that the agent is now going through all the necessary steps to query our database and to give us the final add output so the input is the users's question and the final output is the country whose customers uh spent is the most is USA so this is the agent that I'm going to use for our chatbot so beside this agent Lang chain also gave us more tools to add on top of it I'm not going to use those tools this is just another example

describe uh the playlist track table again these are not the questions that you can ask from aaq pipeline so pay attention list the total uh sales per country which countries customers spend the most this is another question that you can ask from IRA pipeline because rag is just going to search for the semantic relationship between the vectors uh created from this question with the vectors on our Vector database but now our agent is now is able to use a query to actually go through all the necessary steps that uh are needed to give us the

answer for this specific question so that was a step three we just queried and ask questions from our SQL database the database that was created from a SQL data itself so this database was created from shinuk SQL database and we were able to ask questions from it so that was a step three and now let's go to step four we also had a SQL database that was created from CSV files and Excel files so if you remember we had diabetes CSV files and we had the cancer CSV file and we created a SQL database from

both CSV files so I said these two are going to be the tables in our SQL database in a step four I want to show you how to create the SQL database but with another data set so we see the process that are needed uh for making that happen to create that database I will just load the Titanic data so we see uh what we have we have the column names and these are the top three rows to create a SQL database from the Titanic data set I just again go through SQL database from Lang

and Community I also load create engine from SQL Alchemy I create a path that I want uh to create that database inside it so I will just name it test SQL database in data folder so as soon as I run this cell I expect a database to be added here I create the database I created using Create engine and then I will just convert my data frame to my SQL database let's see here we go we have test SQL db. DB so I just converted my Titanic data into a SQL database and in case you

want to merge multiple tables and tabular data into one SQL database all you need to do is just to start passing those data sets into your engine and create different tables so that is how I did it in the pipeline that I executed earlier in that pipeline there were two CSP files and eventually we created two tables in our SQL data this is how I did it in the pipeline next I can start asking questions from that SQL database so the question that I'm going to execute here is Select uh anything from uh the table

with the age less than two so I just want to have the list of the infants uh in the Titanic and this is equivalent to DF dfh less than two in pandas so if I have a pandas data set a pandas data frame this is how I can achieve this result and with a SQL database this is how I can achieve this result and again I can create an agent and let that agent to interact with my database we just saw how to create an agent and we run the agent on the SQL database in

a step three this is going to be exactly the same so I will load my llm I will create an agent with the llm the database the open AI tools and develop assist tool just to show us all the details and again a question that you cannot ask a rack pipeline what is the average age of survivors so if you ask this from a rack like rack project there is no way that that pipeline is going to give you the answer for that but for this actually our agent is now able to query the database

and give us the average age of the survivors in the meantime while it is giving us the answer I want to show you the equivalent in pandas so in pandas I can filter my database with survived equal to one mean means all those who survived in Titanic I can get the age column and I can calculate the mean value of that column which is 28.4 and the result that the agent gives us here is the average age of the survivors is approximately 2841 years this is the power of using llm agents so that is why

I told you that there are much more powerful ways to interact with your databases than implementing rag on them so in case you would like to ask for instance one of the uh channel members ask me the other day if I ask for what are the list of winter clothes in my database is this pipeline able to give me the answer actually to find out the proper answer to get the proper answer for that specific question you need to implement an llm agent not a rag pipeline so this is step four we just saw how

to perform Q&A with a SQL database that was created from a CSV file now let's go to the final step which is performing rag with our SQL database so let me actually bring this question because I want you I want to show you uh how a rack pipeline would fail for answering these type of questions so the last notebook that I want to go through is going to perform Rag and have Vector database so again I load the libraries that I require for the vector database I'm using chroma DB for the llm I'm using Azure

open AI GPT 3.5 and again make sure that you have access to your environment variables I load the environment variables I create an instance for my Azure openai and an instance for my chroma DB so we just created Titanic uh small uh chroma DB and this is how we did it so the first thing is to create a vector database and name the table inside that database for instance Titanic small in this case because I just want the database table to have the same name as my data frame here then you just load the data

frame that you have in mind for instance for Simplicity I just provided you with five rows for this uh C CSV file so in the notebook I'm not going to go through these steps because I've already created the chroma database from my full Titanic but in case you just want to test you can skip the first part that I executed uh this module and create the vector database from here then you go through each row and create exactly the structure that I told you so each row is going to be treated as a separate Chunk

in my Vector database so here's the first row I have the column name the value the second column name the value Etc and this is the second row third row until the rest of the CSV file so this is one way that you can interact with your CSV and your Excel files with rack then you can also inject some metadata into your vector database for instance for the metadata um adding the name of the database itself the data set itself and also you need to add some unique IDs to the vector database so this is

how you can create uh that Vector database and you can also count the number of values in it so in case you go through this test here you would uh have uh the number here you would have five for the number of uh chunks into the database because I only loaded five rows but in case you have the database ready you can just immediately start interacting with it so the query is I need more info about this specific person and I'm going to just convert the query to vector so first let's count the number of

values in my Vector database uh since I created it from the Titanic small there are 30 rows in my data set and now I can perform the similarity search so the result of the similarity search is this dictionary right here it is not user friendly again to pass this dictionary to your chatbot and to show it to your user but instead you can pass this result along with the system role along with the user's question so this is exactly as I showed you here I'm now going to pass the question the system role which is

this one along with the retrieve content to my large language model perfect now I have my response I can just print the response based on the search results Owen Harris uh is a male who was on board uh the Titanic Etc he did not survive and he also the chat is also G able to give you some more information about that person and I also uh printed the data frame for you here so you can verify the answer that we just received uh from the rag project but let's change the question right now and let's

ask what is the average age of survivors so if you check here the chatbot needs to filter this column and keeps only those with the value equal to one and then it needs to look at this column and calculates the average of that column so if I convert this question uh and get the embeddings and then per per form the similarity search on the vector database and pass the results to my GPT model and if I print the results based on the search result we can see that the age of one of the passengers in

the data set is 39 years old however this information does not necessarily represent so if I continue reading the average age of survivors that that is when rag falls short and now I just hope you have a good understanding of why I don't recommend you to implement rag with your databases and I strongly recommend you to leverage llm agents and to query your databases properly it is just way more powerful than Rex so technically with an llm agent you uh it means that you have hired an expert to interact with your database so this was

the last step now I want to show you how I created the project and let's run the chatbot and see uh some of the questions that we just saw in the notebook again together so to create the project again I have a config folder with the configs and uh I just provided all the directories that my chatbot is going to look at the CSV XL files where I'm going to create my SQL databases uh Etc I have my llm config which is the system r that I'm going to use uh for the agent and also

the llm config uh for the system rooll that I'm going to use for the rag llm uh model I have the engine for the llm that I'm going to use which is GPT 3.5 I have the temperature which is equal to zero always use temperature equal equal to Zer when you are implementing uh a rack pipeline or a Q&A pipeline because you need precise answers and you don't want your model to be creative and uh that's it rag config I have the collection name which is Titanic small because I'm going to create the vector database

from Titanic a small and I have top k equal to one which means after I search the search result will only retrieve the most relevant piece of content from my Vector database I have the data folder which we just went through I have explore which we just went through images contain some images for the chatbot itself and all the code for the chatbot sits here so if you check the read me file here I provided you with all the necessary steps to execute the project you know how to uh prepare the SQL database from the

SQL data you know how to prepare the SQL database from CSV files uh and Excel files here it is so again the third step was missing so I just updated the read me file and you know how to prepare a vector database from your CSV and Excel files and uh by for instance right now I'm using the CSV file in for upload folder to create the vector database from Titanic a small and finally you can also upload a CSV file or an Excel file while using the chat bot so I will uh show you how

to do it while I'm using the chatbot I've also provided you with the list of the data sets databases Etc and the key Frameworks that I'm using here so you have access to all the steps that you need to go through for making the chatbot functional the first two steps that you need to take is to execute these two modules this module is going to go through uh this class prepare SQL from tabular data and this module is going to execute this class prepare Vector database from tabular data and also I showed you how to

create a SQL database from our SQL file so you need to execute that in the terminal so you need to have two databases here this test one we just created it uh with the notebook and you need to have this chroma here so three databases for the chatbot to be able to interact with them next you have access to app.py which is the chatbot user interface itself again it is identical to Rack GPT uh I'm using these three functions to connect the chatbot uh to the back end in respond I'm just pointing to the right

direction in my backend based on the user's question and also in case the user uploads a file I'm executing this function so I'm not going to go through the details of the back end because technically whatever I have here is the things that I just went through in the explore notebooks so now let's execute our chatbot to execute the chatbot just simply run python Source uh app.py the chatbot is running I can bring the chat part here so this is the chat user interface uh again you can type here you can submit your text you

can upload a file which we will see in a moment and you can change the chatbot functionality so in case you want to chat just keep it on chat in case you want to upload a file switch it to process file and remember to switch it back to chat afterwards then you have the chat type so you are able to chat with four different databases the SQL database that was created from a SQL data you can interact with a SQL database that was created from CSV and Excel files that you pre-processed in advance you can

interact with a SQL database that is going to be created as soon as you upload a CSV and Excel file and you are able to rag with CSV and Excel files converted into a vector database so there are three Q&A and one rag feature I just put the rag feature there so you can test and evaluate the performance but definitely if you are uh planning to interact with your databases you can just skip this feature and make sure that your uh Q&A pipelines and your llm agents are set up properly so let's start asking a

question also provided you with some sample questions so to ask a question from a SQL database again I can ask how many employees are there so we just got the results there are eight employees and if I go back to my code you can see the agents steps in the terminal so we can also go through the steps and see exactly what it is doing and uh just verify the process by yourself the second question let's again check the questions Leist the total set this is exactly the same question that we saw in the notebook

again the total sale amount is 523 and if you go through the terminal you can see the performance of the agent again one more thing about the agents uh just keep it in mind in case your agent requires to go through big chunks of data one of the issues that you might face is the context length limits of your large language model so I've hit the limit a few times with GPT 3.5 so I strong strongly recommend you to use a more powerful large language model in case as you can see uh the amount of

information that is going to be processed and it's going to be pass to the model sometimes gets big uh so in case you would like to just make sure that your model works fine uh make sure to switch to to a more powerful model such as gp4 and in general using gp4 would also make the performance uh smoother than 3.5 so now let's uh actually let's go through pre-processed databases and let's ask what is the average single epithelial cell size in cancer data so you remember in our SQL database that we created from CSV file

we had two different tables cancer and diabetes so let's ask this question here I switch Q&A with stored CSV xlsx SQL database the average single epithelial cell size is 2.37 so in in explore I also have explored databases you can verify the answers by checking uh these notebooks so if you check cancer notebook you see that I calculated the average using uh pandas query it is 3. 2.36 and the result is 2.37 so it just rounded up uh the second decimal here so that was one of the questions now let's ask another question and this

time from diabetes so what is the average age for those who were diagnosed with diabet so again same database the average age for those who were diagnosed was 37.7 so let's go through that database so I have a notebook here again you can verify the questions here this is exactly the process that happened behind that query so our agent was able to understand that the outcome should be equal to one get the result of this filter then filter based on the column name age and finally get the average value so as you can see there

are three different steps needed to get the proper answer and the answer is 37 06 and the answer that the chatbot gave us was 37.0 7 again it rounded up the second uh decimal here so we just interacted with the SQL database that was created from CSV files Excel files Etc now let's ask a question from the vector database that we created from Titanic so if I go with rag and if I go into sample questions so since that Vector database was created from the same data set I will ask this question what is the

average age of surv survivors you would see that actually it won't be able to give us the answer based on the search result it seems that the average age of survivors is not provided of course because in rag it retrieved one chunk which was belong to this person and that chunk for sure was retrieved somehow randomly because the semantic relationship between our query and the information within our Vector database uh just was not clear so that is 39 years old but it doesn't mean that the average age is 39 years old on the other side

I can start asking questions about a specific person so since I know this person is already there I can say give me more info about this person so now this time the system is able to retrieve the right chunk because the semantic relationship is going to find out that this person uh is within my data set here we go uh this person is a passenger in Titanic and here is the detailed information about that person the class level the gender age Etc so we just saw the limitations of rag with Vector databases that were created

from tabular data SQL databases Etc in the final step I want to upload the same file Titanic data and ask the same question that rag couldn't uh give us the answer for so this is the data set and if I upload Titan CSV it gave me an error because I didn't change the app functionality again change the app functionality before doing it okay uploaded files already please ask your question so what it did was it just automatically created a SQL database from that CSV file and now if I switch back to chat and if I

go into Q&A with uploaded SQL database and if I ask the same question what is the average age of survivors the average age of survivors is approximately 2841 and let's verify the answer so I go to explore Titanic I come down the average age of survivors again it requires three steps uh to get to the answer is 28.4 so that was the correct answer let's ask another question so in sample questions so for those who survived in Titanic were the majority men or women so this is the question that I want to ask based on

the data there were 233 female survivors and 109 male survivors so the majority were women all right so let's again verify the answer this is the answer so it needed to First filter based on the survival equal to one get the six equal to for instance female or male and it's going to result in a true or false throughout those uh survived passengers and then for instance in case of using pandas it needed to calculate the values so the True Values are 233 which are female and the false values which are men are 109 so

again the answer was correct so we just went through all different functionalities of the chatbot there are a few notes that I would like to go through before wrapping up the video which are very important one very important keynote is that when you are querying SQL databases using llm agents make sure not to use right Privileges and limit the scope when you are allowing the users to interact with the chatbot because just like the user can ask for the number of for instance the survivors or the average age of the survivors from a data set

a SQL database the user can also ask to remove a database or to modify the information within that database and the agent is actually able to generate that query and it's going to execute that query so in case you don't consider proper security measures your database is going to be jeopardized and the users can easily uh manipulate the data set so that was it for the keynote that I wanted to bring out again we just designed this chatbot together we went through all different steps of preparing the vector database and SQL database and we started

to interact uh with different type of data sets uh using our Vector database and SQL database so I hope you understand that how powerful an llm agent can be versus a rag project so again in case you are looking for proper answers and in case you would like to uh get accurate results from your databases use agents so thank you very much for watching the video and I hope to see you in the next one

Related Videos

3:17:00

Learning Javascript from zero

AsyncStack

18 views

23:41

Jr Devs - "I Can't Code Anymore"

ThePrimeTime

497,328 views

1:36:39

Chat with Multiple/Large SQL and Vector Da...

Farzad Roozitalab (AI RoundTable)

28,639 views

1:23:34

Chat and RAG with Tabular Databases Using ...

Farzad Roozitalab (AI RoundTable)

29,136 views

30:03

End To End Text To SQL LLM App Along With ...

Krish Naik

91,813 views

1:11:47

Vector Search RAG Tutorial – Combine Your ...

freeCodeCamp.org

252,238 views

57:10

29. AWS X DEVOPS Project #1.1 | Connect EC...

monkweb

63 views

20:22

LangGraph - SQL Agent - Let an LLM interac...

Coding Crash Courses

10,935 views

3:31:24

Deep Dive into LLMs like ChatGPT

Andrej Karpathy

1,215,021 views

16:16

Use AI to chat with a SQL Database 2.0 - p...

The Code Wolf

17,369 views

26:52

Andrew Ng Explores The Rise Of AI Agents A...

Snowflake Inc.

610,437 views

22:53

Use AI to chat with a relational SQL datab...

The Code Wolf

34,309 views

33:08

How to Start Coding | Programming for Begi...

Intellipaat

9,839,619 views

28:56

Chat with ALL Your Databases Using AskYour...

Farzad Roozitalab (AI RoundTable)

6,850 views

53:50

Langchain vs Llama-Index - The Best RAG fr...

Farzad Roozitalab (AI RoundTable)

15,925 views

35:07

LLMs for Advanced Question-Answering over ...

LlamaIndex

52,612 views

31:50

Building Effective Agents with LangGraph

LangChain

45,981 views

18:44

Turn ANY Website into LLM Knowledge in SEC...

Cole Medin

250,701 views

6:56

Microsoft Reports Quantum Computing Breakt...

Sabine Hossenfelder

656,092 views

12:44

LangChain Explained in 13 Minutes | QuickS...

Rabbitmetrics

881,055 views