How to Build an LLM from Scratch | An Overview


Shaw Talebi


Video Transcript:

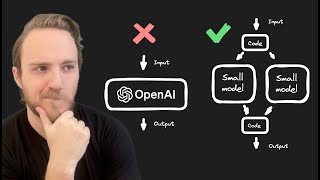

Hey everyone, I'm Shaw, and this is the sixth video in the larger series on how to use large language models in practice. In this video, I'm going to review key aspects and considerations for building a large language model from scratch.

If you Googled this topic even just one year ago, you'd probably have seen something very different from what we see today. Building large language models was a very esoteric and specialized activity reserved mainly for cutting-edge AI research. But today, if you Google "how to build an LLM from scratch" or "should I build a large language model", you'll see a much different story. With all the excitement surrounding large language models post-ChatGPT, we now have an environment where a lot of businesses, enterprises, and other organizations have an interest in building these models. Perhaps one of the most notable examples comes from Bloomberg in BloombergGPT, which is a large language model that was specifically built to handle tasks in the space of finance.

However, the way I see it, building a large language model from scratch is often not necessary. For the vast majority of LLM use cases, using something like prompt engineering or fine-tuning an existing model is going to be much better suited than building a large language model from scratch. With that being said, it is valuable to better understand what it takes to build one of these models from scratch and when it might make sense to do it.

Before diving into the technical aspects of building a large language model, let's do some back-of-the-napkin math to get a sense of the financial costs that we're talking about here. Taking as a baseline Llama 2, the relatively recent large language model put out by Meta, these were the computational costs associated with the 7 billion parameter and 70 billion parameter versions of the model. So you can see for Llama 2 7B, it took about 180,000 GPU hours to train that model, while for 70B, a model 10 times as large, it required 10 times as much compute, so this required 1.7 million GPU hours.

So if we just do what physicists love to do, we can just take orders of magnitude, and based on the Llama 2 numbers we'll say a 10 billion parameter model takes on the order of 100,000 GPU hours to train, while a 100 billion parameter model takes about a million GPU hours to train.

So how can we translate this into a dollar amount? Here we have two options. Option one is we can rent the GPUs and compute that we need to train our model via any of the big cloud providers out there. An Nvidia A100, which is what was used to train Llama 2, is going to be on the order of $1 to $2 per GPU per hour. So just doing some simple multiplication here, that means the 10 billion parameter model is going to be on the order of $150,000 just to train, and the 100 billion parameter model will be on the order of $1.5 million to train.

Alternatively, instead of renting the compute, you can always buy the hardware. In that case, we just have to take into consideration the price of these GPUs. So let's say an A100 is about $10,000 and you want to form a GPU cluster of about 1,000 GPUs. The hardware costs alone are going to be on the order of $10 million. But that's not the only cost. When you're running a cluster like this for weeks, it consumes a tremendous amount of energy, so you also have to take into account the energy cost. Let's say training a 100 billion parameter model consumes about 1,000 megawatt-hours of energy, and let's just say the price of energy is about $100 per megawatt-hour. Then that means the marginal cost of training a 100 billion parameter model is going to be on the order of $100,000.

Okay, so now that you've realized you probably won't be training a large language model anytime soon (or maybe you are, I don't know), let's dive into the technical aspects of building one of these models. I'm going to break the process down into four steps: one is data curation, two is the model architecture, three is training the model at scale, and four is evaluating the model.
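As a rough sketch of the back-of-the-napkin cost math from the start of the video, the arithmetic can be written out in a few lines of Python. The function and variable names are purely illustrative, and every number is one of the order-of-magnitude assumptions stated in the transcript (GPU hours, $1 to $2 per GPU hour, a $10,000 A100, 1,000 MWh at $100 per MWh), not a measured cost.

```python
def rental_cost(gpu_hours, usd_per_gpu_hour):
    """Cost of renting compute: GPU hours times the hourly price per GPU."""
    return gpu_hours * usd_per_gpu_hour


# Order-of-magnitude GPU hours assumed in the transcript.
GPU_HOURS = {"10B parameters": 100_000, "100B parameters": 1_000_000}

# Assumed rental price range for an A100, in USD per GPU per hour.
PRICE_LOW, PRICE_HIGH = 1.0, 2.0

for model, hours in GPU_HOURS.items():
    low = rental_cost(hours, PRICE_LOW)
    high = rental_cost(hours, PRICE_HIGH)
    midpoint = (low + high) / 2
    print(f"{model}: ${low:,.0f} to ${high:,.0f} (midpoint ${midpoint:,.0f})")

# Option two: buy the hardware, then pay for energy on each training run.
hardware_cost = 1_000 * 10_000   # 1,000 GPUs at an assumed $10,000 each
energy_cost = 1_000 * 100        # 1,000 MWh at an assumed $100 per MWh
print(f"Hardware: ${hardware_cost:,}  Energy per run: ${energy_cost:,}")
```

The midpoint of the rental range is where the $150,000 and $1.5 million figures come from.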
So, starting with data curation, I would assert that this is the most important and perhaps most time-consuming part of the process. This comes from the basic principle of machine learning: garbage in, garbage out. Put another way, the quality of your model is driven by the quality of your data, so it's super important that you get the training data right, especially if you're going to be investing millions of dollars in this model.

But this presents a problem: large language models require large training datasets. Just to get a sense of this, GPT-3 was trained on half a trillion tokens, Llama 2 was trained on two trillion tokens, and the more recent Falcon 180B was trained on 3.5 trillion tokens. If you're not familiar with tokens, you can check out the previous video in the series, where I talk more about what tokens are and why they're important. But here we can say that as far as training data goes, we're talking about a trillion words of text, or in other words about a million novels or a billion news articles. So we're talking about a tremendous amount of data. Going through a trillion words of text and ensuring data quality is a tremendous effort and undertaking.

A natural question is, where do we even get all this text? The most common place is the internet. The internet consists of web pages, Wikipedia, forums, books, scientific articles, code bases, you name it. Post-ChatGPT, there's a lot more controversy around this and copyright laws. The risk with web scraping yourself is that you might grab data that you're not supposed to grab or don't have the rights to grab, and then using it in a model for potentially commercial use could come back and cause some trouble down the line.

Alternatively, there are many public datasets out there. One of the most popular is Common Crawl, which is a huge corpus of text from the internet, and then there are some more refined versions such as the Colossal Clean Crawled Corpus, also called C4. There's also Falcon RefinedWeb, which was used to train Falcon 180B, mentioned on the previous slide. Another popular dataset is The Pile, which tries to bring together a wide variety of diverse data sources into the training dataset, which we'll talk a bit more about in the next slide. And then we have Hugging Face, which has really emerged as a big player in the generative AI and large language model space, and which houses a ton of open-access data sources on its platform.

Another place is private data sources. A great example of this is FinPile, which was used to train BloombergGPT. The key upside of private data sources is that you own the rights to the data, and it's data that no one else has, which can give you a strategic advantage
if you're trying to build a model for some business application, or for some other application where there's some competition or an environment of other players that are also making their own large language models.

Finally, and perhaps the most interesting, is using an LLM to generate the training data. A notable example of this comes from the Alpaca model put out by researchers at Stanford. What they did was train an LLM, Alpaca, using structured text generated by GPT-3. This is my cartoon version of it: you pass the prompt "make me training data" into your large language model, and it spits out the training data for you.

Turning to the point of dataset diversity that I mentioned briefly with The Pile, one aspect of a good training dataset seems to be dataset diversity. The idea here is that a diverse dataset translates to a model that can perform well in a wide variety of tasks. Essentially, it translates into a good general-purpose model. Here I've listed out a few different models and the composition of their training datasets. You can see GPT-3 is mainly web pages but also some books. Gopher is also mainly web pages, but they've got more books, and then they also have some code in there. LLaMA is mainly web pages, but they also have books, code, and scientific articles. And then PaLM is mainly built on conversational data, but you see it's also trained on web pages, books, and code.

How you curate your training dataset is going to drive the types of tasks the large language model will be good at. We're far away from an exact science or theory of how a particular dataset composition translates to a particular type of model, or how adding an additional 3% code to your training dataset will have some quantifiable outcome in the downstream model. But while we're far away from that, diversity does seem to be an important consideration when making your training datasets.

Another thing that's important to ask ourselves is, how do we prepare the data? Again, the quality of our
model is driven by the quality of our data, so one needs to be thoughtful about the text used to train a large language model. Here I'm going to talk about four key data preparation steps.

The first is quality filtering. This is removing text which is not helpful to the large language model. This could be just a bunch of random gibberish from some corner of the internet. This could be toxic language or hate speech found on some forum. This could be things that are objectively false, like 2 + 2 = 5, which you'll see in the book 1984. While that text exists out there, it is not a true statement.

There's a really nice paper, it's called A Survey of Large Language Models, I think, and in that paper they distinguish two types of quality filtering. The first is classifier-based. This is where you take a small, high-quality dataset and use it to train a text classification model that allows you to automatically score text as either good or bad, low quality or high quality. That removes the need for a human to read a trillion words of text to assess its quality; it can be offloaded to this classifier. The other type of approach they define is heuristic-based. This is using various rules of thumb to filter the text. This could be removing specific words, like explicit text. This could be removing a sentence if a word repeats more than two times in it. Or it could be using various statistical properties of the text to do the filtering.

And of course, you can do a combination of the two. You can use the classifier-based method to distill down your dataset and then do some heuristics on top of that, or vice versa: you can use heuristics to distill down the dataset and then apply your classifier. There's no one-size-fits-all recipe for doing quality filtering. Rather, there's a menu of many different options and approaches that one can take.

Next is deduplication. This is removing several instances of the same or very similar text, and the reason this is important is that
duplicate texts can bias the model and disrupt training. Namely, if you have some web page that exists on two different domains, and one copy ends up in the training dataset while the other ends up in the testing dataset, this causes some trouble when trying to get a fair assessment of model performance during training.

Another key step is privacy redaction. Especially for text grabbed from the internet, it might include sensitive or confidential information. It's important to remove this text, because if sensitive information makes its way into the training dataset, it could be inadvertently learned by the language model and be exposed in unexpected ways.

Finally, we have the tokenization step, which is essentially translating text into numbers. The reason this is important is that neural networks do not understand text directly; they understand numbers. So anytime you feed something into a neural network, it needs to come in numerical form. While there are many ways to do this mapping, one of the most popular is the byte pair encoding algorithm, which essentially takes a corpus of text and derives from it an efficient subword vocabulary. It figures out the best choice of subwords or character sequences to define a vocabulary from which the entire corpus can be represented.

For example, maybe the word "efficient" gets mapped to an integer and exists in the vocabulary, maybe "sub" with a dash gets mapped to its own integer, "word" gets mapped to its own integer, "vocab" gets mapped to its own integer, and "ulary" gets mapped to its own integer. So this string of text here, "efficient subword vocabulary", might be translated into five tokens, each with its own numerical representation: one, two, three, four, five.

There are Python libraries out there that implement this algorithm, so you don't have to do it from scratch. Namely, there's the SentencePiece Python library, and there's also the Tokenizers library coming from Hugging Face. Here are the citation numbers, and I provide the links in the description and comment section below.

Moving
on to step two: model architecture. In this step, we need to define the architecture of the language model. As far as large language models go, Transformers have emerged as the state-of-the-art architecture. A Transformer is a neural network architecture that strictly uses attention mechanisms to map inputs to outputs.

So you might ask, what is an attention mechanism? Here I define it as something that learns dependencies between different elements of a sequence based on position and content. This is based on the intuition that when you're talking about language, the context matters.

Let's look at a couple of examples. If we see the sentence "I hit the baseball with a bat", the appearance of "baseball" implies that "bat" is probably a baseball bat and not a nocturnal mammal. This is the picture that we have in our minds. This is an example of the content of the context of the word "bat". So "bat" exists in the larger context of this sentence, and the content is the words making up this context. The content of the context drives what word is going to come next and the meaning of this word here.

But content isn't enough. The positioning of these words is also important. To see that, consider another example: "I hit the bat with a baseball". Now there's a bit more ambiguity about what "bat" means. It could still mean a baseball bat, but people don't really hit baseball bats with baseballs; they hit baseballs with baseball bats. One might reasonably think "bat" here means the nocturnal mammal. An attention mechanism captures both of these aspects of language. More specifically, it will use both the content of the sequence and the positions of each element in the sequence to help infer what the next word should be.

While at first it might seem that Transformers are a constrained and particular architecture, we actually have an incredible amount of freedom in the choices we can make as developers building a Transformer model. So at a high level, there are actually three types of
Transformers, which follow from the two modules that exist in the Transformer architecture, namely the encoder and the decoder. We can have an encoder by itself, and that can be the architecture. We can have a decoder by itself, and that's another architecture. And then we can have the encoder and decoder working together, and that's the third type of Transformer. So let's take a look at these one by one.

The encoder-only Transformer translates tokens into a semantically meaningful representation. These are typically good for text classification tasks, or if you're just trying to generate an embedding for some text.

Next, we have the decoder-only Transformer, which is similar to an encoder because it translates text into a semantically meaningful internal representation. But decoders are trying to predict the next word; they're trying to predict future tokens. For this, decoders do not allow self-attention with future elements, which makes them great for text generation tasks.

Just to get a bit more intuition for the difference between the encoder self-attention mechanism and the decoder self-attention mechanism: in the encoder, any part of the sequence can interact with any other part of the sequence. If we were to zoom in on the weight matrices that are generating these internal representations in the encoder, you'd see that none of the weights are zero. On the other hand, a decoder uses so-called masked self-attention, so any weights that would connect a token to a token in the future are going to be set to zero. It doesn't make sense for the decoder to see into the future if it's trying to predict the future. That would kind of be like cheating.

And then finally, we can combine the encoder and decoder together to create another choice of model architecture. This was actually the original design of the Transformer model, which is what's depicted here. What you can do with the encoder-decoder model that you can't do with the others is so-called cross-attention. So,
instead of just being restricted to self-attention with the encoder or masked self-attention with the decoder, the encoder-decoder model allows for cross-attention. The embeddings from the encoder, which form one sequence, and the internal embeddings of the decoder, which form another sequence, are connected by an attention weight matrix, so that the encoder's representations can communicate with the decoder's representations. This tends to be good for tasks such as translation, which was the original application of the Transformer model.

While we do have three options to choose from when it comes to making a Transformer, the most popular by far is the decoder-only architecture, where you're only using this part of the Transformer to do the language modeling. This is also called causal language modeling, which basically means that given a sequence of text, you want to predict future text.

Beyond just this high-level choice of model architecture, there are actually a lot of other design choices and details that one needs to take into consideration. First is the use of residual connections, which are just connections in your model architecture that allow intermediate training values to bypass various hidden layers. To make this more concrete, this is from reference number 18, linked in the description and comment section below. What this looks like is you have some input, and instead of strictly feeding the input into your hidden layer, which is this stack of things here, you allow it to go both into the hidden layer and to bypass the hidden layer. Then you can aggregate the original input and the output of the hidden layer in some way to generate the input for the next layer.

Of course, there are many different ways one can do this, given all the different details that can go into a hidden layer. You can have the input and the output of the hidden layer be added together and then have an activation applied to the sum; you can have the input and the output of the hidden
layer be added, and then you can do some kind of normalization, and then you can add the activation; or you can have the original input and the output of the hidden layer just be added together. You really have a tremendous amount of flexibility and design choice when it comes to these residual connections. In the original Transformer architecture, the way they did it was something similar to this, where the input bypasses this multi-head attention layer and is added and normalized with the output of this multi-head attention layer. And then the same thing happens for this layer, this layer, this layer, and this layer.

Next is layer normalization, which is rescaling values between layers based on their mean and standard deviation. When it comes to layer normalization, there are two considerations that we can make. One is where you normalize. There are generally two options here: you can normalize before the layer, also called pre-layer normalization, or you can normalize after the layer, also called post-layer normalization.

Another consideration is how you normalize. One of the most common ways is via layer norm, and this is the equation here. This is your input x. You subtract the mean of the input, and then you divide by the square root of the variance plus some small noise term. Then you multiply by some gain factor, and you can have some bias term as well. An alternative to this is the root mean square norm, or RMS norm, which is very similar. It just doesn't have the mean term in the numerator, and it replaces this denominator with just the RMS.

While you have a few different options for how you do layer normalization, based on that survey of large language models I mentioned earlier, reference number eight, pre-layer normalization seems to be the most common, combined with this vanilla layer norm approach.

Next, we have activation functions. These are nonlinear functions that we can include in the model which, in
principle, allow it to capture complex mappings between inputs and outputs. Here there are several common choices for large language models, namely GeLU, ReLU, Swish, SwiGLU, and GeGLU, and I'm sure there are more, but GeLUs seem to be the most common for large language models.

Another design choice is how we do position embeddings. Position embeddings capture information about token positions. The way this was done in the original Transformer paper was using sine and cosine basis functions, which added a unique value to each token position to represent its position. You can see in the original Transformer architecture that you had your tokenized input, and the positional encodings were just added to the tokenized input for both the encoder input and the decoder input.

More recently, there's this idea of relative positional encodings. Instead of just adding some fixed positional encoding before the input is passed into the model, the idea with relative positional encodings is to bake positional encodings into the attention mechanism. I won't dive into the details of that here, but I will provide this reference, Self-Attention with Relative Position Representations, also citation number 20.

The last consideration that I'll talk about when it comes to model architecture is, how big do I make it? The reason this is important is that if a model is too big or trained too long, it can overfit. On the other hand, if a model is too small or not trained long enough, it can underperform. These are both in the context of the training data, so there's this relationship between the number of parameters, the number of computations or training time, and the size of the training dataset.

There's a nice paper by Hoffmann et al. where they do an analysis of compute-optimal considerations when it comes to large language models. I've just grabbed a table from that paper that summarizes their key findings. What this is saying is that a 400 million parameter model should undergo on the
order of, let's say, 2 × 10^19 floating-point operations and have training data consisting of 8 billion tokens, and then a model with 1 billion parameters should have roughly 10 times as many floating-point operations and be trained on 20 billion tokens, and so on and so forth.

My summarized takeaway from this is that you should have about 20 tokens per model parameter. It's not going to be very precise, but it might be a good rule of thumb. And then for every 10x increase in model parameters, there's about a 100x increase in floating-point operations. If you're curious about this, check out the paper linked in the description below. Even if this isn't an optimal approach in all cases, it may be a good starting place and rule of thumb for training these models.

So now we come to step three, which is training these models at scale. Again, the central challenge of these large language models is their scale. When you're training on trillions of tokens and you're talking about billions, tens of billions, or hundreds of billions of parameters, there's a lot of computational cost associated with these things, and it is basically impossible to train one of these models without employing some computational tricks and techniques to speed up the training process. Here I'm going to talk about three popular training techniques.

The first is mixed precision training, which is essentially when you use both 32-bit and 16-bit floating-point numbers during model training, such that you use the 16-bit floating-point numbers whenever possible and 32-bit numbers only when you have to. There's more on mixed precision training in that survey of large language models, and there's also some nice documentation by Nvidia linked below.

Next is this approach of 3D parallelism, which is actually the combination of three different parallelization strategies, which are all listed here, and I'll just go through them one by one. First is pipeline parallelism, which is distributing the Transformer layers
across multiple GPUs. It actually does an additional optimization where it puts adjacent layers on the same GPU to reduce the amount of cross-GPU communication that has to take place. The next is model parallelism, which basically decomposes the matrix multiplications that make up the model into smaller matrix multiplies and then distributes those matrix multiplies across multiple GPUs. And then finally, there's data parallelism, which distributes training data across multiple GPUs.

But one of the challenges with parallelization is that redundancies start to emerge, because model parameters and optimizer states need to be copied across multiple GPUs. So you're having some portion of the GPU's precious memory devoted to storing information that's copied in multiple places. This is where the Zero Redundancy Optimizer, or ZeRO, is helpful. It essentially reduces data redundancy by partitioning the optimizer state, the gradients, and the parameters across GPUs.

This was just a surface-level survey of these three training techniques. These techniques and many more are implemented by the DeepSpeed Python library. And of course, DeepSpeed isn't the only library out there. There are a few other ones, such as Colossal-AI, Alpa, and some more, which I talk about in the blog associated with this video.

Another consideration when training these massive models is training stability, and it turns out there are a few things that we can do to help ensure that the training process goes smoothly. The first is checkpointing, which takes a snapshot of model artifacts so training can resume from that point. This is helpful because, let's say your training loss is going down, it's great, but then you just have this spike in loss after training for a week, and it just blows up training and you don't know what happened. Checkpointing allows you to go back to when everything was okay and debug what could have gone wrong, and maybe make some adjustments to the learning rate or other hyperparameters so that
you can try to avoid that spike in the loss function that came up later.

Another strategy is weight decay, which is essentially a regularization strategy that penalizes large parameter values. I've seen two ways of doing this: one is adding a term to the objective function, which is like regular regularization, and the other is changing the parameter update rule.

And then finally, we have gradient clipping, which rescales the gradient of the objective function if its norm exceeds a pre-specified value. This helps avoid the exploding gradient problem, which may blow up your training process.

The last thing I want to talk about when it comes to training is hyperparameters. While these aren't specific to large language models, my goal here is to just lay out some common choices when it comes to these values.

First, we have batch size, which can be either static or dynamic. If it's static, batch sizes are usually pretty big, on the order of 16 million tokens. But it can also be dynamic. For example, in GPT-3, what they did is gradually increase the batch size from 32,000 tokens to 3.2 million tokens.

Next, we have the learning rate. This can also be static or dynamic, but it seems that dynamic learning rates are much more common for these models. A common strategy seems to go as follows: you have a learning rate that increases linearly until reaching some specified maximum value, and then it reduces via a cosine decay until the learning rate is about 10% of its max value.

Next, we have the optimizer. Adam or Adam-based optimizers are most commonly used for large language models.

And then finally, we have dropout. Typical values for dropout are between 0.2 and 0.
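The dynamic learning rate strategy described above (linear warmup to a maximum, then cosine decay down to about 10% of that maximum) can be sketched in plain Python. This is a minimal illustration rather than the schedule of any particular model: the function name, the `min_ratio` parameter, and the step counts in the usage lines are all assumptions made for the example.

```python
import math


def learning_rate(step, max_lr, warmup_steps, total_steps, min_ratio=0.1):
    """Linear warmup to max_lr, then cosine decay to min_ratio * max_lr.

    `step` counts from 0. All argument names are illustrative.
    """
    if step < warmup_steps:
        # Linear warmup: reaches max_lr on the last warmup step.
        return max_lr * (step + 1) / warmup_steps

    # Fraction of the decay phase completed, clamped to [0, 1].
    decay_steps = max(1, total_steps - warmup_steps)
    progress = min((step - warmup_steps) / decay_steps, 1.0)

    # The cosine factor goes smoothly from 1 (start of decay) to 0 (end).
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))

    min_lr = max_lr * min_ratio
    return min_lr + (max_lr - min_lr) * cosine


# Hypothetical settings, chosen only to show the shape of the schedule.
for s in (0, 999, 1_000, 50_000, 100_000):
    print(s, learning_rate(s, max_lr=3e-4, warmup_steps=1_000, total_steps=100_000))
```

Steps past `total_steps` simply stay at the floor value, which is one reasonable choice among several.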

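The model-sizing rule of thumb from the Hoffmann et al. discussion earlier (about 20 tokens per parameter, and about 100x more compute for every 10x more parameters) can also be sketched as a small calculation. One thing here is an assumption not stated in the video: training compute is approximated as 6 floating-point operations per parameter per token, a commonly used estimate. The function and parameter names are illustrative.

```python
def compute_optimal_estimate(n_params, tokens_per_param=20, flops_per_param_token=6):
    """Rough training-set size and training compute for a given model size.

    tokens_per_param=20 is the rule of thumb from the transcript.
    flops_per_param_token=6 is an ASSUMED approximation (FLOPs ~ 6 * N * D),
    not something stated in the video.
    """
    n_tokens = tokens_per_param * n_params
    flops = flops_per_param_token * n_params * n_tokens
    return n_tokens, flops


for n_params in (400_000_000, 1_000_000_000, 10_000_000_000):
    n_tokens, flops = compute_optimal_estimate(n_params)
    print(f"{n_params:.1e} params -> {n_tokens:.1e} tokens, {flops:.2e} FLOPs")
```

Because the token count scales with the parameter count, compute grows with the square of the model size under these assumptions, which is where the 100x per 10x relationship comes from.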