Oracle’s Globally Distributed Database and Oracle True Cache

55 views13049 WordsCopy TextShare

New York Oracle User Group NYOUG

NYOUG Webinar on 12/17/2024, presented by Habib ur Rahman

Oracle’s Globally Distributed Database an...

Video Transcript:

yeah hi everyone thank you for joining us today we're excited to present you great topic great webinar and co-hosted by the New York Oracle users group and Oracle partner viscosity North America so feel free to submit questions in the Q&A field and let's make this uh webinar interactive uh so uh this uh webinar will be recorded and you can rewatch it uh so anytime and download slides at or.com and we have colan levita the VIP of New York Oracle users group so I will give you you can uh introduce uh New York Oracle user group

right yes thank you alen so the uh New York Oracle go to next slide please the New York Oracle users group was uh founded in uh 1984 for the exchange of ideas assistance and support among users of Oracle software products uh and you can connect with us on either LinkedIn next slide please Elina LinkedIn uh Facebook um X and of course our website n y.org and all the webinars that we've uh co-hosted with viscosity for the past I believe it's four years soon we be going on five years can be found both on viscosity website

as well as the New York Oracle User Group website ny.org category presentation webinars have any issues you can always contact us from the webbsite um I just want to turn it back to Alina who introduced viscosity and then I'll just introduce uh Habib thank you uh thank you colan so about us viscosity North America we are leading Oracle consulting firms specializing in Oracle database services like Rec Apex performance tuning and high availability uh we have uh Oracle six or orle Aces on our team and they wrote a bunch of great books and deliver uned experti

and Hands-On Solutions uh you have you can visit our events page and uh uh check out what's going on there uh we own aop.com it's a training Hub and uh uh we provide valuable workshops uh uh exclusive webinars live virtual classes uh to help dbas and developers stay ahe of the curve including on Oracle database 23 Ai and uh please follow us in social media uh yeah we are doing great content and a lot of videos and valuable stuff so and if you have any any questions uh regarding our services or training on our PB

just reach out to us yeah uh now um that's all from my side colan uh now uh you can introduce our speaker today okay thank you Elina so abib rahan a principal product manager in the Oracle database Organization for Oracle globally distributed database in true cache bringing extensive expertise and Oracle database Technologies including real application clusters Data Guard Golden Gate and performance tuning additionally he possesses deep knowledge of Oracle Engineering Systems such as Oracle exod dat exod dat cloud and customer and oci architecture and solutioning khabib has also held the position of Oracle pre-sale solution

architect leading Oracle engineering system I just want to thank B for uh presenting the webinar today and for viscosity for co-hosting these series of webinars that's been going on for at least four years now and all these um this presentation uh will be on the viscosity website as well as the NY website thank you very much and Habib floor is yours sure thank you give me a second let me share my screen I hope my screen is visible to you right we can see your screen okay thank you okay thank you uh kman for introducing

me here hi everyone my name is zman already introduced by kman I'm the principal product manager for vle database globally dist database and the true cache so in today's uh webinar we'll talk about a detail of what warle globally distributed database is and what the two cach we have so let me start this initially so if you uh see like uh when you talk about the distribute database because this is something new in fact um I mean not new in fact because this is already there in fact since lot of people using it lot of

lot of wom the thir using it but if you see the distribute database nothing but say it's like a simply database that stores the like data across the multiple physical location instead of one locations okay and each location stores a subset of the data and the physically it the data are distributed such a way it's hidden from the application no application no knows that data is getting from single point but in the reality it's being virtually distributed if you see here this is a kind of enironment here if you see here this is called application

now this application is going to get the data from any of the location now these locations could be the they are located from different maybe the data centers different places this is what we call is the database which are distributed across the different physical locations okay now if you see this Oracle database it became the distributed database in 2017 actually now if you do the Oracle I mean we started a concept call sharding in 2017 we used to call Shard actually right now this become a distributed database somewhere in 2 in fact with we up

23 I and it was uh using the same power same thing to basically we have the something called the orle native uh like uh database where the Shing was built in 2019 and then in 2023 we have built the New Concept called um distribut database so this was used for the Min purpose like if you see the reality in today's time we have the mobile messaging we have the credit card for detection with the payment processing smart power meter in fact all these are the areas where we are using this distributed database actually now let

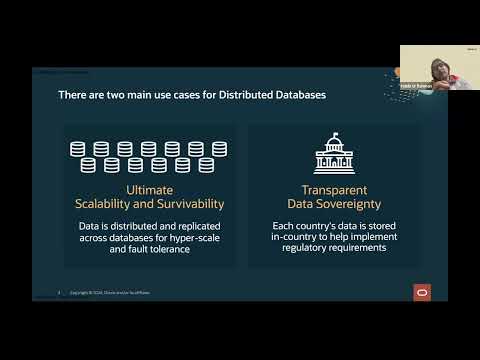

me take two uh main examp key uh use cases with this I able to explain you exactly where we use this database if you look at reality this dat distri dat base has two main purpose one is to give you the ultimate is scalability and the survivability and the second is called transparency transparent data sovereignty now that scalability talks about where the data are distributed and replicated across a multiple uh like I mean location in fact and this database gives you hyperscale and Thea tolerance if you talk about K now second one talks about about

the transparency over the data transparency data sovereignty data sovereignty become a quite common thing nowadays because if you take example like suppose you are a application owner and you want your data to be distributed in such a way because nowadays every country has their own uh regulations right which they say like the data of respective country should be there like suppose the data of us should be the US and the data of India should be India so if you have such because in India we have multiple we'll talk about the use cases there so when

we have such kind of kind of regulations where the data is cannot be relocated collocated to other country the data servant which call normally so there are the case so these two are the main cases where we can see the ultimate like all the banking sector nowadays which they use uh applications right now customer may be present in India customer may present us as well right so if you talk about any Bank any bank which bank has a branch in both countries so in that cases naturally every country like SBI in India has a rule

that data which exist in India should be in India so these two are the very important use cases which talks about here let's take detail of this thing okay so now from this diagram I'll try to explain you exactly what I mean by sh Shard see Shard if you look my picture diagram here we have three shards Shard one Shard two and Shard three red shard green Shard and the blue Shard right now these are nothing but say one physical database is divided or distributed across a multiple databases and each database we call as a

Shard now the beauty of this shart is it does not share any hardware it Shar nothing something called we call something shared nothing architecture okay and data in each shart is replicated for solvability in case if one shot goes down it should shoot two sh can serve the purpose right and this is something also called the active active architecture means at any point of times the data will not be lost the application will be able to get the data because all three data are having the subset of this thing right I told you a single

logical data data is a tip physically distributed across the multiple database which we call Shard and data in each Shard is replicated for solity and the all Shard can process application request this enable the active active architecture and data can be distributed across multiple sharts okay now data we can this data this Shard can be anywhere this Shard can be on the frame this Shard can be also on the uh let's say cloud it could be also multic clouds any could be here now the benefit want IDE here the benefit of sharding is look hypers

scale is nowadays is the main uh talk of things right like we have the like sometimes when we have the festivity season right the festivity seasons I told you every Shard has the data right suppose if if some Festival has come up right we have the option to hyperscale The Shard we can add The Shard we can remove The Shard online without any in fact without any down down times with minimal Deb distribution so this gives you a power to add remove The Shard based on your convenience okay second is called fault isolations obviously in

case you have one big database right which goes down naturally you're going to lose the data you lose your city of application so that monolithic gets broken into multiple distrib what you call the F isolation so in the case of fault isolation what we do is we create the shart and each shart has a set of data so in case if let's say example here in the picture if this Shard let's say green goes down still we have red shard blue Shard and yellow Shard they can s the purpose so this is something called f

for section and the third is called Data 7y data 7 is one of the key uh topic of things like data can be distributed across a multiple country depending upon the Shing keys right so there we have something called user defined data me can move the data you can say like the data of US citizen should be the US and Indian C should be in Indian so that kind of the faity we have so these are three major benefit which sharding gives you and if you talk about this part which we call is the distribution

of the data in application so this is reality actually if you see the distribution of data is hidden from application see when the application connects you let's application here right with the send the request here this application doesn't know from where is getting the sub purpose so from the application does this data is hidden Now application request can get the data from the single shart or the multiple shart depending upon the type of Co return here right request need not request that need to be uh like when you say when you say like the data

you want to use data from shart three you file the command this thing we have something here between I'm not sure diagram here something called the uh Shard coordinators which you call the U like uh catalog in fact so the catalog used to define that from where this particular key is lying and based on that is p the data for you so reest that need data from the multiple shot are automatically split in this split now this is the next kind of generation where the data can be achieved from the this database and also this

allows you to to place the data in the multiple geographic location in any any other country you want so this gives you the wide uh angle where the single database can be scaled can be used for survivability and this can be also each sharts are we call Independent to each others there's no dependen at all actually now let's take example here couple of example to explain exactly what it is now here the data 70 let's say take first example here now I told you this data 70 become the mandatory in the many countries like India

other countries especially for the payment database now in India The Reserve Bank of India it's it has something called the data localization regulations now the DOA data localization regulation which says that the payment data must reside in India if the both payer and the pay are the India citizen similarly applies to us as well other country as well now this is something you can say a showstopper for the Global Financial Services companies naturally every Bank face this kind of issues right when the data comes because data no doubt is the key for everyone right is

the data data is everything for us today so data has to save has to secure has to play such a way that people belong to the country they can access the data so there's one of the major area this is going to and also that store the data from a country in the single database right now the key observation here is that the data must be physically stored in India but it can be access from anywhere this is the beauty I mean it's not required the data should be data can be in India but the

application you're accessing you can access application from any of the world actually but the data from where country you want you can access from there only so application do not need not to be like I mean fine tune it's the me same thing what we do will we change the architecture of the current like layout so that data can be spit to multiple countries based on the regulations now take example here we have let's say one of the largest US Bank which we have done this rect structuring in the recent past they wanted us to

do the kind of uh I mean uh solutioning where the US data and the India data they can lie into their own areas but the application which was there that was not change that can be be EV so here in the case you see here we have the US east coast and the West CO right now here we have the replication disas recovery for call the and what we did is what we did is uh we initially I mean the US this this machine is hosted if you see previously this machine is host on EX

machine ex machine all right and the application and the database ts are replicated across the regions inside the US for de recovery now Oracle Global when when you play this concept called Oracle globally distribut database what exactly did it is it enables a bank to easily comply with the inds regulation which RBI has mandated us also the new Shard were created India to hold India data now here if you look this this diagram here this Shard was created here or created here this Shard is not going to hold any us data the India Shard is

going to hold India data and the US sh going to hold us data now this gives you new database architecture it does not it required very very minimal change application level maybe the connection Str required but there's no high major in fact change required also the high complex application TS did not need to rund depl India now in case here in case this was not their case then you would have to also apply the same concept same application IND as well but here we didn't touch much to application only minimum change we done for application

whereas we have shed the database and we have made such a way that the Indian data India sh and us us CH so that gives the compliance which respective countries bank system right now one thing is guaranteed that the regulations will be increased here also if you look the other example here I have this is something called the buai right uh buai if you take this is basically it's the leading data platform for the digital marketing campaign right the data is access in real time by The 100 of millions of consumers as they serve the

internet because this is totally say like digital platform campaign so naturally when you surf the Internet right it traffic increases so this is something called accessing the realtime data from the uh internet so naturally every time maybe hundreds or millions of connection consumer using right now blai is basically hypers skill work it is on the multi database that requires near the instant response resp time obviously in the internet when you access some data you don't need to wait for that you just click it you should get it right so when you have this kind of

things obviously you should have uh something very U agile kind of thing something quick kind of system right now if you see the figure here it process around 1 million transaction per second and it works almost 30 billions API per day a huge system we have right with the response time somewh want .6 millisecond right now if you look this number of machines if you look here blue Kai now runs on the orle globally distributed thisas it runs on around 104 uh Community server and which uh cul has something called 5,48 CPU right goes to

77.4 tab of memory and it was migrated from the combination of no SQL in fact if you talk about the buai it has multiple combination databases now it was it was combinations of the like product called Aeros spikes candra iscala it was there so we migrated from there to Oracle glob database now the Simplicity of the architecture it gives you the same Simplicity plus it inherit the entire capability of the orle SQ power as well now this is something like I mean this kind of in Innovation we have something which you don't no one has

actually like now if you the architecture deployment here look here this one look we have 1 2 3 4 5 six data center we three data center here data center one 2 three right each data center they have replica so now if you see any point of any point of time like if you see the total data value is something 2.5 kabyte and is distributed across the multiple data centers we have we have three data center out here right each of the shart in the data centers has replica in the different data centers so that

if one Center goes down goes down the application can serve the purpose from the available data centers hence enabling the BL Sky to survive an outre of entire Center if this example here in case if the data center one goes down entire thing goes down even though you have now from here the this is called red shard now the red shard avable here there the green sh blue green Shard avable here the blue in fact the green is here blue is here so data center one goes down even though in that case we have this

survivability to get the data access from the other data centers so this is something very robust very scalable solution right and along with this what I call is the one uh like I mean say a company which has the more data distribution method than any any other commodity hard any Comm computer actually right now Oracle gives you four types of data distribution now let me tell what distribution imp is see in the Oracle database when in the sharding basically when you are like do the sharding we do the architecting you need to Define that how

you're going to create The Shard on this table and then you define which kind of sharding method I mean which kind of the distribution method you going to use which you call distrib method now this gives you four options one we call is list uh value based Systems Second is system defined third is composite and the fourth one is called directory based sharting so four types of sharting we have and believe me we have many competitors like in in in this system but I think Max two or one exist with them actually right I can't

name it right now here which compe we have okay now look the first one is called the value based system sharding now the value based uh sharding distribution this distribute the data by the value example for example a country code or the product ID or you can distribute the data by range of values something called say range of phone numbers right so first one is called the based uh value based data distribution the second method we call is the system based sharding now system based sharding s distribution big distribution is basically it uses the consistent

hash algorithms right to evenly distribute the data across the multiple Shard for the scality purpose and for the pism purpose for example we want to distribute the data by customer ID by device ID by item ID right we can do this way and the Third Way is something called consistent hash now beauty of this is the consistent hash I mean it it also allows to enable the additions of Shard online without any kind of downtime with minimal data movement now third type of sharting we have in Oracle is called type of distribution we have called

composite data distribution now composite data distribution it uses two level of sharding with two different sharding keys right and with two different sharding methods normally if you see it's the combinations of the user defined and the system defined Shing now data is first distributed by the value for example the country code of the range or phone number and then the distributed by Across the data center and say for example the custom ID and second sharding key is using the con hash hashing so these two are these two four types of sh distribution method we have

now this is user defined the user defined sharting uh distribution has its own speciality like suppose uh there are sometimes required like uh you have something called the skew data right skew data we have right and you want to uh do the manual intervention you want to allocate the you want to distribute the data based on your requirement suppose you want to say you want to move some set of data in us some set of data in UK some set of data in India so if you have such kind of system where you want to

define the sharding based on your requirement that's what call user defined Shing in user defined sharing you have a complete control on this like how do you want to place the data right this is basically if you take example from here we have let's say Taylor Swift Shard be sh and SH now here if you take example from here now we can store the Tailor Swift and the detal data in the own shart and we can combine the smallest artist data together in the separate sh so this option we also have it like suppose we

want to store in separate like based on requir actually right now in there's something called duplicated distribution I'll tell you exactly what it is like when you create um when you identify the number of tables you want to do sharing right let's say you have example you have customer data you have say like item data and say you have many dat something right now these three data these three tables you say you are going to sh them but you have let's say one uh table called product product item table now product item table is very

small table right so in case you want any table which is very small in the size you don't want to Shard them so you can duplicate them and you can duplicate them and they they will be played all the all the shards right generally when you do duplicate uh distribution that means you are not sharting so in case you want to store them you want to keep them you don't want to because the purpose of this one is to um avoid the uh any kind of cross Shard queries or any kind of cross Shard referential

interior checks in case you don't want to use them you want this very small size so you can put this particular table say product table or any in table in all three shots so there what we call the duplicate tables duplicates dat distribution okay now we also have the beauty in this case Oracle support the partition data distribution within the each chart now for example the data in any sh can be further be partitioned by the data value such as the data range then further we can Partition by let's say data value or the hash

now this enable the fastest query and join within The Shard Shard can be also added without any incurring any down times let's say told you if you have season you have some festive season right now and you think the uh data workload is going to increase on my system and maybe during let's say Christmas time or maybe the Thanksgiving times maybe I can get I can get unexpected kind of traffic right so in those times you can add the number of shot maybe depending on requirement and when the season goes off right of the Season

you can remove the so adding and removing the sharts is totally automatic it does not Inc down times and also it gets automatically get distributed across the shards you don't need to distribute it get distributed and third is this enables you something called scale out or scale in for C told you right data across the sharts is automatically rebalanced with minimum data Ms and this is something online operation does not require kind in kind of the downtime so this uh feature we also have in the case of like um sharding now let's understand the application

methods see what IAL no doubt has more replication than anybody else you know two common replication maybe is uh in the group maybe most of you also gone through this DBA kind of things in Oracle is we have two major kind of location one is called Data Guard adg and the second is what you call in gold langate right now we have third one is called raft based replication this is basically the features of 23i it is not a feature of 9C it's a feature of 23i now the main purpose of the Bas uh replication

is basically it works on something called The Raft quum based replication methods protocols now this provides you the automatic failure to a replica under any uh under three subse now if you see from this diagram maybe next time I can explain very nicely now the purpose behind this is to implement an active active symmetric configuration and each charts which accepts rights and the reads for the subset of data it also delivers the zero data zero data loss using the high performance signus replication across charts now this is similar to Cassandra you know I think you

like Cassandra right Cassandra something called open source that does not use a raft but it has a notion of something called replication factors then we have something called Data stack data Stacks do not use raft based replication it use something called P sauce because they are trying to move to Raft then we have Kos DV it use raft yoga use raft Google span use back sauce and the to be used R okay now the purpose thing up here is everybody use it right but orle does something different way it use a different way now the

capability the features the functionality which you that other do not have it I can prove that as now here if you look this diagram here I think most of you know right what exactly the meaning of the raft right because ra is something new not new something old concept now customer can Define the application factors say example from here uh we have one two three Shard right we have three shards right now each shards if you look here the asri this asri is called leader and these two are the followers now if you see the

if you see first first uh diagram first uh Shard the green is a leader red and blue are the followers in the second Shard green is a followers red is a leader and this is a followers so every asri is a leader is two the followers now here we are going to have three copy of data three copies of the data so what do is customer has the option to define the replication factors that is equal to copy of data to stored in the distributed database now this is similar to cassendra okay now if you

see picture here I told you we have three Shard right three application factors which implies three copy of data across a multiple three Shard now asri indicate the leadership of the subset of data there is something green blue and the green the blue and the rest two are are follower now ler has a power what power it has it all the reads and the rights by default are routed by the leader not the follower upon writing the leader the replication replicate the data to the followers upon establishing Quorum now it is quorum quorum is basically

established when you want to commit the data right so Corum establish in fact Oracle has multiple types of like I mean option we have it here but let me give you this a standard one two of three five of seven sorry three of five four of seven these are different typ of Quorum like if majority of the followers are ready Are acknowledge these things the data to leader they get committed this is how it happen actually so I think most of you must be aing this how the qu I'm get how this works right okay

now the case leader get failed due to some reason let's say leader fail right I mean it's du reason now all the followers within that leader they want to compete to become a new leader now if the moment the new data get established from the follower what happened is this has happened because of the protocol after that all the reads and the rights are routed to the new leader and applications being sent the ask to Route the request to new leader not the old one okay so n2n applications fail is less than three subse and

also the when you define this when you when you go for the raft based application you need to very sure that what latency between the DC and Dr that should the important things okay now along with this Oracle also has uh two more types of replication I think most of you know right this one the first one which you call is a uh Rog right the read Lo so orle also support redo logs and the uh Golden Gate so in the redo logs it support the rlock using this is collective data C that provide the

faster uh the performance and most comprehensive SQL functionality right this called readable replica provide the simple operations it also supports the uh SQL replication that is what we call the uh Golden Gate right that provide the faster failers fully writable replica including the conflict avoidance and resolutions kind of options okay so these three types of replication we have orle so I think last two redo levels and the SQL levels I think most of you know right because this a old thing now this raft based replication is something new which we have introduced in orle 23i

uh one more thing before I move further uh if you have any questions please put in this uh uh chat I will give you in fact 10 minutes time then we can discuss the any kind of thing we have okay okay now let's see the deployment options U if you look the uh options where you can deploy the shards sole supports in fact every platform you on P Solutions you want to deploy on the community Hardwares ex machine machine we can do in fact you want to deplo O Cloud you can do this thing you

want to apply in the multi Cloud something called Azor AWS anywhere so orle gives you the variety options there could be possibility maybe when you start working with this Shing this short concept you might come to know there will be customer who wants one particular shard in on Prim second Shard maybe on the m Cloud third Shard maybe oci so even all that kind of option we have actually so we do not I think you talk about the any competitive like vendor which provide the multi which provide the distri database do not have this this

option actually right now let's take an example here this is basically feature of Oracle 23i now Oracle 23 actually I mean this is not topic of TR actually the topic of today is different dist database and the true cache but yes we had the options where Oracle natural language can be used so let's go back to a look at these things now orle 23i bring something called natural language query to globally distri database using the autonomous database select there something called select a right if you can Google to select in somewhere you can get this

blog out there now it can translate the natural language into natural language question into SQL like you see my screen here right they're using something called AI large uh learning model the SQL query is automatically routed to the appropriate country or the shart by the global distributed RS for example if you want to ask a question say how many total streams for each ter Cruise movie we viewed in India this month now in this case the large learning model generated a SQL which already has a country code AS India and hence the Oracle globally distributor

base route this query to The Shard in India so see exactly here you can get the output here now this gives you a kind of flexibility in fact where you can route a query basically query routing done automatically by the query which we have in the sh but yes I mean this option we have and the second feature I want talk here is called AI vector and the actually I think this is not topic of but yes e Vector search and the ra what you call theet retriable augmented generation is also available with Oracle globally

distributed base and Oracle distributed base will add the hyers scale and the data 70 tole database 23i Vector search the customer will be able to compile combine the similarity search using a AI Vector each search the business data can be customer and the product is single distri now here we also have the same feature like the Oracle glob database is also available on the database right it's available actually now here we can basically we can like add the autonomous management to basically the purpose of the autonomous uh concept you see maybe you know like ADB

right autom database dedicator and the shared one right you see ADB basically ADB has the feature which eliminate the operation complexity right of the distri database and it reduce the cost right it's a combination of glob distrib database with Oracle atom database right which enables you the Oracle globally database to be on online I mean to be uh access from the cloud as well and this is basically hosted oracles Cloud native distributed dat services so we can also access in case you want to access the orle glob distrib database you can access even the Oracle

like Services OC Services as well so OCA Services you have in fact either you can access you can install it and your let's say a machine you can access it or you can access through the Oracle oci also we have something orle Live Lab we'll talk about later on this thing so we have multiple ways to access this things now one very important thing from here to ke is what exactly the key here is the key purpose of orle globally distributed base is mostly fully feature distributed database it provides a more data distribution than distribution

method than in else it has a lot of replic also more application than anybody else also it can be deployed on frame also on the cloud as well it's also called something conver database architecture right which makes the data 70 easy for the modern application they can use a multiple data types and workload the 23i raft based replication it provide the fast quum based failure right it supports also something called Leading Edge AI select and the vector search and the autonomous capability to remove the complexity and R the cost so beauty of this is you

see here I have given some link here in fact now this link is in case you want to access the uh Oracle data based ra based application how the application works right you want to see the hands on right nothing theoretical full of handsome so we have something called Live Lab this is free of cost to access there's no charge for anything you just need to click this link once you click the link it will open the link straight away and then you have two options to create a Sandbox or to like spin up a

Sandbox or to host on your own tendency in case you have any tendency on the oci you can build it this raft replication and you can use any time but in case you do not have any tendency out there what you can do is you can use that particular sandbox just uh click on this I think maybe it take I think maximum 10 to 15 minutes the sand box gets commission for you and straight away you can go there and you start working on this even that sand box will be having all these steps we

have in fact 1 2 three four multiple step there to show you that how to access this thing and also you have the screenshot you have the command everything there so in case you want to do any hands on you want to get your hand dirty on this Oracle uh database distri database you can work at this way so we have this data 70 distributed database we have Quick Start DBA so every link I have attached here for your reference purpose but I will advise all of you just go and try the Live Lab this

is cost nothing is there just click on this and maybe within 10 minutes the sandbox get commission provision and then from there you can try everything every command steps there actually I'll do one thing maybe after end of this uh presentations I'll try to show you how to uh launch it and how you going to going to work for you look for you okay so uh before I move to uh okay I question yeah this is right now uh Mr Pete this is right now dedicated not the Ser list only we have a dedicate not

Ser list yeah okay this data is sent you said the how data is sent to specific sh is a partition based or the sub key basically what happen here is uh when you define The Shard right I explain you when you uh say you want to sh your database right so first and the foremost thing is you need to find out which period table table want to sh it let me take example you have table called customer right in the customer table first question here is which of the column is going to be Shing key

now say you saying that let's take customer ID now customer ID will be yeah sance Ro in fact yeah that's true now customer ID will be your sharting key okay you define this thing now once you define these things now next step is this how you going to distribute database you distribute database based on user defined Shard system defined Shard composite Shard or the directory Shard so let's say you take system defin sh right so system defin sh I told you it generates something called the or a hash something hashing algorithms right so the moment

when you write the query it says select start from customer now we have two way to WR the query one provide the sharding key like this select name from customer where customer ID is one 123 the moment say one 123 that means you give the sharding key if the sharding key is provided in the query that means this is single sh qu there we have something called the catalog catalog has all the information then we have GSM that's called the listen director you can say the GSM knows which particular Shard ID belongs to particular which

particular this particular Shard ID sharding key belong to which particular Shard so if you provide the sharding key in that case case that Shard key recite any of The Shard say Shard one Shard two Shard three you get the output straight away that's what we call the Single Shard qu in case you say select start from customer no Shing key in that case what happens the catalog will be generating a kind of parallel threads to all the shards and they run parall something called scatter gather concept it it it it is all the data from

there run theer through theun the parallel queries get the data gather it and send back to user so that basically defines like I mean I mean when you when you say system defin sharts the system defin sharts every Shard as I told you this is uniformly distributed suppose you have three Shard and suppose you have thousand data so you may um you may see 3,000 3,003 data on all three chart we have and plus also last th000 data will be distributed equally so in the case of system defined sharts you don't have to Define that

where this particular data has to go this will automatically taken care by the uh system based on the hashing algorithms if you see user Define sharts then you have the options to Define that this particular Shard let's say data of the US data of the Indian citizen it has to go itive sh so those OP we have yeah I hope I understood question yeah yeah Pete the question is sist is in fact on the road map yes but right now we have this globally distri base only on the adbd yeah thank you so this uh

Live Lab in fact I can maybe after if allows me time I can show you that how you can invoke this thing and if you don't have any questions can move the next topic okay yeah now next one in fact here given the link as well if you can click on this any of this thing also I have given my email ID as well in case you have any specific question to ask or if you have any use case if you have any customer you want to ask to do any kind of PC or something

uh I'll be more than happy to you can reach out to me and we we can uh take it yeah now next is called true cache okay now true cach is basically uh I mean let's go to talk about it true cach is something you say is a key feature of orle 23i right again the true cache is not available in 19c similarly the rough replication not ailable in 19c now these are the features of 23i which you never had before this okay so to accelerate your application right in the tradition what happens to accelerate

your application performance with a true cache what we do we use the true cache SQL and object cash and this is generally used to access performance of your uh like application and we use separate kind of cluster caching example there are many kind of cach in the market this called radi or the MIM cach you know MIM cach right these two are the also we have error as well now these are different types of cashier mechanism we use right nowadays now the only problem with this cashier has multiple things okay the reads the purpose of

cash is that to get the benefit of read right I mean benefit should be uploaded right now the conventional cach you say radius or the MIM cach they have certain limitation they have certain limitations they also had the problem issue the performance issue as well right now if you look the what is the difficulty we have in this traditional cachier the first is the developers are responsible for loading the data to the cache when the application starts you take example of a spikes if you reboot the spikes it takes hours to come up because it

has to warm up the cash everything right so the problem is in the traditional cach is the developers are responsible for loading the data into cache when the application start right now it read the data from the database okay and then load the data Into Cash subsequently on the each cash Miss in case is in cach Miss actually the application reads the data from the database right and again load it from the cach into the cach then then return the data to application so the thing is here is every times if something cash missing something

happening the developer has to write some kind of code where he has to bring the data back from dist cash now this is one thing here second is this in such scenarios developer have to write the custom code give me a second I'll answer a question now here developer as saying developer has to write the code custom code right for loading the data in the conventional object cach developer is also responsible for cash consistency within the database and the second is data in such cache becom stale become old in the database in fact and developer

has to write this custom code to key the data in commission object cache sing with the database mean cache and the primary database right now some cach Works around by this allowing the application to configure the there's something called time to live concept right there's option called time to live now in the time to live it allows you for data to be cash for certain period of times okay but in the reality it doesn't work well okay now second thing is issue happen is there's a frequent invalidations which reduce the cash Effectiveness because obviously after

certain time if you say you updated a table into let's say your primary database right if the primary database is not updated into cachier is not pushed into cachier right naturally the data that data we call s data right because the data in the cach is different the data in the Prime data is different okay now due to this invalidations which normally reduce the effectiveness and that add the load to the backend database on other hand the less frequent validations the higher cashier for getting dat St again so additionally any schema changes in the database

can be invalidated the entire cach naturally if you add one let's say table in particular database okay and that if it is not let's say updating to cach a and you're writing them query obviously you will get the old data you you get the data so in this case what happen is the developer has to write the data has to write this custom code to ensure that you have the consistency within the caching ensure that the data gets automatically uploaded from the database Prim to cashing so that is one thing which normally gives the more

issue in the terms of the performance perspective developers also responsible for cash consist other cash in Comm cash object you know each cach is independently maintained like you have talk about the rmm cach for example as I told you you have account right in the account let's say you have updated a some amount let's say you have there's $1 $10 you put $20 more now you account you have $10 in accounts you have added 10 more 10 more dollar now the actual should be answer should be $20 right but in case your cash is not

updated due to some reasons right you will get the date at $10 only so in all the cases only problem is this he your as the Cash become as as the object become uh like coming I mean as become bigger something if it doesn't refresh if you don't have proper code custom code naturally you get the issue with the consistency also it in case you have something called complex object okay that is not cached or you say nested relationship even there is not cash so all these are the difficulties issues in the conventional cash systems

and it also has some limitations it does not support every types of data format so example the conventional cach supports limited data types and the data format object cach support only the scal data types like suppose string type data integer type data Json type data okay data cannot be accessed in the col format this is limitation of the radius M cach it cannot be accessed in the col format say the an say in case you have analytics or the reporting purpose in this case this can be very effective other hand uh your Oracle database supports

a richy variety of data in case our case it supports Json we support the text we support the spatial we support the graph we support the vectors we support everything right it allows us to access everything fact whether this is a colum format or it is theow format now developer must be handle the conversion between the rich data and the format uh formed support data by the Oracle datas so that is difficulty and other big issue is caching the Json data Json document you say it EXA the caching problem the why because typically a full

Jon document has to be reloaded in the cache even if one of the attribute in the documents change in the database because this are very something things in the Jon database uh documents if you want to cash entire thing in case some column or some attribute got changed some reasons you no other option have to change the Miss part no you have to need you need to reload the entire cash out there is something other this results in increasing the io load and the backend database load actually now second thing is the missing it has

a missing Enterprise grade uh kind of features now such cache has many missing Enterprise gate feature like for example the object cache it lacks a performance feature like multi- threading parel processing subpartitioning secondary indes many more all these are uh is not supported out here hence the large uh cash cluster is typically deployed I mean what actually do is they deploy the large cash col ERS okay to compensate from missing features so what they do is they instead of doing this they deploy multiple huge clusters so this thing so in fact if you deply the

multiple let's say large clusters obviously you're going to increase the cost right cost but okay also it does not have the advanced security features like user privilege management or you can say strong encryptions it doesn't have object level security doesn't have then Ro level security doesn't have like it does not allow any kind of AD to access the data in the cashing so all these are limitation we have and because of this we cannot say this is the Enterprise great feature hence the sensity of data cannot be cach also object caching also lack the Enterprise

great like availity feature manity features and the obility features which typically result in the lower application service given agreement now let's look at what we have what warle two cach gives you the beauty now if you look here the work true cache is basically it's inmemory consistent and automatically refresh SQL object cach which we know only call it that is deployed in front of orle database it handles all the re SQL just like an Oracle database conceptually it's a more like a diskless active Data Guard standby this you can say if you see noral definition

is something called dis L active adg stand by data in the true cache is always current and consistent across the multiple tables and objects now schema change in the database are automatically propagated to the cachier now here scaling the true cache with the partition configurations now true Cache can be scaled with Partition configurations now there's something new we have out here which normally other V they don't have the multiple true cache instance can be deployed in front of database for purpose not only one in fact if you want to do multiple caching you can do

this thing you can deplo multiple caching tiers before the application maybe if iil is okay to able to um okay is it can able to cash the large data volume as well and two cach can also support is sping data to dis as well now this is something new this allows us to cast the data much more data value than the traditional one also it has a future which which we call the global proximity to applications now for uses for use case like uh um data lency user proximity and the device proximity right applications are

deployed far from the database obviously you applications you have application in India and databas in us right so in these all cases where we have Pro concept so what we can do you can deplo for the performance boost you can deplo the true cache instance before the applications to boost a read performance significantly a true cach does not store any data also the good thing is true cash do not store dat data on the dis by default hence it considered a data processing TS component that the side that also gives you the regulation like res

regulation because some country they also have the regulation as well okay now true cash as I told you in the previous case in the Jon if you want to load in the traditional cach conven cach if you want to load the complete Json document right and due to some reason some problem one of the component one of the attribute got missing you need to reload again in fact but in the in our case is different the true cach is a true document and relation cache with log pre concy this is something new that and Jon

document store in the database can be cached in the true cache documents are automatically refreshed at the attribute level guarantee this is something the new thing we have hence reducing the I/O and the backend database so we don't have to do these things we don't have to cash the entire JS to there we cash once but yes what are the changes we have at the prim database level that get automatically populated but the tradition cach is not actually now concurrency is automatically maintained within the versioning when the document is read from the true cache there's

something called value e tag is enabled is embedded in it okay when the same document is updated in the database the database verify the eag inside the document and compare it with that in database it show the consistency now look here any change you do the documents it generates something called value based EAB it normally it embedded right so when you do the changes when you do any addition any update into let's say cach so it checks in the database it checks the both eag from both places the cashier and the database if it matches

it updated because this level of security we do not have we do not it doesn't support in the tradition database in cash now if EEG does not match then error is reported back to application and in such case application can read the document again then perform their Bild so this is avoided to any kind of misleading or the Mis I mean I mean I mean any kind of uh kind of data breaches okay now look here these are the basically uh in fact nine types of the deficiencies we see in the traditional databases now true

cash uh one is say here loading the cash is done or taken care by the developer team cash consistent within the DP is again we have to write some custom code to ensure that the consistency between the database and the cash is maintained cash constitute with the values in the same cash is Again by the developer teams cash consing with this other cach is other developer teams Compass data support I told you it supports limited types of data in fact full support is there but again we have to write some very Uh custom code to

ensure that in case if any of the attribute is missing in the Jon document that has to be reloaded so if you look from all these nine point which there actually these def efficiencies these all are governed and owned by the developer so the responsibility accountability on the developers increased that is something where it incur the I mean the good time of the developer right but if you look the orle database in orle database everything you talk about the first six point is fully automated right loading the cash is automated no develop requirement cash consistency

by automated cash consistency with the value in the same cash is automated cash the other Cas automated and the last three comprehensive security parallel processing and high ability all these are built on so now this is the one thing which it differentiate it differentiate from the uh conventional caching to oral caching now let's see how to use a true cache now as you know the true cache like the application can query data from the true cache in two ways as you like this the main purpose behind this true cache is to ensure that the application

which is quing the data which is normally putting the data doing the select data data it has to do such way that it should get the data much faster right now it can do in two way of uh like data sele selections one first the application can maintain two separate connections one the read only connections to True cache and read right connection to the database we have a jdbc driver that has a beauty right so we Define it the query which you're firing it which connections you to use a read only connections or read connection

if with read only connections then the query will directly jdbc will direct you to True cache if it's Rite it D direct to the private database now okay now use the read only connections for offloading the query through cach in case you want the data fastest to beet you should use the read one read only connections because here the data has been treed from the true cache now here it will off the data right optionally the application can also choose to maintain just one connection now we have this something called smart Oracle jdbc driver that

has enhanced the transparency to Route the query to the true cash or to the primary datas depending upon the connections to you provide like read connections are the read right connections it has a beauty the jdbc driver maintains two connections internally and does the read write split under the application control this something very different the application use set something called set read only API call to Mark some section of code AS read only and this is existing the jdbc API which normally my SQL already used for the for the similar purpose and the jtbc driver

will send the read one queries automatically to cash like the moment you uh invoke the read one uh connections all the queries will be automatically routing to the jdbc WR it rout it to the uh like true C true cast in fact inance and if there a right on this then deals are automatically sent to the database label this called P database so this is uh something which normally gives you uh an upper hand and you don't need to in fact do any kind of writing the custom code or something in fact so everything is

automated here now this is a 23 AI feature told you now let's say some uh use cases uh in fact maybe you can see the true Cache can use to support the variety of use cases like I've taken the four case of the out here say first one can be used say mid caching mider caching is basically used for for boosting the application performance okay it can be uh used to cach as a okay the second one is which something called age cach age cach is basically for enabling the device proximity or the user proximity

this is for something called the data 70 and a third we call something called multi regions or multi- regions for example uh you want to use for data res in and we also have something called Cloud cross cash this is deed again on the multicloud for providing the proximity of the data to the applications so these are four different types of kind of use cases where we can we can use the uh cach to do the like depending upon the requirement you have it now one slide is what the benefit of what business get the

benefit you so I mean if you can talk and can talk a lot actually so if you see primary the benefit of using this true cach is the true cachier basically it offers the several benefit the first benefit I can say is it enables the significant cost saving because the first cost saving is the cost of the developer I mean they don't have to write the code okay second thing is this it has so much thing automated then it has buil-in feature you don't do INF just you configure the true cach and does it use

it create the two connections read well right will both connections and jdbc Driver Auto WR write you without you based on the query we have so everything automated here now by improving the application performance obviously without having to reite those applications nothing to reite application application work as it is in fact it does not require any additional investment obviously everything everything is built in you just go and use it configure it in the new caching product are the new operation skill set you don't require any kind of any skill set for this you just require

Min skill set how to configure this caching by default we have the already the documents you can use it and follow it is not it will not take in fact more than 20 minutes for that okay the management and the operation skill for True cash are the same as the Oracle database right if you Oracle DB you know how to work in the database you can configure caching there are some certain parameter you need to configure both caching side and the prim side that's it okay also it can be used as a single cacher for

the different data types and format for example documents relations rows column true Cache can be Deed on the community Hardware as well I mean it is not required that true cach should beond the highend machine Any Community Hardware you can use canly this you may have the D machine in machine I mean HP machine heav machine and machine you can apply there okay there's no specific Hardware required and that could be uh again I mean equ different size and also that can be used for running the Oracle database and also Hardware should be I mean

it also save the hardware cost as well right the true cash offers the strong security I told you we have the weakest Security in the conventional machine but in our case we have the strongest security normally every security which we orle database process that is inherited to to cashes okay and we have some customer uh like I mean feedback which we have uh I mean due to like Ian we have M the mean customer yes uh some like feedback we have like we have many customers who have already evaluting the true cash the one of

them is one of the biggest stock change in the world that is already evaluating over true cach to offload the read queries from some stock tickets and the data in the true cach should be around something 10 terabyte okay and then in the primary database it could be something around 20 terab okay so we are be working with one of the stocks in the world biggest in the world and other thing is we can deply the six or to I mean basically the purpose behind is there are I mean the same stock change which is

using something close to 200 node on the r radius cluster and that 200 node is replaced by just placing the six cash cluster so you can see this tremendous change in the cost as well right then we have 200 clust R cluster that is serving the purpose of 200 terab the same thing with the better performance we are able to deply on same configuration on the six toast instances so we are saving the huge Hardware cost as here also one of the biggest mobile phone manufacturing is also in the true cache is basically to upload

their requies and the primary database is mixed with vertical scaling and again we have one of biggest financial institution Isle true cash to offload their fraud detections and application and we have using like exping the AI model ref as the model can train like okay along with this we also have one of the biggest marketing company that is also Ting the true cash in fact we are doing this multiple p with all these customers right now they are running this real time I mean they running something huge campaign and they need the real times marketing

campaign because you know in the campaigns you should always have the latest data the the most current data right so data in the conventional cashier tend to be get stale as you know like because of the tweaking multiple feat things like because application developer team has to something they show this thing and along with this we also evaluating the same product with the Oracle Fusion application to cash some of the complex business object which have been nested Rel because I told you nested relationship is not cash in a tradition one but in our case in

cash so these are the few examples we have like what I call I mean I mean V evaluating this things and then again we have one of the biggest bank is also relating the true cash to offer read queries to cash enhance the Improvement performance so key take from here is uh I mean we will have uh again the live life for the 2 his if you want to uh use it we can provide you this thing so you can get a feel of it okay and in case you want to know more of the

true cache how true cache Works what architecture what are the diagrams we I mean architecture we have and how it I what are the things you need to configure we have given the link out here you can from there you can find this thing okay now this was things very quick because Frankly Speaking I mean this sharding itself is a very big topic I mean big topic but since the time constraint I could not complete everything because this required at least for me two to three session to include conclude the orle distribut datase okay we

just talked about today the features of what distri database has it there some use cases then different types of the data distribution methods different types of data application methods and then we discuss some kind of use cases in fact right okay so let me ask a question here I think a few of them I answer the answer what is differ between the true cache and the in memory what would be the use cases for the both in uh my dear friend it depends in both cases if you uh say uh two cache and in memory

as I told you true cach is in memory is is what you call a consistent in memory right told my definition now both are different things uh let me give you an example here in the true cach there's no limitation how much you can store it depending upon your memory you have it right so question here is in the true cach you have the options to offload the data right in case of in memory because you have two types of request I request read iio and write iops right in the in memory how do you

segregate the iops of read and right in the true cach only the read being offloaded right on being offloaded so any customer who has analytical kind of data all kind of data they have some the reporting data right and they want to use this things so that could be the best example as I told you New York Exchange fraud detections mobile company all these example which call the reone data right so this is there in memory is again something different thing because in memory is something you can use in database because database also has option

called memory you can use it but both are different cases and also the true Dash can be Deed on the multiple uh data centers as high ability as well okay so this can give you both Hil andity as well kingdom is something different now other question is dis list Data Guard does it means the true cache server should have memory equal to DV size is not required is not required traditionally should be but if it is it depends like it depends that how much data you want to cach right because in true cash you have

let's say in the primary databases let's say 30 TV right but true cash you want to sell say cash only let say 10 GB that depends it is not required but it depends that how much data you want to cash in true cach yeah how to determine the size of true cach server memory see my dear friend this is again depends that amount of data as I told you like uh in my here slide in fact here if you look this yeah we have uh offloading read queries for stock uh tickets to True cash with

10 TB of cash data for 200 TB the size of data is 200 TB the physical size but they want to Only cast 10 TV of the data here okay so similarly your question says same thing so your question says size now this depends totally that how much data you want to cach your case which I'm showing right now the stock change says in your case in your case the stock change says if they want to store they want to cash only 10 TB of data out of 20 TB so that depend again what kind

of information you want to store here yeah so that is totally depend upon the requirement yes any question we have uh I still have some time let me see if I can show you the uh live black link just give me a second I one or two more questions we have to wrap it up soon yeah just give me one second I'll let me show them this uh live live one example maybe that would be help them to use it okay let me shoot again the moment you open the link you get this kind of

screen out here right this one this live live we have right so the moment you open this link you will get this option call is start this is called green button now in the green button see here the moment you click it here this is called Running on your your owny now this will give you options in case you have any oci tendency you click on this right let me click on this this will take me here left side now this is totally how to start the r how to prepare the left right we also

have given the script you yeah here a script here here Z file this one this col application store the script and follow the steps so in this case if you have old tency you can configure everything out here right you can configure everything out here these are steps how how how you work on there so all the step you have given here with the screenshot as well what out get it here now in case in case you choose the other options this what you call the uh green button let me again click on this in

case you choose the uh other option called send box I think you can see my screen right you just choose run on this Live Lab send box now if you click on this in case you don't have any account on the let's say oci just say the Live Lab send box this will provision this Live Lab for you maybe in the maximum 10 to 15 minutes and then you can use it so this will give you option with even though if you don't have access you can use this this will give you comprehensive understanding or

comprehensive handson to work on the distributed database with the ra application yeah yeah uh I think um let me come back to my screen yeah any question you have guys before we round up this thing well thank you H for today's presentation um everybody has been interested in it everybody's stay most of everybody's stayed on and we look forward to continuing the webinars in 2025 and our first one coming up in h January 17th will be Craig Shaham we'll be talking about AI so you don't want to missed that on behalf of nug and H

viscosity I want to wish everybody a happy holiday happy New Year and thank you happy thank you very much thank you very much guys have a nice day yeah thank you all see you thank you bye thank you bye bye take care enjoy our days take care

Related Videos

1:09:04

The Future of Data and AI

New York Oracle User Group NYOUG

38 views

25:11

Next-Generation Intelligent Data Architect...

Oracle

2,146 views

6:46

Bill Maher warned of a Trump second term. ...

CNN

754,356 views

1:31:50

NVIDIA CEO Jensen Huang Keynote at CES 2025

NVIDIA

5,694,778 views

46:34

MYCOM OSI's GenAI Webinar | PM session

MYCOM OSI

31 views

58:35

DuckDB and the future of databases | Hanne...

Posit PBC

715 views

10:09

Michael Cohen reacts to Trump sentencing, ...

MSNBC

344,673 views

1:00:19

Develop AI/ML-Driven Apps with Autonomous ...

New York Oracle User Group NYOUG

106 views

1:06:38

Going Meta S02E05 – One Ontology to Rule T...

Neo4j

721 views

1:01:35

Oracle Database Patching the Right Way (Ou...

New York Oracle User Group NYOUG

284 views

1:01:58

Low-Code, AI, and the Future of App Creati...

New York Oracle User Group NYOUG

119 views

43:37

Mastering Multi-Account Governance with AW...

Cloud in

31 views

1:09:34

Oracle Innovations to Deliver Mission Crit...

New York Oracle User Group NYOUG

80 views

1:06:34

What’s New in SAP HANA Cloud | Deep Dive w...

SAP

624 views

1:26:24

Emerging Architectures of LLM Applications...

TensorOps

1,512 views

55:23

EC-Conference2024: Thinking Differently Ab...

Encryption Consulting LLC

54 views

1:09:19

An Introduction to Oracle Database 23ai - ...

New York Oracle User Group NYOUG

1,181 views

50:36

Microsoft AI Tour keynote session by Satya...

Microsoft India

52,159 views

4:43:16

DP-600 Fabric Analytics Engineer FREE work...

Data Mozart

9,515 views

1:04:03

Build an Innovative Q&A Interface Powered ...

New York Oracle User Group NYOUG

86 views