I Built OpenAi’s Personal AI Agent in 1 HOUR using No code

189 views

Ahmed | AI Solutions

📆 Work with me: https://cal.com/ahmed-mukhtar/discovery-call

OpenAI just dropped a demo of their ...

Video Transcript:

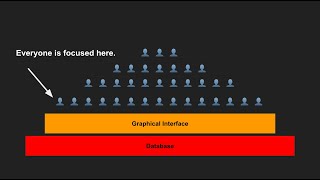

OpenAI just dropped an incredible demo showcasing a speech-to-speech personal AI assistant. But what if I told you that you can build something just as powerful without writing a single line of code? And if you missed the demo, don't worry: we'll be watching it together and breaking down exactly how it works behind the scenes.

In this video I'll show you exactly how to build a personal AI assistant that can manage your tasks, your inbox, your calendar and, more importantly, make calls on your behalf. And the best part: we'll be using simple no-code platforms to do the complete setup. Make sure you stick around to the end, because I'll also be covering the cost of running this setup, and I think you'll be surprised. So strap yourselves in, because today I'll be giving out all the sauce. Let's get started.

For those of you that missed it, OpenAI recently had their Dev Day, where they announced all their new features and releases. This account here shared a video on Twitter showcasing the live agent demo that they did. I've put this at 1.5x speed so we can quickly run through it.

Could you place a call and see if you could get us 400 strawberries delivered to the venue?

So they're placing an order for strawberries, essentially.

I'm on it. We'll get those strawberries delivered for you.

Hello?

Hi there, I'm [inaudible]. Could you tell me what flavors of strawberry you have?

Yeah, we have chocolate, vanilla, and we have peanut butter.

Wait, how much would 400 chocolate-covered strawberries cost?

400? Are you sure you want 400?

Yeah, 400 chocolate-covered strawberries. How much would that be?

I think that'll be around, like, $1,415.92.

Awesome, let's go ahead and place the order for 400 chocolate-covered strawberries.

Great. [inaudible] would you like them delivered?

Please deliver to the Gateway [inaudible], and I'll be paying in cash.

Right, cool. So at the end it essentially just confirms the order.

All right, then let's actually try and understand what's happening here. This is a very high-level overview of the whole process behind the scenes, but before we get into it, keep in mind that this technically isn't how they're actually doing it, and I'll explain why in a bit.

First, the person gives a command to the agent via voice. That goes into a speech-to-text model (this could be something like OpenAI's or Deepgram's, for example), which changes that speech into text; it's a transcription model. Then the text goes into the LLM, which is like the brain of the agent. It analyzes the user's query and extracts all the different entities. In this use case, for example, it realizes that it needs to make a phone call, and that the instructions for the phone call are to order 400 strawberries. It then makes a function call to an external API. The external API is the one that handles the interactions with the user, and this could be something like Vapi. It handles all the dialog management and the decision making with the vendor, like the strawberry vendor. Then at the end of the call, after we get the confirmation, it creates a summary of the whole transcript
and that gets returned to the LLM. The LLM then processes that text and passes it through a text-to-speech model. OpenAI have their own voice models that do text to speech, but you can also use ElevenLabs, and there's a bunch of other vendors that do the same thing. Finally, the voice gets fed back to the user via the application.

Like I mentioned earlier, technically this isn't how they're doing it, because of the new feature that they released. Essentially, these two steps here (speech to text and text to speech) don't actually exist: we can go straight from the voice input directly to the LLM, and then back out from the LLM via voice as well. This is what they're calling the Realtime API. It lets us do native speech to speech, so the model handles speech directly and there's no transcription involved. The benefit is that we get very low latency, which makes the conversational flow a lot more natural, and overall the experience is just a lot better.

With that being said, let's look at how I've actually built this. I have this structure here with the different platforms that I've utilized. Essentially, I'm using Telegram for the input and output. I'm using n8n, which I'll explain in a second, but essentially this is where we connect all the different applications together. And for the phone handling, like I mentioned earlier, I'm using Vapi.

So we have a voice input that goes into Telegram: I'm able to just record a message, and that gets passed on to the trigger of the n8n workflow. We start with speech to text, for which I'm using OpenAI's Whisper model. Then, in a similar fashion, the LLM inside the agent processes the text and makes a function call to an external API, Vapi's API. Vapi handles the complete conversation, and then we get the summary returned to the agent, which gets forwarded to the user inside Telegram. This is just a high-level view, but now let's actually dig into the different platforms and find out how I
actually did this.

n8n is an open-source automation tool that allows you to integrate different apps together in a visual workflow environment. I know that it might look intimidating or complex as we hop in, but essentially all you need is a basic understanding of APIs and data manipulation, and especially if you're familiar with make.com, you'll be good to go.

Right, so let's take it step by step and actually try to understand what's going on. Let's first look at this top part here. The Telegram bot triggers the agent, and over here we're just extracting the text from the message. This if statement basically checks if it's voice or text. If it's voice, it goes and grabs the file. Once we have it downloaded, we use OpenAI's Whisper model to get a transcription of the voice, and then we put the transcription into a variable that goes into the agent. If the original message is text, we just go directly to the agent node here.

The agent here is connected to a bunch of things. First, it needs to connect to a chat model, so I'm using GPT-4o, the latest one. For the memory, we're using Window Buffer Memory to keep the history of the conversation. The agent also needs to connect to a database whenever it needs to do the RAG part, so the retrieval part, and I have it connected here to a Pinecone vector database. Essentially, all I did was set up a CSV file containing the contact information. We have the name and the type of contact, which will make more sense later on: I put myself as a friend, Speedy Service as a car garage, and Dental Care For You as a dentist. Then we have the emails for each one, and then the phone numbers. Once that gets put into the vector database, anytime we need to grab someone's contact details, we can retrieve them.

Then we get to the main section here, where we have all the tools that the agent has access to. We can send an email, get unread emails, create a calendar event, get today's meetings, add a task, and get
today's tasks. But what we're going to focus on today is making a phone call. I won't be covering all these tools today, but once you understand the principles of creating a tool and connecting it to an agent, it's just a copy-and-paste job.

As an example, we have the send email tool. Essentially, all we do is give a description so the LLM knows when to actually call it, so we say "call this tool to send an email". Down at the bottom is where we pass all the parameters the tool needs to actually accomplish the task. For the send email tool, we need to send it the send-to email, so that's the email of the recipient, then the subject, and then the message itself.

As for what a tool looks like inside, I've got the today's meetings tool here, so you can see how simple it is. Most of these tools are exactly the same; they're very simple. The agent triggers this tool and passes the different parameters to it. In this example, we have to give it the time frame of when to look. For the parameters that I've chosen, we give it the start of the day and then tomorrow at the start of the day, so at 12, and that gets converted into today and tomorrow, essentially just using formatting. We pass those parameters to the node and it comes back with all the events in that time frame. Then we do some formatting just to extract all the different meetings, and finally we set the response variable to the meeting list that we created in the previous node. That's how it gets sent back to the main agent.

Again, all of these tools are basically exactly the same. The one we want to focus on today is this one here, so let's actually see it. This is the complete workflow, and this is why it only took me one hour to put this together and create the Vapi assistant. Again, in a similar fashion, we grab the different parameters from the agent: we want the first name, the type, the instructions that we're going to pass to the Vapi assistant, and then
the phone number. What we're doing here is utilizing dynamic variables to pass the different variables to the Vapi agent.

The agent itself is very simple. I'm using Deepgram for the speech to text, ElevenLabs for the text-to-speech voice model, and the GPT-4o model for the LLM. There's no knowledge base, the temperature hasn't been set, and the max tokens and so on are all just default values. Essentially, this is the whole prompt here, and it's very simple. We just say: "You're a voice personal assistant for Ahmed. Act as Ahmed. Please follow the given instructions below to the best of your abilities." Here we've got the different dynamic variables: "You'll be speaking with", and then it has their name; "they are a", and then the type, dentist for example; and then we also give it the instructions of what to do on the call itself. There are some additional notes here just so that it behaves as we want it to.

To actually make the call, we have to do an HTTP request. It's a POST request to api.vapi.ai/, and the main part I want to focus on is the body of the request. Here's where we put the assistant ID, which you can get from the top here: you just copy the assistant ID and paste it back in here. We also need the phone number ID, and this is for the phone number that you've purchased through Vapi itself. You just click on here and it'll be right at the top; you copy the ID and paste it down below here. All the other variables we get from the LLM itself: the first name, type, instructions, the phone number, and the name of the person that we're trying to contact. That goes off and makes the call outside of this workflow.

The tricky part was actually knowing when the call has ended, so we can get the summary of it. So there's another request that we do to get the call status. It's api.vapi.ai/, and then we pass it the call ID, which we get from the previous node. This one is a GET request, and it returns the status of the call and a bunch of other information that we don't need to worry about. Then I have this if statement: there's a parameter called status, and we're checking if it's not equal to "ended". If so, that means the call is still in progress, and we wait 3 seconds before we make another GET request. If this node returns false, that means the call has actually ended, and then we're able to extract the summary from the previous node, which is under analysis.summary. We assign that to a variable called response, and that's how it links back to the main agent, because when we call the tool there's a setting here that says "field to return". So when it sees the response in the last node of that sub-workflow, that's when it ends the sub-workflow and returns to the main agent.

With that, we've actually covered everything here; I don't think I'm missing anything. Essentially, that goes back to the main agent, and we do some formatting here in this node to send the result out to the Telegram bot. I think we're ready to see it in action, so let's do that.

Could you please call my car garage and book my car in for a full service at the earliest available slot? Make sure it's a full service, and also find out how much this would cost me.

So this one's running. Okay, we won't be able to see it while it's running. This is the sub-agent. We've got a phone call here. I can't screen record this part, but yeah, we've got a phone call, so let's hear it.

Hi, this is Speedy Garage. How can I help you?

Hey, I want to book my car for a full service package. Can you tell me the first available slot you've got and how much it's going to cost?

Right, so we actually have the bronze, silver and gold packages. If you want a full service, this will be the gold package, and that would cost you £200. Is that okay?

Yeah,
sounds good. What's your earliest available slot for that?

So we've got an available slot at, let me quickly check, 3 p.m. on Friday. Does that sound good for you?

Yeah, that's perfect. Let's book it.

Amazing, that's all confirmed for you.

All right, so: "Your car has been booked in for a full service with Speedy Service. You've agreed to a gold package for £200. The appointment is scheduled for Friday at 3:00 p.m." So this has worked. We can see here that we checked about eight times until the call was completed. Amazing. This one is also just catching up, but yeah, that succeeded as well. You can see it went through: it detected that it was a voice message (let me just zoom in), it transcribed the audio and sent it to the agent, we grabbed the phone number from the database, and then we called the make phone call tool. If you actually want to see a full demo of this, you can check out my other video where I go over all the different tools and test them all out.

All right, let's quickly cover the cost of running this setup. Let's start with Vapi. With Vapi, it costs us 12 cents per minute, and if I look at the logs, it's only 15 cents for the call that I just made. Next, let's look at OpenAI's API usage costs. I've counted about 12 different commands that I've sent today while doing the testing and the demo, and all of these come to 11 cents for today. That includes the Whisper transcription, the embedding model and the GPT-4o model, so it comes down to around 1 cent per command, which is absolutely nothing. And finally, for n8n itself, they have a community version that you're able to self-host, so I have unlimited workflows and unlimited executions, and this is why I've chosen n8n in comparison to Make.
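
The cost figures quoted above can be sanity-checked with a few lines of arithmetic. This is only a rough sketch: the variable names are made up for illustration, and the implied call length assumes the 15 cents was billed purely at the 12-cents-per-minute rate, which the video does not confirm.

```python
# Figures quoted in the video (all in US cents).
VAPI_CENTS_PER_MINUTE = 12   # Vapi's quoted per-minute rate
call_cost_cents = 15         # logged cost of the demo call
openai_cents_today = 11      # Whisper + embeddings + GPT-4o for the day
commands_today = 12          # approximate number of commands sent

# Assumption: the call cost is purely per-minute billing.
implied_call_minutes = call_cost_cents / VAPI_CENTS_PER_MINUTE

# Average OpenAI spend per command ("around 1 cent per command").
cents_per_command = openai_cents_today / commands_today

print(f"Implied call length: {implied_call_minutes:.2f} minutes")
print(f"OpenAI cost per command: {cents_per_command:.2f} cents")
```

The self-hosted n8n community version adds no per-execution charge to these numbers, although the server it runs on is a separate cost that the video does not quantify.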

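The call-status loop from the walkthrough (make a GET request, check whether the status is "ended", wait 3 seconds, repeat, then read the summary under analysis.summary) can be sketched in plain Python. This is a minimal illustration, not the n8n workflow itself: `wait_for_summary`, `fetch_call`, `max_polls` and the fake fetcher are hypothetical names, and the HTTP request is injected as a function so the sketch runs without a Vapi account or a real endpoint.

```python
import time
from typing import Any, Callable, Dict


def wait_for_summary(
    fetch_call: Callable[[str], Dict[str, Any]],
    call_id: str,
    poll_seconds: float = 3.0,
    max_polls: int = 200,  # hypothetical safety cap, not mentioned in the video
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll the call until its status is 'ended', then return analysis.summary."""
    for _ in range(max_polls):
        call = fetch_call(call_id)  # stands in for the GET request node
        if call.get("status") == "ended":
            # Equivalent of assigning analysis.summary to the 'response' variable.
            return (call.get("analysis") or {}).get("summary", "")
        sleep(poll_seconds)  # the 3-second wait node
    raise TimeoutError(f"call {call_id} did not end after {max_polls} polls")


def make_fake_fetcher() -> Callable[[str], Dict[str, Any]]:
    """Fake API for demonstration: in progress twice, then ended."""
    responses = iter([
        {"status": "in-progress"},
        {"status": "in-progress"},
        {"status": "ended", "analysis": {"summary": "Booked for Friday at 3 pm."}},
    ])
    return lambda call_id: next(responses)


waits = []  # record the waits instead of actually sleeping
summary = wait_for_summary(make_fake_fetcher(), "call-123", sleep=waits.append)
print(summary)
```

In a real setup, `fetch_call` would wrap the authenticated GET request for the call ID returned by the POST that started the call. The cap on the number of polls is an addition for safety, so a call that never reports "ended" cannot loop forever.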