Smart City End to End Realtime Data Engineering Project | Get Hired as an AWS Data Engineer

74.47k views23265 WordsCopy TextShare

CodeWithYu

In this video, you will be building a Smart City End to End Realtime data streaming pipeline coverin...

Video Transcript:

hello and welcome back to the channel in today's video we have an interesting project to work on together this is our decided by the community and you can look at how the community voted in the community tab now before we continue if this is your first time in the channel and you have not subscribed to the channel don't forget to like And subscribe now let's go back on to the project now this project is going to be a realtime streaming project with a parch spark and let me give you a background use case for this

now we have a driver that is traveling from C London to Birmingham now they want to have an iot device that collects information about the vehicle about the GPS location the weather information the medical uh uh any accident or something that happens on the road all this information are being gathered by this iot or any devices at all and being sent to our system we want to analyze this data in real time and be able to work on this data in real time and as the driver is moving on uh from London central the Central

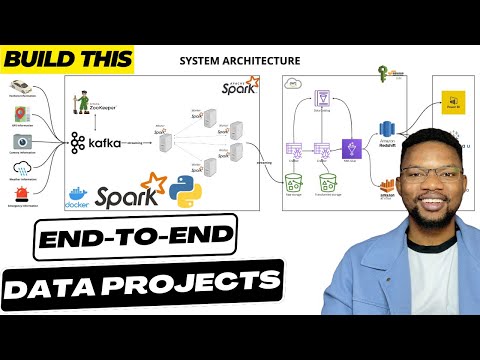

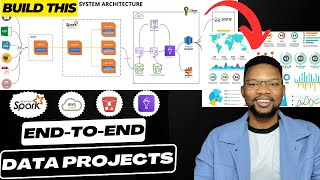

London to Birmingham we are collecting this information alongside now if there's a delay on the road or something like that we get all this information in real time how do we access how do we handle all of this information we're going to discuss that in detail in the architecture section but that's that's the background overview of this uh this project now how we connect the bits and pieces of this system together we also be discussed in in shortly so let's go into the architecture section and discuss how that looks like here the system architecture we

are going to be building today we are going to start by collecting information about the vehicle that is traveling from London to Birmingham uh to put that into context this is how the map looks like so it's going to start from Central London and move on from Central London to all of the steps it takes about 2 hours 30 minutes depending on the time if you have an Optima without traffic and no issues and incidents on the road it's about 2 hours 30 minutes from Central London to Birmingham but interestingly we are going to see

how that applies to our case where we're going to be visualizing a traveler that is moving from Central London to Birmingham in our case we're going to be collecting the vehicle information that the driver is uh is driving then the GPS information of the car so we get accurate information about how the car is moving from Central London and which route it's taking through the latitude and longitude information then we have a set camera information in here which is going to be a screenshot this is going to be a dummy because we don't have an

iot system that is live and listening to uh sending information from live cameras in this case but this is going to be something that is possible if you have a live system that is collecting camera information and sending it to the system it's also possible then whether information is something that we're going to be looking at as well then we have the emergency information if there's a maybe an accident on the road uh the policeman stops you on the road there's a fire there's uh any other kind of emergency information that might disrupt the inform

uh the the traveling from Central London to Birmingham this is what we're going to be looking at as well we're going to be collecting information about that and by the time the the the data is uh collected and uh let's say uh the police arve arrest you by let's say 10:00 and then you were released by 10:15 or 10:10 that also is going to be captured in our data set now once that data comes in it's going to be sitting in our CFA system but before we do that we're going to set up our pic

zook keeper which is going to be the one controlling whatever information is going into CFA and the multiple CFA information if you have multiple Brokers like uh two or three Brokers that we can control zuker is going to help us doing the coordination with that so each of these data that is coming into the into the system is going to be passing through CFA and then we have a consumer with Apaches park that is listening to events that are coming into Kafka once the data is been listen and consumed with a parches spk we're going

to stream the data directly to AWS in this case we're going to be writing into S3 bucket at the end of the day while the data is uh getting uh written into our S3 bucket by the time the traveler gets to Birmingham or before then we can easily write our condition which is going to be our process on AWS glue to extract information from our raw storage uh using the data catalog of course uh we write that into uh with AWS glue we automate the process to get that from the AWS catalog now once the

data gets into catalog it makes it easy uh we we just have an access to connect to Amazon Athena or red shift in this case all of this is going to be controlled by AWS of course uh we have our roles and securities in place and then Amazon red shift is going to be our final destination in this case for the data now we can also visualize the same thing with Amazon Athena but we're going to be focusing so much on a red shift but we're going to have a bystander sitting on Amazon a where

we can just write our query and visualize that but the major thing is going to be done with red shift eventually we're going to be having uh powerbi visualizing Amazon red shift if we have enough time or we use T or Lua Studio that is the entire architecture of the system and that's how the system is going to be looking like so before we continue if you have not subscribed to the channel don't forget to like comment share and subscribe to the channel it is uh with this likes and comments and subscri subscription that uh

keeps motivating the the channel to grow and uh you know to to reach more people as well as uh you know keeping the information fresh and up to date so enough of that let's get into the coding section and start getting our bits and pieces all connected together so see you in there now make sure that you have your Docker desktop installed if you don't have it you have to go to do.com and then get Docker installed on your machine whether you are using Windows Linux or obone 2 or any other maybe Mac OS it

doesn't really matter what matters if you get Docker desktop or Docker engine installed on your machine so you can easily get all of these things that we are doing and follow through along as we could now once your dock instance is set up we are ready to get started with our architecture we are going to come back to these uh producers that are sending data into this uh CF and then our Apache spark uh much later but for now let's set up up this architecture and then continue from there okay now going back into our

py Cham I'm going to be using py Cham in this video so if you are interested you can also use py Cham or you can use any other ID that you like the process is pretty much the same I just like the UI and the layout of py Cham there's nothing special about it really so you can use any ID so just click create create a directory and then you you create a virtual environment and you should be good so in this case I'll be calling this project Smart City now I need a main py

which is what we're going to be working with basically and I'm going to change my python version to 3.9 so for some of you that might miss this part the python version that is being used in this video is python 3.96 but you can use uh 10 as well and I think 11 but it is very dicey to use the latest version which is 3.2 as as of the time of this recording so I would say if you're using 3. 12 you proceed with caution all right let's proceed it will be good to have your

Docker desktop installed and uh up and running before you continue so if you don't do that and you are trying to run the commands that are going to be run uh in a few minutes then you are going to have run into some issues okay so let's do let's continue now it is possible that by the time the system gets up uh you want to be sure that your virtual environment is sitting in the right place so easily if you're using Linux systems or Mac OS or whatever you can do which python it's easy for

you to get where the data is sitting now in my case it's sitting in the VNV which is exactly where I want it to be so now let's continue so let's get to the architecture of the system uh like I said the architecture of the system we are currently working with is uh the Zookeeper with cafka then with a parchas park all sitting on Docker okay so let's get our Docker compos in okay I'll be using Docker compos yl to facilitate the services uh installation all right so let's get that in all right so let's

start so we have a version in this case the version we're going to be working with is version three then we have our services the services we're working with is the zooe keeper all right zoo keeper as well as purchase PK but before we get into a purchase pack let's fix zookeeper and the broker first so we have our image the image that I'm going to be using is going to be the image from confluent Inc I like the image so you can use any other CP any other zookeeper that you like but I'll be

using CP zookeeper in my case and the version that I'll be using is 7.4.0 I find it to be save so you can use the latest version I think there's an updates to that but if you like just keep it as 7.5 uh. 4.0 and you should be good the host name in this case is going to be zookeeper excuse me the container name is going to be zookeeper as well zooke keeper then the ports that I'm going to be exposing uh in this case uh is uh 2181 to I'll just use the double quote

2181 2181 so that's the internal and external IP uh the port then the environment that I'm going to be working in the environment environmental variables mean so we have the zooke keeper uh client client Port G is 2181 all right then zookeeper zookeeper uh TI time it's going to be 2,000 2,000 then the El check in this case I I'll have a test so that each time we are doing an El check because of the dependency we want to be sure that this zookeeper is ready to accept connection before contining so I'm going to have

have a command the command is going to be a bash which is going to be the bash command uh which is Bash C and what that is going to do basically is to say Echo are you okay so are you okay so once this uh returns are you okay and in the Local Host I'll be I should be good all right Local Host 2181 so what that means is is going to be running are you okay in the terminal once that is done and once I receive a response then probably it means that the the

server is ready to accept connection the interval in this case of uh the the test is going to be 10 seconds and the time out is going to be maximum of 5 Seconds the number of real tries before it fails is five so if the Zookeeper doesn't respond after five tries just uh terminates the process itself so I'll call this data Master as my network so let's get in our Network so the network in this case is going to be uh data Mastery lab all right all right so continuing uh hopefully uh this gets us

uh up and running and we should be good okay now our zookeeper has been set up so we've tick this off our box now let's continue with Kafka which is the one that is going to be controlled by zooke keeper and the cordination will be done by zookeeper as well so let's continue now uh we have zookeeper then the next will be to getting our broker the broker is going to be our c car of course image the image is also going to be coming from confluent Inc like I said you don't have to use

the same thing so you can use a different uh zookeeper or Kafka broker which is totally fine so far you expose the same Properties or similar properties you should be good the the container name in this case as well is going to be broker it's going to be depending on the zookeeper in our case and on what condition is the dependence is going to be so the service he condition what that means is until this test returns and healthy condition this broker is not going to get started it's just going to be keep pending if

it fails after five retries this is not going to get created that's what the dependency means really uh in short term and then once the condition is there we have our ports and we're going to have the ports going to be 9092 for the internal external IP external 9101 as well for the I think this is the jmx uh stuff now we have a lot of environment in this case I'll just copy that because it's a lot I don't want to make mistakes and then we keep debugging that before getting into the actual activity all

right I'll just space that into the environment section in here but I'll just quickly go through them so we can explain what each of them do and uh how they function in this in real sense so the CFA broker in this case we're using one broker so the broker ID is broker one and it's going to be depending and connecting to zookeeper on zookeeper 2181 The Listener security protocol map is plain text and uh we're using plain text in this case I'm PL text is also going to be PL text the advertised listener is going

to be broker 2902 which is where is going to be accepting connection from or as well as 9092 all right so these are the two things that is going to be used to we can use to communicate with our broker the metric reporter is this for the Confluence metric rep I think this is majorly uh Confluence related so if you're using any other broker information as your Ser as your broker you may not need to set in set the Kafka metric reporter we also have the C uh offset topic replication factor is one you can

increase this if you have multiple replications uh you want to increase the number of replications but the rest are just uh uh they're just more like confident information in this case we have the transaction State as well then the jmx sport is 9101 the jmx host name as well is Local Host schema registry in this case we're not using it so it's really uh useless so you can remove this but I'll just leave it as it is but it doesn't really Hur if it's there now for the rest is uh this is confluent uh specific

if uh you're using confence you should add this as well this is the metrix reporter bootstrap server and the topic replica and metrics enable we're not enabling any metrics and even if any metric is going to be going out from broker it's going to be anonymous okay now let's put this on a network the network this is going to be sitting on is going to be net networks networks is going to be the master La good all right so continuing I'll just get this here data Master good now continuing we need to get the he

check as well so the Heth check for our broker is similar to what we have before in this case uh this guy I can just copy this and just modify the command really uh just maybe delete this press enter here and paste that in there so this is going to be a bash command as well but instead of are you okay okay I'm going to be using the NC and this is going to be ncz ncz Local Host uh B 9092 so what this is just like doing is more like a doing like a ping

of this 9092 to see if it is ready to accept connection I'll leave the interval and time out and the r r as well as the same now what this means basically is that our our zookeeper and our broker is ready and we can spin them up while we continue with our apach spark installation so let's see if everything is working fine before we continue to do that we do Docker compose Docker compose up and in detach mode now because we not building anything so if you if you have a Docker file and you're building

an image for that you will probably need to get in your Docker uh flag for build otherwise the build is not going to reflect okay but in this case we're just using the normal system we're not building anything so it's easy for us to just doer compose upd in this case all right so if you look at this we are creating the Smart City Network um zookeeper is created and our broker is started so if you look at our dashboard Smart City in this case is coming up I I don't want to click on that

I just want to click on this down and you can see we have a broker up and running and zooke keeper as well up and running so let's continue with our a parese spark setup okay so our we need to get in our spark Master work architecture so to do that we're going to have our spark Master now because the spark Master they are kind of related in some ways the only difference is uh everything is the same system really but you have a the class that is differentiating them as master and worker modly the

the properties are really the same so what we're going to do is we're going to have a small module that we can replicate across the master as well as the worker as well but we just specify where the master is going to be we have a class for that and for the workers as well that are going to be working uh for the master in this case is going to be uh inheriting the same thing from from this small module all right so let's quickly do that so in our Master we going to have image

the image is going to be bitnami Vietnam spark the latest that's the latest uh version doesn't really matter what it is so we are going to have our volumes the volumes is going to be getting information from uh which we don't have jobs right now but we're going to have a job uh which is where we're going to be putting all our spark jobs and we're going to be mapping this folder in our local system to op vietnami not Vietnam vietnami spark jobs all right now the command that I was talking about that is differentiating

the master from the work is going to be coming from bitnami being the spark class it's going to be og. Apache do spark. deoy mastermaster all right now finally the master is set up we just need to expose the port so let's expose the port for the master so in our case we're exposing exposing 9090 all right so let's say 9090 as our local and then in the uh in the container is 8080 all right so internal and external so we have 7077 as well because this is where our spark URL is going to be

sitting on so it's important we expose that as well finally we get in our networks the networks that this is going to be working on is uh data map simple as that good now as data M good now the next thing is to get in what am I doing okay that is fine now the next thing is to get in our worker our Master is fine so to do that let's get in a small module so we don't keep replicating the same thing over and over again so we have xar common in this case uh

this is uh a name that I chose for myself you can choose any name so far it's maybe relatable and you can easily uh relate to that so the variable for this when we want to reference this is going to be spark common all right so the image for this small module is going to be the same same thing as our Master B Nami spark latest all right and the volume we are mounting for each of the workers it's going to be the volumes it's going to be jobs the same way we have it for

in fact I just quickly copy that uh the volumes command and the ports uh I'll just maybe just copy this guy all right and then in the volumes replace that this is fine now for the workers this is going to be the workers so it's going to be different from Master it's not going to be master in this case it's going to be B Spar class um spark deploy worker in this case and worker then we have to pass in our spark URL the spark URL is going to be spark h spark spark master in

the port of 7077 all right so this is where our spark Master is sitting in and we're passing this so This knows that this is where the master is sitting in and when whenever you want to receive information you are receiving it from from there then the final thing is the depends on depends on of course the worker is going to be depending on the master of course so spark master all right now let's setting the environment that this each of the workers are going to be inheriting so we have the environment we have the

spark mode the spark mode is going to be Walker all right the spark uh worker core nodes which is the number of CES the number of CES for each of the worker so if you have enough compute you can increase this course that will be that will mean that your system will be way faster and each of the the workers will be more efficient but two is so fine you can have as many workers as possible all right so memory is going to be 1 gig now interestingly if you reduce this from 1 gig your

worker may not not work so I will recommend a minimum of 1 gig so you can try that out and see how that looks like and give me a feedback in the comment section so I'll just copy this smack Master URL as my sparkmaster URL finally we getting the networks and that's all we need to do networks okay the networks in this case is going to be data Mastery lab good finally we have a variable that we can use now so let's get in our workers so I just get in the first two work and

you can do that you can increase the number of workers if you want so I have spark uh spark worker one in this case is going to be inheriting from a steric spark common all right simple as that for our second worker we do the same thing but change the name of the worker so if you want to have more than two workers you can actually double copy that and then paste that and just change the name three and four all right so what I'm going to be doing I'm going to to start with this

four but I know this is going to give me some compute issues so I'll just comment this out for now all right so let's use this two and see how the system performs under stress let's stress this out all right Docker compose up the tach and takes that for his pin you can see that we have our spark Master created and Spark worker 2 and worker one as well created which is what we have in here which is good so interestingly if you go into the Local Host 7077 you should see something like this uh

we just wait a little while for the master to be ready I think it's still currently running uh what's this exception in connection so I think is still currently waiting something is too large in this case I think I'll just leave wait for this to to finish the initialization I think it's still starting up uh still starting up so we just wait a few more minutes for that to be done and while this is ongoing we just go along with our coding we come back to this much later to test if our spark master and

work architecture is up and running before we uh while we continue all right now interestingly uh even though we didn't create our jobs directory by the time we spin up our spark worker because we map it to our jobs directory we created the job for us and that's one thing you have to be careful about if you map it to a file and you didn't create the file before running it's going to create a folder for that particular file but in our case folder works just fine all right so let's get in our main py

into jobs that's where we need it anyways so and then we do another touch jobs uh jobs and inside our jobs we're going to create a new file which is going to be our spark I'll call this spark City really py so these two files are what we are going to be working with extensively so the main py is where we going to be doing the production and the spark city is where we're going to be writing our spark jobs to uh listen to events from Kafka now for our main. py we are going to

start by installing a few packages which is what we're going to be using so we do peep install we're going to be installing confluence simple Json and pypar so the ppar version we're using in this case is simp is the latest version of course simple Json Confluence CF so I'll just run this to get those uh packages installed and saved into my requirement. txt so if I do peep freeze requirement. txt what is going to do is populate the requirement. txt with this package so by the time we increase we install a new package we

need to add requirement. txt as well as part of the so we can export it to requirement. txt so for those of for those of you that don't know how requirement. txt gets created you do p phase then greater angle greater than angle uh greater than symbol requirement. txt so let's continue so I'm going to be importing OS of course importing confence Kafka uh from here I so from Confluence Kafka import serializing producer because we need to produce our record even though we are not doing that right now so we import simple Json as Json

as well and finally we import our P Spar which is we don't need it right now so let's leave that for now so let's get in the latitude and longitude for Birmingham and London so London coordinates in this case are coordinates it's going to be latitude of course this is a Json it's going to be Lati latitude of course the latitude is the 515 H this is something that I checked and looked up on the map so I don't know it often then we have the what am I doing yeah good so we have the

latitude then we have the longitude of course so minor 01 278 0.1278 okay good now that's for London so let's put this on the same line so to save some space and yeah we do the same for Birmingham as well all right all right the latitude for Birmingham you can look it up on Google of course 52 4862 all right then for the longitude is -89 04 so the logic we are applying to this is by the time we are I'm going to just apply a simplistic value in real sense you want to get the

actual figure that is going to be more sophistic uh sophisticated and it's going to be a representation of actual data right so in our case we're going to have latitude increment increment which is what we are increasing and uh of course the longitude increment as well so we have the Birmingham because from the bottom that's the south is going Northeast Okay so we have the the latitude from here latitude all right we are going to subtract that from the London London coordinate which is going to be the latitude as well latitude and while that is

ongoing we we divide this by 100 we do the same thing for the longitude as well which is going to be uh longitude and uh Birmingham coordinates uh I just uh long longitude longitude I'll just copy that and replace that with is good and that's how we need to do to do the increment the incremental for both latitude and uh the increment for both latitude and longitude all right so that's the process for that now let's get in our environment variable so let me just comment this out and say this is going to be uh

what we're doing basically is calcul calculate calculate the movement uh increment so this is uh where we are doing the calculating in the movement movement okay all right so in real sense you have to you know put the actual value there or get the actual value so the environment variables of course that we going to be using for configuration it's going to be coming in from either the OS or by default so we say Kafka bootstrap Kafka boot strap servers it's going to be you can get that from the os. getm so if that is

set up in the environment all well and good if not we can just default it to our bootstrap uh server okay bootstrap servers it's going to be Local Host 1992 then we get a vehicle topic which is where we are cering for this any information that is coming about the vehicle is going to be going into vehicle topic GPS topic camera topic weather topic emergency topic of course so vehicle topic it's going to be OS to get M get M and that will be V topic if that doesn't exist use vehicle data then for the

GPS use the GPS topic get M GPS topic then we have GPS data for the traffic as well traffic information traffic topic this is where this is just a majorly traffic information whether there's traffic on the road uh we get that as well or you get hold by the driver gets hold by the traffic right that will be going in there it's normally really traffic data then we have the weather so if there it is raining as always in London so we get information about the weather as well weather topic then we have uh weather

top with data finally we get the emergency which is the emergency topic so os. getet EnV and that would be emergency topic if that doesn't exist as well get that into emergency data lovely now once this is done the next thing is to now getting the actual logic I'll just minimize this for now and get started with that so we have our start time which is the time that is going to be the the the d The Traveler is going to be starting the driver all right so I I'll just stick that to the current

time which is the date time. now then the start location start uh location it's going to be the London Co London coordinates that's we just copy that simple as that so let's import our date time it looks like we don't have it so in our here we just say Import in fact from date time import date time there time okay so going back here we have our start location and the time we start so let's get in our different function that is going to be uh used so let's start with the entry point so if

the name if the name is going to be main if the name is main so we can start running from there so let's get in our producer config which is our Kafka information broker information producer config it's going to be uh this is how you you configure uh Confluence CFA by the way so you have bootstrap servers bootstrap do servers reallying then you have the CFA bootstrap server that we already created then if there's any error that will be our error call back we just do a Lambda function Lambda to print that the error for

Lambda is going to be just print print CFA error CFA error and the error is simply error so if you have your CF C maybe hosted somewhere else and uh you have most times if you something like a CFA uh confence confence environment on the CL confence called if you created your producer which is your broker in there you have username and password so you need to get in your sasl information which is the the the username and password for that and if you have a schema registry as well because conference Cloud uses schema registry

so use the schema registry in that case the username and password as well as the host information for the schema registry you put that in the producer config most times you want to put that inside a different configuration configuration file that you can maybe exclude from committing that from your GitHub or something like that so that's that's just a different case entirely but let's stick to this our use case in this case all right so going back to our code we have our producer config set up properly then we have our producer in this case

we brought in our serializing producer if you can still recall from Confluence CFA so we use the producer config to to tag into our cying producers a parameter all right so let's try and catch if there's any error we simulate the journey so let's simulate Journey which is going to be used with the producer and the vehicle we're going to be using is called V code with you code with you I see w y and then maybe one 2 3 all right interesting topic uh vehicle name all right so if there's an error we call

that accept so if you stop the the the journey yourself you say that's a through a keyboard interrupt may be contr C or something like that simulation ended by the user by the user otherwise except uh we have exception as e any other error just uh say an unexpected eror unexpected error or code or code if I can only type or code I think it's dou R isn't it yeah it is all right so let's put e into that and we should be good so let's create our first function called simulate Journey so this simulate

journey is where we're going to be having all information about the vehicle information and at the end of the day we're going to be producing data from there all right so we have a simulate Journey all right so getting the producer as well as the device ID uh which is the vehicle information really device ID so you can change the variable name if you like so while this is true uh what is true in real sense what the true in this case is the Val uh the the driver is still driving from London central the

central London to Birmingham all right so in our case we getting our vehicle data vehicle data which is going to be generate vehicle data how do we do that we use that with the device ID which is our device the the vehicle information so let's let's uh create this function because we don't have it right now so we have generate vehicle data and then getting uh because we just passing the device ID to that so it's easy for us to say we are creating uh a function that just passing device ID into that okay simple

as that here we get in a location which we don't have right now so let's get our simulated location so we say simulate vehicle movement so what this means is the first time the very first time the the driver starts and starts moving towards Northeast from the south what that means is each location is going to be increased or decreased in whatever whatever case heading towards Birmingham in our case so that's what that means when we are doing a simulation so each time we we get an information about our latitude and longitude of the driver

if there's any hold up or something like that on the road then we get the same latitude and longitude on the SP on in the same space for some for quite some time and we can build up our reports based on that to know what time they hold up appens and stuff like that so let's continue so the simulate vehicle movement uh what we want to do basically is to get the information about this vehicle uh which is going to be the latitude and longitude of course so we have death simulate vehicle movement so we

just let's declare this as Global the start location and then we start moving towards Birmingham all right move towards uh birming Birmingham all right so starts location in this case is going to be uh what is it latitude latitude all right then we keep increasing the latitude we the latitude in Latitude increment then for the longitude uh we keep increasing the longitude as longitude as well so each time the latitude and longit longitude gets increased so we just add some Randomness in this case uh let's add some Randomness uh to simulate the actual to simulate

actual road travel all right so it it doesn't mean we are just increasing by two or three every time or something like that all right so I just copy this again then the random is going to be coming from random uh we don't have random imported so import random import random So Random do uniform so we want the the value to be uniform between minus 0.005 and 0.5 the same thing for the longitude as well okay so it doesn't Spike up as it doesn't go too far above 0.5 okay So eventually we just return the

start location I hope you are following me with this kind of picture that I'm trying to paint to you as the as we are moving on we just increasing the latitude and longitude by the latitude increment which was the one that we subtract from the longitude from Birmingham to uh London central then we divide that by 100 we just increase that by by the time we are moving on and the randomness we had is just 0.5 not too big so we don't have a spike in the by the time we are moving on all right

so that's what we're doing here in the simulate vehicle movement basically and once we have our location now the next thing is just to return an object that represent our location so the object is going to be simp ID which is going to be uu ID okay do version 4 so let's import okay automatically imported so we have our device device ID in our case is going to be the device ID so let's get in the time stamp as well time stamp which is the current time at that point in time so let's get the

next time because we want to keep increasing the time as well a comma so we want to keep increasing the time and get getting the iso format for that so how do we do that let's implement this okay so I'll just copy that and I what is it above the simulate vehicle movement I'll just create a new what I'll just copy this and paste that in there so let's uh implement this function uh it's going to be Global uh Global and the start time all right so the start time is going to be increasing because

this is the time we we using to you know keep track of how long it's taking the driver to get to Birmingham time Delta time Delta in this case let's import that import that from date time of course so we just add a seconds the seconds is going to be random does Rand in uh between 30 to 60 uh basically what we're just doing is just uh the simulation of update update frequency really doesn't mean so much it's just the update frequency for the time all right then we just return the start time okay start

time okay so going back to our simulate Journey where is it here we get the iso format of that particular time because that's what we returning in this case okay then we go forward from here once we have our uh the time I just add a comma to that then we have the location the current location of the driver which is the location latitude of course and at the end of the day because we are using a twole all right so location for the longitude as well longitude so this will be encapsulate or enclosed in

a tup all right so we have the speed of travel which is going to be random do un form all right 10:40 so you shouldn't be too slow or too fast so if you are in Central London the maximum speed is about 20 so once you get out of Central London and you are heading up on the highway maybe M1 I think you can do up to 40 or more okay so the direction that we going basically I'll just uh you know put this as by default Northeast all right from our Central London of course

is nor East so what kind information are we getting most times this all all this information will be sent in from a iot device okay so the maker of the car is Toyota I think I prefer BMW really I don't want to use Toyota let's use BMW all right or Posh whatever so let's say BMW cclass uh maybe c500 if that if that kind car exist then the year is [Music] 2024 what the latest model all right the full type if there's any information about that I'll say I'll just call this hybrid really hybrid okay

good so that's enough about our car we can get all information from our iot device that is sending information to us but for now let's just let's just leave it at that if there's any other information you can add to that it's totally fine so I'll just I'll just leave it at that so once we get our vehicle data in this case it's easy for us to say let's print that and see how that looks like before we continue uh so we know whether there's any error that we need to fix and uh or or

something like that so print vehicle data and just break I just need a single record okay so let's run this in our terminal I'll just come to the terminal and then python jobs and main.py so let's see if we are able to get that we get the ID U ID device ID okay the time stamp is this the location is this the speed direction make model year full type good so that means uh everything up to this point is still very good so that's a good sign so let's continue the next thing is to handle

we've handled our vehicle information let's handle the GPS information as well so GPS data all right GPS data and will be collected from generate GPS data all right so what we need to do that is to get the device ID which is the car of course that we getting information for and then we get the time stamp because we don't want the time stamp to be too uh wide apart so we want to get a time stamp so each time we're getting information about the car we're getting all of this information at the same time

so there could be a delay which is fine but if not we should it shouldn't be too wide apart so we just say maybe every 1 minute delay really we handle that in our spark video spark session all right let's continue so our GPS data in here we are using the device ID and a Tim stamp so let's implement this guy all right so coming back to the uh generate GPS information in here we just create what am I yeah I think I just click on one usage in there so def I'll just copy that

and then get in the device ID all right the time stamp and of course our vehicle is private so the vehicle type it's going to be private okay so we're not using a public car of course bus takes a longer time okay so you have uu ID in our uu ID do version 4 then we get the device ID okay as simple as that device ID get a time stamp okay okay and then that will be the time stamp coming from our input our parameter really and then speed it's going to be random of course

we we don't need this to be too wide apart as well should be uniform between 0 to 45 maybe to 40 uh for the GPS yeah that's fine so of course this is going to be in kilometer per hour so shouldn't be too wide apart so comma then the direction is going to be of course East uh maybe maybe not East really I yes let me just leave it as Northeast really uh because that's where we're heading to anyways so the vehicle type I'm trying so much to make this uh look something like a real

time scenario how you can get all the information you can get really okay so that's our generate GPS data and if you go back down we get we have our GPS data in here so the next thing for us to do is generate our traffic camera information so we have our vehicle data we have GPS data let's generate traffic information as well so we come in here I'll say Dev generate traffic camera traffic camera data all right so we need a device ID the time stamp of course which is the current time and the camera

ID that we using to to because there might be multiple cameras so it might be that we getting information from multiple cameras so in our case we just stick to one camera but we can extend this and make it a little bit more sophisticated by having more cameras more vehicles and we can get more information from our system okay so U ID uh do version four then we have the device idid which is going to be coming from device ID the camera ID camera ID is going to be from here that's the camera that is

sending this information to us then we have the time stamp this is happening okay time stamp and this is usually happening uh at a particular time then we have a snapshot this is where it gets tricky all right interestingly I couldn't find any URL that can give us uh the actual camera footage that we can use but what we can do is get just getting our B 64 encoded in here encoded string encoded string so in a case where you have actual uh snapshot or actual screenshot of what is happening what you want to do

is maybe it's a a URL or you want to save it as a b 64 you can easily just use request or gu to do that and then code it in B 64 or you use the actual URL maybe saving to S3 bucket you just get the actual S3 bucket URL and stamp it into this place so that solves the problem for us so because we don't need any picture really we just get the snapshot information like that so let's get the traffic information into our journey as well we have the traffic uh that will

be traffic data isn't it traffic uh traffic dat I think traffic camera data sounds better really from a particular camera okay so we have our device ID we have the vehicle time stamp vehicle data and that the time stamp and the camera that is sending us this information we can give it any name so let's say uh [Music] camera one two 3 uh maybe uh I'll say Nikon uh I don't know Nikon camera uh Nikon cam one 2 3 right so that's the name of the camera it could be anything really so I just I

just that's what comes to mind all right so let's get into the weather data as well so right now we fixed this uh vehicle data GPS vehicle data is taken care of GPS is taking care of camera has just been taken care of we are left with weather and emergency okay okay weather data it's going to be generate uh where where data and in our case we getting device ID the vehicle data and extract the time stamp from that because we want that time stamp to be unique across okay to be the same thing for

all of the data vehicle data even if the data comes in L it's okay but we get the same data across board all right so let's create a function to do this okay so we can just scroll up to the top uh where is it up to the top yeah in here and just getting Dev um we have GPS Dam okay I can get it in here so Dev so I just wanted to look at in sequential device ID uh time stamp and the location so we just return just like we returned before I'll just

get in a uu ID device ID and the location Maybe uh location I think we we should probably add location to the camera information so we have the latitude and the longitude of the camera at what time is getting information to us so we have the location in here and that will be location so let's quickly fix that before we forget so our traffic cam is getting location for us so I'll just get this in and get in the location okay and we should be good good so let's get back to our weather so location

in this case is going to be the location that the data is coming in time stamp is going to be of course time stamp then let's get all this weather information about the area all right that the car is currently in we have the temperature with the the temp temperature will be shouldn't be that high so usually we have a uniform temperature from minus uh I'll say minus 5 to a maximum the maximum we've experienced in the in London to Birmingham maybe let's say 26 maximum all right it could be more but I'll just stick

to 26 really so let's say weather condition weather condition ah temperature not temperation okay you probably saw that so we have the random. choice for the weather condition okay weather random. choice and uh we have the sunny if it is cloudy if it is raining and or it's snowing I think we should probably add a seed to our random so we stick to that random. seed so let's say 42 as our seed all right so once you choose sunny it's always sunny across board so it doesn't change really unless maybe you get to somewhere like

Bermingham and it's maybe Sunny there and uh snowing in London in summer precipitation all right so if there's any drizzling or something like that okay or any precipitation across bone we have uh 0 to maybe 25 I don't know uh the wind speed most times is usually uh windy so let's get the wind speed as well random. uniform in real sense you want to get all of this from maybe a weather API I don't want to make things too complicated that's why I didn't use the weather API really humidity is going to be random do

random integer from 0 to 100 so it's okay the humidity is this is of course a percentage all right so just in case uh you're wondering then we have the aqi which is our air quality index a quality index aqi that would be for of course I I want it to be okay I think it should be uniform let's make it uniform across board unless you know the aqi in central London it's a little bit bad I wouldn't say bad maybe not too but when you move on the road the aqi gets better so I'll

just say uh the aqi value goes here okay so whatever aqi value you want to use uh you can just twg this to get your teste and uh to suit you best okay so finally we get our weather data um which is weather data in this case now let's see what else we need to do before we continue and that will be uh let's let's see I'll just go down I think I should just scroll this down a little bit here I'll just minimize this we have weather data finally we just getting our emergency emergency

incident data all right so that will give us access to generate Emer emergency incident data all right so we just pass in a ID maybe The UU ID which we don't need really you can just use that vehicle data get the time stamp time stamp and that will be the device ID as well device ID time stamp and the location that the emergency app on all right just like before just copy this okay and then we paste that in so let's create the function that help us do that here we have uh weather data so

and then we should get in our emergency uh function okay the function here then we have our incident ID uh I'll say device ID really device ID the time stamp time stamp uh I think this should be underscore so we keep it uh uniform across board time stamp and then location okay you just return a Jon object the ID is going to be of course in string U ID do uu ID version 4 then the incident ID um let's get in device ID first device ID is going to be the device ID then for the

incident inci incident uh ID so that will be a uu ID I want it to be unique across board so I'll use a uu ID for that as well then we have the type in our case the type of incident is going to be a random choice and that would be maybe we shouldn't have uh used the we shouldn't have used uh that random C because once it selects fire for instance it's going to be fire across sport but I think you get the logic really so it doesn't matter what it is so so far

there's a there's an incident and if there's none it's okay we good to go there's no incident okay so time stamp it will be the time stamp from our parameter good then the location is going to be location as well location then the status it's going to be random. choice and that will be between active or result okay what that means is if there's a a police incident if it is still active and you know how long it it is active depending on how long uh the number of records that Returns the active and the

time it takes between the start of the active to the end all right that will give us the to the result so that will give us information about that and if there's any description of course description will come in here most times you may not have this but I'll just say if you have a description of the incent you can put it in here description all right okay finally we are good to go so our emergency in data GPS traffic information all of this has been taken care of now so now let's fire this up

into CFA and see how that looks but before we do that let's test this data to see if everything is working fine before we continue all right so let's just print them I we say print uh vehicle data uh print GPS data print uh traffic camera data and then print weather data finally print emergency incident data good so let's see how that looks like in the terminal I'll clear this up and then run that again to see how that looks is there any error maybe not there's none so let's see what we have we have

the ID in here for the first one I think we checked this out it was pretty much fine let's double check this as well I code with you time stamp is the same across board the speed direction vehicle type is okay then we have this as well B 64 encoded string for our snapshot I think everything is good and we can start writing this to our Kafka all right let's do that all right we are leading to the end of this section so let's quickly get in our um producer and then we produce these data

that we're writing into cfap so let's do that so the easiest way to do that is to create a function that we can reuse for that so we can have produce to produce data to cka uh you can have the producer and then get in a vehicle topic and vehicle data of course then we can replicate this a couple of times about five times then we have the same done for GPS uh done for traffic traffic we do the same for weather we do the same for IM geny topic so we get this GPS uh

traffic we get in the weather and then we getting the emergency okay good now let's get this function that helps that handles all of this for us because right now nothing is being created to be frank with you and in our simulation here above just above this guy we can have our def definition and that will be produce data we have the producer we have the topic and the data we are producing so producer do produce that's uh the Syntax for that so we just get in the topic the key that we're going to be

using for the production is a uid so let's convert that to string otherwise we're going to get a a challenge in that case and the value is going to be deserialized okay uh to be serialized that would be json. Dums so let's serialize that and we seral in the data but if there's any issue this is where we need to handle an edge case the edge case is in the case where we have The UU ID not being passed correctly this is something that can cause a challenge really by the time you are running your

system so it is possible you have that error so if you don't handle this uh Edge case you might run into unable to produce data into CFA so to do that let's quickly change our data in this case um what so in this case let's just have our default serializer just in case there's any challenge fall back to this serializer and I'm going to just say this is a Json serializer and I'm going to encode this into a utf8 format and finally on delivery are get in a delivery report just to be sure that the

data gets delivery report so just to be sure the the data gets delivered uh to Kafka so let's get in these two functions all right the very first one is the uh the the Json serializer we de serialize we serialize any object so if this if the object if uh is uh instance okay if the object is a uu ID so we just get say uu uu uu ID do uu ID so if it is a type of uu ID we just do a return for that particular object that's a string simple as that all

we just have to do is handle this case all right so otherwise we're just going to raise an error a type error that we don't know this error okay uh raise the type error we are raising in this case is uh object of type something something is is unable object do class name dot name of course is not Jason seriz Ser serializable serializable okay Ah that's a mouthful okay so the final thing we need to do is the delivery reports so the delivery report is where uh By the time data gets sent to kfka we

get the delivery report that has been sent so if there's an error if not we get a message okay so if error is not known that means there's an error while sending so we say um message delivery fi and the reason for that is simply because of this error else we just print uh message delivered okay simple as that message delivered to a particular topic and we usually the topic comes in with the with the data really so message. topic gives us actually the me the message and we can actually get the partition that was

it was delivered to uh by default I think it's zero so if you have multiple partitions for your topic you should be good if should be easily and fairly straightforward to distribute that usually in rring uh but it should be good afterward so let's see the delivery Port is done we have message delivered to this topic and the the partition so let's run this and see if uh we just do once we do one data me to produce one data uh first and see if the data gets loaded to CFA so we do python job

of meain and uh nothing is showing here in the terminal is that it so let's see if we have data in the CFA topic I'll just go into the exact Tab and then we do CFA topics if the data gets sent to CFA the topics will be created so we have a boot bootstrap boot strap server it's going to be broker and 292 or 9092 so let's see if we able to list all of the topics so it is still not sent at this time so let's see why that is the case all right so

going back to our code we have our producer in here and we're breaking all right so let's see why that is the case so let's handle one Edge case in this case so because we haven't handled that if if the car or the vehicle gets to Birmingham what happens we need to stop the the loop so if the vehicle data reports for the location uh which is the latitude in this case is greater than or he cross to Birmingham coordinate for the latitude that means uh it's about to go past latitude of uh Birmingham and

the vehicle and yeah and vehicle data location for the longitude all right is less than or equals to Birmingham coordinates for the longitude longitude okay that means the the vehicle has reached Birmingham so we can just easily say print um V vehicle has reached Birmingham and uh let's end our simulation simulation ending and easily we just do break and that's this condition so once the latitude is greater than latitude of uh Birmingham and the longitude is less than or equals to that then of course it's because it's Northeast so the latitude will make more sense

to be greater in that case all right so finally Let's see we don't need this break let's just uh give it some time to sleep and then to um to produce so let's say every second I'm going to increase this a little bit but for now let's leave it at every seconds I'll say 5 Seconds we can reduce this much later but let's leave it at that okay so um finally let's run this uh once we produce data to CFA in here we are missing something we do producer producer the flush so the data can

of course get delivered as expected yeah and I think that's it so let's run it one time and before we we run the full processing in fact let's just I think the process should be good so let's run it and see if everything is working as expected Okay so vehicle delivered to message delivered to vehicle data GPS and traffic good weather and emergency data good so the next time I think everything should be getting delivered together good so let's see how that looks like if we list this again in our uh broker and the docker

desktop you can see we have uh five topics that is producing data and the data is inside them so let's look at the vehicle information in here so if I do CFA console consumer and I get in the topic the topic is this and um what I'm doing is to get the broker as well uh boot bootstrap server the bootstrap server is broker 9092 and I want to start from the beginning okay and uh yeah let's see if that is uh good and you can see the data is getting produced as expected lovely so we

are heading all to Birmingham so we just wait for this to keep continuing until it gets to birmingam and uh maybe we just stop it we delete everything again and we start from the scratch so now at this point at this point we have uh our data that is producing and the vehicle is moving on to Birmingham so interestingly we've not really handled the streaming part of the thing it's just the production now and if you look at our architecture on the in the system you look at the architecture right now all of this has

been taken care of we have our Kafka data set up now but we haven't set up our streaming consumer that is listening to CFA and writing to S3 that is exactly what we're going to be doing next in this video before we continue on to the consumer section so let's configure our AWS because we going to be needing that so I just logged into to my AWS account and uh this is my console home I have uh buckets that has been created called spark streaming data and I was thinking I would create a new bucket

uh for that but I since I already have a bucket that is empty so there's nothing new about that so you they can just create a a unique bucket for you and then you should be good now one other thing that I want to mention is a permission for this bucket so in the bucket if you click on the permissions tab the block up Public Access should be disabled so when you are cleating this bucket it should be disabled all right otherwise you will not be able to access this from outside systems okay and the

bucket policy in this case is just for me to allow all get and put all right so you can access the get request and put object into that that's the only thing that I added to that and this is the structure of that if you want to see that you can check the link in the description uh to get you to all of these codes and all that okay so let's continue so once this uh bucket is set up and then you've set you set up your bucket policy by clicking on the edit and you

know maybe copy pasting this or typing into anyone works fine but once you have your policy and you block all public uh you disable block or public access the uh bucket will show publicly accessible so that means it's easily accessible by anybody and anybody can upload that so far they know your credentials all right now once that is done I also want to mention the user that I'm using is the admin user so it has all the permissions that is required so in my case I just have I have the administrator access Amazon S3 full

access glue console full access uh these are the three access that I have right now and that should be more than enough for me to work with all right so let's go back to our uh code and then continue so because we're going to be needing all of this information by the time we we get to the access and security credentials and all that all right so now in my code you know we already have we already have a job in here so what I'm going to do in this job is create a file that

is going to be holding my AWS credential so I'm going to just touch jobs and then config.py now in this config.py is where I'm going to be having my awx access so I'll just go into the config and have configuration equals to this and I have awx access key which is going to be in here all right that will be here then we have AWS secret key all right then the secret key will also be here so I'll just uh just in the background don't forget if you want to get the AWS access key and

secret key you have to go to I am for that particular user you're generating for so you just come to or you click on this and then click on security credentials once you click on the security credentials it takes you to the dashboard just click on the user and you see the users that you have access to now the I am credentials in this case you just have to come to the security credentials here so if you want to click on the security credentials I'll be deleting this security credentials because of some reason it has

been exposed even though you don't know the key but it's fine I'll delete it once uh it's done so you have the the access key in here and uh because it's active right now so if you want to add another access key you click on create access key and because your application is going to be running outside of aw you click on this uh application running outside of aw so I'll just click on next and give this temporary uh uh a tag temporary key all right and then create access key then you have access to

the access key in in here so if I copy this I'll just use that as my access key uh that will be removed once the video is done so it's okay I'll just copy this as well for the SEC secret key I'll just paste that and we should be good now with this created I'll just close my config key and in uh in my spark City that I created earlier this is where we are going to be writing our SP uh Apache spark uh uh script okay so I I don't know if you can still

recall that when we did our soltion we did uh PE if you look at our requirement of txt I think we already have it in here we have P spark 3.5 I'm still skeptical about 3.5 let's proceed if we have any issues with 3.5 we're going to downgrade this to maybe 3.3 and then we proceed but for now let's continue and let's see if everything is going to work fine or not if not we're going to dowr the pack version all right so let's get in our SQL session from ppar from pyspark.sql import spark session

and um we're going to be needing another thing like data frame but let's stick to this for now and then let's get our Dev main uh this is the entry point uh which is uh the function that will be called I'll pass this for now and if the name if name uh equals uh no equals to main all right then we call the main function so that means we're calling the main function from the entry point yeah that that that sounds soble yeah that's what we're doing basically so in the past we have a spark

which is going to be inheriting or getting data from spark uh spark session and uh we have spark session. Builder so in our Builder we're going to have an app name uh in in this case the app name is going to be uh smart smart uh City uh streaming okay but before we do that before we continue continue I can I can recall that I mentioned to you that we going to be uh uh fixing whatever issues we have with our Master the other time so we have 7077 which is where we are submitting jobs

to but if you click on this for 9,000 uh 9090 this is our spark Master URL obviously the spark master has been running for some time now maybe it's just sleeping somewhere we have two workers that have been created and assigned to this automatically but you can add more workers like I mentioned so for now let's stick to that and we're going to leave it at that for now so going back to our code I just wanted to show you that so the the port that we're accessing is 9090 not 7077 because 7077 is our

spark Master URL where we are submitting our spark jobs into but 1990 is where the UI is where you can see all the running jobs the workers that are collected and the activities that are going on on your sparkk cluster so going back to the code I just thought to mention that all right so when we once we have our spark City uh streaming we're going to have our config uh the config is going to be uh the spark jars so for our streaming we're going to be needing the Kafka jar which you can get

from Maven repository Maven Maven repository I'll just click on that and um you can search in here for SQL SQL Kafka SQL Kafka if you search for SQL CFA you see something like this spark SQL Kafka and then if you come down uh we're using the 3.5 version so if you click on 3.5 you see the group ID in here so you can copy the group ID so I'll just copy the group ID in here okay group ID uh to see yeah let's finish this spark. jazz. packages all right and then I'll just get this

as a string then we have a full column to get in your spark name which is the artifact ID that you're working with so this is the artifact ID I'll copy that as well and paste it in there then we have another column for the version of the artifact ID so this is 3.5.0 I'll copy that as well and uh get that in here good so this is my spark jar I'll just minimize this pronoun uh I have my spark SQL so that means my sparkk can connect to Kafka with this jav file now if

you don't have this you will not have you will not be able to access uh uh spark with the ja okay so that's one the next thing that I want to add is the AWS so there's a package that allows us to connect from spark to AWS so it's going to be uh the one that we're going to be working with in this case so I'm going to have the this type I think about two of them but let's see if we can continue with that first so we have um in this case if you

go to MAV rep repository again and type for Ado AWS and search for that you'll see Ado AWS in this case if you click on Ado AWS and you click on the 3.31 version uh I'm tempting to use the latest version but I'll use this this one that has been tested and working fine even though there are some vulnerabilities here but you can just uh ignore them for now um I'll just copy this o do yeah this one I'll just copy that all right then the Ado AWS which is this one get that in uh

I get this in all right then finally I put another column for the version which is 3.31 so like I said I'm tempted to use the latest version but uh we'll see how that goes maybe at the end of the day we try to optimize this and see if you can use a newer version all right so uh those are the two packages one other package that we're going to be needing is coming from AWS itself AWS Java SDK so let's see how that looks like in here AWS Java SDK so in here it's coming

from AWS so I'm going to be needing this as well and I'm going to be selecting the 1.11 not the 1.12 version H that's a long one so I'll just come down in here and search for 1.11 469 yeah this is the one that I'll be using um so we can like I said we can try to upgrade this for now but I'll stick to these ones that have been tested and I don't want any surprises all right so I'll paste that and then get in my artifact ID which is coming from this guy AWS

Java SDK copy that and then put it in here another full column yep and then finally the version which is 11 1.11 469 all right good yep that should be good for us and then let's configure our our AWS configuration so we have this set up now the Jaz has been set up then we have our config which is the the one that tells adop and Spark how to connect to your S3 bucket so we are going to have this as spark. ad. fs. s3a implementation now the implementation is just the class that this is

going to be calling by the time it wants to do that which is Apache do. fs. s3a s3a file system good and um that's one the next thing is the access key and the secret key of course so we getting the config uh config then let's get in our config spark. addf S3 a. access. key yeah not J key yep good then we have the access key that is coming in is going to be coming in from config in here right so we have um from config import configuration isn't it then in our configuration here

we have configuration. getet and then we getting our AWS access key I hope that is clear though yeah all right I hope hope you are following me though yeah let's duplicate this and then get this access uh uh instead of access key we change this to secret key all right AWS uh secret key see secret key if I can only type secret K okay finally we getting our one final thing which is the AWS credential provider and that is the one that is going to help us uh use the main class that is going to

be uh B a provider in this case so spark. fs. s3. AWS credentials do provider all right now let's get in that guy in so it's here o do not original or. Apache adup ad. fs. S3 a. implementation then simple simple AWS a us cred credential provider isn't it y now that's all we need to do and that's all we have to uh provision in our case so finally we just press that and then get or create now this establish a relationship with um ad dup and with spark with the Jaz that we've just added

to the system good now let's proceed now let's use our spark so we adjust the log level so let's quickly adjust the log level in this case even though this is optional I'll just minimize this the log level that the log level uh this is just to minimize the console output executor on executor really uh to minimize the the console output on executors so just not you don't just uh print out everything in Vios mode so spark do spark context okay simple as that log level and uh we just set it to one and that's

all it's it's nothing too serious really now let's get in our vehicle schema uh if you can still recall we have our vehicle schema in here so I'll just put this on the side and we go into our vehicle schema in here how does it look like vehicle weather emergency vehicle uh no not this one yeah this is it this is our vehicle schema so I get in our vehicle schema from here so we have um vehicle schema so the vehicle schema is going to be vehicle schema is going to be a struct type so

struct type all right and in this struct type let's import that in from SQL types so we have the struct field uh struct field now let's import this as well from SQL do types as well uh sparql types we have the ID which is going to be a string type because a uu ID of course the nullable is going to be true by default is uh I think it's by default just uh like that so have that uh ID then I'm going to just duplicate this a couple of times and just change the value in

there so we have the device ID we have the time stamp time stamp we have the location we have the speed we have the direction we have the make make we have the model and uh duplicate this a little bit more we have the year and we have the F Type okay and uh we don't need the last one in here so for the device ID it's going to be string for time stamp is going to be time stamp time time stamp type isn't it yeah let's bring that in as well location is going to

be a string speed is going to be double yeah let's leave it a double type isn't it yeah then direction is string make is string model is string the year is also going to be integer type integer type so let's bring that in as well import that and import this as well and we should be good with the structure for the schema for vehicle so let's get in other uh structures as well uh we getting a GPS schema uh let's bring that in yeah we have a GPS GPS schema and the GPS schema GPS schema

is going to be the same thing that we did in here just copy that and uh we just uh look at the GPS where is the GPS uh GPS data so we have ID device ID time stamp we remove the location there's no location in here we have the speed we have the direction we have the vehicle type so let's get in that vehicle type and that will be all for our GPS schema then uh I think we still have more uh we have the traffic schema as well traffic schema the traffic schema is going

to be uh traffic schema it's going to be struct uh before that I'll just look at that let's see uh traffic schema we have ID device ID I'll just copy this really everything in here I'll just copy that and paste it in here we have the ID device ID camera ID Tim stamp location so let's get in that so there's no speed so let's uh this is camera ID and that will be a string type as well it's a string type all right time stamp is a time stamp type and we have the location so

the location is going to be location it's going to be a string type time stamp and snapshot snapshot sh good and that's our traffic schema so final thing oh maybe not final we have the weather data so we have uh wether uh weather schema all right we weather schema and this will be wether schema is going to be struct type of course I'll just copy that as well uh this a little bit long so I'll copy this one all right and uh in my weather schema I'll paste it in here we have ID device ID

location time stamp temperature uh temperature let's get that in temperature and uh we I'll just minimize this a little bit all right I reduce that maybe not minimize temperature we have the weather condition um this is also going to be random the choice and the weather condition is a string so uh we have weather condition is a string uh we have the precipitation I'll just copy that and uh it's a uniform so it's going to be a double so double type and we have wind speed all right humidity humidity and a quality index good and

the rest is going to be uh humidity is going to be an integer this is going to be a double because it's a random do uniform and then we have this as well okay good and uh that's start for our weather schema and finally if you are interested we can get in our emergency data as well I'll just uh do emergency emergency schema and then it has ID the device location and I just copy this I think it's about the same size copy that equals to no emeny schema is going to be this and we

have ID device ID in ID so incident ID incident ID we have the location and time stamp which is good and we just getting our snapshot here and uh snapshot is going to be type uh it's going to be a string uh time stamp is going to come in next location status and description so let's get those in uh status is going to be that and the description is going to be this and that will be all really I think it's just comma and we should be good everything is fine now perfect now we have

all our schemas I just get this back into shape all right now once this is done we need to read the data so because there are multiple schemas in here it doesn't make too much sense to keep duplicating the code every now and then so what what makes sense is to have a single function or a single function that helps us with just passing parameters so we can reuse that one of the important thing about coding is Dr don't repeat yourself so let's try to not repeat ourselves so much in here okay so let's go

back into our code and let's uh write that so I'm going to have in my in my uh under emergency schema we're going to have a vehicle vehicle data frame this is going to be the data frame that is reading data from that we're going to have a function called read cka topic and we passing the vehicle vehicle data and then we have the vehic schema and we have the areas for that as uh vle uh read Kafka topic so let's create this function we don't have this function implemented uh we can do that by

just um underneath here we can just say Def and getting our function here so this is going to be topic and the schema and uh what we're going to be returning in this case is going to be the spark which is our spark variable uh read uh stream because we are reading from cafka really uh so I'll just say I'll just put this in here so we can have do format the format is going to be Kafka and uh oh by the way if you are interested in this Kafka of a thing there are a

lot of videos on this channel that helps us to do that or you you can visit data.com and you see more videos that you can uh you know watch uh and get more information about that so we are subscribing to this and this will be the topic all right the topic that we're bringing in uh of course we can subscribe to multiple TOS but that's a discussion for another day and we have the starting off sets and we're going to be starting at the earliest of course yep starting at the earliest then once that is

done we do a load now the loading is going to load the data for us but what we want to do is just quickly change the selection so we don't have to deal with the value Because by the time data gets selected you're going to have a column uh so we just want to quickly fix that so let's do the select expression for that select expression one time uh we have cast and then we have the value as a string now put this as capital and um uh we don't need that we're inside a bracket

already so we say select uh from Json uh let's bring this in from Json and we're bringing in column uh extracting data from the value column and uh using this particular schema that we pass into that and the alas of that is going to be data simple as that really data okay and finally uh we have a selection from the data so do s select okay select data. asterics and then yeah let's bring in this guys all right let's bring in uh from Json which is good Y and we get in our column from as