The math behind Attention: Keys, Queries, and Values matrices

260.91k views6658 WordsCopy TextShare

Serrano.Academy

This is the second of a series of 3 videos where we demystify Transformer models and explain them wi...

Video Transcript:

[Music] hello my name is Louis Serrano and in this video you're going to learn about the math behind attention mechanisms in large language models attention mechanisms are really important in large language models as a matter of fact they're one of the key steps that make Transformers work really well now in a previous video I showed you how attention Works in very high level with words flying towards each other and gravitating towards other words in order for the model to understand context in this video we're going to do an example in much more detail with all the math involved now as I mentioned before the concept of a transformer and the attention mechanism were introduced in this groundbreaking paper called attention is all you need now this is a series of three videos in the first video I showed you what attention mechanisms are in high level in this video I'm going to do it with math and in the third video that is upcoming I will put them all together and show you how a Transformer model works so in this video in particular you're going to learn about some concept similarity between words and pieces of text is one of the concepts one way to do this is with DOT product and another one with cosine similarity so we learn both and next you're going to learn what the key query and value matrices are as linear Transformations and how they have an involvement in the attention mechanism [Music] so let's do a quick review of the first video first we have embeddings and embeddings are a way to put words or longer pieces of text in this case it's the plane but in reality you put it in a high dimensional space in such a way that words that are similar get sent to points that are close so for example these are fruits there's a strawberry an orange a banana and a cherry and they are all in the top corner of the image because they're similar words so they get sent to similar points and then over here we have a bunch of Brands we have Microsoft we have Android then we also have a laptop and a phone so it's the technology corner and then the question we had in the previous video is where would you put the word apple and that's complicated because it's both a technology brand and also a fruit so we wouldn't know in particular let's take a look at this in the oranges on the top right and the phone is in the bottom left where would you put an apple well then you need to look at context so if you have a sentence like please buy an apple and an orange then you know you're talking about the fruit if you have a sentence like apple unveiled a new phone then you know you're talking about the technology brand so therefore this word needs to be given context and the way it's given context is by the neighboring words in particular the word orange is the one that helps us here so what we do is that we look at where orange is and then move the Apple in that direction and then we're gonna use those new coordinates instead of the old ones for the app so then now the apple is closer to the fruits so it knows more about its context given the other words the word orange in the sentence now for the second sentence the word that gives us the clue that we're talking about a technology brand is the word phone therefore what we do is we move towards the word phone and then we use those coordinates in the embedding so that apple in the second sentence knows that is more of a technology word because it's closer to reward phone now another thing we saw in the previous video is that not just the word orange is going to pull the Apple but all of the other words are going to pull the apple and how does this happen well this happens with gravity or actually something very similar to gravity words that are closed like apple and orange have a strong gravitational pull so they move towards each other on the other hand the other words don't have a strong gravitational pull because they're far away actually they're not far away but they're dissimilar so we can think of distance as a metric but in a minute I will tell you exactly what I'm talking about here but we can think of the words as being far away and as I said words that are closed get pulled together and words are far away get old but not very much and so after one gravitational step then the word apple and orange are much closer and the other words in the sentence well they may move closer but not that much and what happens is that context pulls so if I've been talking about fruits for a while and I said banana strawberry lemon blueberry and orange and then I say the word Apple then you would imagine that I'm talking about a fruit and what happens here in space is that we have a galaxy of fruit words somewhere and they have a strong pool so when the word Apple comes in it gets pulled by this Galaxy and therefore now the Apple knows that it's a fruit and not a technology brand now remember that I told you that words are far away but that's not really true in reality what we need to do is that the concept of similarity [Music] so what is similarity well as humans we have an idea of words being similar to each other's or dissimilar and that's exactly what similarity measures so before we saw for example that the words cherry and orange are similar and there different than the word phone and we kind of had the impression that there's a measure of distance like cherry and orange are closed so they have a small distance in between and Cherry and phone are far so they have a large distance but as I mentioned what we want is the opposite image of similarity which is high when the words are similar and low when the words are different so next I'm going to show you three ways to measure similarity that are actually very similar at the end the first one is called dot product so imagine that you have these three words over here Cherry orange and phone and as we saw before the axis in the embedding actually mean something it could be something tangible for humans or maybe something that the computer just knows and we don't but let's say that the axes here measure check for the horizontal axis and fruitness for the vertical axis so the cherry on the in the orange have high fruitness and low Tech that's why they're located in the top left and the phone has high tech and low furnace that's why it's located in in the bottom right now we need a measure that is high for these two numbers cherry and orange so the measure of similarity is going to be the phone we look at their coordinates 1 4 for cherry and zero three four orange and remember that one of them is the amount of tech and the other one is the amount of fruitness now if these words are similar we would imagine that they have similar amounts of tech and similar amounts of fruitness in particular they have both have low Tech so therefore if we multiply these two numbers it should be a low number that's one times zero but they both have high fructose so if we multiply those two numbers four times three we get a high number and when we add them together that's the product of the tech and the product of the fruitness we get a high number which is 12. now let's do the same thing for cherry and phone so the similarity should be a small number let's see between 1 4 and 3 0 what is the dot product well it's one times three the products of the tech values plus four times zero the problem of fruitness values and that's one times three plus four times zero which is a small number which is three notice that the reason is because if uh one of the words has low Tech the other one has high tech and one of them has low fruitness down and has high fruitness so we're not going to get very high by multiplying them and adding and the extreme case is orange foam this Orange phone the coordinates are zero three and three zero so when we multiply 0 times 3 we get zero plus three times zero equals zero and so we get zero notice that these two words are actually perpendicular with respect to the origin and when two words are perpendicular they're always gonna have dot product equals zero so dot product is our first measure of similarity it's High when the words are similar or closing the embedding and low when the words are far away and notice that it could be negative as well the second measure of similar is called cosine similarity and it looks very different from that product but they're actually very similar what we do here is we use an angle so let's calculate the cosine similarity between orange and Cherry we look at the angle that the two vectors made when they are traced from the origin this angle is 14. if you are not calculated it's actually the arctangent of one quarter and here it is so that number is 0.

97 that means that the similarity between Cherry and orange the cosine similarity is 0. 97 now let's calculate the one between Cherry and phone that angle is 76 because it's the arc 10 of 4 divided by one and that number is 0. 24 so the similarity between Cherry and orange is 0.

24 which is much lower than 0. 97 and finally guess what that third one is going to be the similarity to an orange and phone is going to be the cosine of this angle which is 90 and that is again zero so the similarity of zero cosine similarities since it's a cosine it is between 1 and -1 so one is for words that are very similar and then zero and negative numbers are for words that are very different now I told you that dot product similarity are very similar but they don't look that similar why are they so similar well because they're the same thing if the vectors have length one more specifically if I were to draw a unit circle around the origin and I take every point and I draw a line from the center to the point and put the word where the line meets the circle that means I scale everything down so that all the vectors have length one then cosine similarity and the dot product are the exact same thing so basically all my vectors have Norm 1 then cosine similarity and Dot product are the same thing so at the end of the day what we are saying is that dot product and cosine similarity are the same up to a scalar and the scalar is the product of the two lengths of the vector so if I take the dot product and divide it by the parts of the lengths of the vectors then I get the cosine similarity now there is a third one called scale dot product which as you can imagine it's another multiple of the dot product and that's actually the one that gets used in attention so let me show you quickly what it is it is the dot product just like before so here we get 12 except that now we're divided by the square root of the length of the vector and the length of the vector is 2 because these vectors have two coordinates and so we get 8. 49 for the first one for the second one we had a 3 divided by root 2 is 2.

12 and for the third one well we had a zero and that divided by root 2 is also 0. now now the question is why are we dividing by this square root of 2 well that is because when you have very long vectors for example with a thousand coordinates you get really really large dot products and you don't want that you want to manage these numbers you want these numbers to be small so that way you divide by the square root of the length of the vectors [Music] now as I mentioned before the one we use for attention is the scale dot product but for this example just to have nice numbers we're going to do it with cosine similarity but at the end of the day remember that everything gets scaled by the same number so let's look at an example we have the sentences an apple and orange and an Apple phone and let's calculate some similarity so first let's look at some coordinates let's say that orange is in position 0 3 phone is in position for zero and this ambiguous apple is in position two two now embeddings don't only have two Dimensions they have many many so to make it more realistic let's say we have three dimensions so there's an other dimension here but all the words we have are at zero over that Dimension so they're in that flat plane by the wall however the sentences have more words the words and an end so let's say that and an N are over here at coordinate 0 0 2 and 0 0 3. now let's calculate all the similarities between all these words that's going to be this table here the first easy step to notice is that the cosine similarity between every word and itself is one why well because every angle between every word in itself is zero and the cosine is zero is one so all of them are one now let's calculate the similarity between orange and phone we already saw that this is zero because the angle is 90.

now let's do between orange and apple angle is 45 same thing between Apple and phone and the cosine of 45 degrees is 0. 71 finally let's look at phone and and or actually any word between orange apple and phone makes an angle of 90 degrees with and an N so all these numbers are actually zero and finally between and and the angle is zero so therefore the cosine is one so this is our entire table of similarities between the words and we're going to use this table similarity to move the words around that's the attention step so let's look at this table but only for the words in the sentence an apple and an orange we have the words orange capital and an n and we're going to move them around so we're going to take them and change their coordinates slightly each of these words would be sent to a combination of itself and all the other words and the combination is given by the rows of this table so more specifically let's look at Orange Orange would go to one times orange which is this coordinate over here plus 0. 71 times Apple which is this coordinate right here plus 0 times n plus zero times n which means nothing else now let's look at where Apple goes Apple goes to 0.

71 times orange plus one times apple plus 0 times n plus zero times n now let's look at n and n they go to zero times orange plus zero times Apple plus 1 times and plus one times n and the same thing happens with the word and goes to zero times orange plus zero times apple plus 1 times n plus one times n so basically what we did is we took each of the words and sent it to a combination of the other words so now Orange has a little bit of Apple in it and apple has a little bit of orange in it etc etc so we're just moving words around and later I'm going to show you graphically what this means now let's also do it for the other sentence an Apple phone this one I'm going to do it a little faster phone goes to one times phone plus 0. 71 times apple plus 0 times n Apple goes to 0. 71 Times phone plus one times apple plus 0 times n and n goes to 1 times n so itself however there are some technicalities I need to tell you first of all let's look at the word orange it goes to one times orange plus 0.

71 times Apple but these are Big Numbers imagine doing this many times I end up being sent to 500 times orange plus 400 times Apple I don't want to have these big numbers I want to be scaling and everything down so in particular I want these coefficients to always add to one so that no matter how many Transformations I make I'm gonna end up with some percentage of orange some percentage of apple and maybe percentages of other words but I don't want these to blow up so in order for the coefficients to add to one I would divide by their sum 1 plus 0. 71 so I get 0. 58 orange plus 0.

42 times Apple so that process is called normalization however there's a small problem can you see it well what happens is this let's say that I have orange goes to 1 times orange minus one times motorcycle because remember that cosine distance can be a negative number so if I want these coefficients to add to 1 I would divide by their sum which is 1 minus one and dividing by zero is a terrible thing to do never ever ever divide by zero so how do I solve this problem well I would like these coefficients to always be positive find a way to take these coefficients and turn them into something positive I'm good however I still want to respect the order one is a lot bigger than minus one so I want the coefficient that 1 becomes to be still a lot bigger than the coefficient that minus one becomes so what is the solution well a common solution here is instead of taking a coefficient X take the coefficient e to the X so raise e to the all the numbers you see here and what do we get well if I take every number and turn it into e 2 to that number then I have e to the 1 times orange plus e to the 0. 71 times Apple divided by e to the 1 plus 0. 71 the numbers change slightly now there's 0.

57 and 0. 43 but what happens in the bottom one well one becomes e to the one negative 1 becomes e to the minus 1 and now I add them and the bottom becomes e to the one plus e to the minus 1 and that becomes 0. 88 orange plus 0.

12 motorcycle so we effectively turned the numbers into positive ones respecting their order so we do this step for the coefficients to always add to one this step is called Soft Max and it's a very very popular function in machine learning so now when we go to the tables and how those tables created these new words then we can change the numbers to the soft Mach numbers and get these actual new numbers so this is what's going to tell us how the words are going to move around but actually before I show you this geometrically I have to admit that I've been lying to you because softmax doesn't turn a zero into a zero in fact these four numbers get sent to e to the one e to the 0. 71 e to the 0 and e to the zero and e to the zero is one so this combination of words when you normalize it it actually becomes 0. 4 orange plus 0.

3 apple plus 0. 15 and plus 0. 15 and so those hand and end are not that hard to get rid of but as you may imagine in real life they will have such small coefficients that they're pretty much negligible but at the end of the day you have to consider all the numbers when you do softmax but let's go back to pretending that and and are not important in other words let's go back to the original equations Apple goes to 0.

43 Orange plus 0. 57 of apple and apple goes to 0. 43 phone plus 0.

57 of Apple so if we forget about the words and the end let's actually go back to the plane here where the three words are nicely fit in the plane so let's look at the equations again Apple going to 43 of orange plus 57 of Apple really means that we're taking 43 of the apple and turning it into orange geometrically this means that we're taking the line from Apple to Orange and moving the word Apple 43 along the way that gives us the new coordinates 1. 14 and 2. 43 for the second sentence we do the same thing we take 43 away from the apple and turn it into the word phone that means we trace this line from Apple to phone and locate the new Apple 43 along the way that means it's in the coordinates 2.

86 and 1. 14 so what does this mean that means that when we're gonna talk about the first sentence we're not going to use the coordinates 2 2 for Apple we're going to use the coordinates 1. 14 and 2.

43 and we're in the second sentence we're not going to to use the coordinates 2 2 we're going to use the coordinates 2. 86 and 1. 14 so now we have better coordinates because these new coordinates are closer to the coordinates of either orange or phone depending on which sentence the word Apple appears and therefore we have a better version of the word Apple now this is not much but imagine doing this many times in a Transformer the attention step is applied many times so if you apply it many times at the end the words are gonna end up much much closer to what is dictating the context in that piece of text and in a nutshell that is what attention is doing [Music] so now we're ready to learn the keys queries and values and matrices if you look at the original diagrams for scale.

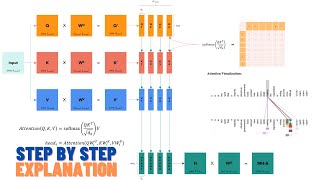

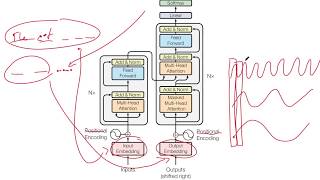

producted tension on the left and multi-headed tension on the right they contain these KQ and V those are the key query and value matrices that we're going to denote by Keys queries and values actually let's first learn keys and queries and we're going to learn values later in this video so let me show you how I like to see keys and queries matrices now recall from previously in this video that when you want to do the attention step you take the embedding and then you move these ambiguous Apple towards the phone or towards the orange depending on the context of the sentence now in the previous video we learned what a linear transformation is is basically a matrix that you multiply all the vectors by and you can get something like this another embedding or maybe something like this a good way to imagine linear Transformations is send that squared to any parallelogram and then the plane follows because the square tessellates the plane so therefore you just continue tessellating the plane impression parallelograms and you get a transformation from the plane to the plane so these two examples over here are linear transformations of the original embedding now let me ask you a question out of these three which one is the best one for applying the attention step and which one is the worst one and which one is so so feel free to pause the video and think about it and I'll tell you the answer well the first one is so-so because when you apply attention it kind of separates the fruit apple from the technology Apple but not so much the second one is awful because you apply the attention step and it doesn't really separate the two words so this one's really bad it doesn't really add much information and the third one is great because it's really spaces out the phone and the orange and therefore it separates the technology apple and the fruit apple very well so this one's the best one and the point of the keys and queries Matrix is going to help us find really good embeddings where we can do attention and get a lot of good information now how do they do it well through linear Transformations but let me be more specific remember that attention was done by calculating the similarity now let's look at how we did it let's say you have the vector for orange and the vector for phone in this example they have three coordinates but they could have as many as we want and we want to find the similarity so the similarity is the dot product it actually was the scale dot product or the cosine distance but at the end of the day it's the same up to a scalar so we're just going to take them all similarly and the dot product can be seen as the product of the first one times the transpose of the second one is a matrix product and as I said before if we don't care so much about scaling we can think of it as the cosine distance in that particular embedding now how do we get a new embedding well this is where the keys and queries matrices come in when we look at the keys and queries Matrix what they do is they modify the embeddings so instead of taking the vector for orange we take the vector for range times the keys matrices and instead of taking the vector for phone we take the vector phone times the queries Matrix and we get new embeddings and when we want to calculate the similarity then it's the same thing it's a product of the first one times the transpose of the second one which is the same as the transpose of queries times the transpose of phone and this over here is a matrix that defines a linear transformation and that's the linear transformation that takes this embedding into this one over here so the keys and queries Matrix work together to create a linear transformation that will improve our embedding to be able to do a tension pattern therefore what we're doing is that we're modifying the similarity in one embedding and taking the similarity on a different metric and we're gonna make sure that this is a better one actually we're going to calculate lots of them and find the best ones but that's coming a little later but imagine keys and queries matrices as a way to transform our embedding into one that is better suited for this attention problem and now that you've learned what the keys and queries matrices are let me show you what the values Matrix is recall that the keys and queries Matrix actually turn the embedding into one that is best for calculating similarities however here's the thing that embedding on the left is not where you want to move the words you only want it for calculating similarities let's say that there's an ideal embedding for moving the words and it's the one over here so what do we do well using the similarities we found on the left embedding we're gonna move the words on the right embedding and why is that the case well what happens is that the embedding on the left is actually optimized for finding similarities whereas the embedding on the right is optimized for finding the next word in a sentence why is this because a Transformer what it does and we're going to learn this in the next video but a Transformer finds the next word in a sentence and it continues finding the next word until it builds long pieces of text so if anyone you want to move the words around is one that's optimized for finding the next word and recall that the embedding on the left is found by the keys and queries matrices and the embedding on the right is found by the values matrices and what are the values Matrix do well it's the one that takes the embedding on the left and multiplies every Vector to get the embedding on the right so when you take the embedding on the left multiplied by The Matrix V you get another transformation because you can concatenate these linear Transformations and you get the linear transformation that corresponds to the embedding on the right now why is it that the embedding on the left is the best one for finding similarities well this one is one that knows the features of the words for example it would be able to pick up the color of a fruit to the size the amount of fruitness the flavor the technology that is in in the phone etc etc is the one that captures features on the words whereas the embedding for finding the next word is one that knows when two words could appear in the same context so for example if the sentences I want to buy a blank the next word could be car could be apple could be phone in the embedding on the right all those words are close by because the embedding on the right is good for finding the next word in a sentence so recall that the keys and queries matrices can capture the high level and the low level granular features of the words embedding on the right doesn't have that it's optimized for the next word in a sentence and that's how the key query and values Matrix actually give you the best embeddings to apply attention in now just to show you a little bit of the math that happens in the value Matrix imagine that these are your similarities that you found after the soft Max function then that means Apple goes to 0. 3 orange plus 0. 4 apple plus 0.

15 and plus Point 15 and that's given the second row of the table and when you multiply this matrix by the value Matrix then you get some other embedding like that now everything here is something 4 it could be length completely different because the value Matrix doesn't need to be a square it can be a rectangle and then the second row tells us that instead Apple should go to v21 Times orange plus V2 times apple plus V2 3 times n plus V2 4 times n so that's how the value Matrix comes in and transform the first embedding into another one well that was a lot of stuff so let's do a little summary on the left you have the diagram for scale dot product extension and the formula so let's break it down step by step first you have this step over here where you multiply K and Q transpose what is that well that is the dot product and you're dividing by the square root of DK DK is the length of each of the vectors remember that this is called scale dot product so what we're doing here is finding the similarities between the words I'm going to denote the similarities by angles with cosine distance but you know that I'm talking about scale dot product instead so now that we found the similarities we move to the step over here with the soft Max which is the one where we figure out where to move the words in particular the technology Apple moves towards the phone and the fruit apple moves towards the orange but we're not going to make the movements on this embedding because this embedding is not optimal for that this embedding is optimal for finding similarities so we're gonna use the values Matrix to turn this embedding into a better one V is acting as a linear transformation that transforms the left embedding into the embedding on the right and in the embedding on the right is where we move the words because this embedding over here is optimized for the function of the Transformer which is finding the next word in a sentence so that is self-attention now what's multi-head attention well it's very similar except you used many heads and by many heads I mean many key and query and value matrices here we're going to show three but you could use eight you could use 12 you could use many more and the more you use the better obviously the more use the more computing power you need but the more you use the more likely you'll be finding some pretty good ones so this 3K and Q Matrix as we saw before they form three embeddings where you can apply attention now K and Q are the ones that help us find the embedities where you find the similarities between the words now we also have a bunch of value matrices as many as key and query Matrix is always the same number and just like before these value matrices transform these embeddings where we find similarities into embeddings where we can move the words around now here is the magic step how do we know which ones are good and which ones are bad well right now we don't we concatenate them first what is concatenating means well if I have a table of two columns and another table of two columns and another table is two columns when I concatenate them I get a table of six columns geometrically that means if I have let's say an embedding of two dimensions and another one and another one I concatenate them then I get an embedding of Six Dimensions now I can't draw in Six Dimensions but imagine that this thing over here is a really high dimensional embedding of Six Dimensions so something with six axis in real life if you have a lot of big embeddings you end up with a very high dimensional embedding which is not optimal so that's why we have this linear step over here the linear step over here is a rectangular Matrix that is going to transform this into a lower dimensional embedding that we can manage but there's more this Matrix over here learns which embeddings are good and which embeddings are bad so for example the best embedding for finding the similarities was the third one so this one gets scaled up and the worst embedding was the one in the middle so this one gets scaled down so this Matrix over here this linear step actually does a lot and now if we know what matrices are better than others and the linear step actually scales those well and scales the bad ones by a small amount then we end up with a really good embedding and so we end up doing attention in a pretty optimal embedding which is exactly what we want now I've done a lot of magic here because I haven't really told you how to find this key query and value matrices I mean they seem to do great jobs but finding them is probably not easy well that's something we're gonna see more in the next video but the idea is that this key query and value Matrix get trained with the Transformer model here's a Transformer model and you can see that multi-headed tension appears several times I like to simplify this diagram into this diagram over here where you have several steps tokenization embedding positional encoding then a feed forward and an attention part that repeats several times and each of the blocks has an attention block so in other words imagine training a humongous neural network and a neural network has inside a bunch of key aquarium value matrices that get trained as the neural network gets trained to guess the next word but I'm getting into the next video this is what we're gonna learn on the third video of this series so again this was the second one attention mechanisms math the first one had a high level idea of attention and the third one is going to be in Transformer models so stay tuned when that is out I will put a link in the comments so That's all folks congratulations for getting until the end this this was a bit of a complicated video but I hope that the pictorial examples were helpful now time for some acknowledgments I would have not even able to make this video if not for my friend and colleague who is a genius and knows a lot about attention and Transformers and he actually helped me go over these examples and help me form these images so thank you Joel and some more acknowledgments Jay Alamar was also tremendously helpful in me understanding Transformers and attention we had long conversations where he explained this to me several times and my friend Omar as well Omar Flores was very helpful too I actually have a podcast where I ask him questions about Transformers for about an hour and I learned a lot from this podcast it's in Spanish but if you do speak Spanish check it out what link is in the comments and it's also in my Spanish YouTube channel serrano. academy and if you like this material definitely check out llm. university it's a course I've been doing at cohere with my very knowledgeable colleagues me or Amir and Jay Alamar the same J as before this is a very comprehensive course and it's taught in a very simple language it talks about all the stuff in this video including embedding similarity Transformers attention also it has a lot of labs where you can do semantic search you can do prompt engineering many other topics and so it's very Hands-On and it also teaches you how to deploy models basically it's a zero to 100 course on llms and I recommend you to check llm.

university and finally if you want to follow me well please subscribe to the channel and hit like or put a comment I love reading the comments it's serrano. academy you've also tweeted me at serrano.

Related Videos

44:26

What are Transformer Models and how do the...

Serrano.Academy

127,572 views

21:02

The Attention Mechanism in Large Language ...

Serrano.Academy

102,022 views

1:56:20

Let's build GPT: from scratch, in code, sp...

Andrej Karpathy

4,889,823 views

2:07:38

Unlocking Artificial Minds - Coordination

Cognitive Architectures

166 views

58:04

Attention is all you need (Transformer) - ...

Umar Jamil

417,120 views

26:10

Attention in transformers, visually explai...

3Blue1Brown

1,830,110 views

1:02:50

MIT 6.S191 (2023): Recurrent Neural Networ...

Alexander Amini

677,248 views

1:27:05

Transformer论文逐段精读

跟李沐学AI

420,249 views

25:28

Watching Neural Networks Learn

Emergent Garden

1,393,176 views

57:10

Pytorch Transformers from Scratch (Attenti...

Aladdin Persson

316,804 views

27:14

Transformers (how LLMs work) explained vis...

3Blue1Brown

3,702,603 views

18:21

Query, Key and Value Matrix for Attention ...

Machine Learning Courses

9,373 views

![[ 100k Special ] Transformers: Zero to Hero](https://img.youtube.com/vi/rPFkX5fJdRY/mqdefault.jpg)

3:34:41

[ 100k Special ] Transformers: Zero to Hero

CodeEmporium

51,593 views

1:01:31

MIT 6.S191: Recurrent Neural Networks, Tra...

Alexander Amini

196,498 views

![BERT explained: Training, Inference, BERT vs GPT/LLamA, Fine tuning, [CLS] token](https://img.youtube.com/vi/90mGPxR2GgY/mqdefault.jpg)

54:52

BERT explained: Training, Inference, BERT...

Umar Jamil

44,915 views

38:24

Proximal Policy Optimization (PPO) - How t...

Serrano.Academy

29,411 views

36:15

Transformer Neural Networks, ChatGPT's fou...

StatQuest with Josh Starmer

743,091 views

2:59:24

Coding a Transformer from scratch on PyTor...

Umar Jamil

205,301 views

27:07

Attention Is All You Need

Yannic Kilcher

651,461 views