Unknown

Video Transcript:

Good morning, everyone, and thank you for joining us today. We will continue our exploration of the European Union Artificial Intelligence Act, also known as the AI Act. I am Marion Ho-Dac, law professor at University [inaudible], France, and I have the great pleasure of organizing this webinar with my colleague Arnaud Latil from Sorbonne University. He moderated the first webinar, on the architecture of the AI Act, one month ago, and you can watch the replay of that webinar on the European Commission's YouTube channel. We are broadcasting today from the Directorate-General CONNECT, which is in charge of digital policies within the European Commission in Brussels. DG CONNECT hosts the EU AI Office, and I have the great pleasure of welcoming members of the AI Office with us today to explore the AI Act. They have a strong background and expertise in AI governance and regulation, in the EU and beyond.

Yordanka Ivanova, welcome. You are head of sector for legal oversight of the AI Act implementation in the Regulation and Compliance Unit, and you have been involved in the AI Act negotiations from the start. Then we have with us Friederike Grosse-Holz. You are a technology specialist in the AI Safety Unit, and I heard you have freshly arrived at the AI Office to work in this new unit. And finally we have Antoine-Alexandre André. You are a legal and policy officer in the AI Innovation and Policy Coordination Unit, and you have been working on the AI Act notably from a standardization and implementation angle. Thank you very much for being with us today.

I will briefly mention that this webinar is part of the AI Pact, an initiative launched by the European
Commission in May 2024 to engage directly with stakeholders and to support them in implementing the AI Act.

Today we will focus on three main topics. First, we will discuss the obligations for providers and deployers that apply from February 2025. Second, we will analyze the regulation of general-purpose AI models. And finally, we will discuss the requirements for high-risk AI systems and the related standardization process. We really aim to make today's agenda interactive, so after each of the three parts of this webinar we will take questions from the audience. For that we are using the Slido application: you should already see a QR code on your screen, so feel free to connect to Slido and participate.

Let us start with the AI Act obligations. The AI Act is being implemented gradually, depending on the provision, so maybe, Yordanka, you could provide us with a general overview of the AI Act implementation timeline.

Thank you very much. Indeed, the AI Act is going to become applicable in a staged approach, so we
give providers and deployers of AI systems enough time to adapt gradually to the relevant provisions. The general date of application of the AI Act is 2 August 2026, when the whole regulation will start to apply. But certain provisions apply earlier. The first ones are the prohibitions and the so-called AI literacy obligation, under which providers and deployers of AI systems will have to develop AI literacy within their organizations. These will become applicable as of 2 February next year, 2025.

Then, as of 2 August 2025, one year after the entry into force of the AI Act, the governance framework will start to apply. This means that the Member States will have to designate their competent authorities, including the notifying authorities, which will be responsible for the different procedures. The enforcement provisions will also become applicable at that point, with the exception of the fines for providers of general-purpose AI models. And importantly, also on 2 August 2025, the provisions for general-purpose AI models will start to apply.

Then, as I said, in 2026 the main scope of the AI Act will apply, with the key requirements and obligations for high-risk AI systems, in particular the stand-alone systems with fundamental rights implications listed in Annex III, as well as the transparency obligations for certain AI systems, related to informing individuals or to AI-generated content. The final deadline for application will be August 2027, which gives a bit more time for the specific rules for AI systems embedded in regulated products, such as machinery, medical devices and others. Those rules become applicable as of 2 August 2027, so these sectors also have more time to integrate and adapt to the provisions.

Thank you very much for this very comprehensive response. From there, maybe we can go into more detail on the obligations, starting with the scope of the AI Act. We need to come back to
the definition of AI systems, which is very important. Yordanka, could you give us the definition of AI systems under the AI Act, and maybe tell us more about the inspiration the EU legislators followed in adopting this definition?

Yes, indeed, that's a very important element, because it determines the scope of application of the AI Act. The objective of the co-legislators has been to develop a definition that is aligned with international approaches, so that we converge with the international community. Here we have built a lot on the OECD work and on the internationally agreed consensus on what an AI system is. It was also important to develop a definition that is sufficiently future-proof in the face of fast technological change, one that stands the test of time, so that the regulation is adaptable and flexible enough. It does this by focusing on the key functional characteristics of AI systems and by adopting, to the extent possible, a technology-neutral approach.

I have a slide where you can see these essential characteristics and the definition of an AI system as set out in the AI Act. It is a machine-based system. It is designed to operate with varying levels of autonomy, understood as the degree to which the system operates without human intervention, which can differ across applications. It may exhibit adaptiveness after deployment, but this is not an essential characteristic: some systems continue to self-learn during their use, while others do not. It works towards explicit or implicit objectives to achieve outcomes. And the essential characteristic is that it infers, from the input it receives, how to generate outputs. This concept of inference at the design and development stage is very important to distinguish AI systems from traditional software: through this specific capability, they manage to generate, from the input and the data, outputs such as predictions, content, recommendations or decisions, which can influence the physical or virtual environments in which the system operates. We will be providing further clarification based
on these essential components already framed in the AI Act. Thank you.

Thank you very much. Maybe, Friederike, do you want to add to this and give us an example?

Happy to do that, yes. If you look at the systems and models on the market today, a good example of an AI system would be ChatGPT. ChatGPT is an interface where you can chat with an AI model, and the model behind that interface can change: OpenAI has changed it from GPT-4 Turbo to GPT-4o, and many people maybe didn't even notice. So the AI system really is the way the human interacts with the AI, and the AI model sits behind that. This can of course also be true in other cases. One thing that is interesting to note from a technical perspective about the definition of AI systems on the screen is that it could transfer to other paradigms; it doesn't only have to be large language models. So the co-legislators have really taken a pretty future-proof approach here, to ensure that even further down the line, if there were ever other paradigms, this definition might still hold.

Thank you very much. Let us turn now to the stakeholder consultation on the concept of AI system. This consultation was launched by the AI Office last month and ended last week. So maybe, Yordanka,
what were the main objectives of this consultation? And did you get what you expected in terms of the number of responses, the use cases provided and their diversity? What can you share with us today?

Indeed, we organized this public consultation to gather stakeholder input to help us prepare, as I mentioned, future guidelines to further clarify the AI system definition, but also the prohibited AI practices that will become applicable as of 2 February. These legal concepts are defined in the Act, but it was especially useful for us to get practical examples, use cases and issues on which providers, deployers and other organizations would like further clarity: whether practical solutions fall within the scope, and how these concepts apply in practice. So this was very important, and we are very happy that we received very diverse and useful input from about 380 organizations and individuals of all types: providers, deployers, business associations, but also public authorities, many NGOs, academics, and also citizens. Citizens are very much affected, and the purpose of this regulation is also to protect citizens, our fundamental rights and our safety. It was very interesting to see concrete examples for the system definition, and also issues with its main components. This input will now help us explain in the guidelines where to draw the borderline between systems that are just traditional software or data processing, which do not fall within the scope, and systems that fulfill
the essential characteristics.

Okay, thank you very much for this preview and analysis of the consultation results. Let us move on to the geographical scope of application of the Act. I think we are all aware of the global reach of the AI Act, but we could use a little more detail on this. Yordanka, could you give us the exact territorial scope of application of the AI Act, so, very precisely, which operators are geographically covered by the text?

Yes. The AI Act is market-based legislation applicable to the whole European market, and we target providers and deployers of AI systems that are placed on the market, used in the Union, or have an effect in the European Union. Providers are the actors placing those systems on the market; they can be established in the European Union, but they can also be in third countries, as long as they place their AI system, or their general-purpose AI model, on the European market. Deployers are natural or legal persons deploying these systems who are established or located in the European Union. And to avoid circumvention of the rules, because systems and their outputs can still have an effect in the European Union even if the systems are used outside it, there is also a special provision under which providers and deployers established outside the European Union can be covered by the territorial scope when the output produced by the system is intended to be used in the European Union and has an effect here.

Thank you very much. We can now discuss the first obligation applicable from 2 February 2025: AI literacy. Yordanka, again, could you start by explaining the scope of Article 4 of the AI Act, the provision on AI literacy, and more precisely to which persons this provision applies and what it requires?

Yes, that's a very important obligation,
and it applies to both providers and deployers of AI systems. It is a best-effort obligation: to the best of their ability, they should ensure a sufficient level of AI literacy of their staff, and also of other persons acting on their behalf, both when they are developing systems and when they are using them, with the overall objective of ensuring the trustworthy and safe deployment and use of AI systems. It is very important to say that this is a very context-specific obligation, because expectations can be very different depending on the types of systems and solutions an organization uses. To give you an example, an organization that only uses minimal-risk systems, for instance for summaries or simple translations, is in a very different position from organizations in high-risk sectors, where much more knowledge, skills and understanding are expected to inform the development and deployment of AI systems. The overall objective is that AI literacy supports and takes into account the obligations envisaged in the AI Act, so there is a clear link, for specific systems, with human oversight and other requirements. It also aims to ensure sufficient consideration of the impact on affected persons and of the broader context, enabling a good understanding both of the benefits and usefulness of these systems and of their risks.

Thank you, it's very enlightening. AI providers and deployers may still wonder where to find guidelines or inspiration to
implement this obligation. Maybe, Antoine, you can help us with that?

Yes, I can. Given the overarching nature of this article of the Act, I can only encourage organizations to start thinking very early on about their specific approach to AI literacy. As Yordanka was saying, AI is a set of fast-evolving technologies, so in the AI Office we do not consider that there is a one-size-fits-all approach to AI literacy, and we want to stress that this provision needs to be considered with a certain degree of flexibility, if I may say so. That does not mean that companies or organizations have nothing to do, or that the AI Office and market surveillance authorities will not act in the future. It just means that there is a need to better understand the exact AI-related knowledge and expertise of European workers, and even more generally of European citizens, to be able to fill potential gaps.

But in practice and in short, I would say the first thing organizations should do right now is to identify the different AI systems they are using, deploying or developing, and then ask themselves several questions. First, what is the role of the organization in the value chain of that AI system: is it a developer or a deployer of that specific AI system? Second, what is the risk level of the specific AI systems it is using, developing or deploying? Based on these assessments, organizations can then reflect on their own internal AI literacy policies and consider additional AI training for those people, as mentioned by Yordanka, who are directly developing, deploying or interacting with an AI system.

In support of this, the AI Office is already taking action. We have already organized a first workshop with AI Pact signatories, and very soon, in February 2025, we will organize a first webinar on AI literacy, open to all, to start interacting with the community on the way forward with this provision. Based on these initial interactions, we will gather good practices, which will then be widely disseminated. In addition, we are discussing guidance on AI literacy with Member States within the AI Board, which should be published in the second half of 2025. And last but not least, this article on AI literacy encompasses a lot of things, notably the skills needed to interact with or develop specific AI systems, but it also relates directly to the talent we manage to have in Europe. The Commission's next flagship strategy, the Apply AI Strategy, will directly include specific actions related to this, in order to make the European continent a true AI continent.

Thank you for this very instructive response. Let us move now to the second obligation applicable from February 2025: the prohibited AI practices. Maybe, Yordanka,
you could start by telling us what these eight prohibited practices are and give us some examples. We have a slide to share with the audience on that.

Indeed, yes. The objective of the co-legislators was to set clear red lines for unacceptable practices that are incompatible with our European fundamental values and rights. It is very important to stress that these are complementary to other existing prohibitions. We have broad categories.

The first one is AI used for manipulation and for exploitation of vulnerabilities that can distort people's behavior and cause them significant harm. Examples include exploiting the specific vulnerabilities of particularly vulnerable groups, like children or people with disabilities, for example toys manipulating kids and incentivizing them towards harmful behavior. They also include AI systems deploying deceptive, purposefully manipulative or subliminal techniques, for example if we are interacting with chatbots that exploit our cognitive biases and push us into behaviors that are significantly harmful, for example to our physical safety, or that cause psychological harm.

The next prohibited practice is so-called social scoring, which aims to evaluate and classify people based on their social behavior or personal characteristics over a period of time, with the score being used to treat these people unfairly and detrimentally. Examples could be public or private organizations, because it applies to both, using data from very unrelated sources for the purpose of evaluation and combining it with detrimental consequences, for example taking unjustified action to investigate certain citizens in the context of fraud detection, or other public mass surveillance practices.

The next example is biometric categorization, where AI is used to deduce or infer from our biometric features, for example our face, certain very sensitive characteristics such as race, political opinions, religious or philosophical beliefs, or even sexual orientation, which is considered
unacceptable. Here we should draw a distinction, for example, with AI systems used simply for labeling data, which do not infer these very sensitive characteristics or violate human dignity and our rights.

The next example is the use of AI for real-time remote biometric identification for law enforcement purposes in publicly accessible spaces. This could be systems deployed in streets and public areas, where we could be massively surveilled by law enforcement collecting our data without appropriate restrictions and safeguards. Because of the risk of mass surveillance and the very strong interference with our fundamental rights, this is also prohibited. But the co-legislators struck a careful balance for cases where the benefits of these systems could still outweigh the impact: subject to strong safeguards, they can be deployed in a very targeted way for certain limited exceptions, for example to prevent a terrorist threat or an imminent threat to the life or safety of people, or to identify suspects of certain very serious crimes based on known information. These exceptions can be relied upon only subject to very strong safeguards, including prior authorization by independent or judicial authorities, and many other safeguards to ensure that such uses are very targeted and not harmful.

The next prohibition covers AI used to assess or predict the likelihood that a person will commit a criminal offense based solely on, for example, personal characteristics such as nationality or certain origins, without any link to objective and verifiable facts related to criminal activity. This is really to prevent the very harmful impact of such systems, which are not aligned with fair justice systems and the rule of law. On the other hand, AI can still be used, with sufficient safeguards, to support a human assessment that is based on objective facts linked to criminal activity.

The next prohibition covers AI used to infer emotions in very specific contexts, namely education and the workplace, with exceptions for medical and safety reasons. In particular, the prohibition aims to prevent employees or students from being harmed when AI is used to infer and surveil their emotions in these sensitive contexts, where there is an imbalance of power, while the exception for medical and safety reasons still allows beneficial applications where justified.

And the final prohibition covers AI used for the untargeted scraping of facial images from the internet or CCTV footage to build facial recognition databases. Here the concept of untargeted is very important. Examples are systems used to scrape images widely from across the internet to build databases that are then provided to all kinds of deployers, who can
use them for further surveillance and identification of people. So here it is also important to distinguish the building of such biometric databases from the mere use of data for AI training and model development, for example.

Thank you very much, Yordanka. Maybe a quick last question before the Q&A session, about the AI Office consultation again, because it dealt not only with the AI system definition but also with prohibited AI practices, so it will help you draw up the guidelines on prohibited practices. Can you tell us a little about the results you have received?

Yes, that was also very informative, and we are very thankful to all the organizations who provided input, primarily from the European Union but also from abroad. They gave very practical examples for each of these prohibitions: what could be considered as fulfilling each of the elements, which must always all be met, and what further clarification should be given, for example what a subliminal technique is, what the threshold for the significance of the harm is, or what counts as real time in the case of remote biometric identification. These are very practical issues that we need to clarify, together with practical examples to put in the guidelines, because our objective is to be very informative and illustrative, helping organizations in their daily work, when they are developing these systems, to have clarity on whether they are in or outside the scope. And just to emphasize, these will be living documents: we are only starting the implementation of this legislation, we will gather more lessons learned and more practical examples, and we will of course build on these use cases and this knowledge over time. But this has been a very important first step that will now inform our future work.

Excellent, thank you very much. We have received a lot of questions, so maybe we can start with you, Friederike. We have a question on the AI system definition, and the question
is about the distinction between AI systems and IT systems, because to this person the definition seems to cover some regular IT systems as well. Can you clarify this point?

Yes, I can take a stab at this, and I would also invite Yordanka to step in if she wants. From my understanding, the distinction here really lies in the inference point: taking an input and then inferring the output is the key characteristic of an AI system, which your classical database recall or simpler recommender algorithms would do in a very different manner. So, to my understanding, that would be the key differentiation, but I'm also keen to hear what Yordanka has to add.

Yes, I think this concept of inference, and the different techniques that can be used to build this capability of systems to achieve their objectives, is clearly the most important. Here the recitals of the AI Act also give examples, such as machine learning techniques, but also logic- and knowledge-based approaches. So it will be very important to distinguish these from simple data processing, simple software and automated systems that do not have these inference capabilities.

Okay. We have a maybe more tricky question dealing with the timeline, because these obligations will apply in February 2025, but at the same time the enforcement scheme will not yet be applicable; it will only be ready in August 2025. So do we have something like an enforcement gap? That's the question here. What do you think, Yordanka?

Well, it's important to say that the rules will become applicable, and both providers and deployers have to take measures to implement them, so in this sense there is no gap. That's why we are also working hard to collect these use cases and help organizations prepare, and to make sure that they do not place on the market or deploy these kinds of prohibited AI systems. The Act is already there, but these future guidelines will also be very important. And since this is a directly applicable regulation, affected persons who consider that systems are violating the AI Act could also bring cases to court. It is true, however, that between February and August we will not yet have public authorities actively enforcing with fines or enforcement actions. But it's just a short period, and organizations should already take action, because these are directly applicable rules and there could still be civil liability consequences for them.

Thank you, that's very informative. An interesting question asks for clarification on the territorial scope. It is phrased as a statement, but it is also a question, so there is some doubt: the AI Act will not apply to providers from the EU placing AI systems outside the EU?

That's correct, because the AI Act is market-based legislation: we are harmonizing the EU rules internally, for how we regulate the internal market. For systems that are going to be exported outside the European Union, we have other regulations, including the Dual-Use Regulation and others, and those of course remain in force and apply to these cases.

Thank you. It's time now for us to delve into our second topic, general-purpose AI. Maybe we will start with you, Friederike. Could you please explain the concept of a general-purpose AI model under the AI Act, and maybe also how this concept
interplays with the concept of a GPAI system?

Yes, I'm very happy to. In the AI Act, Article 3 explains that a general-purpose AI model is a model that displays significant generality and is capable of competently performing a wide range of distinct tasks. This can be achieved, for instance, by training the AI model on a large amount of data using self-supervision at scale; that is how large language models work. Again, note that this is only one way in which significant generality and good performance at a wide variety of tasks can be achieved, so the legislation is somewhat future-proofed here. That is the definition of a GPAI model. It goes on to say that such a model can be integrated into a variety of downstream systems or applications, and this is where the AI system part comes in. Going back to the example of GPT-4 and ChatGPT: GPT-4, GPT-4o or whatever it may be is the model, which can then be integrated into a variety of systems, such as the ChatGPT interface that lets you chat with the model.

It's important to note that this is a functional definition, so a GPAI model remains a GPAI model regardless of the way in which it is placed on the market. There is a bit more in recital 97, which gives examples of how these models are usually marketed nowadays, for instance through APIs, application programming interfaces, for people to build on top of the GPAI model. ChatGPT is a particular example in that sense: through the ChatGPT interface you can't really access the GPT-4o model itself; for that you would have to go to the application programming interface, and then you can also build your own AI system on top of the AI model. This of course works even better with open-source models, such as those released by Meta or Mistral. Here again you see the distinction between the model and the different types of AI systems into which a variety of downstream providers can integrate it.

Excellent, thank you very much for these clarifications. Under the AI Act, GPAI models are subject to different regimes depending on their level of risk. Friederike, could you explain what GPAI models with systemic risk are under the AI Act?

Yes. A GPAI model with systemic risk would be one of the most advanced models, which has or is likely to have a significant and large-scale impact on the Union market and on society as a whole. This goes over and
above mere infringement on individual rights so this is really a quite widespread impact and as you note it is tied to the um most advanced capabilities so this gpai model with systemic risk would really be a small subset of all GPA models that are out there namely the ones that are at the frontier of capabilities and that are likely to have a widespread impact now this technical Criterion can be analyzed in in different ways anex 13 of the AI act provides some guidelines here there is currently um a technical Criterion in here that is the

floating Point operations that are used to train the model so above 10 to the 25 floating Point operations or flops is where the AI act currently stipulates um one should assume that this is a gpai model with systemic risk now notably if the provider believes that the model nonetheless does not have the most advanced capabilities even though it is trained with this amount of comput they could still alert the AI office to this um fact and then then could could have the um designation taken away from them but that is the the current threshold that

is in there there's also other criteria such as the reach of the model because going back to the initial definition it is about the large scale impact on the Union Market this obviously is only achieved if there is a wide reach and a wide usage of the model so this is another Criterion that is taken into account um in the public perception that 10 to the 25 floating Point operations the flops has um been very prominent I think it is important to note that Annex 13 indeed also has other criteria um for the gpai with

systemic risk. Thank you very much; what you said is very important. Based on this definition of GPAI models with systemic risk, it's crucial for the audience to know who is in charge of classifying a GPAI model: the AI Office, the providers themselves, or both? Can you give us some clarity on that? Yes. There are indeed two paths here. By default, the provider is obliged to notify the AI Office once it becomes known that a model they are

training will cross this threshold for systemic risk. That could mean, for instance, that they are planning to train with more than 10^25 FLOPs, and at this point they would have to notify that they are indeed in the process of training a GPAI model with systemic risk. It is important that the AI Office understands at this point what is happening, because certain risks from these GPAI models with systemic risk may already materialize in the development phase, so this is an important point. However, the European Commission, as

in the AI Office, is also able to make this classification ex officio. So where the AI Office perceives that a certain model likely has systemic risk, even though it has not been notified, the AI Office can make the classification ex officio. Okay, thank you very much. We now turn to the question of the legal regime for GPAI models. There is a two-tier legal regime for GPAI models under the AI Act, and we have a slide on that. Maybe, Yonka, you can

start with the first level of obligations, applicable to all GPAI models, and then, Freder, you will go on with the second level of obligations. So, Yonka, you can start, and we will share a slide with the audience on the two-tier regime for GPAI models. Yes, indeed. The main purpose of the obligations that apply to all general-purpose AI models is to ensure transparency across the value chain, because, as Freder was explaining, these are models that can be integrated into

numerous downstream applications, and this is also important for further compliance with the AI Act requirements. It is important that the providers of these models document certain characteristics, for example the tasks, but also the training data and the methodologies they have used, as well as specific information that they will have to keep at their disposal and provide to the AI Office, as the future supervisory authority, and to other competent authorities under the AI Act. There is also certain information, forming another layer of transparency, that they will have to disclose

to downstream providers who want to integrate these models into their AI systems. That will help those providers understand the capabilities and the limitations of these models and enable their own compliance with the AI Act requirements, for example for high-risk systems, but also more broadly. There are also certain obligations that aim to bring more transparency towards the public: these model providers will also have to publish a sufficiently detailed summary of the training data they have used in the development of these

models. This is very important to enable parties with legitimate interests and the public, including rightsholders whose content has been used in training these models, to have this transparency, which will help them exercise their rights under Union copyright law or other relevant laws. We, as the AI Office, are going to develop a template that will help providers implement this obligation in a consistent and uniform manner. And then there is the final, very important obligation:

they will also have to put in place a policy to comply with Union copyright law. This goes beyond the transparency issues and includes, for example, implementing appropriate measures for this compliance and respecting opt-outs from the text and data mining exceptions, for example where authors have reserved their rights because they have not agreed to their works being used for AI training. So this is also very important and complementary. This policy does not change the existing Union

copyright legislation but rather aims to facilitate its implementation and make sure that the provider puts in place effective measures in its internal organization. Thank you very much, Yonka. So, Freder, what about the second level of obligations, for GPAI models with systemic risk? Yes, indeed, there is a second level of obligations, and it is important to stress that this applies, or is foreseen to apply, to a small number of providers of the most advanced models, which may carry fairly severe risks; Recital 110 lists a couple of them. So for this small

number of providers developing and placing on the market GPAI models with systemic risk, there are a couple more obligations, and these are outlined in Article 55. Briefly, if you are placing a GPAI model with systemic risk on the market, you need to evaluate its capabilities: there is an obligation to fully understand what the model can do and how advanced its capabilities really are. There is an obligation to conduct a comprehensive assessment of the systemic risks that the model might pose, and then

to put in place appropriate mitigation for each of the risks that have been assessed. Here is an example: you conduct a risk assessment and see that the model might be misused to commit cybercrime; it might write code that helps somebody hack a critical system. You then conduct an evaluation to see whether the model is indeed able to write code complex enough to be useful for breaching a critical system, and if you find that this is the case, you will need to put in

place risk mitigation. This can take several forms: you could, for instance, prevent normal users from accessing this capability by restricting them to a slightly less capable version of the model, so that only verified and known users can access the very high capability that could potentially be misused, and similar approaches. That is one example of these steps of evaluation, risk assessment and risk mitigation. Then there are two more types of obligations: cybersecurity and incident reporting. These are very important for the security around the model itself, so

cybersecurity pertains to the fact that if you are developing and placing on the market a GPAI model with systemic risk, you are handling one of the most advanced AI models on the planet, and that is very attractive to many people who might want to do dangerous things with it. Hence you have the obligation to harden your cybersecurity so that the model cannot be exfiltrated, either through mishaps internally, where the model gains the capability to copy itself and copies itself somewhere on the

internet and then gets misused, or through theft by an external actor. So cybersecurity obligations apply. And then there is incident reporting, which is really the bracket around all of this: if there is a serious incident involving such a GPAI model with systemic risk, it has to be reported to the AI Office. This is simply to inform further policy development and further interaction with the model developer, to understand the incidents that are happening, even if they are internal. But if there is a serious incident, there is the

obligation to inform the AI Office and, of course, national authorities where relevant. Those are the additional obligations for GPAI models with systemic risk; again, they should only apply to a small number of providers that are really developing and placing on the market these most advanced models. Thank you, that's very helpful. We can now discuss the drafting of the code of practice, which is currently being carried out on the basis of Article 56 of the AI Act. Freder, how is this code of practice

being drawn up, and what is the role of the AI Office in this process? Maybe we can share a slide on that with the audience. Yes, happy to explain. The idea of the code of practice is that it should be a guiding document for providers of GPAI models on how to comply with their obligations. This includes the transparency and copyright obligations outlined by my colleague Yonka and the second tier of obligations for general-purpose AI models with systemic risk that I just outlined. So for

all of these, the code of practice will provide guidance to providers on how to comply. The process of drawing it up is a very collaborative, multi-stakeholder process: we have worked with over a thousand stakeholders, ranging from individual academics, copyright holders and members of civil society to the GPAI providers themselves, industry associations and civil society organizations, so there's really quite a wide net cast here. Stakeholders have been grouped into four working groups that focus on different parts of these obligations, and each working

group is chaired by independent experts. So it is important to note that the code of practice is being drafted by these independent chairs, not by the AI Office itself. The role of the AI Office is merely to facilitate this process and to ensure the timely delivery of a final draft of the code of practice, which would then be given legal validity via an implementing act, and providers of AI models would have to sign up to this code of practice in order to use it to demonstrate their compliance with

the AI Act. So this is the multi-stakeholder process we are running through, and you can see its timeline on the screen. We published the first draft of the code of practice on the 18th of November, so everybody can see it online, and we have received over 400 feedback submissions on it and held multiple verbal and written interactions with different stakeholder groups, including providers but also civil society and other members of the working groups. We are now gearing up to publish a second draft of the code

of practice, and this will continue until the final draft, which is expected in May. Then, as I said, providers will have the opportunity to sign up to the code of practice and use it to demonstrate their compliance with the AI Act. Thank you very much. A last quick but very important question, maybe for you, Yonka, still on the code of practice: what will be the legal effect of this code of practice? Will it be mandatory or not? The industry needs to

know that. Of course, that's a very important point, and it's important to clarify that this is a voluntary code of practice. That's why this collaborative process, involving providers and also other stakeholders, is so important. We of course want providers to sign the code and rely on it for future compliance, but in the end it is a voluntary mechanism that they can sign up to. As explained by Freder, we as the AI Office are going to assess the code once it's finalized in May,

to determine whether it is an appropriate tool to implement the AI Act obligations. We'll do that together with the AI Board, and if this assessment is positive, which we truly hope, and we are working very hard together with all stakeholders towards that outcome, we can approve the code through an implementing act and give it general validity. All providers who sign the code afterwards will be able to rely on it to demonstrate compliance, but other GPAI providers could also use other means, so it's

still a voluntary tool. In that case, however, they will need to show that they are implementing adequate solutions and measures to effectively fulfil the obligations under the AI Act as well. In our future supervisory role, we at the AI Office will monitor everyone, and that has to be clear. Thank you. So now we have our Q&A session, and I'm already reading some questions. I have one important question on enforcement and governance for you, Freder. The AI Office

will be in charge of enforcement for GPAI models, and the question deals with the relationship between the AI Office and national authorities in governing these GPAI models. Yes, that is a very important question indeed. In the case of GPAI models, compliance with the provisions under Article 55 that I outlined (evaluations, risk assessment and mitigation, cybersecurity and incident reporting) for GPAI models with systemic risk, and compliance for GPAI models more generally, is enforced by the AI Office. So on the model

level, the AI Office is really the competent authority, while the obligations on AI systems are enforced by the competent authorities in the Member States. That is the distinction here. Thank you. I also have a more technical question, still for you I think, Freder: we need some clarity on the concept of fine-tuning an AI model. What would fine-tuning an AI model entail? Is it changing the parameters of the model? Yes. I think the important

thing to note is that we are working on guidance on this, so there will be more information in due course. To briefly explain the concept of fine-tuning: this is the moment where a model, such as an open-source Mistral or Meta model, is taken and provided with further data, and then fine-tuned on this further data to augment certain capabilities of the model. It might, for instance, be fine-tuned to respond in a certain dialect; that would be

a classic case. And an obvious and very important question here is when somebody who is fine-tuning a model becomes a provider, and which obligations they then have to comply with compared to the original developer of the underlying model. As I said, we are working on guidance on this. Thank you very much. We have a question on ChatGPT. We have talked about ChatGPT already, but the question mentions that the AI Act was negotiated before the rise of

ChatGPT, and so it does not exactly fit the risk pyramid that we all know. So the question is: why was this not placed under limited-risk AI? Maybe Yonka or you, Freder? Yes, maybe just a point of clarification. After the Commission made its proposal in 2021, we had a very intense process of negotiations with the co-legislators, and at that time GPT and these very impactful, capable systems were already emerging. So the co-legislators, both

the Council with the Member States and the European Parliament, examined very thoroughly how to further improve the Commission proposal, taking a proportionate approach with specific rules that are also future-proof enough, since we see these models evolving very fast; the further detailing through the code of practice and the mechanisms for implementing these obligations were very important. So both ChatGPT and the impactful models powering many such applications are reflected in

the approach of the legal rules. That's why we have rules both for the systems, the applications, and for the powerful models, and they are very closely interrelated in the overall scheme. We should also not forget the different layers and specificities: the high-risk AI systems, the way they are designed, can also be further reviewed and updated over time by the AI Office. So it is really embedded in the architecture of the AI Act to have this future-proof approach, to adapt

the rules both in terms of the types of systems and in terms of the implementation measures that should be taken to comply with the different requirements. Thank you very much. I think it's time for us to start our third topic this afternoon, which deals with the requirements for high-risk AI systems and the related technical standardization process. Maybe we will start with you, Antoine: could you remind us of the main rationale for these requirements for high-risk AI systems? What are

they about, and what was the inspiration of the AI Act legislator here? Sure. For this, let me give a bit of historical background, to understand exactly why we ultimately ended up with these specific rules in the Act. The European Commission, and DG CONNECT in particular, has been trying for years now to create an ecosystem of trust and excellence when it comes to AI policy. Following the first communication on AI, which was released

in April 2018, we established a High-Level Expert Group on AI, composed of 52 representatives of civil society, academia and industry, to make recommendations to the Commission on how to address the opportunities but also the potential risks brought by AI technologies. The overall work of this High-Level Expert Group was crucial in shaping the European Commission's approach to AI. Some of the major elements put forward by this group, such as, for instance, the

concept of trustworthiness, or the seven key requirements set out in its Ethics Guidelines, really served as a baseline for the Commission's approach to drafting the AI Act proposal. Subsequently, following the activities of this High-Level Expert Group but also a broad public consultation organized in 2020, the Commission decided that the best way to seize the opportunities brought by these types

of technologies while at the same time handling the specific risks was through the adoption of what we call hard law. What followed was a reflection on how to translate these generic principles into European legislation, and that's when the Commission took several very important regulatory decisions. First, it decided to follow a product safety logic and to consider AI systems as products; this was the first very important regulatory decision. The second was that, in order not to put too much pressure on providers of

AI systems, we would go for a risk-based approach, meaning that we would impose obligations only on the systems that pose risks to health, safety and fundamental rights. So all of this was our journey, together with the broad community, that brought us to the different requirements set out in the legislation for high-risk AI systems, which cover things like data quality, data governance, human oversight, robustness, accuracy and others. Thank you very much. And maybe

now a more practical question: how do these requirements interplay with the conformity assessment regulatory scheme under the AI Act, Antoine? Yes. First, it's always important to keep in mind the first regulatory decision I was explaining, which is that AI systems are considered products under the Act. Based on this fundamental decision, what we used for most of the provisions of the Act, notably for the provisions relating to high-risk AI systems,

is a regulatory approach called the New Legislative Framework, or NLF, approach. This is a very common approach in the European context; it has been developed over decades now, and it puts products, and the safety of products placed on the internal market, at its centre. It is used, for instance, in the legislation regulating the placing on the market of toys,

medical devices or, for instance, radio equipment. This regulatory approach has some key features. One of them is that the legislation focuses mostly on high-level essential requirements, while the translation of those requirements into practice is left to, or co-decided in, a specific multi-stakeholder forum for discussion, which is standardization. The second main feature of this regulatory approach is its reliance on a conformity assessment

procedure. This is based on internal controls, technical documentation and, for instance, the establishment of a quality management system, which allows a producer, before a product is placed on the internal market, to obtain a CE marking, which presumes that the product, in our case the AI system, complies with European legislation. Thank you very much, Antoine. You mentioned this conformity assessment based on the quality management system and technical documentation. In this case, a notified body

should conduct the conformity assessment of the AI system. So, Yonka, the audience may wonder who these notified bodies are and whether stakeholders can choose them freely. Can you help us? Yes. Notified bodies are, in essence, independent third parties whose task is to assess certain types of high-risk AI systems, in particular those that are already embedded in regulated products. That's how it works: we do not cover every AI system in a product, but only those that are safety components of products already subject to this

third-party conformity assessment with notified bodies, which are classified as high-risk. There is also the other example of remote biometric identification systems, which would be required to go through these notified bodies for an independent check, based on their quality management system and documentation, of how they have fulfilled the requirements. There is a specific procedure under the AI Act under which national notifying authorities will have to notify these bodies, which must have the necessary expertise and also satisfy other conditions, such as independence from the provider, internal control, security procedures and

others. So they will have to comply with these conditions, and after this notification procedure, once they become recognized notified bodies, providers across the whole European Union will be able to select whichever one they wish to perform their third-party conformity check. It does not even need to be in the provider's own country: as long as there are such notified bodies in other countries, providers can freely choose and use their services. Thank you very much for your response. Let us move

on to standardization now, a very important dimension of the AI Act. All the requirements we mentioned earlier are intended to be translated into technical standards in the European format of harmonized standards; this is Article 40. Antoine, could you explain how it works: what are harmonized standards in EU law, first, and how will Article 40 of the AI Act be implemented? Yes. First, the important element is these harmonized standards. Harmonized standards are technical documents developed by recognized standardization organizations on

the basis of a mandate from the European Commission, which, if evaluated positively by the Commission and referenced in the Official Journal of the EU, grant a presumption of conformity to providers that adopt those specific standards. This standardization process has been closely linked to, and used in, implementing product safety legislation in the European Union; as I mentioned, we have lots of examples of previous legislation based on the same type of implementation process. What is important is that this specific regulatory

approach relies on harmonized standards on a voluntary basis: the provider of an AI system could still go their own way, but harmonized standards are there to help them comply with the specific legislation. This reliance on standardization activities was foreseen in order to make sure that the rules of the legislation would be practically implementable. That's why we often say that, while this legislation puts rules on providers of AI systems, it

is very much forward-looking and innovation-driven, because ultimately the people who will translate the high-level requirements into practice are the ones able to contribute to these technical documents, the harmonized standards. Thank you very much. Can you go on and tell us who is currently in charge of drafting these harmonized standards for the AI Act? Yes. As I mentioned, for standards to become harmonized standards and ultimately grant a presumption of conformity

to a company using them, they need to be based on a Commission mandate, which in the jargon we call a standardization request. In the case of AI, DG CONNECT has been closely following and involved in AI-related standardization activities for years now. We have been closely following the technical work ongoing at international and European level, and we adopted, very early on, which is very unusual, a standardization request asking the European standardization

organizations, in our case CEN and CENELEC, to develop standards in ten different areas directly linked to the different requirements for high-risk AI systems. In addition, in this standardization request we are also imposing certain specific rules on the standard-making procedure, notably relating to the proper involvement of civil society experts in the standardization activities, but also to the need to make sure that the standards

being developed are fully aligned with the objectives of the legislation, which include, among others, the protection of fundamental rights. These elements are also specifically mentioned in the standardization request. One last element: we are requesting the European standardization organizations to come up with harmonized standards, but that does not mean that standards developed at international level cannot be used or taken into account. It just means that standards developed in other international standardization fora need to be aligned, and need to be

aligned not just with the approach but also, for instance, with the taxonomy and the definitions used in the European legislation. Some standards being developed at international level serve as a very good baseline for the practical implementation of the requirements of the legislation. Thank you very much. You already mentioned the role of the Commission and the AI Office in this standard-setting process, but could you maybe

give us a little more insight into the role of the AI Office? Does the AI Office have some form of monitoring role in the current standard-setting process, Antoine? Yes. As I mentioned before, we have been very proactive on the Commission side, and in DG CONNECT we have been involved in these standardization activities for a long time. Why have we been so involved? Given the overarching nature of the legislation, but also given the risks and the opportunities, we

considered that there was a need to ensure that the technical experts involved in the standardization activities would understand the approach taken by the European legislator. It also allowed us to react very quickly to certain specific assumptions made by the technical experts. That's why we have been very much involved in this specific process for years, and that's why we will continue to be that involved and, indeed, as you mentioned, monitor the

creation and development of these technical documents, to ensure that ultimately we end up with practical documents that allow providers of AI systems, on which specific requirements fall, to comply with the requirements of the legislation very early on. Thank you very much. A last, very concrete question before our Q&A session: do we already have technical standards on AI in the EU jurisdiction, Antoine? Yes. As I was mentioning, there have been

standardization activities ongoing for years now at International level in two International standardization organization called ISO ISC in a special committee which was created just for that called the special committee 42 since 2017 actually and then we have also uh discussions which are ongoing at European level in sen and senc and especially actually in a joint technical committee called JTC 21 which is also since 2021 developing different types of Standards so at this stage at this moment in time we do have actually uh specific AI related standards which have been developed at International level nonetheless actually

not all of them are directly usable as-is in the European context, notably because they are neither directly useful for, nor directly aligned with, the approach of the legislation. Still, as I already mentioned, there are good baselines, and here I want to highlight, for instance, standards such as the transparency taxonomy of AI systems, which is a good baseline for the discussions ongoing at European level as well. In the European context, as I mentioned, we started later, in 2021, but we have made very good progress, and we have

seen these standardization activities evolve rapidly over the past few months. As I mentioned, we will continue to monitor them closely to ensure the adoption of technical documents that are, if I may say, very easy to implement and that will facilitate the compliance process for providers of high-risk AI systems before the specific high-risk AI requirements enter into application. Thank you very much, Antoine. It's time for our Q&A session, and I have one precise question on requirements, so maybe for you, Antoine. Concerning the

list of requirements under the AI Act, the question is: do we have something related to sustainability? Sustainability is not directly mentioned in the initial standardization request. However, sustainability, and specifically standardization deliverables relating to the energy consumption of certain models and systems, is something that is mentioned in the legislation. So in the future we will publish new standardization requests asking for standardization deliverables directly linked to the energy consumption of specific models and systems, and this is a requirement of the legislation. Okay, thank you. I

have a question, maybe for the three of you. The person here says that he often hears that the AI Act would slow down AI development in the European Union. What arguments would you give to say otherwise? Who wants to start? I can take it. Yes, so basically, as I mentioned, given the specific approach taken in the European context, we really tried our best to be innovation-driven rather than hindering

innovation. It's also important to always remind ourselves that this is only one part of the European strategy: the creation of an ecosystem of trust is one side of the coin, and on the other side we have the creation of an ecosystem of excellence, which we have been building for more than six years now. Now that the legislation has finally been adopted, we as the Commission will really focus on making sure that we can get the best from these technologies and that we can achieve

competitiveness gains in specific sectors through the use of this broad set of technologies. So, two elements. First, the legislation protects against the risks, but it is based on a regulatory technique that is very much innovation-driven. Second, the biggest part of the Commission's work will now be on promoting the use of AI technologies in the internal market. But perhaps Yonka wants to add something as well. Yes, maybe I could complement

that. The AI Act and safe regulation are also very important for innovation, for uptake, and for the trust of both deployers and citizens. So we are really reaping the benefits of these technologies, as Antoine mentioned, with very targeted rules that focus on the genuinely risky applications and require appropriate and proportionate mitigations, while also giving companies practical tools for what to do in practice. We have seen that the lack of legal certainty was, even before the AI Act, preventing the development and uptake of these technologies. The AI rules and requirements, together with the

standards and the code of practice, are real enablers, helping organizations develop more transparent, safer and more non-discriminatory systems and tackle the harmful outcomes that would otherwise prevent us from benefiting from these technologies. And just one more point: the AI Act also has very targeted and specific tools to enable safe innovation and development, including specific support measures for small and medium-sized enterprises, and AI regulatory sandboxes that will give developers dialogue, support, legal certainty and a safe environment to

develop those tools. There are also other supporting measures, including the standards and guidelines we are now aiming to deliver, to enable effective implementation and wide uptake and availability of these systems. Thank you very much, Yonka. We still have a lot of questions, but unfortunately our time together today is coming to an end. Thank you very much for all the questions on the Slido application. I would like to thank the speakers again for their time and excellent contributions

today. It was really enlightening, and we have covered three complex topics: the obligations applicable from February 2025, the regulation of GPAI models, and the mandatory requirements for high-risk AI systems and the related standardization process. But this two-part webinar is only the beginning of our exploration of the AI Act, so in 2025 we will delve deeper into these topics with additional sessions. We will focus on different areas: still, of course, obligations and requirements, but also other topics such as ethical considerations

in AI, GPAI and open-source models, and sector-specific applications of the AI Act. Please also help us prepare future webinars by filling in our satisfaction survey; a QR code for it is displayed on your screen right now. Thank you very much. It's time now, so thank you again for your attention, and we look forward to further exchanges. Thank you.

Related Videos

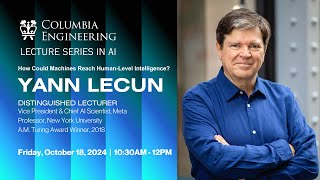

1:26:26

Webinar exploring the Architecture of the ...

DigitalEU

5,311 views

1:26:17

Lecture Series in AI: “How Could Machines ...

Columbia Engineering

58,179 views

1:00:07

Implementing the EU AI Act: Text to Practi...

Holistic AI

4,064 views

1:59:21

CONNECT University on The European Cancer ...

DigitalEU

139 views

1:01:16

Applied Research in VET Project: Lessons l...

EURASHE

307 views

1:30:43

Evolution of software architecture with th...

The Pragmatic Engineer

46,195 views

55:33

What is Agentic AI? Autonomous Agents and ...

CXOTalk

28,042 views

59:42

Michael Levin - Non-neural intelligence: b...

Institute for Pure & Applied Mathematics (IPAM)

17,124 views

1:00:21

Debunking the EU AI Act: an overview of th...

Fieldfisher Data & Privacy Team

16,193 views

57:03

Webinar: Unpacking the AI Act

Deloitte Nederland

5,170 views

58:06

Stanford Webinar - Large Language Models G...

Stanford Online

62,355 views

37:51

Why Did Fed Crash Markets Today? ‘Somethin...

David Lin

179,804 views

18:28

Are We Ready for the AI Revolution? Fmr. G...

Amanpour and Company

71,459 views

1:23:03

Best of BIM 2024 with Gavin Crump and Dana...

BIM Pure

957 views

41:45

Geoff Hinton - Will Digital Intelligence R...

Vector Institute

59,092 views

4:06:01

EDIH Network Summit 2024 | Day 1 |

DigitalEU

748 views

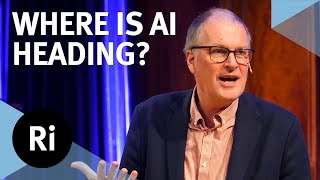

1:00:59

What's the future for generative AI? - The...

The Royal Institution

527,660 views

49:32

The Data Chronicles: The EU AI Act | What ...

Hogan Lovells

4,584 views

1:23:55

Webinar | The Impact of Generative AI on t...

FUSADES EL SALVADOR

20 views

45:46

Geoffrey Hinton | On working with Ilya, ch...

Sana

297,750 views