Kubernetes Explained in 6 Minutes | k8s Architecture

954.53k views | 904 words

ByteByteGo

To get better at system design, subscribe to our weekly newsletter: https://bit.ly/3tfAlYD


Video Transcript:

What is Kubernetes? Why is it called k8s? What makes it so popular?

Let’s take a look. Kubernetes is an open-source container orchestration platform. It automates the deployment, scaling, and management of containerized applications.

Kubernetes can be traced back to Google's internal container orchestration system, Borg, which managed the deployment of thousands of applications within Google. In 2014, Google open-sourced Kubernetes, a new system built on the lessons learned from running Borg.

Why is it called k8s? This is a somewhat nerdy way of abbreviating long words. The number 8 in k8s refers to the 8 letters between the first letter “k” and the last letter “s” in the word Kubernetes.
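The abbreviation rule is mechanical: keep the first and last letters and replace everything in between with the count of those letters. A minimal sketch in Python (the function name `numeronym` is our own, for illustration):

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as: first letter + number of inner letters + last letter."""
    if len(word) <= 3:
        # Too short to gain anything from abbreviating; return it unchanged.
        return word
    inner_count = len(word) - 2  # letters strictly between the first and the last
    # Numeronyms are conventionally written in lowercase (k8s, not K8s).
    return f"{word[0]}{inner_count}{word[-1]}".lower()


print(numeronym("Kubernetes"))  # k8s
```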

Other examples are i18n for internationalization and l10n for localization.

A Kubernetes cluster is a set of machines, called nodes, that are used to run containerized applications. There are two core pieces in a Kubernetes cluster.

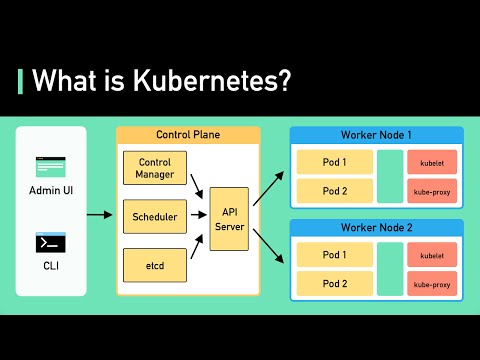

The first is the control plane. It is responsible for managing the state of the cluster. In production environments, the control plane usually runs on multiple nodes that span across several data center zones.
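One common reason for running the control plane on three or five nodes is quorum. In many clusters etcd, described below, runs on the control plane nodes; it uses the Raft consensus algorithm and can only accept writes while a majority of its members is reachable. A quick sketch of the arithmetic (the function names are our own):

```python
def quorum(members: int) -> int:
    """Smallest majority of a Raft cluster with `members` voting members."""
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can fail while a majority is still available."""
    return members - quorum(members)


for n in (1, 3, 4, 5):
    print(f"{n} members: quorum {quorum(n)}, tolerates {failures_tolerated(n)} failure(s)")
```

With three members spread over three zones, losing one zone still leaves a quorum of two. A fourth member raises the quorum to three without tolerating any additional failure, which is why odd sizes are the usual choice.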

The second is a set of worker nodes. These nodes run the containerized application workloads, and those applications run inside Pods.

Pods are the smallest deployable units in Kubernetes. A pod hosts one or more containers and provides shared storage and networking for those containers. Pods are created and managed by the Kubernetes control plane.
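A minimal sketch of what a Pod looks like as an API object, written as a Python dict and printed as JSON (`kubectl apply -f` accepts JSON as well as YAML). The Pod, container, and volume names and the image tags are illustrative assumptions. The two containers share an `emptyDir` volume, and because they share the Pod's network namespace they could also reach each other on `localhost`.

```python
import json

# Hypothetical Pod with two containers that share storage and networking.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar"},
    "spec": {
        # An emptyDir volume lives as long as the Pod and is visible to every container that mounts it.
        "volumes": [{"name": "shared-logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
                # nginx writes its logs into the shared volume.
                "volumeMounts": [{"name": "shared-logs", "mountPath": "/var/log/nginx"}],
            },
            {
                "name": "log-reader",
                "image": "busybox:1.36",
                # Follows the web container's access log from the same volume.
                "command": ["sh", "-c", "touch /logs/access.log && tail -f /logs/access.log"],
                "volumeMounts": [{"name": "shared-logs", "mountPath": "/logs"}],
            },
        ],
    },
}

print(json.dumps(pod, indent=2))
```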

Pods are the basic building blocks of Kubernetes applications.

Now let’s dive a bit deeper into the control plane. It consists of a number of core components.

These components are the API server, etcd, the scheduler, and the controller manager. The API server is the primary interface between the control plane and the rest of the cluster. It exposes a RESTful API that allows clients to interact with the control plane and submit requests to manage the cluster.
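`kubectl` commands ultimately become HTTP requests to this API. A sketch of what listing the Pods in a namespace looks like at the HTTP level, using only Python's standard library. The server address and token are placeholders (assumptions), and the request is only built, not sent.

```python
import urllib.request

# Hypothetical API server address and credential: substitute real values for your cluster.
API_SERVER = "https://kube-api.example.internal:6443"
TOKEN = "<bearer-token>"


def resource_path(group: str, version: str, namespace: str, resource: str) -> str:
    """Build the REST path for a namespaced collection.

    Core resources (Pods, Services, ...) live under /api/<version>;
    resources in named groups (e.g. apps for Deployments) live under /apis/<group>/<version>.
    """
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    return f"{prefix}/namespaces/{namespace}/{resource}"


def list_request(group: str, version: str, namespace: str, resource: str) -> urllib.request.Request:
    """Prepare an authenticated GET for a collection; nothing is sent here."""
    url = API_SERVER + resource_path(group, version, namespace, resource)
    return urllib.request.Request(
        url,
        method="GET",
        headers={"Authorization": f"Bearer {TOKEN}", "Accept": "application/json"},
    )


req = list_request("", "v1", "default", "pods")
print(req.get_method(), req.full_url)
# Sending it would be urllib.request.urlopen(req), which needs a reachable cluster and TLS setup.
```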

etcd is a distributed key-value store that holds the cluster's persistent state. The API server is the only component that reads from and writes to etcd directly; the scheduler, the controller manager, and other components store and retrieve information about the cluster through the API server.
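To illustrate the kind of data etcd holds, here is a toy in-memory key-value store. It is not etcd's real client API; the class and method names are our own. Keys follow the layout Kubernetes uses by default, such as `/registry/pods/<namespace>/<name>`, and every write bumps a store-wide revision number, loosely mirroring etcd's revisions. Values are plain dicts for readability; real Kubernetes stores serialized objects.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToyKVStore:
    """Toy stand-in for etcd (not its real API): a flat key space plus a store-wide revision."""

    data: dict = field(default_factory=dict)  # key -> (value, revision of last write)
    revision: int = 0

    def put(self, key: str, value: dict) -> int:
        # Every write bumps the single store-wide revision.
        self.revision += 1
        self.data[key] = (value, self.revision)
        return self.revision

    def get(self, key: str) -> Optional[dict]:
        entry = self.data.get(key)
        return None if entry is None else entry[0]

    def list_prefix(self, prefix: str) -> list[str]:
        # A prefix range read: "all Pods in namespace X" becomes "all keys under a prefix".
        return sorted(k for k in self.data if k.startswith(prefix))


store = ToyKVStore()
store.put("/registry/pods/default/web", {"phase": "Running"})
store.put("/registry/pods/default/worker", {"phase": "Pending"})
store.put("/registry/pods/staging/cache", {"phase": "Running"})
print(store.list_prefix("/registry/pods/default/"))  # the two Pods in "default"
print(store.revision)  # 3 writes so far
```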

The scheduler is responsible for scheduling pods onto the worker nodes in the cluster. It uses information about the resources required by the pods and the available resources on the worker nodes to make placement decisions. The controller manager is responsible for running controllers that manage the state of the cluster.
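The scheduler's placement decision described above follows a two-step shape: filter out nodes that cannot run the Pod, then score the remaining ones. The toy version below is our own simplification with hypothetical names: it filters on free CPU (millicores) and memory (MiB) against the Pod's requests, then prefers the node with the largest share of resources left after placement. The real kube-scheduler runs many pluggable filter and score plugins (taints, affinity, and more).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_allocatable_m: int      # CPU available to Pods, in millicores
    mem_allocatable_mib: int    # memory available to Pods, in MiB
    cpu_requested_m: int = 0    # CPU already requested by Pods on this node
    mem_requested_mib: int = 0  # memory already requested by Pods on this node


@dataclass
class PodRequest:
    cpu_m: int
    mem_mib: int


def schedule(pod: PodRequest, nodes: list[Node]) -> Optional[str]:
    """Return the chosen node's name, or None if no node fits (the Pod would stay Pending)."""

    def fits(n: Node) -> bool:
        # Filter step: the node must have room for the Pod's requests.
        return (n.cpu_requested_m + pod.cpu_m <= n.cpu_allocatable_m
                and n.mem_requested_mib + pod.mem_mib <= n.mem_allocatable_mib)

    def score(n: Node) -> float:
        # Score step: average fraction of CPU and memory still free after placing the Pod.
        cpu_free = (n.cpu_allocatable_m - n.cpu_requested_m - pod.cpu_m) / n.cpu_allocatable_m
        mem_free = (n.mem_allocatable_mib - n.mem_requested_mib - pod.mem_mib) / n.mem_allocatable_mib
        return (cpu_free + mem_free) / 2

    feasible = [n for n in nodes if fits(n)]
    if not feasible:
        return None
    return max(feasible, key=score).name


nodes = [
    Node("node-a", 2000, 4096, cpu_requested_m=1500, mem_requested_mib=1024),
    Node("node-b", 4000, 8192, cpu_requested_m=1000, mem_requested_mib=6000),
    Node("node-c", 1000, 2048, cpu_requested_m=800),
]
print(schedule(PodRequest(cpu_m=500, mem_mib=512), nodes))
```

Here node-c is filtered out (not enough CPU) and node-b wins on score. Spreading Pods toward the emptiest node is only one policy; packing them onto fewer nodes is another, and kube-scheduler's resource scoring can be configured to favor either.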

Examples of controllers run by the controller manager include the replication controller, which ensures that the desired number of pod replicas is running, and the deployment controller, which manages rolling updates and rollbacks of deployments.

Next, let’s dive deeper into the worker nodes. The core Kubernetes components that run on the worker nodes are the kubelet, the container runtime, and the kube-proxy.

The kubelet is a daemon that runs on each worker node. It is responsible for communicating with the control plane. It receives instructions from the control plane about which pods to run on the node, and ensures that the desired state of the pods is maintained.
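The kubelet, like the controllers in the controller manager, works as a control loop: observe the actual state, compare it with the desired state, and act on the difference. A toy sketch of one pass of that loop for the kubelet's case; the function name, Pod names, and `("start" | "stop", pod)` action tuples are hypothetical, and the real kubelet also handles restarts, health probes, volumes, and much more.

```python
def sync_pods(desired: set[str], running: set[str]) -> list[tuple[str, str]]:
    """One reconciliation pass: the actions needed to make `running` match `desired`."""
    actions = []
    # Pods assigned to this node that are not running yet: start them.
    for pod in sorted(desired - running):
        actions.append(("start", pod))
    # Pods running here that are no longer wanted: stop them.
    for pod in sorted(running - desired):
        actions.append(("stop", pod))
    return actions


desired = {"web-1", "web-2", "cache-1"}  # what the control plane assigned to this node
running = {"web-1", "old-job-7"}         # what the container runtime reports
print(sync_pods(desired, running))
```

Once those actions succeed, the next pass returns an empty list; the loop keeps running and acts only when the two sets drift apart. The replication controller applies the same idea to a replica count instead of a set of Pod names.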

The container runtime, such as containerd or CRI-O, runs the containers on the worker nodes. It is responsible for pulling container images from a registry, starting and stopping containers, and managing their resources. The kube-proxy is a network proxy that runs on each worker node.
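The kube-proxy configures each node so that traffic addressed to a Kubernetes Service (a stable address in front of a set of pods) reaches one of the pods behind it. A toy round-robin picker shows the load-balancing idea; the class name and pod addresses are made up. How the real kube-proxy chooses a backend depends on its mode: IPVS mode defaults to round-robin, while iptables mode picks a backend at random.

```python
import itertools


class RoundRobinBalancer:
    """Toy load balancer: hands out a Service's backend pod addresses in turn."""

    def __init__(self, endpoints: list[str]):
        if not endpoints:
            raise ValueError("a Service with no ready endpoints has nowhere to send traffic")
        self._cycle = itertools.cycle(endpoints)

    def pick(self) -> str:
        # Each new connection goes to the next backend in the cycle.
        return next(self._cycle)


# Hypothetical endpoints (pod IP:port) behind a hypothetical Service.
balancer = RoundRobinBalancer(["10.244.1.5:8080", "10.244.2.7:8080", "10.244.3.9:8080"])
print([balancer.pick() for _ in range(4)])
```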

The kube-proxy is responsible for routing traffic to the correct pods. It also provides load balancing, spreading the traffic sent to a Service (a stable address in front of a set of pods) across the pods behind it.

So when should we use Kubernetes?

As with many things in software engineering, this is all about tradeoffs. Let’s look at the upsides first. Kubernetes is scalable and highly available.
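One concrete mechanism behind this scalability is the Horizontal Pod Autoscaler (HPA), which adjusts how many replicas of a pod run. Its documentation gives the core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below applies that rule with min/max bounds; the function name and parameters are our own, and the 0.1 tolerance mirrors the HPA's documented default (no change while the ratio stays within 10% of 1.0).

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count from the HPA-style ratio rule, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count alone to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, proposed))


# 3 replicas averaging 90% CPU against a 60% target: ceil(3 * 1.5) = 5.
print(desired_replicas(3, current_metric=90, target_metric=60))
```

The real HPA layers more on top of this rule, such as stabilization windows and handling of missing metrics.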

Kubernetes provides features like self-healing, automatic rollbacks, and horizontal scaling. It makes it easy to scale our applications up and down as needed, allowing us to respond to changes in demand quickly. Kubernetes is portable.

It helps us deploy and manage applications in a consistent and reliable way regardless of the underlying infrastructure. It runs on-premises, in a public cloud, or in a hybrid environment. It provides a uniform way to package, deploy, and manage applications.

Now how about the downsides? The number one drawback is complexity. Kubernetes is complex to set up and operate.

The upfront cost is high, especially for organizations new to container orchestration. It requires a high level of expertise and resources to set up and manage a production Kubernetes environment. The second drawback is cost.

Kubernetes requires a certain minimum level of resources to run in order to support all the features we mentioned above. It is likely overkill for many smaller organizations. One popular option that strikes a reasonable balance is to offload the management of the control plane to a managed Kubernetes service.

Managed Kubernetes services are provided by cloud providers. Some popular ones are Amazon EKS, GKE on Google Cloud, and AKS on Azure. These services let organizations run their Kubernetes applications without having to operate the control plane infrastructure themselves.

They take care of tasks that require deep expertise, such as setting up and configuring the control plane, scaling the cluster, and providing ongoing maintenance and support. This is a reasonable option for a mid-size organization that wants to try out Kubernetes. For a small organization, our recommendation is YAGNI: You Ain’t Gonna Need It.

If you would like to learn more about system design, check out our books and weekly newsletter. Please subscribe if you learn something new. Thank you and we'll see you next time.

Related Videos

![Kubernetes Crash Course for Absolute Beginners [NEW]](https://img.youtube.com/vi/s_o8dwzRlu4/mqdefault.jpg)

1:12:04

Kubernetes Crash Course for Absolute Begin...

TechWorld with Nana

2,788,281 views

15:18

Kubernetes Explained in 15 Minutes | Hands...

Travis Media

87,412 views

9:54

System Design Interview: A Step-By-Step Guide

ByteByteGo

671,321 views

8:04

Kubernetes vs. Docker: It's Not an Either/...

IBM Technology

1,137,190 views

7:42

Cloud Native Architecture Fundamentals - I...

DiveInto with James Spurin

12,145 views

6:09

What is RPC? gRPC Introduction.

ByteByteGo

498,836 views

6:14

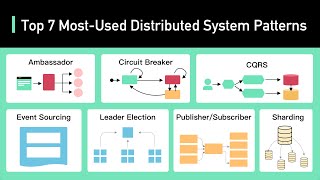

Top 7 Most-Used Distributed System Patterns

ByteByteGo

250,788 views

4:54

What Is Single Sign-on (SSO)? How It Works

ByteByteGo

599,820 views

9:31

Kubernetes Architecture Simplified | K8s E...

KodeKloud

347,146 views

5:02

System Design: Why is Kafka fast?

ByteByteGo

1,132,526 views

5:17

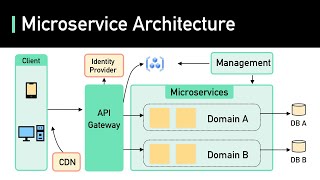

Microservices Explained in 5 Minutes

5 Minutes or Less

746,251 views

14:13

What is Kubernetes | Kubernetes explained ...

TechWorld with Nana

1,301,607 views

7:53

Apache vs NGINX

IBM Technology

296,203 views

4:45

What Are Microservices Really All About? (...

ByteByteGo

675,843 views

14:32

NGINX Tutorial - What is Nginx

TechWorld with Nana

158,845 views

29:34

you need to learn Kubernetes RIGHT NOW!!

NetworkChuck

1,224,475 views

5:17

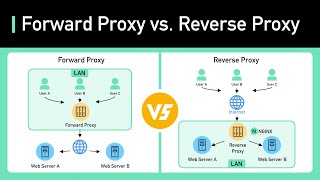

Proxy vs Reverse Proxy (Real-world Examples)

ByteByteGo

558,059 views

6:05

Top 7 Ways to 10x Your API Performance

ByteByteGo

329,663 views

8:28

100+ Docker Concepts you Need to Know

Fireship

957,783 views

10:59

Kubernetes Explained

IBM Technology

620,511 views