How AI Image Generation Works

7.88k views2483 WordsCopy TextShare

AltexSoft

Generating something from a written prompt is difficult enough, but modern AI tools like DALL-E, Sta...

Video Transcript:

The Uncanny Valley is an effect explaining the uneasy feeling we get from seeing images that closely resemble humans but are not quite realistic from wax figures in museums to humanoid looking robots we can typically spot the slightest Divergence from normal human faces and movements and feel uncomfortable wary of them these faces however don't produce quite the same uneasy feeling from us they may seem stilted and the details May indicate that this is not actually a photo but overall AI has managed to generate very convincing human portraits portraits of people that don't exist but definitely could

although these are fascinating examples you may notice that they're randomized to generate something from a written prompt is an entirely different deal but modern AI tools like DOL e stable diffusion and mid Journey seem to be conquering that challenge as well how do they do that the earliest technology to produce realistic images like that has been generative adversarial networks or Gans computer scientist Ian Goodfellow came up with the idea for Gans One Night in a bar in 2014 by that time an AI technique called Deep learning was all the rage deep learning uses neural networks

similar to those humans have to recognize objects on photos or videos or or words in a spoken sentence deep learning has shown great results in medicine identifying diseases on medical images in finance and e-commerce to uncover fraudulent transactions in Virtual assistants like Siri or Alexa to understand human speech one of the most prominent deep learning advancements was detecting humans cars and road signs in self-driving cars basically back then the technology was pretty good at describing objects in photos but not vice versa creating them from descriptions at that time Ian Goodfellow was interning at Google creating

a deep neural network capable of reading address numbers from images from street view so when he entered a popular student bar to celebrate his friend's graduation he was naturally involved in a tricky computer science topic I was at a bar with some friends at a going away party and we were arguing about how to get over this particular barrier so some of my friends are working on a different algorithm that can learn from unlabeled data and I was saying that their idea wasn't likely to work but a a different version of it that ended up

being Gans uh could actually do what they were hoping to do his idea was different what if you pit two neural networks against each other so I actually went straight home from the bar and coed them up that evening and it worked what does it mean to pit two models against each other think about how people learn imagine you're teaching a child about different animals you show them a picture of a horse and they must say what this animal is called if the child says that it's a dog we correct them and tell them that

it's a horse then the next time a child sees the picture of a horse they must be able to give a correct answer in machine learning this method is called supervised learning when a machine tries to identify the subject on an image the training data contains what the expect output should be and then the model adjusts Its Behavior to minimize the difference between its guess and the desired output but when it comes to imagining a new picture there's no desired output to compare it to we can only assess if the imagined picture is good or

not so instead of having a person judging every generated image we assign this task to another neural network that's the trick with generative adversarial networks one network is called a generator and it tries to generate realistic images another model is called a discriminator and it's fed both generated images and real ones so it tries to guess which ones are real at first both of them are really bad at their job a generator receives random noise as an input so it will start by creating something random as well a discriminator won't know synthetic from real data

at first but by not being able to guess correctly it will update itself for a new iteration as the generator learns from its mistakes it starts producing convincingly good results and as soon as both discriminator and human are fooled to believe the generated image is real the learning process is considered a success this is why they're called generative adversarial networks by playing against an adversary a generator learns to make realistic images images that don't look like training data but are rather completely new images that seem to be real in some way gansz are AI models

that that have imagination as we've already seen ganss are particularly good at generating faces as well as animals or Landscapes they've also been pretty popular for image editing for example aging people up Changing Horses to zebras or transferring a famous painters art style onto real photos Gans allowed us to experience the world where computers are capable of making up images but those images are not actually generated from complex written prompts the way we're used to today that's because state-of-the-art image generators have an important component for understanding human text how does it work you've probably already

had a few conversations with AI Siri Google or Amazon Alexa have been with us for a while and apart from some unfortunate fails they've become very good at giving us what we want the branch of AI tasked to teach machines to understand human speech is called natural language processing or NLP and for such complex tasks as image generation we need a model that correctly interprets all nuances of speech it's one thing to create an image of a duck but what if you want a duck with green rain boots and an umbrella the machine must understand

that boots are apparel and the duck must be wearing them and an umbrella is something you hold above your head so the task is not just translating human words into machine language but populating each word with context characteristics that indicate how the thing that this word describes should be portrayed one of the earliest ideas for generating images from a written description was to use captions if deep learning models are so good at describing what they see on the image maybe we could reverse the process too most images on the internet are accompanied by textual descriptions

so it only makes sense to train AI not only with images but also with their corresponding captions being trained on the pairs of images plus descriptions the model learns to extract important features and their relationships with one another of course this is done using numbers and not just numbers but vectors they are coordinates of particular Concepts in the multi-dimensional space the duck for example can have a myriad of characteristics or Dimensions it's a living being a bird it's typically small it can walk and swim and fly each of these characteristics is a separate dimension and

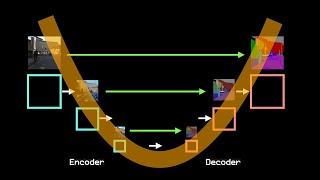

the word duck is placed somewhere in the space now the word duckling is very similar in meaning and shares most of the same characteristics so it would be placed very close to the word duck in the same space other animals might be close too while humans can be a bit farther and words for different objects or even Concepts even farther from them all there are thousands of images of ducks the model is trained with and they're all pretty different representations of the same same thing to simplify matters and make similar objects closer to each other

we reduce the dimensions and place data into a latent space a compressed and more abstract representation of the original multi-dimensional space this way thousands of ducks with their own set of characteristics become one idea of a duck that shares them all this way when tasked to generate a duck a model doesn't copy any particular image from the data set but crafts a completely new one from the combination of the characteristics that it captured now as we understand how computers apply context to the words they receive as prompt let's talk about the state-of-the-art technology that overthrew

gans's and Powers modern AI generation tools meet diffusion models just a year after Goodfellow came up with the idea of Gans a physicist Yasha Soul dixin invented diffusion models by by that time he already spent a few years helping NASA send Rovers to Mars and came to Stanford to research non-equilibrium thermodynamics computer science was actually his side interest but he managed to make a breakthrough thanks to his physics background so what are diffusion models and what are their connections to physics you know how when you spray some perfume in a room and the fragrance is

really strong in one part of the room but then it gets dispersed leaving only a trace behind in physics this principle is called diffusion and non-equilibrium thermodynamics actually describes the probability of finding a molecule of perfume in the room at each step of the diffusion process inspired by this concept Soul dixin applied it to generative modeling what if images were turned into noise just like perfume disperses in a room and then the machine learned to reverse the process and turn the noise back into images here's how it works first a diffusion model takes a training

image and starts adding noise to it with each step the image loses its details until it becomes only noise the idea is that the model learns what the image looks like at each stage so it can then reconstruct it during the reversal process the model tries to predict what the next less noisy image will look like at first the results will look far from the original image but you tweak the parameters so with each iteration the model does better until it finally shapes into a new image diffusion models quickly took the machine learning World by

storm by having multiple steps in the denoising process they can create extremely detailed images and the technology has been advancing at immense speed diffusion models are used not only to create new images but also to add generated objects to existing photos or extend them in just a few clicks and although they can deliver nonsense results in many cases with the right prompts used the outcome is mindblowing so let's talk about the tools that are powered by this technology these are the images generated by the first version of do e in 2021 before it used diffusion

models and this is the image created by the same prompt today by the most recent version do E3 the level of detail the realism and the consistency of art style is striking today do e by open AI the same people behind chat GPT is one of the most advanced image generation models one of the ways they managed to improve the model is by improving the data set with AI typically AI models are trained using pairs of images and corresponding captions from the internet of course quite often those captions are not as detailed or even remotely

helpful so for DOL E3 open AI used captions generated by a language model that can produce accurate descriptions on a whim for instance we've asked DOL E3 to create an image of a cat wearing sunglasses on a spacecraft and the model expanded The Prompt describing the sleek and metallic interior of the spacecraft the cat's fluffy coat and an air of confidence and the background with stars and distant planets another popular AI tool is mid Journey which entered open Beta in 2022 it's become one of the most well-known tools thanks to the controversy when an image

created using mid Journey won a fine art competition allegedly it also uses diffusion to generate images and although we don't know exactly how it works we can assume that thanks to the way users interact with generated images the model receives a lot of feedback on which images perform best and apply it to improve another popular tool is stable diffusion by stability AI released in 2022 it actually introduced the idea of latent diffusion models which we talked about before thanks to that image Generation Now happens much faster it's also the only Big Tool that's open source

and you can run on your own computer it has several built-in tools and you can download custom models and have more control over your images despite the fact that all three tools use the same diffusion technology they all produce different results due to different data sets and embedding processes some experienced users can even guess what tool would was used for what image let's check to see if you can guess which too which of these images do you think was generated by which tool press pause if you need more time now this night was created by

midj Journey this faceless hero by stable diffusion and the final Knight belongs to Dolly did you get any of them right AI still has a lot to learn before it can produce truly errorless pictures also many generated pictures today have the specific AI look that makes them easy to pinpoint if you've seen enough of them but the generic AI style and misunderstandings can be overcome with the right prompts an AI prompt engineer is a specialist that understands how NLP works and uses a variety of techniques to get the most value from generated results these capabilities

introduced us to the concept of AI assisted art where us users can get unique results by crafting complex prompts of course there's more to say on the topic of ethics and value of such art which we plan to explore in one of our future videos it's clear that we've accepted AI generated images in our work and personal lives we use it for inspiration and brainstorming for sharing ideas for enhancing images and videos and just for fun you can see our video on generative Ai and business for more ideas on using it just like any breakthrough

technology it poses a lot of questions that we are yet to answer and will require a lot of understanding and skills from us to benefit from it but this is only a matter of time join us as we explore the many sides of AI generated content and let us know in the comments what you'd like us to cover next and of course don't forget to subscribe and leave a like if you've enjoyed this video we'll see you soon [Music]

Related Videos

17:57

Generative AI in a Nutshell - how to survi...

Henrik Kniberg

2,611,832 views

16:02

10 AI Animation Tools You Won’t Believe ar...

Futurepedia

660,630 views

14:15

How Generative AI Works

AltexSoft

6,732 views

24:23

The Breakthrough Behind Modern AI Image Ge...

Depth First

1,926 views

30:21

How Stable Diffusion Works (AI Image Gener...

Gonkee

163,045 views

28:47

AI Art Explained: How AI Generates Images ...

Jay Alammar

37,904 views

20:38

Prompt Engineering Tutorial: Text-to-Image...

Learn With Shopify

16,802 views

27:35

DALLE-3 Masterclass: Everything You Didn’t...

AI cents

124,554 views

17:50

How AI Image Generators Work (Stable Diffu...

Computerphile

960,651 views

27:14

Transformers (how LLMs work) explained vis...

3Blue1Brown

4,340,109 views

16:10

8 AI Tools I Wish I Tried Sooner

Futurepedia

242,776 views

13:33

AI art, explained

Vox

2,480,530 views

17:58

This new type of illusion is really hard t...

Steve Mould

772,310 views

19:32

The 8 AI Skills That Will Separate Winners...

Liam Ottley

762,141 views

10:31

The U-Net (actually) explained in 10 minutes

rupert ai

135,124 views

18:05

How AI 'Understands' Images (CLIP) - Compu...

Computerphile

224,679 views

15:28

What are Diffusion Models?

Ari Seff

244,821 views

7:08

Diffusion models explained in 4-difficulty...

AssemblyAI

140,804 views

53:17

This AI Technology Will Replace Millions (...

Liam Ottley

225,472 views

37:22

You don't understand AI until you watch this

AI Search

1,016,778 views