How is data prepared for machine learning?

67.4k views · 2275 words

AltexSoft

Data is one of the main factors determining whether machine learning projects will succeed or fail. ...

Video Transcript:

In 2014, Amazon started working on its experimental ML-driven recruitment tool. Similar to the Amazon rating system, the hiring tool was supposed to give job applicants scores ranging from one to five stars when screening resumes for the best candidates. Yeah, the idea was great, but it seemed that the machine learning model only liked men. It penalized all resumes containing the word "women's," as in "women's softball team captain." In 2018, Reuters broke the news that Amazon had eventually shut down the project.

Now, the million-dollar question: how come Amazon's machine learning model turned out to be sexist? A: AI goes rogue. B: inexperienced data scientists. C: faulty data set. D: Alexa gets jealous. The correct answer is C, faulty data set. Not exclusively, of course, but data is one of the main factors determining whether ML projects will succeed or fail. In the case of Amazon, models were trained on 10 years' worth of resumes submitted to the company, for the most part by men.

So here's another million-dollar question: how is data prepared for machine learning? All the magic begins with planning and formulating the problem that needs to be solved with the help of machine learning, pretty much the same as with any other business decision. Then you start constructing a training data set and stumble on the first rock: how much data is enough to train a good model? Just a couple of samples, thousands of them, or even more? The thing is, there's no one-size-fits-all formula to help you calculate the right size of data set for a machine learning model. Here, many factors play their role, from the problem you want to address to the learning algorithm you apply within the model. The simple rule of thumb is to collect as much data as possible, because it's difficult to predict which and how many data samples will bring the most value. In simple words, there should be a lot of training data.

Well, "a lot" sounds a bit too vague, right? Here are a couple of real-life examples for a better understanding. You know Gmail from Google,
right? Its Smart Reply suggestions save time for users, generating short email responses right away. To make that happen, the Google team collected and pre-processed a training set that consisted of 238 million sample messages, with and without responses. As for Google Translate, it took trillions of examples for the whole project. But it doesn't mean you also need to strive for these huge numbers. I-Cheng Yeh, a Tamkang University professor, used a data set consisting of only 630 data samples. With them, he successfully trained a neural network model to accurately predict the compressive strength of high-performance concrete. As you can see, the size of training data depends on the complexity of the project in the first place.

At the same time, it is not only the size of the data set that matters but also its quality. What can be considered quality data? The good old principle "garbage in, garbage out" states that a machine learns exactly what it's taught. Feed your model inaccurate or poor-quality data, and no matter how great the model is, how experienced your data scientists are, or how much money you spend on the project, you won't get any decent results. Remember Amazon? That's what we're talking about.

Okay, it seems that the solution to the problem is kind of obvious: avoid the "garbage in" part and you're golden. But it's not that easy. Say you need to forecast turkey sales during the Thanksgiving holidays in the U.S., but the historical data you're about to train your model on encompasses only Canada. You may think, "Thanksgiving here, Thanksgiving there, what's the difference?" To start with, Canadians don't make that big of a fuss about turkey. The bird suffers an embarrassing loss in the battle to pumpkin pies. Also, the holiday isn't observed nationwide, not to mention that Canada celebrates Thanksgiving in October, not November. Chances are such data is just inadequate for the U.S. market. This example shows how important it is to ensure not only the high quality of data but also its adequacy to the set task.

Then the selected data has to be transformed into the most digestible form for a model, so you need data preparation. For instance, in supervised machine learning you inevitably go through a process called labeling. This means you show a model the correct answers to the given problem by leaving corresponding labels within a data set. Labeling can be compared to how you teach a kid what apples look like. First, you show pictures and you say that these are, well, apples. Then you repeat the procedure. When the kid has seen enough pictures of different apples, the kid will be able to distinguish apples from other kinds of fruit.

Okay, what if it's not a kid that needs to detect apples in pictures but a machine? The model needs some measurable characteristics that will describe data to it. Such characteristics are called features. In the case of apples, the features that differentiate apples from other fruit in images are their shape, color, and texture, to name a few. Just like the kid, when the model has seen enough examples of the features it needs to predict, it can apply learned patterns and decide on new data inputs on its own.

When it comes to images, humans must label them manually for the machine to learn from. Of course, there are some tricks, like what Google does with their reCAPTCHA. Yeah, just so you know, you've been helping Google build its database for years, every time you proved you weren't a robot. But labels can be already available in data. For instance, if you're building a model to predict whether a person is going to repay a loan, you'd have the loan repayment and bankruptcy history.

Anyway, it's so cool and easy in an ideal world. In practice, there may be issues like mislabeled data samples. Getting back to our apple recognition example: well, you see that a third of the training images shows peaches marked as apples. If you leave it like that, the
model will think that peaches are apples too, and that's not the result you're looking for. So it makes sense to have several people double-check or cross-label the data set.

Of course, labeling isn't the only procedure needed when preparing data for machine learning. One of the most crucial data preparation processes is data reduction and cleansing. Wait, what? Reduce data? Clean it? Shouldn't we collect all the data possible? Well, you do need to collect all possible data, but it doesn't mean that every piece of it carries value for your machine learning project. So you do the reduction to put only relevant data in your model.

Picture this: you work for a hotel and want to build an ML model to forecast customer demand for twin and single rooms this year. You have a huge data set with different variables, like customer demographics and information on how many times each customer booked a particular hotel room last year. What you see here is just a tiny piece of a spreadsheet. In reality, there may be thousands of columns and rows. Let's imagine that the columns are dimensions in a 100-dimensional space, with rows of data as points within that space. It will be difficult to do, since we are used to three space dimensions, but each column is really a separate dimension here, and it's also a feature fed as input to a model.

The thing is, when the number of dimensions is too big and some of those aren't very useful, the performance of machine learning algorithms can decrease. Logically, you need to reduce the number, right? That's what dimensionality reduction is about. For example, you can completely remove features that have zero or close to zero variance, like in the case of the country feature in our table. Since all customers come from the US, the presence of this feature won't make much impact on the prediction accuracy. There's also redundant data, like the year of birth feature, as it presents the same info as the age variable. Why use both if it's basically a duplicate?

Another common
pre-processing practice is sampling. Often you need to prototype solutions before actual production. If collected data sets are just too big, they can slow down the training process, as they require larger computational and memory resources and take more time for algorithms to run on. With sampling, you single out just a subset of examples for training instead of using the whole data set right away, speeding up the exploration and prototyping of solutions. Sampling methods can also be applied to solve the imbalanced data issue, involving data sets where the class representation is not equal. That's the problem Amazon had when building their tool: the training data was imbalanced, with the prevailing part of resumes submitted by men, making female resumes a minority class. The model would have provided less biased results if it had been trained on a sampled training data set with a more equal class distribution made prior.

What about cleaning, then? Data sets are often incomplete, containing empty cells, meaningless records, or question marks instead of necessary values, not to mention that some data can be corrupted or just inaccurate. That needs to be fixed. It's better to feed a model with imputed data than leave blank spaces for it to speculate. As an example, you fill in missing values with selected constants or some predicted values based on other observations in the data set. As for corrupted or inaccurate data, you simply delete it from a set.

Okay, data is reduced and cleansed. Here comes another fun part: data wrangling. This means transforming raw data into a form that best describes the underlying problem to a model. The step may include such techniques as formatting and normalization. Well, these words sound too techy, but they aren't that scary. Data combined from multiple sources may not be in a format that fits your machine learning system best. For example, collected data comes in XLS file format, but you need it to be in a plain text format like .csv, so you perform formatting. In
addition to that, you should make all data instances consistent throughout the data sets. Say, a state in one system could be "Florida"; in another, it could be "FL." Pick one and make it a standard.

You may have different data attributes with numbers of different scales presenting quantities like pounds, dollars, or sales volumes. For example, you need to predict how much turkey people will buy during this year's Thanksgiving holiday. Consider that your historical data contains two features: the number of turkeys sold and the amount of money received from the sales. But here's the thing: the turkey quantity ranges from 100 to 900 per day, while the amount of money ranges from 1,500 to 13,000. If you leave it like this, some models may consider that money values have higher importance to the prediction because they are simply bigger numbers. To ensure each feature has equal importance to model performance, normalization is applied. It helps unify the scale of figures, from, say, 0.0 to 1.0 for the smallest and largest value of a given feature. One of the classical ways to do that is the min-max normalization approach. For example, if we were to normalize the amount of money, the minimum value, 1,500, is transformed into 0; the maximum value, 13,000, is transformed into 1. Values in between become decimals: say, 2,700 will be 0.1, and 7,000 will become 0.
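
Min-max normalization maps each value x to (x - min) / (max - min), so the smallest value lands on 0.0 and the largest on 1.0. The following is a minimal sketch of that formula in plain Python, not code from the video: the function name `min_max_normalize` and the sample list `money` are assumptions for illustration, and only the four revenue figures are taken from the transcript.

```python
def min_max_normalize(values):
    """Rescale values linearly so the smallest maps to 0.0 and the largest to 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical, so there is no spread to rescale.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical revenue column built from the figures in the transcript.
money = [1500, 2700, 7000, 13000]
normalized = min_max_normalize(money)

for raw, scaled in zip(money, normalized):
    print(f"{raw:>6} -> {scaled:.3f}")
```

With these inputs, 2,700 comes out at roughly 0.104, which matches the 0.1 quoted above, and 7,000 at roughly 0.478. In practice a library scaler would usually be fitted on the training data only and then reused on new data, so that both share the same minimum and maximum.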

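Going back to the reduction and cleaning steps described earlier in the transcript, here is a rough sketch of how they might look on a tiny table. Everything in it is an assumption made for illustration: the rows, the column names, and the helper functions are invented, the redundant `year_of_birth` column is picked by hand rather than detected, and mean imputation is just one of the fill-in options the transcript mentions.

```python
from statistics import mean

# Hypothetical hotel data; None marks a missing value.
rows = [
    {"country": "US", "age": 34, "year_of_birth": 1990, "bookings": 3},
    {"country": "US", "age": 51, "year_of_birth": 1973, "bookings": None},
    {"country": "US", "age": 27, "year_of_birth": 1997, "bookings": 1},
    {"country": "US", "age": 45, "year_of_birth": 1979, "bookings": 5},
]


def drop_constant_columns(rows):
    """Remove columns that hold a single value in every row (zero variance)."""
    constant = {col for col in rows[0] if len({row[col] for row in rows}) == 1}
    return [{k: v for k, v in row.items() if k not in constant} for row in rows]


def drop_columns(rows, names):
    """Remove columns judged redundant, such as a duplicate of another feature."""
    return [{k: v for k, v in row.items() if k not in names} for row in rows]


def impute_mean(rows, column):
    """Fill missing values in one column with the mean of the known values."""
    fill = mean(row[column] for row in rows if row[column] is not None)
    return [
        {**row, column: fill if row[column] is None else row[column]}
        for row in rows
    ]


reduced = drop_columns(drop_constant_columns(rows), {"year_of_birth"})
cleaned = impute_mean(reduced, "bookings")
print(cleaned)
```

The `country` column disappears because every customer is from the US, `year_of_birth` goes because it repeats what `age` already says, and the one missing `bookings` value is replaced by the average of the other three.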
Related Videos

11:45

What is a Machine Learning Engineer

AltexSoft

80,972 views

49:43

Python Machine Learning Tutorial (Data Sci...

Programming with Mosh

3,015,716 views

17:40

Data Storage for Analytics and Machine Lea...

AltexSoft

3,727 views

29:19

How to Do Data Exploration (step-by-step t...

Mısra Turp

119,273 views

26:43

How I'd Learn Data Science In 2024 (If I C...

Data Nash

74,939 views

13:07

I Studied Data Job Trends for 24 Hours to ...

Thu Vu data analytics

284,731 views

26:09

Computer Scientist Explains Machine Learni...

WIRED

2,427,801 views

11:12

Roles in Data Science Teams

AltexSoft

57,626 views

12:01

Fraud Detection: Fighting Financial Crime ...

AltexSoft

72,234 views

14:14

How Data Engineering Works

AltexSoft

466,235 views

16:30

All Machine Learning algorithms explained ...

Infinite Codes

401,506 views

17:57

Generative AI in a Nutshell - how to survi...

Henrik Kniberg

2,436,348 views

15:12

FASTEST Way to Learn Modern GIS and ACTUAL...

Matt Forrest

123,029 views

8:45

AI Machine Learning Roadmap: Self Study AI!

Exaltitude

107,166 views

13:11

ML Was Hard Until I Learned These 5 Secrets!

Boris Meinardus

343,898 views

46:02

What is generative AI and how does it work...

The Royal Institution

1,157,679 views

24:20

"okay, but I want Llama 3 for my specific ...

David Ondrej

258,225 views

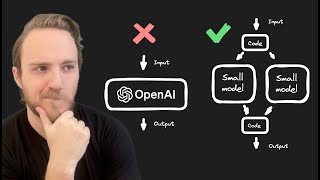

10:24

Training Your Own AI Model Is Not As Hard ...

Steve (Builder.io)

615,569 views

13:42

Beyond the Numbers: A Data Analyst Journey...

TEDx Talks

901,799 views

29:57

Data Engineering Principles - Build framew...

PyData

156,640 views