Your Guide to Quantum Circuits and Qiskit: Quantum Computing in Practice


Qiskit

This episode will go over IBM Quantum hardware and Qiskit software. We will discuss the Qiskit primi...

Video Transcript:

Hi, my name is Olivia Lanes, and welcome back to Quantum Computing in Practice. This is episode two, Quantum Circuits. We're going to be covering several topics in today's lecture, but what I want you to walk away knowing are the following three things. One: what IBM quantum computers actually are, so that you at least have a rough picture in your mind of what you're doing when you send a job to one of them over the cloud. Two: what Qiskit is, what primitives are, and how we can use them to create and execute quantum circuits. We'll

also discuss at a high level the different types of IBM Quantum processors available and what distinguishes one from another. And three, lastly: the typical workflow that we follow to run experiments at scale. This includes selecting the best primitives for your use case, mapping a problem to a quantum circuit, and applying error suppression and mitigation, which enable us to squeeze as much power out of these machines as possible. Now, there's a lot of information here, but stick with me, because soon enough we're going to put it all together in the context of some real examples. First,

let's briefly discuss qubits and the actual quantum hardware. As an experimentalist, I can promise you that it is fascinating, and it really helps connect the dots to visualize what's actually happening when jobs are sent to a quantum computer through the cloud. The processors that we're going to be using are built with superconducting transmon qubits. Here's what a small IBM quantum processor looks like. These small squares here are the qubits themselves, so there are a total of five on this chip. The transmon itself is essentially a capacitor: it consists of two plates of metal hooked

up in parallel with a nonlinear inductor called the Josephson junction. But to see the Josephson junction, you actually have to zoom in even more. It's created from two overlapping layers of superconducting metal, and where the two pieces overlap, separated by an extremely thin insulating barrier, is the junction. A Josephson junction is an element that can be used to create an artificial atom with multiple energy levels, and we isolate the bottom two energy levels as our qubit states. The squiggly lines here represent the microwave resonators, which are basically 2D transmission lines coupled to the qubit. Now, when we program a gate, that essentially

equates to choosing a highly calibrated microwave pulse, and the specific frequency, amplitude, shape, and duration of those pulses make the qubit do specific things. The instructions for the microwave pulse go from your computer to the cloud and are then read and interpreted by room-temperature control electronics, which take those instructions and physically generate the pulses. We call them microwave pulses because their frequencies fall in the microwave band, at a few gigahertz for these qubits. After the room-temperature control boxes create the pulses, they travel through the cables into the dilution refrigerator and eventually to our quantum

chip. The signal goes into the resonators through a wire bond on these pads and then flows down the transmission line into our qubits. Now, I know this all might have been a little technical, and these details aren't crucial for us going forward, but I hope you can now at least picture the physical system that you're about to interact with. So where do these quantum computers actually exist? Well, IBM has dozens of quantum computers all around the world, and we've recently upgraded our fleet to contain only 100-plus-qubit processors.
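The idea that a gate is a calibrated microwave pulse can be made concrete with an idealized numerical sketch. It assumes a noiseless two-level qubit driven exactly on resonance, described in the rotating frame under the rotating-wave approximation, where the drive Hamiltonian is H(t) = Ω(t)σx/2 (with ħ = 1). In that idealization the net rotation angle about x equals the area under the envelope Ω(t), so a Gaussian envelope scaled to area π flips |0⟩ to |1⟩ like an X gate, and area π/2 gives an equal superposition. The helper names, duration, width, and step count are hypothetical illustrative values, not IBM calibration data, and real calibrated pulses include refinements (such as DRAG) that this sketch ignores.

```python
import numpy as np

SIGMA_X = np.array([[0, 1], [1, 0]], dtype=complex)


def gaussian_pulse(area, duration=160.0, sigma=40.0, n_steps=400):
    """Hypothetical helper: sampled Gaussian envelope Omega(t) whose area equals `area`, plus the step size."""
    dt = duration / n_steps
    t = (np.arange(n_steps) + 0.5) * dt  # midpoint of each piecewise-constant step
    envelope = np.exp(-0.5 * ((t - duration / 2) / sigma) ** 2)
    amplitude = area / (envelope.sum() * dt)  # scale so that sum(Omega * dt) == area
    return amplitude * envelope, dt


def excited_population(omega, dt):
    """Evolve |0> under the idealized H(t) = Omega(t) / 2 * sigma_x (hbar = 1) and return P(|1>)."""
    state = np.array([1, 0], dtype=complex)
    for w in omega:
        half_angle = w * dt / 2
        # exp(-i * half_angle * sigma_x): exact propagator for one constant step
        step = np.cos(half_angle) * np.eye(2) - 1j * np.sin(half_angle) * SIGMA_X
        state = step @ state
    return float(abs(state[1]) ** 2)


pi_pulse, dt = gaussian_pulse(area=np.pi)          # X-like pulse: full |0> -> |1> flip
half_pi_pulse, _ = gaussian_pulse(area=np.pi / 2)  # half the area: equal superposition
print(f"area pi:   P(1) = {excited_population(pi_pulse, dt):.6f}")       # 1.000000
print(f"area pi/2: P(1) = {excited_population(half_pi_pulse, dt):.6f}")  # 0.500000
```

Because every step rotates about the same x axis, the steps commute and only the total area matters: `gaussian_pulse(area=np.pi, duration=320.0, sigma=80.0)` produces the same ideal flip with a longer, weaker pulse.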

As I mentioned in the previous lesson, some of them are located in an IBM Quantum data center in upstate New York. Those systems are deployed over the cloud for everyone's use, and some of them are dedicated on-premises systems, complete with all of the electronics to make them work, that support our partners in the IBM Quantum Network. Now let's talk about Qiskit, which is IBM's open-source software development kit designed to work on quantum computers. From now on I'll be referring to this as the Qiskit SDK. There's also the Qiskit ecosystem, which is a collection

of software, tutorials, and functions that build on or extend the core functionality of Qiskit, as well as Qiskit Runtime, which is a quantum computing service and programming model that allows users to design and efficiently execute their quantum workloads by using Qiskit primitives. A primitive is basically a small building block that you can use to design larger circuits or jobs. The two primitives that are most important to us are going to be the Sampler and the Estimator, which we'll discuss in more depth shortly. If you haven't downloaded and installed the Qiskit SDK yet,

there's no time like the present. Qiskit 1.0 was recently released, and it's more performant and stable than ever before. So for those of you who are just getting started, you came in at the perfect time, and for those of you who are already familiar with Qiskit, you'll need to download and reinstall the newest version. We recommend using pip to install Qiskit and Qiskit Runtime and running them through a Jupyter notebook in a web browser of your choice, so you're also going to need to install Jupyter Notebook or JupyterLab in case you

haven't done that already. These tools are all free, and you can get them up and running on your own local machine at no cost. Make sure to install Qiskit with the visualization dependencies (the qiskit[visualization] extra) so that it works with Jupyter and lets you plot things. You will also need to set up a virtual Python environment, and there are instructions on how to do this on the Qiskit install page linked here. Once you've become familiar with Qiskit and you're ready to run jobs on real quantum computers, you'll need to register at quantum.ibm.com and create an IBM Quantum

API token, and there is also a link here for that. For a detailed walkthrough of how to do this, follow the instructions on our documentation page, like I mentioned, or watch the Coding with Qiskit installation video here; both are linked here and in the description. Now we're just about ready to start talking about quantum circuits. Since the point of this course is to teach you, the audience, how to scale up your jobs to run at the 100-plus-qubit scale, I believe the most constructive way forward is to first consider the

overall workflow that we're going to follow, and the easiest way to understand a typical quantum computing workflow is through Qiskit patterns. Qiskit patterns are a conceptual framework that allows users to run quantum workloads by implementing certain steps with modular tooling. This allows quantum computing tasks to be performed by powerful heterogeneous computing infrastructure. These steps can be performed as a service and can incorporate resource management, which allows for seamless composability of new capabilities as they are developed. Here are the main steps, which experienced Qiskit users will likely already recognize. The first step is mapping. This

step formalizes how we take a general problem that we're interested in and figure out how to map it onto a quantum computer in the form of a circuit. Next, we optimize. In this step, we use the Qiskit transpiler to route and lay out the circuit on actual physical quantum hardware. This includes translating the individual quantum gates into sequences of operations that are performed on the hardware, as well as optimizing the layout of those gates. Next, we execute: Qiskit Runtime primitives provide the interface to IBM Quantum hardware that allows transpiled circuits to

run. This step also includes using error suppression and mitigation techniques, which can largely be abstracted away from the user. Lastly, we post-process. In this step, the data from the quantum processor itself is processed, providing the user with useful results on the original problem; basically, this step encompasses any further analysis of the acquired data. Now we're ready to discuss the foundation of quantum programs, which are quantum circuits. Hopefully you're already familiar with quantum circuits, but in case you're not, you might want to first check out Lesson 3, Quantum Circuits, which is a part of

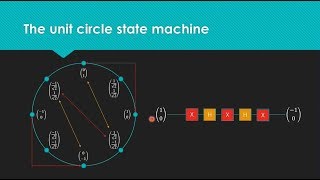

Understanding Quantum Information and Computation, linked below. We're only going to be giving a short refresher here. A quantum circuit is a network of quantum gates and measurements linked by wires that represent the qubits. Quantum circuits can be read like sheet music, from left to right, starting at time zero, which is all the way on the left. Virtual qubits, meaning qubits that haven't yet been assigned to an actual physical qubit on a processor, are listed in increasing order from top to bottom. Once we set up our circuit, we can add gates to it. Single-qubit

gates, like this Hadamard gate here, affect only the qubit whose wire they're placed on. Multi-qubit gates, like this CNOT, affect two or more qubits linked by the gate. Here, in this example, the state of qubit one changes depending on the state of qubit zero. After all of the gates are applied, we can perform measurements, which cause the results to be written to classical registers. One particularly important characteristic of a circuit is its depth. The depth of a quantum circuit is the minimum number of layers of quantum gates, executed in parallel, required

to complete the computation defined by the circuit. To be clear, quantum gates can be executed in parallel, or in other words at the same time, whenever they don't have any qubits in common; but if two or more gates act on the same qubits, we can't perform them in parallel, and we're forced to perform them one after the other. Because quantum gates take time to implement, the depth of a circuit roughly corresponds to the amount of time needed for a quantum computer to execute the circuit, so knowing the depth of a circuit is important for knowing

whether it can be run on a particular device or not. It also tells us whether it has a good chance of producing an accurate result. Next, let's move on to the selection of a quantum processor. IBM has recently released a whole new fleet of processors, all having over 100 qubits, and you can log on to quantum.ibm.com to see which processors you have access to. The processor types are all named after birds, and the different logos represent different types of processors. So, for instance, these ones represent Eagle processors, and this icon

is a Heron. The names of the specific processors are also found at the top. They're all named after cities, but that doesn't necessarily mean that the processor is physically located in that city; those are just names. Qubit count, EPLG, and CLOPS are also listed, and we'll go over each of these. Qubits: this is pretty self-explanatory; it's just the total number of qubits available to use on that single quantum processor, or QPU. EPLG stands for error per layered gate, which measures the average gate process error in a layered chain

of 100 qubits. Basically, you want this to be as small as possible. CLOPS stands for circuit layer operations per second, and it measures how many layers of a quantum volume circuit (a virtual circuit) a QPU can execute per unit of time. The higher the number, the faster we can compute; this is roughly analogous to what FLOPS means in classical computing. These different metrics may be more or less important depending on the specific application area that you're interested in. Next, let's discuss, at a high level, mapping to quantum circuits. The first step is the mapping step,

which essentially answers the question: how do I translate my problem into a quantum circuit that can be run on quantum hardware? There's no doubt about it: mapping is a hard problem, and it's an active area of research. Sometimes just being able to take a computational problem and come up with a sequence of quantum gates that helps solve it is the hardest part. There isn't a foolproof method that guarantees success, but there are recommended guidelines and examples of problems that we already know how to map. The first guideline is to let classical computers do whatever

work they are better at themselves. Tasks that are easy for classical computers probably won't benefit from a quantum computer; quantum computers are for problems that are classically hard. Of course, if this is your very first time using Qiskit or a quantum computer, don't worry about finding a problem that is computationally complex. Break it down into smaller problems that you can learn to address before going straight to a utility-scale project. Next, translate the outcomes of your problem that you want to measure or understand into an expectation value problem or a sampling problem. For most

types of problems, this essentially corresponds to calculating a cost function. A cost function is a problem-specific function that defines the problem's goal as something to be minimized or maximized, and it can be used to see how well a trial state or solution is performing with respect to that goal. This notion can be applied to various applications in chemistry, machine learning, finance, optimization, and so on. Also keep in mind that the hardware you're going to be using has a specific topology: some qubits are connected and some aren't, and ultimately you'll need to map your

problem to a circuit that respects this. IBM Quantum processors use what's called a heavy-hex topology, which essentially means the qubits are connected in a hexagonal lattice, with extra qubits sitting on the edges of each hexagon; we'll get into this more later in the course. For now, the most important thing to keep in mind is that this stage requires practice. You need to have a good understanding of not only your problem but also the quantum hardware's capabilities, and we'll go through a specific example and use case later on to see how these can best be balanced. Now let's discuss how to optimize a circuit for the

hardware that we've selected. The overall goal of the optimization step is to map all of the virtual qubits from our circuit to actual, real qubits somewhere on the topology of the processor. The name of the game is to reduce errors as much as possible, and to do this, we first need to route and lay out our circuit efficiently. The first thing to keep in mind is that, as I've already said, not all qubits are connected; some are actually far away from one another on the chip, so we need to reduce or eliminate long-distance interactions whenever possible. You

can apply a sequence of SWAP gates between neighboring qubits to move qubit information around, but SWAP gates are costly and prone to errors, so there may be better ways of doing this. Next, layout and routing are iterative processes: they assign each virtual qubit in your circuit to a physical qubit on the hardware. You can do this by hand, but there are also tools in Qiskit that can make recommendations for the physical qubit layout based on approximate error rates. Next, we can compose sequences of one-qubit gates acting on the same qubit into single gates,

and we can also sometimes get rid of unnecessary gates or combinations of gates. For example, some combinations of gates can be reduced to simpler combinations, and sometimes combinations of gates even equate to just the identity operator, so we can simply eliminate them. You can do this automatically using the Qiskit transpiler, or you can do it manually on a gate-by-gate basis if you have a good reason to do so and want more control. Once we've improved the circuit layout, routing, and gate counts, either by hand or by using the Qiskit transpiler, we usually

want to visualize our circuit to make sure that the timing of all the gates makes sense. There is an option you can set in the transpiler so that you can visualize the timeline of your circuit and make sure that everything is lined up the way you would expect. Now let's talk about error suppression. Error suppression refers to various techniques that transform a circuit during compilation to minimize errors. It's different from error mitigation, which largely takes place after the circuit has been run, and we'll come to that shortly. The two most common forms of error suppression that

we take advantage of are dynamical decoupling and Pauli twirling. There are going to be some technical details here, but you can always refer back to this later. First, dynamical decoupling is used to effectively cancel out some of the noise affecting idle qubits by applying a series of gates that together amount to the identity operation, like XX sequences, and whose net effect is to approximately cancel out the noise channels affecting the qubit. Pauli twirling is a way of inserting random gates to simplify the average noise; the randomness helps prevent the effects from different errors

from building up as fast and also makes the noise easier to characterize. This method also forms the basis of an error mitigation technique that we're going to be discussing later. Again, there's a lot of information here, so don't worry if it doesn't all sink in immediately. Luckily, a lot of this can be done automatically using the Qiskit transpiler. Transpilation can be tricky, though, and because it is such a crucial step to achieving utility at scale, it takes a lot of trial and error to master and to obtain the best results.

Now I want to take a brief interlude to discuss the Qiskit transpiler and its capabilities a bit more. I'll do this at a very high level, and we'll get into the details and see some examples later on. In general, transpilation is the process of rewriting a given input circuit to match the topology of a specific quantum device and optimizing the circuit for execution and resilience against noise. It also rewrites a given circuit into the basis gates of the specific quantum processor you have selected to use. Qiskit has four built-in transpilation pipelines, and unless you're already familiar

with quantum circuit optimization, I definitely recommend using one of those built-in pipelines. By default, the transpiler includes these six stages: initialization, layout, routing, translation, optimization, and scheduling. Initialization refers to any initial passes that are required before we start embedding the circuit onto the backend. This typically involves unrolling custom instructions and converting the circuit into just one- and two-qubit gates. The layout stage applies a layout, mapping the virtual qubits in the circuit to the physical qubits on a backend. Routing runs after a layout has been applied and injects gates such as SWAP

gates into the original circuit to make it compatible with the backend's connectivity. The fourth stage is translation: in this stage, the gates in the circuit are translated to the target backend's basis set. Next is optimization. This stage runs the main optimization loop repeatedly until a condition is met, such as the circuit depth no longer changing. We have four different optimization levels to choose from. Last is scheduling. This stage is for any hardware-aware scheduling passes; at a high level, scheduling can be thought of as inserting delays into the circuit to account for

any idle time on the qubits between the execution of instructions. So, like I said, there are four optimization levels, ranging from 0 to 3, where higher optimization levels take more time and computational effort but may yield a better circuit. Optimization level 0 is intended for device characterization experiments, and as such it only maps the input circuit to the constraints of the target backend, without performing any optimization. Optimization level 3 spends the most effort to optimize the circuit. However, because many of the optimization techniques in the transpiler are based on heuristics, spending

more computational effort doesn't always improve the quality of the output circuit. If this is of further interest, please check out the transpiler pages in the Qiskit documentation linked below. Now we're ready to discuss the actual execution of a quantum circuit. The Qiskit Runtime primitives provide an interface to IBM Quantum hardware, and they also abstract away error suppression and mitigation from the user. The Sampler primitive returns the quasi-probability distribution, or counts, representing the likelihood of measuring certain bitstrings of zeros and ones. The Estimator primitive returns expectation values of observables corresponding

to physical quantities. In a little more detail, the Sampler runs the circuit multiple times on a quantum device, performing measurements on each run and reconstructing the probability distribution from the recovered bitstrings. The more runs, or shots, it performs, the more accurate the results will be, but this requires more time and quantum resources. Specifically, it estimates the probability of obtaining each possible standard basis state by measuring the state prepared by the circuit. Qiskit's Estimator uses a complex algebraic process to estimate the expectation value on a real quantum device

by breaking down the observable into a combination of other observables that have known eigenbases. Running under the hood of the primitives are techniques called error mitigation. Error mitigation is an umbrella term, and it basically refers to techniques that allow us to reduce circuit errors by modeling the device noise at the time of execution. Typically, this results in quantum pre-processing overhead related to model training and classical post-processing overhead to mitigate errors in the raw results using the generated model, but in exchange for this overhead, we're able

to get much more accurate results. There are multiple techniques that we can implement for error mitigation, and we're going to go over three of them in more detail. Be aware, however, that this is an area of active research, so new techniques will likely be invented and older ones will continue to be improved upon. Raising the resilience level increases the cost, or in other words the time it takes to run your job. At resilience level zero, no error mitigation is applied, but resilience level one introduces a

method called twirled readout error extinction, or T-REx for short. T-REx uses Pauli twirling, which is something that we've already touched upon, to mitigate readout (measurement) errors. It's a very general and effective technique that, intuitively speaking, makes the noise less irregular and easier to deal with. Starting at resilience level two, we introduce zero-noise extrapolation, or ZNE for short. This is a popular technique that we've recently had a lot of success with. The idea behind ZNE might be a bit surprising: we actually add additional noise on top of what's already there, but

what this does is allow us to extrapolate in the reverse direction to predict what the results would look like if there were no noise at all. This can be done in several ways: for instance, we can stretch out the gates to make them longer and thus more prone to error, or we can run extra gates that ultimately amount to an identity operation, so the circuit doesn't functionally change but we purposefully sample more noise. You do have to do this for every circuit and every expectation value that you want to keep track of, though, so

you can see how it can end up being very expensive in terms of the time required. One specific way of amplifying the noise for ZNE is called probabilistic error amplification, or PEA for short. Once we've learned a noise model for a gate, PEA works by sampling errors from that noise model and deliberately injecting them into the circuit. Like I mentioned, lots of new techniques continue to be developed and will hopefully be implemented as they come into play. There's just one final form of error mitigation that I want to discuss, called probabilistic error cancellation. Instead of

being at the third resilience level, PEC is a special capability that you have to turn on manually in Qiskit Runtime, because the computational resources needed for it don't scale very well compared to the other error mitigation techniques that we've discussed. You begin by learning about the noise that's affecting your circuit by running noise learning, or noise characterization, circuits for each unique layer of two-qubit gates in your circuit. These results let you describe the noise in terms of Pauli operators. Once you know these noise terms, you can modify your circuits so that they effectively

have the opposite Paulis built in to cancel these noise channels. In some ways, this process is similar to that of noise-cancelling headphones. However, this way of undoing the noise is very costly, with a run time that grows exponentially in the number of gates, so it may not be the best choice for a very large circuit. We have now reached the final stage: post-processing. In the end, if you've done a good job and employed proper error mitigation techniques, you might see your final answer shift, like in this figure here. An unmitigated distribution

shown in purple is quite far from the true expected value, shown here by this line. However, once we apply our error mitigation, we obtain a mitigated distribution, shown here in blue, which peaks much closer to the true value. Now, this all comes at a cost: here we can see that the variance, or the width of the distribution, has increased, which generally isn't what you want. Luckily, we can account for this by running more shots, which causes the distribution to shrink back to an acceptable level of uncertainty. So that's

about it for this lesson. Next time, we're going to take all of these steps, put them together, and work through an example of actual application interest. There are a few things I recommend doing until then. If you haven't yet, make sure that you download and set up Qiskit 1.0. You can also check out the associated lesson for this chapter and the previous episode on the IBM Quantum Learning platform. And lastly, if you're interested in digging in and learning more about error mitigation techniques, check out

one of the numerous sources that I've linked below. Until then, we'll see you next time. [Music]
