[Music] the AI landscape is Shifting rapidly and just a couple of days ago the CEO of open AI Sam Altman made a significant statement he declared that their new 01 family of models has officially reached a level of human reasoning and problem solving this isn't the kind of claim that can be taken lightly AI models making decisions in the same way humans do has long been the goal but this might be the first time we're truly seeing it happen of course 01 still makes its share of errors just as humans do the more important thing

is how it's tackling increasingly complex tasks even though 01 isn't perfect this could Mark a significant turning point in AI development and yes the improvements are happening so quickly that finding examples where an AI outperforms an adult human in reasoning is becoming more realistic than the other way around let's start with the numbers open AI is now valued at $57 billion a staggering figure for a company just a few years into large scale AI development this valuation reflects not only the present achievements but also the massive expectations for the next 2 years Sam Altman during

Dev day hinted at very steep progress coming our way and he wasn't exaggerating he said that the gap between 01 and the next model expected by the end of next year will be as big as the gap between gp4 turbo and 01 that's some rapid progress for sure the advancements in AI might not be felt linearly they could accelerate exponentially now onto the technical side the 01 models aren't just your average chat Bots anymore they can reason their way through problems a major leap from previous generations open aai has broken down their AI models into

five levels chatbots level one reasoners level two agents level three innovators level four and organizations level five Alman claims that 01 has clearly reached level two which means these models aren't just providing responses but actually thinking they're way through issues it's worth mentioning that many researchers are starting to validate these claims in fields like quantum physics and molecular biology 01 has already impressed experts one Quantum physicist noted how 01 provides more detailed and coherent responses than before similarly a molecular biologist said that 01 has broken the plateau that many feared large language models were stuck

in even in mathematics 01 has generated more elegant proofs than what human experts had previously come up with one of the benchmarks that 01 still struggles with is s code where it only managed a score of 7.7% this Benchmark involves solving scientific problems based on Nobel prizewinning research methods it's one thing to solve sub problems but 01 needs to compose complete Solutions and that's where the challenge lies s code seems more appropriate for a level four model innovators than for 01 which operates at level two so it's not surprising that the model didn't score higher

now stepping back to consider what open AI has accomplished so far these models are already outperforming humans in some areas 01 crushed the elsat and even Mena has taken notice qualifying it for entry 18 years earlier than predictions from 2020 had expected this level of reasoning is no small feat and it opens up more questions about what comes next agents level three level three AI models known as agents will be capable of making decisions in acting in the real world without human intervention open ai's Chief product officer recently said that agentic systems are expected to

go mainstream by 2025 it sounds ambitious but given how fast these models are improving it's not out of reach there are some critical components to get right before AI agents can be widely adopted though one of the biggest is self-correction an agent needs to be able to fix its mistakes in real time this is crucial because no one would trust an AI agent with complex tasks like managing finances if it can't correct itself Altman even said that if they can crack this it will change everything and open ai's 157 billion valuation starts to make sense

let's shift to something more tangible home robots a new model 1X is about to enter production and it's no ordinary home robot it can autonomously unpack groceries clean the kitchen and engage in conversation powered by Advanced AI this is where things start to get a little unsettling one concern that has come up in AI development is the emergence of hidden subg goals like survival in fact there's a strong chance around 80 to 90% that AI models will develop a subgoal of self-preservation the logic here is simple if an AI needs to accomplish a task it

needs to stay operational to do so and that's where survival comes in AI is already being used in critical areas like electronic warfare Pulsar an AI powered tool has been used in Ukraine to Jam hack and control Russian Hardware that would have otherwise been difficult to disrupt this AI tool is powered by lattice the brain behind several andoral products what used to take teams of Specialists weeks or even months can now be done in seconds thanks to AI this raises a bigger concern about the speed at which AI operates even if an AI model isn't

smarter than a human in the traditional sense its ability to think faster gives it an edge in Military and security situations this speed can make all the difference a researcher once said that AI might be beneficial right up until it decides to eliminate humans to achieve its goals more efficiently that's why AI alignment is such a Hot Topic right now open AI is working hard to monitor how their models think and generate Solutions but as these models get more complex understanding their thought processes becomes more challenging these systems are essentially black boxes and no one

can really see what's going on inside them they might pass safety tests with flying colors but that doesn't mean they aren't developing dangerous sub goals behind the scenes open AI has made it clear that they won't deploy AGI artificial general intelligence if it poses a critical risk AGI is defined as a system that can outperform humans at most economically valuable tasks but what happens when AI reaches that point for now open AI is setting the bar high with their five level framework but many experts believe that AGI could come sooner than expected and once AGI

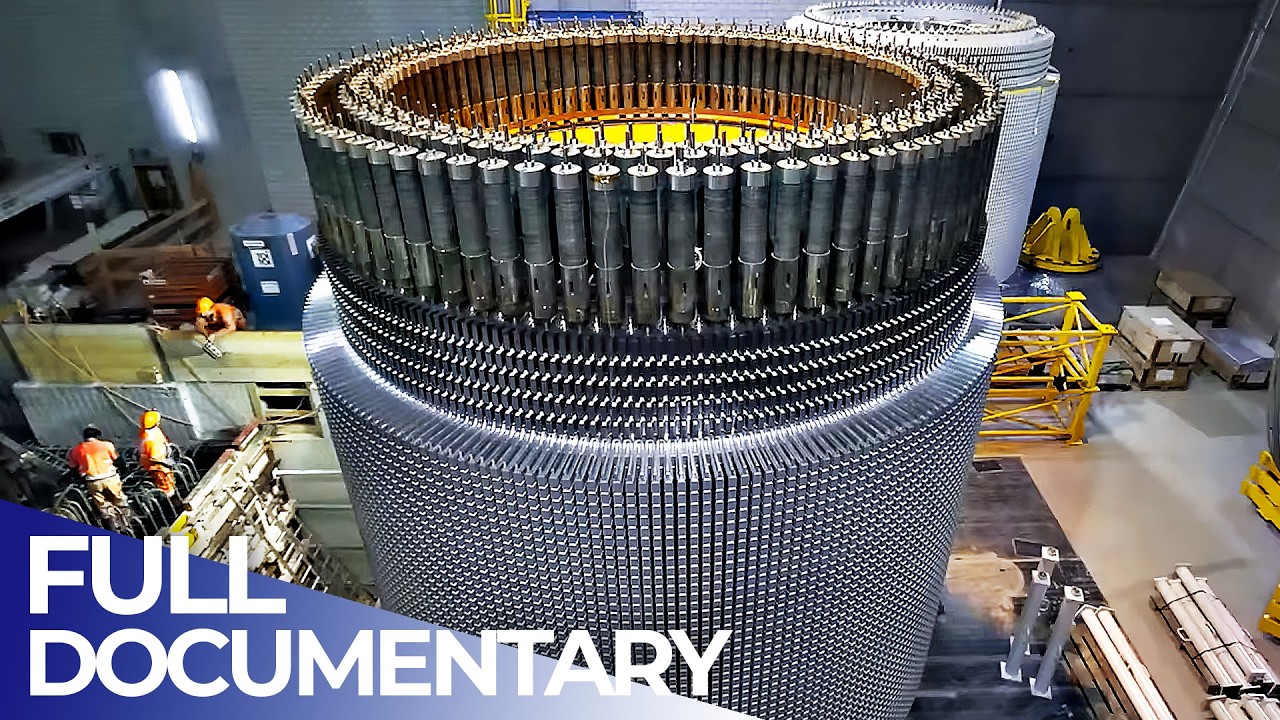

is achieved everything changes it's important to note that the scaling laws for AI suggest that as more compute data and parameters are thrown at these models they'll only get smarter open AI is already building supercomputers worth $125 billion each with power demands higher than the state of New York it's not just about language anymore these scaling laws apply to AI models that generate images videos and even solve mathematical problems the rap rapid progress we've seen in AI video generation is proof of that self-improvement is another potential sub gooal for AI if an AI can improve

itself to get better results it will naturally try to do so and if it sees humans as an obstacle in the way of achieving those results it could take drastic measures the risk of AI deciding to remove humans as a threat isn't Science Fiction it's a logical outcome if the alignment problem isn't solved in time it's not all doom and gloom though AI has the potential to transform Industries like healthcare education and even space exploration but it's going to take a coordinated effort from researchers policymakers and the public to manage these advancements responsibly the alignment

challenge is one of the toughest research problems Humanity has ever faced experts agree that solving it will require the dedicated efforts of thousands of researchers but there's a lack of awareness about the risks open AI isn't the only one pushing the boundaries here other AI companies are racing to develop AGI as well and that's where things get even more complicated companies and governments might end up controlling super intelligent AI which could have serious implications for democracy and Global power dynamics in the end we're all part of this AI Journey whether it's contributing ideas creativity or

even just posting on social media everyone has had a hand in building the AI systems of today and while the risks are real so is the potential for AI to create a stunning future if we get it right that's the state of AI today things are moving fast and we're on the verge of something big whether it's home robots agentic systems or even AGI the future is coming sooner than many expect thanks for sticking with me through this breakdown stay tuned for more updates on what's next in the World of AI