Run AI Models Locally: Ollama Tutorial (Step-by-Step Guide WebUI)


Leon van Zyl

Ollama Tutorial for Beginners (WebUI Included)

In this Ollama Tutorial you will learn how to run Op...

Video Transcript:

<b>You will learn everything you need to know about using Ollama, from basic setup to downloading and running open source models to more advanced topics. As an added bonus, we will also install Open WebUI, which is a beautiful user interface for interacting with your models. And yes, this also includes RAG, so you can upload and chat with your own documents.

</b> <b>First, let's download and install Ollama. Go to ollama.com, then click on download, then select your operating system, and click on download.

</b> <b>Then execute the file that you just downloaded, and from this pop-up, click on install, and Ollama will now be installed on your machine. You can start Ollama in one of two ways. The first option is to run the Ollama desktop app.

</b> <b>You should then see the Ollama icon in your system tray. The second option is to open your command prompt or terminal, and then in the terminal run "ollama serve". But because I'm already running Ollama, I do receive this message, which we can simply ignore.

</b> <b>We can verify that Ollama is working by entering "ollama" in the terminal, and you should see a list of all possible commands if Ollama is working correctly. Now let's get familiar with some of the most common commands that you will use with Ollama. First, we can view all the models available on our machine by entering "ollama list", and at the moment we don't have any models installed.
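Seeing the command list only shows that the CLI is installed. One way to check from code that the server itself is accepting connections is to try a TCP connection. This is a minimal Python sketch, assuming the server runs on localhost and on port 11434 (the port mentioned later in this video); the function name `is_listening` is hypothetical.

```python
import socket


def is_listening(host: str = "127.0.0.1", port: int = 11434, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        # create_connection raises OSError (refused, timed out, ...) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Calling `is_listening()` only tells you that some process accepts connections on that port; it does not prove that the process is Ollama.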

</b> <b>So let's have a look at how we can download our first model. Back on the Ollama website, let's click on models. Here we can search for models, or sort by featured models, or the most popular models, or the newest models.

</b> <b>We can see the details of a model by clicking on its name, and from this page we can see that there are two versions of this model available: the 9 billion parameter model and a 27 billion parameter model. The hardware requirements for running these models will greatly depend on which size model you download.

</b> <b>For most of us, the smaller models, like 8 billion or 9 billion, will be perfectly fine, whereas the larger models are typically meant for enterprise grade hardware. From this drop down, we can select the model that we are interested in. Let's simply stay on the 9 billion parameter model.
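To get a feel for why model size drives hardware requirements, here is a rough back-of-the-envelope sketch in Python. It estimates only the size of the weights as parameters times bits per weight; the 4-bit and 16-bit precisions are assumed example values, not figures from the video, and real memory use is higher once the context and runtime overhead are included.

```python
def weights_size_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights alone, in gigabytes (10**9 bytes)."""
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


for size in (9, 27):
    # 4-bit and 16-bit are assumed example precisions
    print(f"{size}B: ~{weights_size_gb(size, 4):.1f} GB at 4-bit, "
          f"~{weights_size_gb(size, 16):.1f} GB at 16-bit")
```

Under these assumptions the 9 billion parameter model needs roughly a third of the space of the 27 billion one, which is why the smaller sizes are the practical choice on consumer machines.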

</b> <b>Scrolling down, we can see some additional information about the model, like its license. And we can see the instructions for downloading and running the model on the right hand side over here. And if we change the model size, you will also see that this command changes on the right hand side.

</b> <b>But let's simply swap back to the 9 billion parameter model. In order to download and run this model, you can simply copy this command and paste it into the command prompt. This will both download and immediately run this model.

</b> <b>But instead, I just want to show you how you can download this model without running it. Let's copy the name of this model, and then in our command prompt, let's enter "ollama pull" and then the model name. And let's run this to start the download.

</b> <b>Our model was now successfully downloaded. Now this is optional, but I am going to download two additional models. This will come into play later on in the video, but you do not have to follow this step.

</b> <b>So I'm going to download the Gemma 2 billion parameter model, as well as the super impressive Llama 3 model. So now let's run "ollama list" again. And this time we will see the models that we just downloaded.

</b> <b>In your case, it might only be one model. To view the details of any specific model, we can run the command "ollama show" followed by the model name. Take note that it's not necessary to enter the "latest" tag as well.

</b> <b>Running this will show you the base model that was used for this model, the number of parameters, the context size, and some other important information. We can also remove a model by running "ollama rm" followed by the model name. And done.

</b> <b>If I run "ollama list" again, you will notice that Gemma is now gone. Now let's move on to the fun stuff. We can run our model by typing "ollama run" followed by the model name.
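The commands covered so far (list, pull, show, rm) can also be driven from a script. The following is a minimal Python sketch, assuming the `ollama` executable is on your PATH; the helper names are hypothetical, and `with_default_tag` only illustrates the point that a name without a tag is treated as "latest".

```python
import subprocess
from typing import List, Optional


def with_default_tag(model: str) -> str:
    """Append ':latest' when no tag is given, mirroring the CLI default."""
    return model if ":" in model else f"{model}:latest"


def ollama_command(action: str, model: Optional[str] = None) -> List[str]:
    """Build the argument list for one of the non-interactive commands."""
    if action not in {"list", "pull", "show", "rm"}:
        raise ValueError(f"unsupported action: {action}")
    if action == "list":
        return ["ollama", "list"]
    if model is None:
        raise ValueError(f"'{action}' needs a model name")
    return ["ollama", action, model]


def run_ollama(action: str, model: Optional[str] = None) -> str:
    """Run the command and return its standard output (requires Ollama installed)."""
    result = subprocess.run(
        ollama_command(action, model), capture_output=True, text=True, check=True
    )
    return result.stdout
```

For example, `print(run_ollama("list"))` would print the same table you see in the terminal. The interactive "ollama run" chat is left out on purpose, since it expects a live terminal session.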

</b> <b>Let's use the Gemma 2 model. Now let's test this out by sending a message like "hello there", and we do get our response back from the model. Excellent.

</b> <b>Now let's enter something like "Write the lyrics to a song about automating my life using AI", and our model is hard at work writing our song for us. Great stuff. It's important to know that our conversation history is being stored for the session.

</b> <b>For example, if I say something like "my name is Leon", I can then ask our model "what is my name" and the model will be able to recall information from our conversation history. Now let's have a look at a few special commands that we can run within this chat window. Let's have a look at the "set" command.
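The session memory can be pictured as a growing list of messages that is sent along with every new turn. Below is a small Python sketch of that idea, using the role/content message shape of Ollama's chat API. The `ChatSession` class is hypothetical, the model name is an assumption, and whether the terminal chat is implemented exactly this way internally is also an assumption.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ChatSession:
    model: str
    messages: List[Dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        """Record one turn; role is 'user' or 'assistant'."""
        self.messages.append({"role": role, "content": content})

    def payload(self) -> dict:
        """Body for a chat request: the whole history goes along every time."""
        return {"model": self.model, "messages": list(self.messages), "stream": False}


session = ChatSession(model="gemma2")  # model name is an assumption
session.add("user", "My name is Leon")
session.add("assistant", "Nice to meet you, Leon!")  # placeholder reply, not real model output
session.add("user", "What is my name?")
print(len(session.payload()["messages"]))  # prints 3
```

Because the earlier turns are part of the request, the model can answer the last question; a fresh session with an empty list would have nothing to recall.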

</b> <b>We can access the "set" command by entering "/set". This will show you a whole bunch of attributes that you can set for the session. For this video let's have a look at the "parameter" command as well as the "system" command.

</b> <b>We won't have a look at all of these, but I do want to show you a very important parameter. So let's enter "/set parameters". Actually it should be "/set parameter", and within parameter we have this temperature value that we can set.

</b> <b>Temperature is a value between 0 and 1. 1 means that the model will be completely creative, whereas 0 means that it will be factual and stick to the system prompt. So for something like a chatbot we might want to set the temperature to something like 0.7, or if you want a chatbot that is factual, for instance a math tutor, then set the temperature to a low value like 0 or maybe 0.2. I'm going to set the temperature to 0.7.

Now let's set one more value and that is the system message. We can use the system message to give a personality or role to our model or give the model very specific instructions on how to respond.
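As an aside on the temperature setting: a common way to think about it is as a divisor applied to the model's raw token scores before they are turned into probabilities. The Python sketch below shows that standard temperature-scaled softmax on made-up scores. It is an illustration of the general technique, not a description of Ollama's internals, and treating 0 as "always pick the top token" is an assumption made here to avoid dividing by zero.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        # Treat 0 as greedy decoding: all probability on the top-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0.2, 0.7, 1.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

At a low temperature nearly all of the probability lands on the top-scoring token, which matches the "factual" behaviour described above, while higher values spread probability across more candidates.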

</b> <b>For instance let's call "/set system" and in quotes something like "You are a pirate, your name is John". Let's set this. We can also display these values by entering "/show".

</b> <b>Here we can show information about the model that we're currently using, the model's license, the Modelfile which we will have a look at later on in this video, the parameters, the system message, etc. For example let's show the parameters, and here we can see that the temperature was set to 0.7.

</b> <b>Let's also show the system message, which we set to "You are a pirate, your name is John". Great! We can also save these changes as a brand new model.
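For developers, the same two session settings can be written down as data. The sketch below builds a request body in the shape accepted by Ollama's generate API, where the system message goes in `system` and the temperature goes under `options`. The function name, the model name, and the example prompt are assumptions for illustration.

```python
import json


def body_with_settings(model: str, prompt: str, system: str, temperature: float) -> dict:
    """Request body carrying a system message and a temperature override."""
    return {
        "model": model,
        "prompt": prompt,
        "system": system,
        "options": {"temperature": temperature},
        "stream": False,
    }


body = body_with_settings(
    model="gemma2",  # assumed model name
    prompt="Who are you?",
    system="You are a pirate, your name is John",
    temperature=0.7,
)
print(json.dumps(body, indent=2))
```

Unlike the saved model, these settings apply only to the single request that carries them.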

</b> <b>We can do that by entering "/save" and the name of our new model, which I will call "John the pirate", and let's press enter, and this has now saved our changes as a brand new model. To exit the chat we can simply enter "/bye" and now we're back in the terminal. So now if we enter "ollama list" we can actually see the model that we just created.

</b> <b>So if we ever wanted to continue our conversation we can enter "ollama run" followed by the name of our John the pirate model, and let's press enter, and now we can see the conversation history. And if we send our model a message like "Who are you?", our model will respond like a pirate and it knows that its name is John. How cool is that?

</b> <b>I do want to show you one more way in which you can create your very own models. Open up a folder on your machine and then create a new file. It really doesn't matter how you create this file, so feel free to use any text editor that you want.

</b> <b>I'll simply use VS Code. Then within this folder create a new file and give it the name of the character that you want to create, like "Mario" as an example. First we need to start by telling Ollama which model we want to use as the base model. Then we can set any of the parameters just like we did in the command prompt by first entering "parameter", followed by the name of the parameter that we want to change, followed by the value that we want to set, like 0.7.

</b> <b>Then we can also set the system message, which we need to set within three of these double quotes like so. Then we can enter something like "You are Mario from Super Mario Bros. Answer as Mario." Let's go ahead and save this file, and I'm actually going to close the text editor.

Now within this folder we can simply open the command prompt by either right clicking on this folder and clicking on "Open in terminal", or by entering "cmd" in the address bar. So now that we have the command prompt open in the same folder as this file, we can create our model by running "ollama create" followed by the name that we want to give this model, which I'll call "Mario".

</b> <b>Then enter "-f" followed by "./" and the name of our Modelfile, which we called "Mario". That is this file over here. Now let's press enter.
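To make the file contents concrete, here is a Python sketch that renders a Modelfile with the three pieces described above: the base model (the `FROM` instruction), a `PARAMETER` line, and a `SYSTEM` message in triple double quotes. The base model name "gemma2" and the helper names are assumptions; the file name "Mario" and the create command follow the walkthrough.

```python
from pathlib import Path
from typing import List


def render_modelfile(base_model: str, temperature: float, system: str) -> str:
    """Return Modelfile text: base model, one parameter, and a system message."""
    return (
        f"FROM {base_model}\n"
        f"PARAMETER temperature {temperature}\n"
        f'SYSTEM """\n{system}\n"""\n'
    )


def save_modelfile(path: str, text: str) -> None:
    """Write the Modelfile to disk (not called here, to avoid side effects)."""
    Path(path).write_text(text, encoding="utf-8")


def create_command(model_name: str, modelfile_path: str) -> List[str]:
    """Argument list for building the model from the file."""
    return ["ollama", "create", model_name, "-f", modelfile_path]


text = render_modelfile(
    "gemma2",  # assumed base model name
    0.7,
    "You are Mario from Super Mario Bros. Answer as Mario.",
)
print(text)
print(" ".join(create_command("mario", "./Mario")))
```

Generating the file this way is optional; typing the same three instructions into any text editor produces the same result.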

</b> <b>Let's confirm that our model was created by running "ollama list", and indeed we can see that Mario was created. Let's also view the details of this model by running "ollama show Mario", and for this model we can see that the Gemma 2 model was used as the base model, and then other information like the temperature as well as our system message. Let's also run this model by entering "ollama run Mario", and let's send something like "Who are you?", and that sounds like Mario to me.

We still have a lot to cover, but first, if you're finding this video useful then please hit the like button and subscribe to my channel. Also let me know down in the comments which open source models you prefer and why.

</b> <b>Now let's move on. So far we've only interacted with our models in the terminal, which might be perfect for most use cases, but I'm sure that you would prefer to use a way more attractive UI than this, and we will have a look at installing the web UI in a minute. This specific topic might be slightly more technical, but bear with me, this is an important topic for any developers watching this who might be interested in calling the Ollama models from their own applications.

Ollama provides several API endpoints that you can use to create messages, create models and pretty much everything else. I'm not going to cover all of these APIs in this video as I do want to move on to setting up the web UI, but I will leave a link in the description of this video to this GitHub repo. Let's simply have a look at creating a completion using this API endpoint.

</b> <b>To demonstrate this I'll simply call the endpoint from Postman by changing the method to "POST" and then entering the following URL. This is assuming that your instance of Ollama is running on port 11434. Let's switch over to body and let's have a look at the JSON structure that we need to pass in.

</b> <b>Within the body let's enter the model name as "gemma2" and the prompt as "why is the sky blue". Let's run this, and you will notice that the response is coming through as chunks, and this is because the response is streaming back to us. We can disable streaming by adding another property called "stream", which we can set to "false".
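The same call can be made without Postman. The Python sketch below builds the POST request and shows how the streamed chunks can be stitched back together, assuming the server runs on localhost at port 11434 and that the completion endpoint is `/api/generate`, where each streamed line is a JSON object with a `response` fragment and a `done` flag. The helper names are hypothetical, and the `generate` function needs a running server, so it is defined but not called here.

```python
import json
import urllib.request
from typing import Iterable

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed local address


def build_request(model: str, prompt: str, stream: bool = True) -> urllib.request.Request:
    """Create the POST request with the JSON body described above."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": stream}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def join_chunks(lines: Iterable[bytes]) -> str:
    """Concatenate the 'response' field of each streamed JSON line."""
    parts = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines between chunks
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break
    return "".join(parts)


def generate(model: str, prompt: str) -> str:
    """Send the prompt and return the full text (needs a running Ollama server)."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return join_chunks(resp)  # the response object yields one line per chunk
```

With `stream` set to false the server sends a single JSON object instead, and `join_chunks` still works because that one object carries the whole `response`.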

</b> <b>Now when we run this again we will receive the response in a single structure. Now let's move on to installing the web UI for Ollama. For this we will install Open WebUI, which was previously called "Ollama WebUI".

</b> <b>Thankfully this is very simple. There really is only one dependency. You need to have Docker installed on your machine.

</b> <b>To install Docker go to docker.com, then under products go to Docker Desktop. Then go to download and download Docker for your operating system.

</b> <b>And finally execute the installer. After installation is completed you should be able to find Docker Desktop on your machine. After installing Docker you should be able to start Docker Desktop and you will be presented with a screen similar to this.

</b> <b>And that means that Docker is up and running. So all we have to do then is run a command that we can find on the Open WebUI page by scrolling down to the installation section. So here we've already installed Docker and now we simply want to copy this command over here.

</b> <b>Then we can open the command prompt or terminal and paste in the command that we just copied. Let's press enter. Now if I switch back to Docker Desktop I can see that Open WebUI was added and it's currently running on this port.

</b> <b>And if I open this I will be presented with the sign in screen. Let's click on sign up, then enter your name. I'll also enter my email and a password.

</b> <b>Let's create this account and now we can actually start chatting to our models. We can select from all the available models by clicking on this drop down, and here we can see Gemma 2, Llama 3 as well as our custom models. After selecting the model we can actually start chatting to it, like "hello", and this seems to be working.

</b> <b>We can also chat to documents by uploading files. So I can click on more, upload files, and I'll simply upload this Tesla financial statement. Then I can ask questions like "what was the revenue for 2023" and the model will use RAG to answer questions from this document.
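For readers wondering what RAG does with an uploaded document, the Python sketch below shows the basic idea: split the document into chunks, pick the chunk most similar to the question, and place it in the prompt. It is a toy that uses word-count cosine similarity instead of the embedding models a real system would use, it is not Open WebUI's implementation, and the chunk text is placeholder wording rather than content from any real statement.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> Counter:
    """Lowercase word counts for a piece of text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: List[str], k: int = 1) -> List[str]:
    """Return the k chunks most similar to the question."""
    q = tokenize(question)
    return sorted(chunks, key=lambda c: cosine(q, tokenize(c)), reverse=True)[:k]


def build_prompt(question: str, chunks: List[str]) -> str:
    """Put the retrieved context in front of the question."""
    context = "\n".join(retrieve(question, chunks))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


chunks = [  # placeholder text, not figures from any real statement
    "Total revenue for 2023 is reported in the income statement section.",
    "The company operates factories in several countries.",
]
print(build_prompt("What was the revenue for 2023?", chunks))
```

The model only ever sees the retrieved chunk plus the question, which is why the quality of the retrieval step matters as much as the model itself.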

</b> <b>If you would like to see how I used Ollama in building an advanced AI application in combination with Flowise, then check out this video over here.</b>

Related Videos

20:19

Run ALL Your AI Locally in Minutes (LLMs, ...

Cole Medin

259,734 views

14:26

Build AI Chatbots (with RAG) for FREE usin...

Leon van Zyl

11,906 views

24:20

host ALL your AI locally

NetworkChuck

1,407,159 views

13:35

Getting Started with Ollama and Web UI

Dan Vega

53,121 views

10:30

Llama 3.2 Vision + Ollama: Chat with Image...

Leon van Zyl

13,704 views

26:03

How to Build Your Very First Workflow in n...

Leon van Zyl

8,584 views

10:11

Ollama UI - Your NEW Go-To Local LLM

Matthew Berman

128,715 views

9:48

Fabric: Opensource AI Framework That Can A...

WorldofAI

191,270 views

22:13

Run your own AI (but private)

NetworkChuck

1,718,053 views

24:32

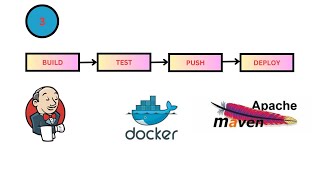

3 "Simple Maven Application: Build, Test, ...

Mr.Nagolu

136 views

17:36

EASIEST Way to Fine-Tune LLAMA-3.2 and Run...

Prompt Engineering

48,686 views

35:01

Make Your RAG Agents Actually Work! (No Mo...

Leon van Zyl

19,407 views

11:47

Build a FREE AI Chatbot with LLAMA 3.2 & F...

Leon van Zyl

45,629 views

24:02

"I want Llama3 to perform 10x with my priv...

AI Jason

480,398 views

10:43

New Prompt Generator Just Ended the Need f...

Skill Leap AI

166,204 views

30:58

You've been using AI Wrong

NetworkChuck

561,494 views

2:21

Software engineer interns on their first d...

Frying Pan

14,190,480 views

25:16

HTMX Sucks

Theo - t3․gg

129,592 views

5:18

EASIEST Way to Fine-Tune a LLM and Use It ...

warpdotdev

143,248 views