Before You Use AI in Research, Watch This! New Rules Explained

31.41k views · 1541 words

Andy Stapleton

In this video, I explore the critical topic of using AI for research and what researchers need to kn...

Video Transcript:

Don't use AI tools for research until you've watched this video. Look at this: all of these people have rules for using generative AI in their journals. This is Elsevier, look at all this boring stuff. This is Wiley, look at all of this stuff. This is another one, PLOS ONE, here's all the stuff they want you to know about using AI. This is all the stuff that Sage wants you to know. Nature group of people, that's actually not too long, that one. But Science, look, it's all there. This one is mega long. All this stuff, and you need to know how it relates to using AI in research. In this video we're going to go through all of the rules, and the first one is the most important.

Do you remember a time when people were like, "Oh, you shouldn't use AI, it's cheating"? Well, they realize now that they like using AI, so now they're like, "It's okay, just here are the rules." So the first thing you should know is that they want you to disclose whether or not you're using AI. The way you can do that is by putting it in the acknowledgement section, or you can put it in a disclosure statement, but essentially each journal has its own way, and this is what people are saying about it. In Elsevier journals it says authors should disclose in their manuscript the use of AI and AI-assisted technologies, and a statement will appear in the published work. Wiley Author Services says if an author has used a GenAI tool to develop any portion of a manuscript, it must be described transparently and in detail. The detail is very important: it's the type of tool you're using and how you've used it, as well as the large language model that you've used, for example GPT-4 or Claude from Anthropic. Those sorts of things are very important. And it says "in the Methods section or in a disclosure within the Acknowledgements section, as applicable". So some places want you to put it into the methods section of that paper if you've used it for any sort of process to do with your research. But you can't use it for all of your research.

The next one is just as important. One of the biggest rules is AI should not be used for original research. Now what does that mean? They only want you to use AI tools for language stuff, essentially. They don't want you to alter images, which we all know is not right, not ethical. You can't make up results, and you shouldn't be using AI to change the words or the outcome of your conclusions, because that is clearly not your own work. So here Elsevier also says, "We do not permit the use of GenAI or AI-assisted tools to create or alter images in submitted manuscripts." We've all been there. We've got these little results and we're like, "Oh, if only that little smudge wasn't there," or "if only this was a little bit clearer." Don't use it for that. If you're submitting to a journal, only use it for language-based things. We all know that you shouldn't fabricate results, so don't use AI to fabricate results. It's one of the easiest ones to comply with, I think.

I often get questions like, "Well, I use this, which probably is AI, like Grammarly or some other language tool." But there are different rules for different journals. This is what Elsevier says: these technologies should only be used to improve readability and the language of the work. Now that's really important, because you can use it to make it more academic, you can use it to make sure that your conclusions are clear, that it's structured in the right way. But what you can't do is say, "I've got this conclusion, is there anything else I can say about it?" If you're adding to the knowledge: no, no, no. Naughty, naughty, spank, naughty. But you can use it to change the language. It's a fine line sometimes, but those are the rules. And then it says down here that tools that are used to improve spelling, grammar, and general editing are not included in the scope of these guidelines. So if you're using a tool like a spell checker or Grammarly, you don't need to worry about putting it in there as an AI-assisted tool. It's only if it really helps you formulate the language that you're going to use.

Now listen up, you. This is all about making sure you are responsible for the language and the text that you are presenting as your own work. So here PLOS ONE says all of the statements in the article reporting to all of those things represent the authors' own ideas, and so the use of AI tools and technologies to fabricate or otherwise misrepresent primary research data is unacceptable. That is your warning. You need to be sure that you are comfortable and that it truly represents your thoughts on the results or the conclusions you are presenting. Wiley goes a little bit further and says the author is fully responsible for the accuracy of any information provided by the tool and for correctly referencing any supporting work on which that information depends. AI is this great plausibility machine. Sometimes it gives you stuff that sounds so, so plausible but in fact is made up. So you need to make sure that you're referencing any bits of information, just like you would if you were using your meat brain and typing it out in a caffeine-fuelled fury.

I love it that all of the different journals have said this: ChatGPT, or any large language model, does not constitute authorship. In Nature they say large language models such as ChatGPT do not currently satisfy their authorship criteria, and that's because authors need to be able to be questioned and made accountable for the stuff they're putting into the research paper. You should be able to go to an author and say, "Why did you do this? Justify to me why this is a thing." ChatGPT can't do that, because it doesn't have the intimate knowledge of your results or of the background that you're trained in. All of those things mean that it doesn't qualify as an author, merely as a tool. And Elsevier says authors should not list AI and AI-assisted technologies as an author or co-author, nor cite AI as an author. So there we are, just don't put it at the top. You can't have Stapleton et al., you can't have ChatGPT et al. No, no, no. Well, I think we all know that, right? Don't we? Yeah, good.

And the last thing I think we need to know about using AI in research is the most important one in making sure that research stays credible. AI tools should not be used to evaluate someone else's ideas in peer review. First of all, uploading their information into ChatGPT or a large language model could break confidentiality, and that is a big no-no. We want to make sure that the peer review process is as robust as possible. If AI starts to get involved, it means that people and experts aren't using their expertise to evaluate a peer review paper, because the peer part is very important in peer review. The peer part is what makes it, and if you're outsourcing that, it's no longer peer review, it's AI review, which probably isn't too far away, but it's not there yet. So don't use it to peer review papers.

This is what Science has to say about it: reviewers may not use AI technology in generating or writing their reviews, because this could breach the confidentiality of the manuscript. And Wiley down here says GenAI tools should only be used on a limited basis in connection with peer review. So, much stricter guidelines. It can only be used by an editor or peer reviewer to improve the quality of the written feedback, and this must be transparently declared upon submission of the peer review report to the manuscript's handling editor. So you can only use it to make sure that your ideas are communicated clearly in written form. You can't get your ideas from AI. I think that makes sense.

All right, now the last thing is, look at this, this is a PowerPoint presentation, so I always put a little clap at the end. Well done, Andy, you did so great. I hope you're clapping at home. Clap, clap, clap, clap, clap. Makes me feel good. If you like this video, go check out this one where I talk about using ChatGPT to make research simple. You can also take the prompts and use them across other large language models, but I think this one is a good place to start. Go check it out.

Related Videos

10:40

NotebookLM Is a Game-Changer for Research ...

Andy Stapleton

13,301 views

8:46

How Academia Rewires Your Brain: The PhD E...

Andy Stapleton

143,304 views

![How To Write An Exceptional Literature Review With AI [NEXT LEVEL Tactics]](https://img.youtube.com/vi/wz8lg_3j3Ok/mqdefault.jpg)

14:22

How To Write An Exceptional Literature Rev...

Andy Stapleton

336,666 views

8:52

AI Just Reached Human-Level Reasoning – Sh...

AI Revolution

51,786 views

6:08

Pursuing a PhD as an older student - is it...

The PhD Place

27,251 views

10:26

How I Find Research Gaps In Under 5 Minute...

Prof. David Stuckler

54,299 views

21:37

Elena Lasconi. Momentan, la “vorbe, nu fap...

Starea Natiei Oficial

150,727 views

11:52

4 AI Tools For Research You Missed (But Ca...

Andy Stapleton

10,245 views

24:12

Sam Altmans New AI Device Unleashes AI Age...

TheAIGRID

71,597 views

19:58

The fastest way to do your literature revi...

Academic English Now

18,125 views

9:18

I Hated Academic Writing Until I Discovere...

Andy Stapleton

22,059 views

11:55

What 9 Out of 10 PhD Students Get Wrong Ab...

Andy Stapleton

11,080 views

9:21

‘Godfather of AI’ on AI “exceeding human i...

BBC Newsnight

321,848 views

9:59

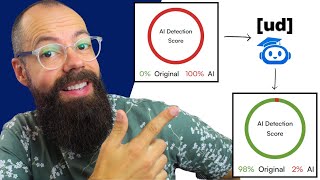

Unbelievable! The Easiest Way to Bypass AI...

Andy Stapleton

154,163 views

22:02

Cine mai e și Valeriu Nicolae ăsta? Docume...

Starea Natiei Oficial

114,017 views

14:12

AI Just Made Literature Review a Piece of ...

Andy Stapleton

35,481 views

8:37

Carlsen Just Faced The Greatest Opening In...

Epic Chess

5,210 views

10:05

4 research paper hacks to get published in...

Academic English Now

4,168 views

24:02

The Race to Harness Quantum Computing's Mi...

Bloomberg Originals

2,282,552 views

13:02

This AI Tool Does Literature Reviews in SE...

Andy Stapleton

34,593 views