Top 50 AWS Services Explained in 10 Minutes

1.57M views

Fireship

Amazon Web Services (AWS) is the world's largest and most complex cloud with over 200 unique service...

Video Transcript:

Amazon Web Services launched in 2006 with a total of three products: storage buckets, compute instances, and a messaging queue. Today it offers a mind-numbing 200-and-something services, and what's most confusing is that many of them appear to do almost the exact same thing. It's kind of like shopping at a big grocery store, where you have different aisles of product categories filled with things to buy that meet the needs of virtually every developer on the planet. In today's video, we'll walk down these aisles to gain an understanding of over 50 different AWS products. So first,

let's start with a few that are above my pay grade and that you may not know exist. If you're building robots, you can use RoboMaker to simulate and test your robots at scale. Then, once your robots are in people's homes, you can use IoT Core to collect data from them, update their software, and manage them remotely. If you happen to have a satellite orbiting Earth, you can tap into Amazon's global network of antennas to connect data through its Ground Station service. And if you want to start experimenting and researching the future of computing, you can use Braket

to interact with a quantum computer. But most developers go to the cloud to solve more practical problems, and for that, let's head to the compute aisle. One of the original AWS products was Elastic Compute Cloud (EC2). It's one of the most fundamental building blocks on the platform and allows you to create a virtual computer in the cloud. Choose your operating system, memory, and computing power; then you can rent that space in the cloud like you're renting an apartment that you pay for by the second. A common use case is to use an instance as a server

for a web application, but one problem is that as your app grows, you'll likely need to distribute traffic across multiple instances. In 2009, Amazon introduced Elastic Load Balancing, which allowed developers to distribute traffic to multiple instances automatically. In addition, the CloudWatch service can collect logs and metrics from each individual instance. The data collected by CloudWatch can then be passed off to Auto Scaling, in which you define policies that create new instances as they become needed, based on the traffic and utilization of your current infrastructure. These tools were revolutionary at the time, but developers still wanted an

easier way to get things done, and that's where Elastic Beanstalk comes in. Most developers in 2011 just wanted to deploy a Ruby on Rails app. Elastic Beanstalk made that much easier by providing an additional layer of abstraction on top of EC2 and other auto scaling features: choose a template, deploy your code, and let all the auto scaling stuff happen automatically. This is often called a platform as a service, but in some cases it's still too complicated. If you don't care about the underlying infrastructure whatsoever and just want to deploy a WordPress site, Lightsail is

an alternative option where you can point and click at what you want to deploy and worry even less about the underlying configuration. In all these cases, you are deploying a static server that is always running in the cloud. But many computing jobs are ephemeral, which means they don't rely on any persistent state on the server, so why bother deploying a server for code like that? In 2014, Lambda came out, which offers functions as a service, or serverless computing. With Lambda, you simply upload your code, then choose an event that decides when that code should run.
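As a rough illustration of the "upload your code, then choose an event" idea, here is a minimal sketch of a Python function in the shape Lambda expects: a handler that receives an `event` and a `context`. The event layout (a `name` key) and the response body are assumptions made for this example, not something described in the video; real events depend on whichever trigger you choose.

```python
import json


def lambda_handler(event, context):
    """Entry point the Lambda runtime would call once per triggering event.

    `event` is assumed here to be a plain dict with an optional "name" key
    (a hypothetical shape chosen for illustration).
    """
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test: call the handler directly with a fake event.
    print(lambda_handler({"name": "Ada"}, None))
```

Because the handler is an ordinary function, it can be exercised locally like this before it is ever uploaded.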

Traffic scaling and networking are all things that happen entirely in the background, and unlike a dedicated server, you only pay for the exact number of requests and computing time that you use. Now, if you don't like writing your own code, you can use the Serverless Application Repository to find pre-built functions that you can deploy with the click of a button. But what if you're a huge enterprise with a bunch of its own servers? Outposts is a way to run AWS APIs on your own infrastructure without needing to throw your old servers in the garbage. In

other cases, you may want to interact with AWS from remote or extreme environments, like if you're a scientist in the Arctic. Snow devices are like little mini data centers that can work without internet and in hostile environments. So that gives us some fundamental ways to compute things, but many apps today are standardized with Docker containers, allowing them to run on multiple different clouds or computing environments with very little effort. To run a container, you first need to create a Docker image and store it somewhere. Elastic Container Registry (ECR) allows you to upload an image, allowing other tools

like Elastic Container Service (ECS) to pull it back down and run it. ECS is an API for starting, stopping, and allocating virtual machines to your containers, and allows you to connect them to other products like load balancers. Some companies may want more control over how their app scales, in which case EKS is a tool for running Kubernetes. But in other cases, you may want your containers to behave in a more automated way. Fargate is a tool that will make your containers behave like serverless functions, removing the need to allocate EC2 instances for your containers. But if

you're building an application and already have it containerized, the easiest way to deploy it to AWS is App Runner. This is a new product in 2021 where you simply point it to a container image while it handles all the orchestration and scaling behind the scenes. But running an application is only half the battle; we also need to store data in the cloud. Simple Storage Service, or S3, was the very first product offered by AWS. It can store any type of file or object, like an image or video, and is based on the same infrastructure as

Amazon's e-commerce site. It's great for general-purpose file storage, but if you don't access your files very often, you can archive them in Glacier, which has a higher latency but a much lower cost. On the other end of the spectrum, you may need storage that is extremely fast and can handle a lot of throughput. Elastic Block Store (EBS) is ideal for applications that have intensive data processing requirements, but requires more manual configuration by the developer. Now, if you want something that's highly performant and also fully managed, Elastic File System (EFS) provides all the bells and whistles, but

at a much higher cost. In addition to raw files, developers also need to store structured data for their end users, and that brings us to the database aisle, which has a lot of different products to choose from. The first ever database on AWS was SimpleDB, a general-purpose NoSQL database, but it tends to be a little too simple for most people. Everybody knows you never go full... It was followed up a few years later by DynamoDB, which is a document database that's very easy to scale horizontally. It's inexpensive and provides fast read performance, but

it isn't very good at modeling relational data. If you're familiar with MongoDB, another document database option is DocumentDB. It's a controversial option that's technically not MongoDB but has a one-to-one mapping of the MongoDB API to get around restrictive open source licensing. Speaking of which, Amazon also did a similar thing with Elasticsearch, which itself is a great option if you want to build something like a full-text search engine. But the majority of developers out there will opt for a traditional relational SQL database. Amazon Relational Database Service (RDS) supports a variety of different SQL flavors and

can fully manage things like backups, patching, and scaling. But Amazon also offers its own proprietary flavor of SQL called Aurora. It's compatible with Postgres or MySQL and can be operated with better performance at a lower cost. In addition, Aurora offers a new serverless option that makes it even easier to scale, and you only pay for the actual time that the database is in use. Relational databases are a great general-purpose option, but they're not the only option. Neptune is a graph database that can achieve better performance on highly connected datasets like a social graph

or recommendation engine. If your current database is too slow, you may want to bring in ElastiCache, which is a fully managed version of Redis, an in-memory database that delivers data to your end users with extremely low latency. If you work with time series data, like the stock market for example, you might benefit from Timestream, a time series database with built-in functions for time-based queries and additional features for analytics. Yet another option is the Quantum Ledger Database (QLDB), which allows you to build an immutable set of cryptographically signed transactions, very similar to decentralized blockchain technology.
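To make the "immutable ledger" idea a bit more concrete, here is a toy sketch of a hash-chained log, where every entry stores the hash of the one before it so that later tampering can be detected. This is only a stand-in for the general technique: it uses SHA-256 hash chaining rather than signatures, it does not use or imitate the QLDB API, and the names `append`, `verify`, and `GENESIS` are hypothetical helpers invented for this example.

```python
import hashlib
import json

# Placeholder "previous hash" for the very first entry (an assumption of this sketch).
GENESIS = "0" * 64


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with a canonical JSON payload."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append(ledger: list, payload: dict) -> None:
    """Add an entry that is chained to the current tail of the ledger."""
    prev = ledger[-1]["hash"] if ledger else GENESIS
    ledger.append({"payload": payload, "prev": prev, "hash": entry_hash(prev, payload)})


def verify(ledger: list) -> bool:
    """Recompute every hash in order; any edited entry breaks the chain."""
    prev = GENESIS
    for entry in ledger:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["payload"]):
            return False
        prev = entry["hash"]
    return True


if __name__ == "__main__":
    ledger = []
    append(ledger, {"account": "alice", "amount": 10})
    append(ledger, {"account": "bob", "amount": 5})
    print(verify(ledger))  # chain is intact

    ledger[0]["payload"]["amount"] = 999  # simulate tampering with history
    print(verify(ledger))  # the stored hash no longer matches
```

A managed ledger service adds durability, querying, and proofs on top of this basic idea; the sketch only shows why rewriting an old entry is detectable.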

Now let's shift gears and talk about analytics. To analyze data, you first need a place to store it, and a popular option for doing that is Redshift, which is a data warehouse that tries to get you to shift away from Oracle. Warehouses are often used by big enterprises to dump multiple data sources from the business, where they can be analyzed together. When all your data is in one place, it's easier to generate meaningful analytics and run machine learning on it. Data in a warehouse is structured so it can be queried, but if you need a

place to put a large amount of unstructured data, you can use AWS Lake Formation, which is a tool for creating data lakes, or repositories that store a large amount of unstructured data, which can be used in addition to data warehouses to query a larger variety of data sources. If you want to analyze real-time data, you can use Kinesis to capture real-time streams from your infrastructure, then visualize them in your favorite business intelligence tool. Or you can use a stream processing framework like Apache Spark that runs on Elastic MapReduce (EMR), which itself is a service that allows

you to operate on massive datasets efficiently with a parallel distributed algorithm. Now, if you don't want to use Kinesis for streaming data, a popular alternative is Apache Kafka. It's open source, and Amazon MSK is a fully managed service to get you started. But for the average developer, all this data processing may be a little too complicated. Glue is a serverless product that makes it much easier to extract, transform, and load your data. It can automatically connect to other data sources on AWS like Aurora, Redshift, and S3, and has a tool called Glue Studio so you

can create jobs without having to write any actual source code. But one of the biggest advantages of collecting massive amounts of data is that you can use it to help predict the future, and AWS has a bunch of tools in the machine learning aisle to make that process easier. But first, if you don't have any high-quality data of your own, you can use Data Exchange to purchase and subscribe to data from third-party sources. Once you have some data in the cloud, you can use SageMaker to connect to it and start building machine learning

models with TensorFlow or PyTorch. It operates on multiple levels to make machine learning easier and provides a managed Jupyter notebook that can connect to a GPU instance to train a machine learning model, then deploy it somewhere useful. That's cool, but building your own ML models from scratch is still extremely difficult. If you need to do image analysis, you may as well just use the Rekognition API. It can classify all kinds of objects in images and is likely way better than anything that you would build on your own. Or if you want to build a

conversational bot, you might use Lex, which runs on the same technology that powers Alexa devices. Or if you just want to have fun and learn how machine learning works, you might buy a DeepRacer device, which is an actual race car that you can drive with your own machine learning code. Now that's a pretty amazing way to get people to use your cloud platform, but let's change direction and look at a few other essential tools that are used by a wide variety of developers. For security, we have IAM, where you can create rules and determine

who has access to what on your AWS account. If you're building a web or mobile app where users can log into an account, Cognito is a tool that enables them to log in with a variety of different authentication methods and manages the user sessions for you. Then, once you have a few users logged into your app, you may want to send them push notifications; SNS is a tool that can get that job done. Or maybe you want to send emails to your users; SES is the tool for that. Now that you know about all these

tools, you're going to want an organized way to provision them. CloudFormation is a way to create templates based on your infrastructure in YAML or JSON, allowing you to enable hundreds of different services with the single click of a button. From there, you'll likely want to interact with those services from a front-end application like iOS, Android, or the web. Amplify provides SDKs that can connect to your infrastructure from JavaScript frameworks and other front-end applications. Now, the final thing to remember is that all this is going to cost you a ton of money, which goes directly

to getting Jeff's rocket up, so make sure to use AWS Cost Explorer and Budgets if you don't want to pay for these big bulging rockets. That's the end of the video. It took a ton of work, so please like and subscribe to support the channel, or become a pro member at fireship.io to get access to more advanced content about building apps in the cloud. Thanks for watching, and I will see you in the next one.

Related Videos

17:17

The only Cloud services you actually need ...

NeetCodeIO

129,027 views

50:07

Intro to AWS - The Most Important Services...

Be A Better Dev

418,670 views

13:18

100+ Web Development Things you Should Know

Fireship

1,501,375 views

9:53

7 Database Paradigms

Fireship

1,630,668 views

38:54

Introduction to AWS Services

AWS with Chetan

2,241,963 views

8:16

10 regrets of experienced programmers

Fireship

1,358,010 views

13:48

24 MOST Popular AWS Services - Explained i...

IT k Funde

31,407 views

49:26

AWS & Cloud Computing for beginners | 50 S...

in28minutes - Get Cloud Certified

168,389 views

28:08

AWS CEO - The End Of Programmers Is Near

ThePrimeTime

435,208 views

10:42

Ranking IT and Cybersecurity Jobs by STRES...

Josh Madakor

126,864 views

9:18

Reacting to Controversial Opinions of Soft...

Fireship

2,103,703 views

5:25

Why you're addicted to cloud computing

Fireship

917,227 views

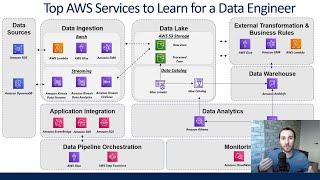

13:11

Top AWS Services A Data Engineer Should Know

DataEng Uncomplicated

163,808 views

12:34

Amazon/AWS EC2 (Elastic Compute Cloud) Bas...

Tiny Technical Tutorials

100,953 views

7:17

How I would learn AWS Cloud (If I could st...

Tech With Lucy

551,900 views

9:00

How to Burn Money in the Cloud // Avoid AW...

Fireship

553,800 views

2:11:42

AWS VPC Beginner to Pro - Virtual Private ...

freeCodeCamp.org

640,318 views

3:48

Serverless was a big mistake... says Amazon

Fireship

1,644,207 views

![Docker Crash Course for Absolute Beginners [NEW]](https://img.youtube.com/vi/pg19Z8LL06w/mqdefault.jpg)

1:07:39

Docker Crash Course for Absolute Beginners...

TechWorld with Nana

1,723,723 views

17:57

Generative AI in a Nutshell - how to survi...

Henrik Kniberg

1,974,705 views