Deep Reinforcement Learning: Neural Networks for Learning Control Laws

141.12k views3652 WordsCopy TextShare

Steve Brunton

Deep learning is enabling tremendous breakthroughs in the power of reinforcement learning for contro...

Video Transcript:

welcome back I'm Steve Brenton and today I'm gonna talk to you a bit more about reinforcement learning so in the last video I introduced the reinforcement learning architecture how you can learn to interact with a complex environment from experience and today we're gonna talk about deep reinforcement learning or some of the really amazing advances in this field that have been enabled by deep neural networks and these advanced computational architectures so again I am I can Steve on Twitter please do subscribe and do like do share this if you find it useful and tell me other things that you would like me to talk about ok so again in the last video we introduced this this agent the environment the agent measures the environment through this state s it takes some action to interact with the environment given by a policy so it has a control policy based on how it acts based on what state it's in and it is optimizing this policy to maximize its future rewards that it gets from the environment and so mathematically this policy is probabilistic so the agent has some kind of some probabilistic strategy for interacting with the environment because the environment might be stochastic or have some randomness to it and there is a value function that tells the agent how valuable being in a given state is given the policy PI that it is enacting and so today what we're going to do is we're going to augment this picture by introducing deep neural networks for example to represent the policy and so here we have now we've replaced our policy with a deep neural network so this PI is parametrized by theta where theta describes this this neural network and again it Maps the current state to the best probabilistic action to take in that environment and so the whole name of the game is to update this policy to maximize future rewards and again we have this discount rate gamma here that says that rewards in the near future are worth more than rewards in the distant future okay because again remember these rewards are gonna be relatively sparse and infrequent most of the time because we're in a semi supervised learning framework where these rewards are only occasional and so it's difficult to figure out what actions actually gave rise to those rewards this is gonna be a pretty hard optimization problem to learn this best policy of what actions to take but you know and actually the whole reinforcement learning paradigm is biologically inspired it's it's essentially inspired by this observation so there's this this notion called hebbian learning you may have heard this before and the little rhyme goes neurons that fire together wire together and basically what that means is that when you have neural activity kind of when things fire together they will essentially strengthen the wiring and the connections between those neurons and biological systems and so in these kind of deep reinforcement learning architectures the idea is that the reward signal that you get occasionally should somehow strengthen connections that led to a good policy when when the right policy is firing when these neurons are connected in a way that causes the policy the correct policy and you get a reward you want to somehow reinforce that architecture and there's lots of ways of doing this you know essentially through back propagation and so on and so forth so another area where a lot of research is going into deep learning for reinforcement learning is called cue learning I talked about this in the last video where this cue or quality function essentially kind of combines the policy and the value and it tells you jointly how good is a current state given a current action a-okay and so assuming that I do the best possible thing for all future states and actions so right now if I find myself in state s an action a I can assign a quality based on the future value that I expect given that state and given the best possible policy I can cook up and again there are deep queue networks where you learn this quality function and once you learn this quality function then when you find yourself in a state s you just look up the best possible a that gives you the highest quality for that state and this makes a lot of sense this is a lot like how a person would learn how to play chess is they would kind of simultaneously be building a policy of okay here's how I move in these situations these are the trades I'm willing to make and you're also building a value function of how you value different board positions and kind of how you gauge your strength and the strength of your position based on on the state so it kind of makes sense that this would be an area for really expanding with deep neural networks because these functions might be very very complex functions of s and a and that's exactly what neural networks are good at is giving you very very complex representing very complex functions if you have enough training data so that's what we're talking about here these still suffer from all the same challenges of regular reinforcement learning like the credit assignment problem so the fact that I might only get our reward at the very end of my a chess game makes it very hard to tell which actions actually gave rise to that award reward and so you're gonna do some of the same things that you would normally do like you might use reward shaping to give intermediate rewards based on some expert intuition or guidance and there's lots of other strategies like hindsight and replay and things like that The basic idea is we're gonna take the same reinforcement learning architecture and we're going to either replace the policy or the the Q function with a policy network or a Q network And all of this kind of exploded on the scene because of this 2015 nature paper "Human level control through deep reinforcement learning" where these authors from DeepMind essentially showed that they could build a reinforcement learner that could beat human level performance in lots of classic Atari video games. So I'm gonna hit play. I love this one.

. . this is one of the first ones that got me really excited about this.

So this reinforcement learner is essentially trying to maximize this score by breaking all of these these blocks and after a few hours of training it has an epiphany that only really excellent human players ever reach so it essentially finds an exploit in the game where it realizes that if it tunnels in one side. . .

so It's going to tunnel through here. If it tunnels through one side it can essentially use the physics of the game to break all of these blocks for it. And that's pretty amazing.

So it in a short amount of time learns a really advanced strategy that only a few humans only a small percentage of humans would actually learn eventually so really impressive. . .

this is a beautiful paper by the way, you should should go read this they talk about how they actually build their networks so they use the pixels of the screen itself as the input and they use convolutional layers and fully connected layers eventually deciding what the joystick should do what actions to take. And a lot like other examples of neural networks this was the paper that really brought reinforcement learning and deep reinforcement learning back to everyone's to the forefront of everyone's mind because this showed performance that hadn't been attainable before so this is a lot like the image net of reinforcement learning okay this brought it back into the forefront.

Related Videos

17:35

Deep Reinforcement Learning for Fluid Dyna...

Steve Brunton

51,985 views

18:40

But what is a neural network? | Deep learn...

3Blue1Brown

19,266,285 views

26:03

Reinforcement Learning: Machine Learning M...

Steve Brunton

316,549 views

1:02:00

MIT 6.S191: Reinforcement Learning

Alexander Amini

19,285 views

1:15:11

Veritasium: What Everyone Gets Wrong About...

Perimeter Institute for Theoretical Physics

1,724,757 views

42:45

Pieter Abbeel: Deep Reinforcement Learning...

Lex Fridman

70,213 views

24:00

Reinforcement Learning with Neural Network...

StatQuest with Josh Starmer

16,206 views

24:50

Overview of Deep Reinforcement Learning Me...

Steve Brunton

78,951 views

1:00:19

MIT 6.S191 (2024): Reinforcement Learning

Alexander Amini

108,247 views

3:44:18

Understanding AI from Scratch – Neural Net...

freeCodeCamp.org

536,145 views

27:10

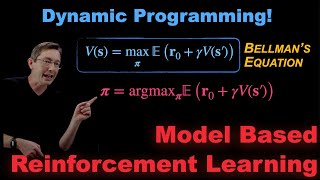

Model Based Reinforcement Learning: Policy...

Steve Brunton

117,261 views

40:08

The Most Important Algorithm in Machine Le...

Artem Kirsanov

691,627 views

1:48:01

David Silver: AlphaGo, AlphaZero, and Deep...

Lex Fridman

414,006 views

1:09:26

MIT Introduction to Deep Learning | 6.S191

Alexander Amini

294,650 views

35:35

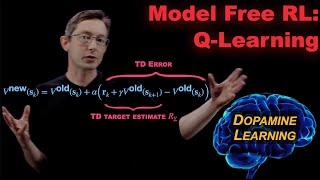

Q-Learning: Model Free Reinforcement Learn...

Steve Brunton

126,938 views

50:43

12a: Neural Nets

MIT OpenCourseWare

542,185 views

21:37

Reinforcement Learning Series: Overview of...

Steve Brunton

126,716 views

1:09:58

MIT Introduction to Deep Learning (2024) |...

Alexander Amini

991,233 views

16:27

An introduction to Reinforcement Learning

Arxiv Insights

684,842 views

11:51:22

Harvard CS50’s Artificial Intelligence wit...

freeCodeCamp.org

3,393,966 views