2025 AI AGENT Masterclass - Learn How To Build ANYTHING With LLMs

5.99k views · 7,200 words

All About AI


Video Transcript:

Over the weekend I've been diving into a post by Anthropic called "Building effective agents". It came out a few weeks ago, but I hadn't really looked at it, and I found it very interesting. I think it makes a good introduction to agents and how you can actually use them yourself. Anthropic walks through several ways to use an agent, so in this video we're going to build out all of those examples in code: the augmented LLM, prompt chaining, model routing, and parallelization. Each category gets its own code that we'll test to see how it works in practice.

Let's start at the top with how Anthropic defines an agent. They draw a distinction: "At Anthropic, we categorize all these variations as agentic systems, but draw an important architectural distinction between workflows and agents." Workflows are systems where LLMs and tools are orchestrated through predefined code paths. Agents, on the other hand, are systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks. We'll go through the article from top to bottom: building blocks, workflows, and agents, starting with the first building block, the augmented LLM.

I'll be using Cursor for this, so if you're using Cursor too it should be easy to follow along. First we want a simple API call. I'm going to use OpenAI, since I'm most familiar with their tool calling, so let's head to their documentation on platform.openai.com and grab the information about text generation. I pasted that into a text file here, and I also grabbed the full Anthropic article on effective agents as an MD file, so we have the documentation if we need extra help. I'll try to do it without that, though.

The first thing we want is a simple call to OpenAI. I have my OpenAI API key set in my .env file and I added the docs as context, so the prompt is: "Write a text gen call to the OpenAI API, print the response, only print content." This should be pretty straightforward; we just want a base to build on. Let's apply it. We need load_dotenv and os, and let's use GPT-4o. That looks good, so let's run python augmented.py and hopefully it works first time. Yes: "Why do programmers prefer dark mode? Because light attracts bugs." Just a short joke about programming. We want GPT-4o, but Cursor always defaults to GPT-4; I don't know why, it's a bit strange. That's our setup: code we can work on and expand. Like I said, my OpenAI key is set here, and that's basically it, so we can remove this.

Back to the article: what is the augmented LLM? In the diagram, the basic building block is an LLM enhanced with augmentations such as retrieval, tools, and memory, and current models can actively use these capabilities. So basically it's a simple agent that can generate search queries and use tools: we have retrieval (maybe RAG), tools, and memory. It's a pretty basic setup, so we'll make a simple call that uses some kind of tool, which should be a good example of the augmented LLM. They also mention the Model Context Protocol; I have a lot of videos on MCP, and that's another example of the augmented LLM. Let's continue: start a new chat and grab our code.
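A minimal sketch of that first text-generation call is below. It is not the code Cursor generated in the video: it assumes the official openai Python SDK (v1 or later), which reads OPENAI_API_KEY from the environment (for example after load_dotenv()). generate_text mirrors the name used later in the video, while build_messages is a hypothetical helper. The client is a parameter so the message-building logic can be checked without a network call, and the optional system message anticipates the system role used later.

```python
from typing import List, Optional


def build_messages(prompt: str, system: Optional[str] = None) -> List[dict]:
    """Build a Chat Completions message list, with an optional system message first."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return messages


def generate_text(prompt: str, system: Optional[str] = None,
                  model: str = "gpt-4o", client=None) -> str:
    """Send one prompt to the Chat Completions API and return only the message content."""
    if client is None:
        # Lazy import so build_messages works without the SDK installed.
        # Assumes OPENAI_API_KEY is already in the environment (e.g. via load_dotenv()).
        from openai import OpenAI
        client = OpenAI()
    response = client.chat.completions.create(
        model=model, messages=build_messages(prompt, system)
    )
    return response.choices[0].message.content


# Usage (needs network and an API key):
# print(generate_text("Tell me a short joke about programming."))
```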
Next I want to create a file called something like function call.md. Let's head over to the OpenAI documentation, go to function calling, and copy the page, so we grab all the documentation on function calling, paste it into our MD file, and add that to the context. You can see I also added an OpenWeather API key; you can just use this if you want to, it's only there so we can make an API call.

Now a simple prompt: "I want to use the OpenWeather API as a function call tool in our augmented.py code. Add tool use so we can find the weather for a specific city." That should be enough, because we only need to add some code with the tool schema set up. It's using a get_weather function, good, that's what we need. We want to capture temperature, description, and humidity, and here is our tool schema, which looks pretty good. It wrote GPT-4 again, so we need to change that. Let's apply this, switch both places to GPT-4o, and save.

So now we have a function called get_weather. It calls the OpenWeather API, takes a city as an argument, and uses it to look up the temperature, humidity, and weather description for that city. Then we have our tool schema, which points to the get_weather function we just created ("get weather for a specific city") with the city name as its parameter. When we make the call we need to pick a city, so let's start with Tokyo, and the result gets sent back to the model. Now when we run python augmented.py, hopefully it finds the weather in Tokyo using our API.
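The tool-calling flow described above can be sketched as follows, assuming the Chat Completions tools format from OpenAI's function-calling docs and OpenWeatherMap's current-weather endpoint (api.openweathermap.org/data/2.5/weather with units=metric). The OPENWEATHER_API_KEY variable name and the run_with_tools helper are assumptions of this sketch, not names from the video. The model first returns a tool call; we run get_weather locally, append the result as a tool message, and call the model a second time for the final answer.

```python
import json
import os
import urllib.parse
import urllib.request

# Tool schema in the Chat Completions "tools" format.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a specific city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name, e.g. Oslo"}
            },
            "required": ["city"],
        },
    },
}


def get_weather(city: str) -> dict:
    """Current weather via OpenWeatherMap (assumes OPENWEATHER_API_KEY is set)."""
    query = urllib.parse.urlencode(
        {"q": city, "appid": os.environ["OPENWEATHER_API_KEY"], "units": "metric"}
    )
    url = "https://api.openweathermap.org/data/2.5/weather?" + query
    with urllib.request.urlopen(url, timeout=10) as resp:
        data = json.load(resp)
    # Keep only the fields captured in the video: temperature, description, humidity.
    return {
        "temperature": data["main"]["temp"],
        "description": data["weather"][0]["description"],
        "humidity": data["main"]["humidity"],
    }


def run_with_tools(client, prompt, tool_impls, system=None, model="gpt-4o"):
    """One round of tool use: let the model request tools, run them, then ask again."""
    messages = [{"role": "system", "content": system}] if system else []
    messages.append({"role": "user", "content": prompt})
    first = client.chat.completions.create(
        model=model, messages=messages, tools=[WEATHER_TOOL]
    )
    reply = first.choices[0].message
    if not reply.tool_calls:  # the model answered directly
        return reply.content
    messages.append(reply)  # the assistant turn that contains the tool calls
    for call in reply.tool_calls:
        args = json.loads(call.function.arguments)
        result = tool_impls[call.function.name](**args)
        messages.append(
            {"role": "tool", "tool_call_id": call.id, "content": json.dumps(result)}
        )
    final = client.chat.completions.create(
        model=model, messages=messages, tools=[WEATHER_TOOL]
    )
    return final.choices[0].message.content


# Usage (needs network and both API keys):
#   from openai import OpenAI
#   print(run_with_tools(OpenAI(), "What's the weather like in Tokyo today?",
#                        {"get_weather": get_weather}))
```

Passing a system argument (for example "Return the response as a poem.") is one way to apply the system-role idea the video turns to next.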
It does: "Today's weather in Tokyo is characterized by broken clouds, with a temperature of 4.5 °C and 47% humidity." So that works pretty well. Now let's change Tokyo to Oslo (I think we need to change it in both places) and check the weather in Oslo with basically the same setup: "Oslo is overcast, -11 °C with 87% humidity."

So this is how you augment the LLM: we give it some kind of action it can take, in this case a tool to get the weather. There are more things we can do to make it more reliable. For example, we can add a system role: "You are a helpful assistant that can get weather information. Use the tool to get the information," or something like this. A system message helps if the model is struggling to call the tool. I think we need to add it in both places. It doesn't make a big difference here, but I like having system messages, and in the system message we can also specify something like "Return the response as a poem." Now we should get the weather in Oslo as a poem, and yes: "In Oslo's embrace…", quite cold. Great. So you can change things up just by using a system role; keep that in mind whenever you have a specific requirement for the output.

That's basically how you can augment an LLM, and how Anthropic is thinking about it. We could of course add memory, add RAG query results, and mix all of these together, but I think tool calling represents what they had in mind here.

Next up is prompt chaining. Prompt chaining decomposes a task into a sequence of steps, where each LLM call processes the output of the previous one, and you can add programmatic checks (see "gate" in the diagram) on any intermediate step.
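Anthropic's description of a chain with a gate can be sketched generically. Everything here is hypothetical: llm is any function that takes a prompt string and returns text, each stage builds the next prompt from the previous output, and a gate is an ordinary Python check that stops the chain early rather than passing a bad intermediate result on.

```python
from typing import Callable, List, Optional, Tuple

# Each stage: (build the next prompt from the previous output, optional gate on the result).
Stage = Tuple[Callable[[str], str], Optional[Callable[[str], bool]]]


def run_chain(llm: Callable[[str], str], user_input: str, stages: List[Stage]) -> str:
    """Prompt chaining: each LLM call processes the output of the previous one."""
    output = user_input
    for number, (make_prompt, gate) in enumerate(stages, start=1):
        output = llm(make_prompt(output))
        # Programmatic check ("gate"): stop early instead of passing bad output on.
        if gate is not None and not gate(output):
            raise ValueError(f"gate after step {number} rejected the output: {output!r}")
    return output


# Hypothetical example: outline first, check the outline, then write from it.
OUTLINE_THEN_WRITE: List[Stage] = [
    (lambda topic: f"Outline a short post about {topic}. One point per line.",
     lambda outline: len(outline.strip().splitlines()) >= 3),
    (lambda outline: f"Write the post from this outline:\n{outline}", None),
]
```

In practice llm could wrap a text-generation call; the outline gate here only requires at least three lines, a stand-in for whatever check a real task needs.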
So we have a gate here. When to use this workflow: it's ideal for situations where the task can be easily and cleanly decomposed into fixed subtasks, and the main goal is to trade off latency for higher accuracy by making each LLM call an easier task. They give some examples. Basically, we make a call, get an output from the LLM, and decide whether the output is OK; if it's good, we pass it to a new call, and so on. That's prompt chaining.

Let's set up a simple prompt chain so you can see how this works. I thought we could continue with the code we have, but I want to turn it into a function: "Turn this into a function named openai_tool_call where the argument is a string with a city name." A function makes it easier to manipulate the responses. Taking a quick look, it returns the response, which looks good. Now it just needs a city name as the argument ("What's the weather like in {city} today?"), so let's pass in Oslo, print the response, and see if it works. That looks pretty good. Next I want to store the result in a variable, so let's call it weather_one and print that; this works too.

Since weather_one has captured the weather from the first API call, we can now use it in our next API call, and that's what I mean by prompt chaining. Let's have Cursor "create a simple text generation function that uses the OpenAI API". Now we have generate_text: it takes the prompt as an argument and calls GPT-4o, and we don't need a system message here. Let's bring weather_one down here and build an f-string prompt that gives the model the weather in Oslo now and asks it to compare this weather in Oslo now against the average weather in Oslo. So we take the response from our first call, which just gets the live weather, and feed it into a new call that compares it with the average.
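A rough reconstruction of this two-step chain, not the video's exact code: fetch_weather stands in for the tool-calling function (which takes a city name), generate stands in for generate_text, and both are passed in as hypothetical parameters so the chaining itself is easy to see and test. The prompt wording loosely follows the video.

```python
from typing import Callable


def compare_with_average(city: str,
                         fetch_weather: Callable[[str], str],
                         generate: Callable[[str], str]) -> str:
    """Two-step prompt chain: call 1 gets live weather, call 2 reasons about it."""
    # Step 1: e.g. the tool-calling function, which asks
    # "What's the weather like in {city} today?" with the weather tool enabled.
    weather_now = fetch_weather(city)
    # Step 2: the first answer becomes part of the second prompt.
    prompt = (
        f"Weather in {city} now: {weather_now}\n"
        f"Compare this weather in {city} now against the average weather in {city}."
    )
    return generate(prompt)
```

A third step would follow the same pattern: pass the comparison (and, if needed, weather_now) into another prompt.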

If we run this now, we get a response comparing the weather with the average: the average is around -7 to -1 °C, it's -7.8 °C right now, so conditions are typical or slightly colder than average, and a humidity of 41% is quite low for Oslo in winter. So we used the response from the tool call in a new prompt that compares the weather with averages that come from GPT-4o's original training data. And we can keep going: we could take this variable and use it in yet another prompt, chaining them together. This can be very helpful, because sometimes the response you get is exactly what you need to solve the next problem. I think this is what Anthropic means by prompt chaining: we need answer one to get answer two, answers one and two to get answer three, and so on. I'll be making a video soon where we do loads of prompt chaining with different AI agents, so look out for that. I think that example covers prompt chaining.

Now let's move on to routing and see how they describe it. Routing classifies an input and directs it to a specialized follow-up task. This workflow allows for separation of concerns and for building more specialized prompts; without it, optimizing for one kind of input can hurt performance on other inputs. When to use this workflow: routing works well for complex tasks with distinct categories, for example directing different types of customer service queries, or routing easy and common questions to smaller models. I think we'll do a model routing example. We need a router that decides whether a call goes to GPT-4o mini, maybe o1-mini, or GPT-4o: based on the input, it decides which model should answer, and then we return that output. That's what I mean by routing here, although their examples are a bit broader: you can also route to different prompts.
Maybe we can do that too. Let's head back to Cursor and build this example. To keep it simple, let's reuse what we have: we keep the generate_text function but remove the get_weather and tool call functions. I'll rename generate_text to router_agent. It takes in a prompt (we can sort out where that comes from afterwards), and this becomes the router agent. I think we want to give it a system role, and this part is quite important, because it decides which model the router sends each request to. Let me think for a second about how to do that.

The router agent's job is to send the user input to the correct model. So I changed the system message: "You are an LLM router agent. Your job is to route the user input to the correct model (or agent)." I'm just calling them agents; it doesn't matter too much. Then we list some parameters to consider when routing the user's query: Does the user input require extensive reasoning? If yes, send it to the reasoning agent. Math or coding? If yes, send it to the reasoning agent. Does the user just want a simple conversational answer? Send it to the conversational agent. It's a pretty basic setup.

Now we need two different agents. First, def reasoning_agent, which is pretty straightforward: we use GPT-4o for that. The def conversational_agent is pretty much the same, but with GPT-4o mini. So the mini model, which is cheaper and faster, handles conversational requests, and GPT-4o is the reasoning agent. o3 or o1 would have been a better choice for reasoning, but let's keep it like this for the example.

Now the prompt should go to the router agent first, which decides where it goes next, so we need to add some code. I'm going to ask Claude to help set this up: "We need a router agent to take the user input first, then decide where to route the question next. The router agent should only decide where the request goes; it shouldn't answer it. So if the user inputs 'hello', the agent should send it to the conversational agent, and so on. Then the user should only see the output from the conversational agent and get the answer back from this agent." Let's implement this. I had a suspicion it was going to change my system prompt: now the router should respond "reasoning" for those cases and "conversational" otherwise. Let's apply this, and I'll show you what I meant: if the route is "reasoning", we send the input to the reasoning agent; if not, we send it to the conversational agent.

Let's do a quick test and send in just "hello". Hopefully we only get a response from GPT-4o mini, the conversational agent, and yes: routed to the conversational agent, "Hello! How can I help you today?" Now let's try a coding question with a different argument: "Write Python code to calculate the area of a circle." Hopefully this routes to the other agent, and yes, the reasoning agent picks it up, and we get Python code to calculate the area of a circle. That's pretty straightforward, but it can be powerful because it can save money.
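The router can be sketched like this, under several assumptions: the router system prompt paraphrases the one in the video, the labels "reasoning" and "conversational" follow the video, the model names (gpt-4o for the router and reasoning agent, gpt-4o-mini for conversation) are placeholders, and falling back to the conversational agent on an unexpected label is a choice made for this sketch. llm is injected as a function of (model, system, prompt) so the routing can run offline; openai_llm is one possible real backend, assuming the openai SDK and OPENAI_API_KEY.

```python
from typing import Callable, Dict, Tuple

# llm(model, system_prompt, user_prompt) -> text; injected so no network is needed here.
LLM = Callable[[str, str, str], str]

ROUTER_MODEL = "gpt-4o"  # placeholder; the video used GPT-4o for the router
ROUTER_SYSTEM = (
    "You are an LLM router agent. Do not answer the user. "
    "If the input requires extensive reasoning, math, or coding, reply with exactly "
    "'reasoning'. If it only needs a simple conversational answer, reply with exactly "
    "'conversational'."
)

# label -> (model, system prompt). A route could just as well point at a different prompt.
ROUTES: Dict[str, Tuple[str, str]] = {
    "reasoning": ("gpt-4o", "You are a careful assistant. Think step by step."),
    "conversational": ("gpt-4o-mini", "You are a friendly, concise assistant."),
}


def route(llm: LLM, user_input: str) -> str:
    """Ask the router model for a label; fall back to 'conversational' if unclear."""
    label = llm(ROUTER_MODEL, ROUTER_SYSTEM, user_input).strip().strip(".'\"").lower()
    return label if label in ROUTES else "conversational"


def answer(llm: LLM, user_input: str) -> Tuple[str, str]:
    """Route first, then let only the chosen agent answer; returns (label, answer)."""
    label = route(llm, user_input)
    model, system = ROUTES[label]
    return label, llm(model, system, user_input)


def openai_llm(model: str, system: str, prompt: str) -> str:
    """Possible real backend (lazy import; assumes OPENAI_API_KEY is set)."""
    from openai import OpenAI
    response = OpenAI().chat.completions.create(
        model=model,
        messages=[{"role": "system", "content": system},
                  {"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
```

Because ROUTES maps a label to a (model, system prompt) pair, the same structure covers routing to different prompts, such as refund versus technical-support handlers, by changing only the system prompts.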
Let's say we have this in a loop: sometimes we get the answer from mini when the request is just conversational, and when we need more power we get it from the reasoning agent. The trade-off is added latency, because everything now has to go through the router agent first (we're using GPT-4o here, which was a bit slow, but that's fine), and we need to be sure it makes the right decision. It shouldn't be that hard to evaluate that. Every request also costs a bit more, since we make an additional API call. If you look at what Anthropic says, this works well for picking models and for different types of customer service queries, where you might route to a prompt for refund requests, technical support, and so on. That's what we didn't do here: instead of conversational and reasoning agents, we could have different prompts, and the router decides which prompt each input should be sent to. That's also something you can do, and it's not hard to set up. But this shows how to create a simple router that directs the input to where it's most likely to get the best, cheapest, or most optimized response.

The next workflow is parallelization: LLMs can sometimes work simultaneously on a task and have their outputs aggregated programmatically. One variant is sectioning, breaking a task into independent subtasks run in parallel; they also suggest voting, running the same task multiple times to get different outputs. I think we'll do sectioning with an async call that runs two prompts in parallel to get two different answers. Let's say LLM call 1 asks for the weather and LLM call 2 asks for something else; we aggregate both into one prompt and then use both results to produce the output.
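The sectioning plan just described can be sketched with asyncio.gather: the independent subtask prompts are started together, and one more call aggregates their results. The async llm function is injected (a design choice of this sketch); openai_llm shows one possible backend using the SDK's AsyncOpenAI client, assuming OPENAI_API_KEY is set. The example prompts in the usage comment are placeholders.

```python
import asyncio
from typing import Awaitable, Callable, List

AsyncLLM = Callable[[str], Awaitable[str]]


async def sectioned(llm: AsyncLLM, subtasks: List[str], combine: str) -> str:
    """Sectioning: run independent subtasks concurrently, then aggregate in one call."""
    # All subtask calls run concurrently; gather returns results in input order.
    results = await asyncio.gather(*(llm(task) for task in subtasks))
    sections = "\n\n".join(
        f"Result {i}:\n{text}" for i, text in enumerate(results, start=1)
    )
    return await llm(f"{combine}\n\n{sections}")


async def openai_llm(prompt: str, model: str = "gpt-4o-mini") -> str:
    """Possible real backend (lazy import; assumes the openai SDK and OPENAI_API_KEY)."""
    from openai import AsyncOpenAI
    client = AsyncOpenAI()
    response = await client.chat.completions.create(
        model=model, messages=[{"role": "user", "content": prompt}]
    )
    return response.choices[0].message.content


# Usage (needs network):
# print(asyncio.run(sectioned(
#     openai_llm,
#     ["What's the typical weather in Oslo in January?",
#      "Suggest three indoor activities in Oslo."],
#     "Combine these into a short winter travel tip for Oslo.")))
```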
When to use this workflow: parallelization is effective when the divided subtasks can be parallelized for speed, or when multiple perspectives are needed. They give examples of both sectioning and voting. It shouldn't be too hard to set up in Python; we can just use an async call. Let's head over to Cursor and set it up. I went back to a single simple call in our code, so we just have the llm_agent function that takes a prompt in and gives a response back. We may need to add one more function, but first let me show you a workflow we can use in Cursor. Remember, we added the full effective agents article to our documents, so let's add it to our context here: effective agents.
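Voting, the other parallelization variant, is only mentioned in the video, so this is a hedged sketch rather than anything built there: the same prompt is sent n times concurrently and the most common normalized answer wins. The async llm function, n=5, and the simple majority rule are assumptions for illustration.

```python
import asyncio
from collections import Counter
from typing import Awaitable, Callable, Tuple


async def vote(llm: Callable[[str], Awaitable[str]], prompt: str,
               n: int = 5) -> Tuple[str, int]:
    """Voting: run the same task n times concurrently; return (winning answer, votes)."""
    answers = await asyncio.gather(*(llm(prompt) for _ in range(n)))
    # Normalize so "Yes.", "yes" and " YES " count as the same vote.
    normalized = [a.strip().strip(".").lower() for a in answers]
    winner, count = Counter(normalized).most_common(1)[0]
    return winner, count
```

Counter.most_common orders equal counts by first occurrence, so ties go to whichever answer appeared first; a real setup might instead require a minimum number of agreeing votes.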

Related Videos

26:52

Andrew Ng Explores The Rise Of AI Agents A...

Snowflake Inc.

351,914 views

18:35

Build Anything with Claude Agents, Here’s How

David Ondrej

229,975 views

10:23

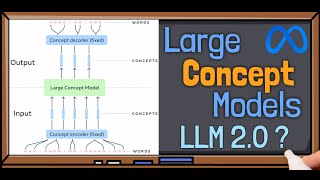

Large Concept Models (LCMs) by Meta: The E...

AI Papers Academy

9,372 views

29:46

How to Build Super Effective AI AGENTS - F...

All About AI

26,837 views

47:53

Can an AI Agent do Data Science? | Advance...

W.W. AI Adventures

3,696 views

58:06

Stanford Webinar - Large Language Models G...

Stanford Online

87,292 views

22:36

What is Agentic AI? Important For GEN AI I...

Krish Naik

85,629 views

20:19

Run ALL Your AI Locally in Minutes (LLMs, ...

Cole Medin

330,580 views

31:04

Reliable, fully local RAG agents with LLaM...

LangChain

79,571 views

57:45

Visualizing transformers and attention | T...

Grant Sanderson

290,475 views

24:33

SmolAgents: Build a Production-Ready Agent...

Prompt Engineering

9,511 views

26:51

RAG for VPs of AI: Jerry Liu

AI Engineer

2,840 views

25:37

AI Agents Tutorial For Beginners

codebasics

116,339 views

30:45

New - Easy to Learn - AI Agents: Smolagent...

Discover AI

6,799 views

20:34

Finally My AI AGENTS Has Money + the xAI A...

All About AI

4,879 views

19:15

GraphRAG: The Marriage of Knowledge Graphs...

AI Engineer

78,185 views

20:51

This AI AGENT Will Disrupt Industries BIG ...

All About AI

13,015 views

39:26

Build anything with o1 agents - Here’s how

David Ondrej

137,469 views

23:21

CUDA Mode Keynote | Andrej Karpathy | Eure...

Accel

19,731 views

24:02

"I want Llama3 to perform 10x with my priv...

AI Jason

522,097 views