Gaussian Processes : Data Science Concepts

12.97k views, 5488 words

ritvikmath

All about Gaussian Processes and how we can use them for regression.

RBF Kernel : https://www.youtu...

Video Transcript:

[Music] What is up, everybody? Welcome back. Today I want to talk to you about Gaussian processes, and specifically we'll be using Gaussian processes to solve a regression problem. As always on this channel, let's set it up with something fun. Let's say that this is you: you're a fisherman, and you are fishing in this big, long lake. Some areas of the lake have a lot of fish that you could catch per day, like this middle section here, and other areas of the lake have a lot fewer fish, like these outer regions over here.

So you set this up as a problem in two dimensions. On the x-axis you have the number of meters down the river: on the very left is the beginning of the river at 0 m, and on the very right is the end of the river at 100 m. On the y-axis you have the number of fish that you can catch per day at that point on the river. These two orange dots, one at 20 m and the other at 100 m, are places you have observed: two past days when you actually went out to those places, caught fish, and recorded how many fish you were able to catch that day. At 20 m down the river you were able to catch 10 fish a day, and at 100 m down the river you were able to catch 40 fish a day.

Now the question arises: if my goal is to maximize the number of fish I'm going to catch per day, where along the river, between the 0 and 100 m marks, should I go try next? All I have right now is these two data points of known quantities of fish that I've been able to catch per day.

There's a really easy way to solve this problem: you just draw a line between these points and say that's my best estimate of how many fish I would catch per day. That's perfectly fine; it's just a basic linear regression. But we actually want to know not just how many fish I could expect to catch on average at any point on the river but, much more importantly, what the uncertainty around that estimate is. Is it going to be exactly that many fish, or is it very uncertain, so that it could be far fewer or far more?

To give a little taste of that, let's say we're trying to figure out what happens if we go 30 m down the river. Maybe the expected number of fish is around this quantity, a little over 10, and there's a little bit of uncertainty. The reason there's only a little uncertainty is that 30 m down the river is pretty close to 20 m down the river, where we do know how many fish we're going to catch. So even though there is some uncertainty there, it's not massive, because it's close to a place where we have already observed data. A very similar argument holds for 90 m down the river: maybe the average is a little less than 40, and there's some uncertainty, but not too much, because it's close to a known data point that we already have.

Now, I mentioned those two points, of course, to contrast them with 60 m down the river, which, if you do the quick math, is 40 m away from each of the two known points. Maybe the average around there is roughly the average of 40 and 10, so maybe 25 fish per day we could catch there. But the more important part is that the uncertainty is logically going to be a lot bigger, because 60 m isn't close to any of the data we have so far. The uncertainty is going to reflect the fact that it's far away from the data points we do have information about.

So our goal is codified in the story I just told. We have some known data: 10 fish at 20 m down the river and 40 fish at 100 m down the river. That known data gets encoded as X, which is the 20 and 100 m on the x-axis here, and f(X), which is the number of fish we catch. Given that known data, we want to find the distribution of f, the number of fish we're going to catch, at some unknown data points Y, for example 30 m, 60 m, and 90 m. The crucial word here is "distribution." I want to know not just the average, the expected number of fish I can catch; I want a whole distribution. I want to know the uncertainty around that estimate, because it's that average coupled with that uncertainty that lets me make a well-rounded decision: do I try a spot with a high expected number of fish but a huge uncertainty around it, or a spot with a moderate expected number of fish but a much lower uncertainty?

That's exactly what we're after. Gaussian process regression tries to find the distribution of some unknown quantities given some known data that we already have. As the name "Gaussian process" suggests, we are going to make a key assumption here, and it's good to point out that it is an assumption: f(X) and f(Y), the number of fish at the known points and the number of fish at the unknown points, are jointly normally distributed with some mean μ and some covariance matrix Σ. The reason this is a covariance matrix and not just a single standard deviation σ, as you might expect, is that f(X) and f(Y) are vectors. f(X) has one entry per training (known) data point, here just two, and f(Y) has one entry per unknown data point whose distribution we are aiming to predict, in this case three: the number of fish we would expect at 30, 60, and 90 m down the river. So this is really the key assumption, the first thing to get across: Gaussian process regression assumes that both the known and the unknown quantities follow a multivariate normal distribution with some mean and some covariance matrix.
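Written out for this example, the key assumption reads as follows. Splitting Σ into blocks, Σ_XX for covariances among the known points, Σ_YY among the unknown points, and Σ_XY, Σ_YX between the two groups, is our own notation for illustration; the video does not write it down.

$$
\begin{bmatrix} f(X) \\ f(Y) \end{bmatrix}
\sim \mathcal{N}\!\left(\mu,\ \Sigma\right),
\qquad
\Sigma = \begin{bmatrix} \Sigma_{XX} & \Sigma_{XY} \\ \Sigma_{YX} & \Sigma_{YY} \end{bmatrix},
\qquad
X = (20,\ 100),\quad f(X) = (10,\ 40),\quad Y = (30,\ 60,\ 90)
$$

Here μ has 5 entries and Σ is 5 × 5: Σ_XX is 2 × 2, Σ_YY is 3 × 3, and Σ_XY = Σ_YXᵀ is 2 × 3.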

Now, why is this important to set up? Let's say we figured out what the mean and the covariance matrix should be. The rest of this video is just explaining how exactly we get there, but pretend for a second that we got there magically. If we knew μ and we knew the covariance matrix, then we could set up the distribution of f(Y) given f(X). We'll get to this later in the video, but it's going to be a normal distribution as well, with a different mean and a different covariance matrix. Crucially, if we have that, it gives us exactly what we're after: the distribution of f(Y) given f(X) is the mathematical formulation of the sentence we wrote here. Given this known data X (technically, f(X)), what should the distribution of f(Y) be? We want the distribution of f at these unknown points Y. That's exactly why it's important to set up f(X) and f(Y) as jointly normal.

So how are we going to figure out what that mean and covariance matrix should be? The first part is really anticlimactic, and it's a common simplification, or assumption, that we make in Gaussian process regression: we assume that μ is the zero vector. This seemed like a strange assumption to me, so let me spend 30 seconds on it. I thought: if we assume μ is zero, how do we predict anything with a mean different from zero, for example f(30), f(60), or f(90)? That comes down to the conditional. Once we set up the conditional distribution of f(Y) given f(X), the mean of that conditional is no longer going to be zero. We'll get more into that later, but suffice it to say that it's safe to set this mean to zero to simplify a lot of the math that comes later, and that does not imply that the means of the f(Y)s, the means of the distributions for the number of fish at 30, 60, and 90 m, are also zero. We'll see that a little later on.

The more interesting part of the story, and I would argue where the power of Gaussian process regression comes from, is setting up this covariance matrix Σ. How are we going to populate its elements? First, how many elements are there? The matrix has one row and one column for each training data point plus each testing data point, so in this case there are five data points in our five-dimensional Gaussian, or normal, distribution, and we're going to fill in those 25 numbers (5²) using the power of kernels.

In this context, all you really need to think of a kernel as is some function that we use to fill in the entries of the covariance matrix. That function can take many different forms, but a very popular one is the radial basis function kernel, or RBF kernel, which you may be familiar with from support vector machines; we have a whole video on that, and I'll post it in the description below. It looks a little bit scary, but let's break it down. It says that if you want to fill in the (i, j) entry of the covariance matrix Σ, it takes this form: σ², which we'll come back to in a second, times e to the power of negative the squared distance between x_i and x_j, ‖x_i − x_j‖², all divided by 2ℓ². We'll come back to this ℓ parameter in a second as well.

The key part really driving all this is the distance between two of our x's; we'll just call it d. I put it in norm notation because in general your input could be multi-dimensional. Here our input is one-dimensional, so the norm is just the absolute distance between two points. For example, if x_i is 20 and x_j is 30, the distance between them is 10 m; if they're 20 and 100, it's 80 m. That's what gets plugged in here and squared. Notice the negative sign in front of this quantity, which can never be negative. Because of that sign and because we have an exponential function, as the distance between two points increases, Σ_ij decreases, and it decays exponentially in the squared distance.

That's the story mathematically, but much more intuitively it's saying that data points that are far apart don't affect each other's function values as much as data points that are close together. That mathematically encodes exactly the story we were telling at the beginning: 20 and 30 are close to each other, so when I'm figuring out what the distribution of the function should be at 30, its average and its standard deviation, I am going to take a lot of influence from my neighbor at 20, because it's just 10 m away. What I'm not going to take a lot of influence from is my neighbor at 100, because that's a whole 70 m away.

Filling out the covariance matrix this way, whether we use a radial basis function kernel, a periodic kernel, a linear kernel, or any kind of kernel we can think of, encodes a similar kind of assumption: certain data points are assumed to have more influence on the unknown data points than others. In our case it's the simple assumption that the closer a data point is to you, the more influence its value has on your own mean and your own standard deviation.

Then σ and ℓ are just hyperparameters. ℓ controls the rate of this decay, and σ controls the overall magnitude of the covariance. To see what σ is doing, pretend the distance is zero, so we're just considering the covariance of a data point with itself, also known as its variance. Then e⁰ is 1, and Σ_ii is just σ². So σ² tells us what the variance is at a given data point: the bigger we make σ, the more uncertainty we expect on average, and the smaller we make σ, the less uncertainty we expect on average. That's what ℓ and σ are doing. But again, the key point is that if we use the radial basis function kernel, which is the most popular kernel in Gaussian processes, we're encoding the assumption we talked about verbally and graphically over here: nearby data points affect your distribution a lot more than data points that are farther away from you. And that, folks, really is the meat of Gaussian processes; if we understand that, the rest of this video is going to be a breeze.

So let's switch pages. What we're going to do now is use that radial basis function kernel, with appropriately set σ and ℓ, to fill out this covariance matrix, and then point out some things worth our attention. First, the covariance between the data points 20 and 30 is 98. If you look at two other data points that are also 10 m apart, 90 and 100, and track down that entry, it is also 98. The point I'm trying to get across is that our covariance matrix is set up so that all that matters for the covariance between two data points is their distance from each other. You can see that mathematically on the previous page: if the distance is fixed at 10, then whether x_i is 90 and x_j is 100 or x_i is 20 and x_j is 30, the value in the covariance matrix is the same. That's what we call a stationary kernel: it is invariant to the actual locations of the data points and cares only about the distances between them. Kernels don't have to be that way, but it's a very nice property in general. So that's observation number one.

Another observation, which we pointed out on the previous page: if you look down the diagonal, all of these numbers are 100, because, as we showed mathematically, they're all equal to σ². That tells us σ is 10 in our case, so we can recover σ just by looking at the diagonal. The other thing to note is that, as every covariance matrix must be, this matrix is symmetric. That's very much intentional: if you flip the roles of i and j, the distance between x_i and x_j stays exactly the same, so of course the corresponding elements of the covariance matrix have to be the same. So we have a well-functioning covariance matrix that meets the criteria we want for this problem.

Now, going back to the previous page for a second: we wanted a normal distribution with a mean and a covariance matrix. The mean has been set to zero for simplicity, and the covariance matrix has been filled in using the radial basis function kernel, although any number of kernels could have worked, as we'll revisit in a second. So now we have the joint distribution of f(X) and f(Y): a normal distribution with a known mean and a known covariance matrix.

Crucially, what we want is the distribution of f(Y), Y again being the unknown data points at 30, 60, and 90 m, whose numbers of fish are exactly the distributions we're trying to predict in this whole problem. We want to know those distributions given f(X), the known numbers of fish at the known places on the river, 20 m and 100 m. This is one of those rules about normal distributions: f(X) and f(Y) are each normal, which we get by marginalizing them out of the joint distribution above, and the resulting conditional distribution is also normal, with a certain mean μ′ and a certain covariance matrix Σ′.

There's actually a lot going on in just these three lines. I don't want to dwell on them too much, but for the mathematically inclined among you, we did quite a few operations here to make this true. All we said at the very beginning is that the joint distribution of the known numbers of fish, f(X), and the unknown numbers of fish at the unknown data points, f(Y), is a normal distribution with the mean and covariance matrix we just derived. The first thing we did was marginalize to get f(X) and f(Y) separately, and we can show that those are normal distributions in themselves. Once we've shown that, the rules of normal distributions say that when you condition one on the other, the conditional is also a normal distribution. All of these have different means and different covariance matrices, but that's the reason normal distributions are used here, and the reason we call these Gaussian processes: normal distributions have a lot of very nice properties when it comes to marginalizing and conditioning.

Now, the main question you probably have is: okay, we have some μ′ and some Σ′, but what are they? If we knew μ′ and Σ′, we'd be done with this problem, because we would have all of the means for the f(Y)s, and we could read the variances, and therefore the standard deviations, off the diagonal of this covariance matrix, giving full normal distributions for all of our unknown data points. This is a place where, again, I'm not going to delve into the math too much, but if you're interested, please leave a comment below and I can definitely point you to the appropriate equations. All we really need to know is that to get μ′, the new mean of the f(Y)s, all you need is Σ, the original covariance matrix, which we literally have right here, and the values of f(X), which we also know because that's our training data: if you're 20 m down the river you catch 10 fish, and if you're 100 m down the river you catch 40 fish. All that information is known, so we also know μ′. This is a callback to the previous page, where we assumed μ was zero, so it seemed a little impossible that the means at all these different unknown points would be anything other than zero.
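For readers who want the equations the video skips: with a zero prior mean, and writing Σ_XX, Σ_XY, Σ_YX, Σ_YY for the blocks of Σ belonging to known and unknown points (our notation, not the video's), the standard Gaussian conditioning results are $\mu' = \Sigma_{YX}\Sigma_{XX}^{-1} f(X)$ and $\Sigma' = \Sigma_{YY} - \Sigma_{YX}\Sigma_{XX}^{-1}\Sigma_{XY}$. The NumPy sketch below builds the 5 × 5 RBF covariance matrix and applies those two formulas. It assumes σ = 10, which matches the diagonal of 100, and ℓ = 50, a value we chose because it gives a covariance of about 98 for points 10 m apart; the video does not state ℓ.

```python
import numpy as np


def rbf_kernel(a, b, sigma=10.0, length=50.0):
    """RBF kernel for 1-D inputs: sigma^2 * exp(-(a_i - b_j)^2 / (2 * length^2)).

    sigma=10 and length=50 are assumed hyperparameters for this sketch.
    """
    a = np.asarray(a, dtype=float)[:, None]
    b = np.asarray(b, dtype=float)[None, :]
    return sigma**2 * np.exp(-((a - b) ** 2) / (2.0 * length**2))


# Known data from the fishing example: meters down the river -> fish per day.
x_known = np.array([20.0, 100.0])
f_known = np.array([10.0, 40.0])
# Unknown points whose distributions we want.
y_unknown = np.array([30.0, 60.0, 90.0])

# Full 5 x 5 covariance over known points (first) and unknown points (after).
all_points = np.concatenate([x_known, y_unknown])
sigma_full = rbf_kernel(all_points, all_points)

# Blocks of the joint covariance matrix.
n = len(x_known)
k_xx = sigma_full[:n, :n]
k_yx = sigma_full[n:, :n]
k_yy = sigma_full[n:, n:]

# Conditional (posterior) mean and covariance of f(Y) given f(X), zero prior mean.
mu_post = k_yx @ np.linalg.solve(k_xx, f_known)
cov_post = k_yy - k_yx @ np.linalg.solve(k_xx, k_yx.T)
std_post = np.sqrt(np.diag(cov_post))

print(np.round(sigma_full).astype(int))
for y, m, s in zip(y_unknown, mu_post, std_post):
    print(f"{y:.0f} m: mean {m:.1f} fish, std {s:.1f}")
```

Under these assumed hyperparameters the means come out near 14, 28.4 and 39 fish and the standard deviations near 1.7, 4.2 and 1.7, in line with the figures quoted in the video. Note that `cov_post` uses only the kernel blocks, never `f_known`, which matches the point that Σ′ depends on Σ alone. `np.linalg.solve` is used instead of forming Σ_XX⁻¹ explicitly, which is the numerically safer habit.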

But this is exactly how that works out. When it comes to actually getting the averages of the normal distributions at these unknown data points, they end up being a function of the covariance matrix and the known numbers of fish down the river, and that's how the mean doesn't stay at zero: it gets updated. Getting Σ′ is even simpler: it depends only on the covariance matrix Σ. It's purely a function of this covariance matrix. So all that is to say that we have closed forms for μ′ and Σ′.

And that's it. Once we have μ′, it's going to be a vector with three entries, because remember, now we're just getting the distribution of the number of fish at our unknown points down the river, of which there are three. Σ′ is going to be a 3 × 3 covariance matrix, with the variances of the number of fish you can expect at those three points on the diagonal and the covariances off the diagonal. We can just read those off, and if we do the math, we find that the distribution for the number of fish at 30 m down the river has a mean of around 14 fish with a standard deviation of 1.7. At 60 m down the river the mean is around 28.4, with a higher standard deviation. This is the main thing I wanted to point out, and maybe it's good to go back here for a second: 60, which is farther from all the data we know about, has, as we expected and as we wanted, a bigger standard deviation than the points 30 and 90, which both have a standard deviation of 1.7. That's how we get the distributions at 30, 60, and 90 m down the river, and of course we could do it for every single value in between as well.

At the end of the day, what we're going to trace out is something that looks like this. At the places we already know about, 20 m and 100 m down the river, we've observed the data, so there's no uncertainty there. I'll get back to that; you might be thinking there can be uncertainty there, and you're absolutely correct, but for our simple case we assume those values are known: I know that if I go 20 m down the river I'll get 10 fish, and if I go 100 m down the river I'll get 40 fish. Those are known, so they have no standard deviation. As we move away from those points, both to the right of 20 and to the left of 100, the standard deviation keeps increasing until you reach the point equidistant from both of them, where the standard deviation is highest. I'm expecting that if I go 60 m down the river I'll get about 28 fish, but it really could be plus or minus 4; I don't really know exactly what it's going to be. There's a good amount of uncertainty associated with that, and that's exactly what we get when we use this cool technique called Gaussian process regression instead of something like a simple linear regression, which just gives you a point estimate at each of these points along the way.

So the main takeaway for this video is that Gaussian process regression is a really powerful tool when you have a set of known data points and a set of unknown data points. The unknown points don't have to lie between the known ones; they can also be farther to the right or farther to the left. You estimate the distributions at the unknown points, assuming all these distributions are Gaussian, or normal, using the values at the known data points and filling out the covariance matrix using the power of kernels.

A couple of notes I'd leave you with here. One is that the kernel really does matter. Here we used a radial basis function kernel, but even within that you can set different values for σ and different values for ℓ, and you can also choose different kernels altogether. For example, on the lower right-hand side of this image you can see what happens if we choose a periodic kernel, which makes cyclical assumptions about which data points are related to which other data points, assumptions we did not make with the radial basis function kernel. These kernels can also be combined in all sorts of creative ways. Picking the kernel, and then, once you've picked a type of kernel, picking its hyperparameters, is one of the arts we didn't delve into much in this video, but it is very important and can really affect the distributions you get for your unknown data points.

Another thing I would say is that we did the easy version of Gaussian process regression, where for the data points we knew about, at 20 and 100 m, we assumed that if you went back to 20 m down the river and fished again for 100 days, you would always get 10 fish a day. Of course that isn't realistic: sometimes you'll get 10, sometimes 12, sometimes 5. This model can be extended so that we assume there is some uncertainty even at points we did observe, because that's how the real world works. These values aren't fixed truths; we may have data for these points, but that data is just one or two draws from a much wider distribution. Because we do have data about them, though, their uncertainty is going to be lower than at places where we don't have any data whatsoever. So this model can be extended to handle cases where there's some uncertainty even around the known data points.

The last thing I'll leave you with today is that this whole thing, even though I didn't frame it that way, is just an instance of Bayesian statistics. What do we do in Bayesian statistics? We start with some kind of prior, then we add some data, and as that data gets added, our posterior distributions keep changing as we add more and more data. How does that narrative fit this problem? In the absence of any data whatsoever, we would just have a prior: our mean would just be zero, and we'd pick some kind of standard deviation for all of our data points. Now assume that we observe some data at 20 m and 100 m down the river; that's our f(X). How do we update our posterior, which is f(Y) given f(X)? We do it using this mathematical framework: the assumption that everything is jointly normal, with a covariance matrix built from the kernel we used to fill it in, plus these known data points. This whole framework can be thought of as a Bayesian statistical framework, and we can just extend it.

For example, let's say you go 60 m down the river and find that you actually get something like 50 fish there. Now we would just update our posteriors for 30 and 90, the places we still don't know about. Let me use a different color here because it's getting a little messy. Now that we've observed known data points at 20, 60, and 100 m, our distributions might look very different: they might have a mean that looks kind of like this, with standard deviations like this in brown, and then a mean that comes down here with standard deviations like this. This is updated because now at 20, 60, and 100 m we have no uncertainty; we've observed the number of fish we would catch there. And at 30 and 90, our uncertainty shrinks, because if you look at 90, for example, we now have data just 10 m to one side and other data 30 m to the other side, which is much better than when we only had one data point, 10 m away to the right. So really I should have drawn these even narrower than the previous orange distributions, but I think you get the point of how this is all just an instance of Bayesian statistics: as you add more and more data, the known data gets added to your f(X), and the remaining f(Y)s get updated, so their distributions get different means and different standard deviations. So thank you so much for watching. Like and subscribe for more videos just like this, and I'll see all you wonderful people next time.
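To make the Bayesian-update story concrete, here is a self-contained NumPy sketch that reuses the same RBF kernel (σ = 10 and ℓ = 50 are our assumed values, not stated in the video), adds the hypothetical 50-fish observation at 60 m, and compares the standard deviations at 30 m and 90 m before and after. The `noise_var` argument is our illustration of the noisy-observation extension mentioned above: it adds a noise variance to the diagonal of the known-point block of the covariance, which is the usual way that extension is done. The value 4.0 is arbitrary.

```python
import numpy as np


def rbf_kernel(a, b, sigma=10.0, length=50.0):
    """RBF kernel for 1-D inputs; sigma=10 and length=50 are assumed values."""
    a = np.asarray(a, dtype=float)[:, None]
    b = np.asarray(b, dtype=float)[None, :]
    return sigma**2 * np.exp(-((a - b) ** 2) / (2.0 * length**2))


def gp_posterior(x_known, f_known, x_query, noise_var=0.0):
    """Posterior mean and standard deviation of f at x_query under a zero-mean GP.

    noise_var=0 treats the known values as exact (the "easy version");
    noise_var > 0 adds observation noise on the known points' diagonal.
    """
    k_xx = rbf_kernel(x_known, x_known) + noise_var * np.eye(len(x_known))
    k_qx = rbf_kernel(x_query, x_known)
    k_qq = rbf_kernel(x_query, x_query)
    mean = k_qx @ np.linalg.solve(k_xx, np.asarray(f_known, dtype=float))
    cov = k_qq - k_qx @ np.linalg.solve(k_xx, k_qx.T)
    # Clip tiny negative variances caused by floating-point round-off.
    std = np.sqrt(np.clip(np.diag(cov), 0.0, None))
    return mean, std


query = [30.0, 90.0]

# Before: only 20 m (10 fish) and 100 m (40 fish) have been observed.
mean_before, std_before = gp_posterior([20.0, 100.0], [10.0, 40.0], query)

# After: the hypothetical 50-fish day at 60 m is added to the known data.
mean_after, std_after = gp_posterior(
    [20.0, 60.0, 100.0], [10.0, 50.0, 40.0], query
)

# Noisy-observation variant: the observed point at 20 m keeps some uncertainty.
_, std_at_20 = gp_posterior([20.0, 100.0], [10.0, 40.0], [20.0], noise_var=4.0)

print("before: mean", np.round(mean_before, 1), "std", np.round(std_before, 2))
print("after:  mean", np.round(mean_after, 1), "std", np.round(std_after, 2))
print("std at 20 m with noise_var=4:", np.round(std_at_20, 2))
```

Because conditioning a Gaussian on additional data never increases its variance, the "after" standard deviations at 30 m and 90 m come out smaller than the "before" ones, which is the narrowing described above. With `noise_var=0` the standard deviation at an observed point such as 20 m is essentially zero; with a positive `noise_var` it stays above zero.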

Related Videos

7:57

Should You Scale Your Data ??? : Data Scie...

ritvikmath

11,951 views

42:24

Multiple Linear Regression By Hand (formul...

Stabelm

58,478 views

16:30

All Machine Learning algorithms explained ...

Infinite Codes

206,010 views

23:47

Gaussian Processes

Mutual Information

129,562 views

13:59

The Most Important Integral in Data Science

ritvikmath

6,214 views

17:36

The algorithm that (eventually) revolution...

Very Normal

74,610 views

25:52

Kernel Density Estimation : Data Science C...

ritvikmath

24,192 views

26:24

The Key Equation Behind Probability

Artem Kirsanov

121,605 views

29:32

I get confused trying to learn Gaussian Pr...

IntuitiveML

29,900 views

16:28

Bayesian Linear Regression : Data Science ...

ritvikmath

81,704 views

1:05:56

A tutorial on Bayesian optimization with G...

Pupusse LINCS

13,673 views

52:41

Machine Learning Lecture 26 "Gaussian Proc...

Kilian Weinberger

70,958 views

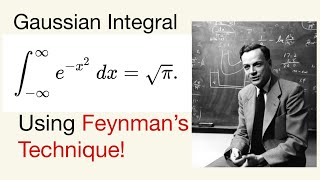

24:05

The Gaussian Integral is DESTROYED by Feyn...

Jago Alexander

80,767 views

24:08

EM Algorithm : Data Science Concepts

ritvikmath

71,597 views

15:57

Gaussian Process - Regression - Part 1 - K...

Meerkat Statistics

31,288 views

1:53:32

ML Tutorial: Gaussian Processes (Richard T...

Marc Deisenroth

135,000 views

20:30

What the Heck is Bayesian Stats ?? : Data ...

ritvikmath

66,902 views

20:18

Why Does Diffusion Work Better than Auto-R...

Algorithmic Simplicity

346,008 views

29:04

"A Random Variable is NOT Random and NOT a...

Dr Mihai Nica

21,932 views

50:53

Machine Learning Lecture 27 "Gaussian Proc...

Kilian Weinberger

29,491 views