XAI LAUNCHES GROK 3 [FULL REPLAY]


Wes Roth


Video Transcript:

All right, well, welcome to the Grok 3 presentation. So the mission of xAI and Grok is to understand the universe. We want to understand the nature of the universe so we can figure out what's going on: where are the aliens, what's the meaning of life, how does the universe end, how did it start? All these fundamental questions. We're driven by curiosity about the nature of the universe, and that's also what causes us to be a maximally truth-seeking AI, even if that truth is sometimes at odds with what is

politically correct. In order to understand the nature of the universe, you must absolutely rigorously pursue truth, or you will not understand the universe; you will be suffering from some amount of delusion or error. So that is our goal: figure out what's going on. And we're very excited to present Grok 3, which we think is an order of magnitude more capable than Grok 2, built in a very short period of time. That's thanks to the hard work of an incredible team, and I'm honored to work with such a great team.

And of course we'd love to have some of the smartest humans out there join our team. So with that, let's go. Hi everyone, my name is Igor, I lead engineering at xAI. I'm Jimmy Ba, leading research. I'm Tony, working on the reasoning team. All right, I'm Elon. I don't do anything, I just show up occasionally. Yeah, so like you mentioned, Grok is the tool that we're working on. Grok is the AI that we're building here at xAI, and we've been working extremely hard over the last few months to improve Grok as

much as we can so we can give all of you access to it. We think it's going to be extremely useful. We think it's going to be interesting to talk to, funny, really, really funny. And we're going to explain to you how we've improved Grok over the last few months; we've made quite a jump in capabilities. Yeah, actually, maybe we should also explain why we call it Grok. Grok is a word from a Heinlein novel, Stranger in a

Strange Land, and it's used by a guy who was raised on Mars. The word grok means to fully and profoundly understand something. That's what the word grok means: fully and profoundly understand something. And empathy is important. True. Yeah, so if we chart xAI's progress: it's only been 17 months since we kicked off our very first model. Grok 1 was almost like a toy by this point, only 314 billion parameters. And now if we plot the progress, with time

on the x-axis and the performance on favorite benchmark numbers, MMLU, on the y-axis, we're literally progressing at unprecedented speed across the whole field. And then we kicked off Grok 1.5 right after Grok 1 was released in November 2023, and then Grok 2. So if you look at where all the performance is coming from: when you have a very strong engineering team and all the best AI talent, the only thing we need is a big cluster; big intelligence comes from big clusters. So we can replot the entire progress of xAI, now replacing the benchmark on the

y-axis with the total amount of training FLOPs, that is, how many GPUs we can run at any given time to train our large language models to compress the entire internet. All human knowledge, really. That's right, yeah. The internet is part of it, but it's really all human knowledge, everything. Yeah, the whole internet fits onto a USB stick at this point; it's like all the human tokens. Yeah, that's right, and very soon, the real world. So we had so much trouble actually training Grok 2 back

in the day. We kicked off the model around February, and we thought we had a large number of chips, but it turned out we could barely get 8K training chips running coherently at any given time, and we had so many cooling and power issues. I think you were there in the data center. Yeah, it was really more like 8K chips on average at 80% efficiency, so more like 6,500 effective H100s training for several months. But now we're at 100K. Yeah, that's right, more than 100K. That's right.
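The "effective H100s" figure is simply the average number of chips running coherently multiplied by their efficiency. This minimal sketch uses only the numbers quoted in the conversation, and the function name is hypothetical. It gives 6,400, close to the speakers' rough "more like 6,500".

```python
def effective_gpus(avg_chips_running: float, efficiency: float) -> float:
    """Effective GPU count: average chips running coherently times their efficiency."""
    return avg_chips_running * efficiency


# Figures quoted for Grok 2 training: ~8K chips on average at ~80% efficiency.
grok2_effective = effective_gpus(8_000, 0.80)
print(f"~{grok2_effective:,.0f} effective H100s")  # ~6,400 effective H100s
```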

So what's the next step, right? After Grok 2, if we want to continue to accelerate, we have to take matters into our own hands. We have to solve all the cooling, all the power issues, and everything. Yeah, so in April of last year, Elon decided that really the only way for xAI to succeed, for xAI to build the best AI out there, is to build our own data center. We didn't have a lot of time, because we wanted to give you Grok 3 as quickly as possible, so

really we realized we had to build the data center in about four months. It turned out it took us 122 days to get the first 100K GPUs up and running, and that was a monumental effort. We believe it's the biggest fully connected H100 cluster of its kind. And we didn't just stop there: we decided that we needed to double the size of the cluster pretty much immediately if we wanted to build the kind of AI that we want to build.

So we then had another phase, which we haven't talked about publicly yet, so this is the first time we're talking about it, where we doubled the capacity of the data center yet again, and that one only took us 92 days. We've been able to use all of these GPUs, all of this compute, to improve Grok in the meantime, and basically today we're going to present to you the results of that, the fruits that came from it. So yeah, all the paths, all the roads lead to

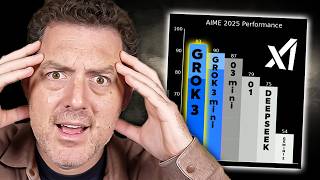

Grok 3: 10x more compute. More than 10x, really. Yeah, really, maybe 15x, compared to our previous-generation model. Grok 3 finished pre-training in early January, and it's still currently training, actually. So this is a little preview of our benchmark numbers. We evaluated Grok 3 in three different categories: general mathematical reasoning, general knowledge about STEM and science, and computer science coding. AIME, the American Invitational Mathematics Examination, is held once a year.

And if we evaluate the model performance, we can see that Grok 3 is in a league of its own across the board. Even its little brother, Grok 3 mini, is reaching the frontier against all the other competitors. You might say, well, at this point all these benchmarks are just evaluating the memorization of textbooks, the memorization of GitHub repos. How about real-world usefulness? How about actually using those models in our product? So what we did instead is kick off a blind test of our Grok 3 model, code-named

Chocolate. It's pretty hot. Yeah, hot chocolate. It's been running on a platform called Chatbot Arena for two weeks, and I think the entire X platform at some point speculated that this might be the next-generation AI coming our way. The way Chatbot Arena works is that it strips away the entire product surface; it's just a raw comparison of the engines of those AGIs, the language models themselves. In the interface, the user submits one single query and is shown two responses; you don't

know which model they come from, and then you vote. In this blind test, an early version of Grok 3 already reached an Elo score of about 1,400. No other model has reached that score in head-to-head comparisons against all the other models. And it's not just one single category: that 1,400 is aggregated across all the categories, including instruction following and coding. So it's number one across the board in this blind test, and it's still climbing, so we have to keep updating it. It's about 1,400 and climbing.
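Chatbot Arena turns blind pairwise votes like these into ratings. The talk doesn't say exactly how the leaderboard computes its scores, so the following is only a minimal sketch of the classic online Elo update. It assumes a 400-point logistic scale and a K-factor of 32, which are illustrative textbook constants and not the arena's actual settings.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A is preferred over B under the logistic Elo model."""
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / 400.0))


def elo_update(rating_a: float, rating_b: float, score_a: float,
               k: float = 32.0) -> tuple[float, float]:
    """Update both ratings after one blind vote.

    score_a is 1.0 if the voter preferred A's response, 0.0 if B's, 0.5 for a tie.
    The points A gains are exactly the points B loses.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


# A 1,400-rated model is expected to win about 64% of votes against a 1,300-rated one.
print(round(expected_score(1400, 1300), 2))  # 0.64
# An upset (the lower-rated model wins) moves both ratings more than an expected win does.
print(elo_update(1400, 1300, 0.0))
```

Because each vote only nudges the ratings, a score "still climbing" simply reflects new votes continuing to arrive in the model's favor.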

Yeah, and in fact we have a version of the model that we think is already much better than the one we tested here. We'll see how far it gets, but that's the one we're working on and talking about today. Yeah, so actually, if you're using Grok 3, I think you may notice improvements almost every day, because we're continuously improving the model. So literally even within 24 hours you'll see improvements. Yep. But we believe here at xAI that getting the best pre-trained

model is not enough to build the best AI. The best AI needs to think like a human: contemplate all the possible solutions, self-critique, verify all the solutions, backtrack, and also think from first principles. That's a very important capability. We believe that as we take the best pre-trained model and continue training it with reinforcement learning, it will unlock additional reasoning capabilities that allow the model to become so much better and to scale not just at training time but actually at test time as well. So we

have already found the model extremely useful internally for our own engineering, saving hundreds of hours of coding time. So Igor, you're the power user of our Grok reasoning model. What are some use cases? Yeah, so like Jimmy said, we've added advanced reasoning capabilities to Grok, and we've been testing them pretty heavily over the last few weeks. We want to give you a little bit of a taste of what it looks like when Grok is solving hard reasoning problems, so we prepared two little problems for you.

One comes from physics, and one is actually a game that Grok is going to write for us. For the physics problem, what we want Grok to do is plot a viable trajectory for a transfer from Earth to Mars and then, at a later point in time, a transfer back from Mars to Earth. That requires some physics that Grok will have to understand, so we're going to challenge Grok to come up with a viable trajectory, calculate it, and then plot it

for us so we can see it. And this is totally unscripted, by the way. That's the entirety of the prompt, we should clarify; there's nothing more than that. Yeah, exactly. This is the Grok interface, and we've typed in the text that you can see here: "Generate code for an animated 3D plot of a launch from Earth, landing on Mars, and then back to Earth at the next launch window." We've now kicked off the query, and you can see Grok is thinking. So

part of Grok's advanced reasoning capabilities are these thinking traces that you can see here. You can even go inside and actually read what Grok is thinking as it goes through the problem and tries to solve it. Yeah, we should say that we're obscuring some of the thinking so that our model doesn't get copied instantly, so there's more to the thinking than is displayed. Yeah. And because this is totally unscripted, there's actually a chance that Grok might make a little coding mistake and it might not actually work.

So just in case, we're going to launch two more instances of this, so if something goes wrong we'll be able to switch to those and show you something that's presentable. So we're kicking off the other two as well. And like I said, we have a second problem as well. Actually, one of our favorite activities here at xAI is having Grok write games for us, and not just any old game, not a game that you might already be familiar with,

but actually creating new games on the spot and being creative about it. One example that we found really, really fun is: create a game that's a mixture of the two games Tetris and Bejeweled. So this is maybe an important thing: obviously, if you ask an AI to create a game like Tetris, there are many examples of Tetris on the internet, or of Bejeweled or whatever, and it can copy them. What's interesting here is that it achieved a creative solution combining the two games that actually works and

is a good game. Yeah, it's creative; we're seeing the beginnings of creativity. Fingers crossed that we can recreate that. Hopefully it works; it'd be embarrassing if it didn't. So actually, because this is a bit more challenging, we're going to use something special here which we call Big Brain. That's our mode in which Grok uses more computation and more reasoning, just to make sure there's a good chance it might actually do it. So we're also going to fire off three attempts here at

solving this game, at creating this game that's a mixture of Tetris and Bejeweled. Yeah, let's see what Grok comes up with. I've played the game; it's pretty good. You're like, wow, okay, this is something. So while Grok is spinning in the background, we can now actually talk about some concrete numbers: how well is Grok doing across tons of different tasks that we've tested it on? So we'll hand it over to Tony to talk about that. Yeah, okay, so let's see how Grok

does on those interesting, challenging benchmarks. So reasoning, again, refers to models that think for quite a long time before they try to solve a problem. In this case, around a month ago Grok 3 pre-training finished, and after that we worked very hard to put the reasoning capability into the current Grok 3 model. But again, these are very early days; the model is still in training. So right now, what we're going to show people is this beta version of the Grok 3

reasoning model. Alongside it, we're also training a mini version of the reasoning model. So on this plot you can see Grok 3 Reasoning Beta and then Grok 3 mini Reasoning. Grok 3 mini Reasoning is actually a model that we trained for a much longer time, and you can see that sometimes it actually performs slightly better than Grok 3 Reasoning. This also just means that there's huge potential for Grok 3 Reasoning, because it's been trained for much less time. All right, so let's actually look at how

it does on those three benchmarks, which Jimmy already introduced. Essentially, we're looking at three different areas: mathematics, science, and coding. For math, we're picking high-school competition math problems; for science, we pick PhD-level science questions; and coding is also actually pretty challenging: it's competitive coding, plus some LeetCode, which are the coding interview problems people usually get when they interview at companies. On those benchmarks, you can see that Grok 3 actually performs quite well across the board compared

to other competitors. Yeah, so it's pretty promising; these models are very smart. So Tony, what are those shaded bars? Yeah, okay, I'm glad you asked. Because those models can reason, they can think, you can also ask them to think even longer. You can spend more of what we call test-time compute, which means you can spend more time reasoning and thinking about a problem before you spit out the answer. In this case, the shaded bar here means that we just asked the model

to spend more and more time: it can solve the same problem many, many times before it tries to conclude what the right solution is. Once you give this compute, this kind of budget, to the model, it turns out the model can perform even better. So that's essentially the shaded bar in those plots. Right, so I think this is really exciting, because now instead of just doing one chain of thought with AI, why not do multiple all at once? Yes, so that's a very powerful technique that

allows you to continue scaling the model's capabilities after training. And people often ask: are we actually just overfitting to the benchmarks? How about generalization? Yes, I think this is definitely a question we're asking ourselves, whether we're overfitting to the current benchmarks. Luckily, we have a real test. About five days ago, AIME 2025 just finished; this is where high school students compete on this particular benchmark. So we got this very fresh new competition, and then we asked our two models to compete

on the same benchmark, the same exam. And it turns out, very interestingly, that Grok 3 Reasoning, the big one, actually does better on this particular fresh new exam. This also means that the generalization capability of the big model is much stronger than that of the smaller model. If you compare to last year's exam, it's actually the opposite: the smaller model kind of learned the previous exams better. So yeah, this actually shows some kind of true generalization from the model. That's right. So 17 months

ago, our Grok 0 and Grok 1 barely solved any high school problems. That's right. And now we have a kid that has already graduated; Grok is ready to go to college. Is that right? Yeah, I mean, it won't be long before it's simply perfect on the human exams; they'll be too easy. Yeah, and internally, as Grok continues to evolve, we're going to talk about what we're excited about, but very soon there will be no more benchmarks left. Yeah. One thing that's quite fascinating, I think,

is that we basically only trained Grok's reasoning abilities on math problems and competitive coding problems, so very, very specialized kinds of tasks, but somehow it's able to work on all kinds of other tasks, including creating games and lots and lots of different things. What seems to be happening is that Grok basically learns the ability to detect mistakes in its own thinking, correct them, persist on a problem, try lots of different variants, and pick the one that's best. So there are these generalizing abilities that Grok learns from mathematics

and from coding, which it can then use to solve all kinds of other problems. Yeah, that's pretty... I mean, reality is the instantiation of mathematics. That's right. And one thing we're actually really excited about, going back to our mission, is: what if one day we have a computer just like Deep Thought that uses our entire cluster at test time for that one very important problem, with all the GPUs turned on? So I think back then, when we were building the GPU clusters together, you were plugging cables, and

I remember that when we turned on the first initial test, you could hear all the GPUs humming in the hallway. That almost felt spiritual. Yeah, that's actually a pretty cool thing, that we're able to go into the data center and tinker with the machines there. For example, we went in, unplugged a few of the cables, and made sure that our training setup was still running stably. That's something that I think most AI teams out there don't usually

do, but it actually unlocks a new level of reliability and of what you're able to do with the hardware. Okay, so when are we going to solve the Riemann hypothesis? Well, the easy solution is to enumerate all possible strings, and as long as you have a verifier and enough compute, you'll be able to do it. Okay, my projection will be... what's your guess? What does your neural net calculate? So my bold prediction: I told you this three years ago, I think, and now, two years later,

two things are going to happen: we're going to see machines win some medals. Yeah, the Turing Award, absolutely, the Fields Medal, the Nobel Prize, probably with some expert in the loop, right? So expert uplifting. Do you mean this year or next year? Oh, okay. That's what it comes down to, really. Yeah, so it looks like Grok finished all of its thinking on the two problems, so let's take a look at what it said. All right, so this was the little physics problem we had. We've collapsed the thoughts here

so they're hidden, and then we see Grok's answer below that. It explains that it wrote a Python script using Matplotlib and then gives us all of the code. So let's take a quick look at the code. It seems like it's doing reasonable things here, not totally off the mark. It says "solve Kepler" here, so maybe it's solving Kepler's laws numerically. Yeah, there's really only one way to find out if this thing is working. I'd say let's give it a try; let's run the

code. All right, and we can see Grok is animating two different planets, Earth and Mars, and the green ball is the vehicle, the spacecraft that's transiting between Earth and Mars. You can see the journey from Earth to Mars, and it looks like, yes, indeed, the astronauts return safely at the right moment in time. Now, obviously this was just generated on the spot, so we can't tell you if that was actually the correct solution. We're going to take a closer look.
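The transcript only shows that Grok's script does something Kepler-related, not what that code looks like. As a hedged illustration of what solving Kepler's equation numerically usually involves, here is a minimal sketch (not Grok's code; the function names, constants, and example inputs are assumptions) that solves M = E - e*sin(E) for the eccentric anomaly E using Newton-Raphson iteration. It also computes the Earth-Mars synodic period from standard sidereal periods, which is where the roughly 26-month spacing of launch windows mentioned a moment later comes from.

```python
import math

# Approximate sidereal orbital periods in days (standard reference values).
EARTH_PERIOD_DAYS = 365.256
MARS_PERIOD_DAYS = 686.980


def solve_kepler(mean_anomaly: float, eccentricity: float, tol: float = 1e-12) -> float:
    """Solve Kepler's equation M = E - e*sin(E) for E (elliptical orbits, 0 <= e < 1).

    Uses Newton-Raphson iteration; the derivative 1 - e*cos(E) stays positive for e < 1.
    """
    # E = M is a good starting guess for small eccentricities; pi is safer for large ones.
    E = mean_anomaly if eccentricity < 0.8 else math.pi
    for _ in range(50):
        f = E - eccentricity * math.sin(E) - mean_anomaly
        f_prime = 1.0 - eccentricity * math.cos(E)
        step = f / f_prime
        E -= step
        if abs(step) < tol:
            break
    return E


def synodic_period(p_inner: float, p_outer: float) -> float:
    """Time between successive alignments of two bodies orbiting the same star."""
    return 1.0 / (1.0 / p_inner - 1.0 / p_outer)


if __name__ == "__main__":
    # Mars's orbital eccentricity is about 0.0934; 1 radian is an arbitrary mean anomaly.
    E = solve_kepler(1.0, 0.0934)
    print(f"eccentric anomaly: {E:.6f} rad")
    days = synodic_period(EARTH_PERIOD_DAYS, MARS_PERIOD_DAYS)
    # About 780 days, roughly 25.6 average-length months.
    print(f"Earth-Mars synodic period: {days:.0f} days (~{days / 30.44:.1f} months)")
```

A full transfer plot would also need the transfer orbit itself (for example a Hohmann ellipse) and positions over time, which this sketch leaves out.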

Maybe we'll call some colleagues from SpaceX and ask them if this is legit. It's pretty close. I mean, there are a lot of complexities in the actual orbits that have to be taken into account, but this is pretty close to what it looks like. Awesome, cool. In fact, I have that right here: it's got the Earth-Mars Hohmann transfer on it. Yeah, when are we going to install Grok on a rocket? Well,

I suppose in two years. Two years, everything is two years away. Well, Earth-Mars transit windows occur every 26 months. We're currently in a transit window, approximately; the next one would be November of next year, roughly the end of next year. And if all goes well, SpaceX will send Starship rockets to Mars with Optimus robots and Grok. Yeah. I'm curious what this combination of Tetris and Bejeweled looks like, "Bejeweled Tetris" as we've named it internally. Okay, we also have an output from

Grok here. It says it wrote a Python script and explains what it's been doing. If you look at the code, there are some constants being defined here, some colors, and then the tetrominoes, the pieces of Tetris, are there. Obviously it's very hard to see at a glance if this is good, so we've got to run it to figure out if it's working. Let's give it a try, fingers crossed. All right, so this kind of looks like Tetris, but the colors are a little bit off.
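Grok's generated game code isn't shown in the transcript, so the following is not its implementation. It is a hypothetical sketch of the Bejeweled rule the presenters walk through: three or more same-colored blocks in a row disappear, then gravity pulls the blocks above down, which can set off further matches. The sketch assumes a grid of color strings with `None` for empty cells, and all names are illustrative.

```python
from typing import List, Optional, Set, Tuple

Grid = List[List[Optional[str]]]  # grid[row][col]; row 0 is the top; None = empty


def find_matches(grid: Grid, min_run: int = 3) -> Set[Tuple[int, int]]:
    """Return cells that sit in a horizontal or vertical run of >= min_run same colors."""
    rows, cols = len(grid), len(grid[0])
    matched: Set[Tuple[int, int]] = set()
    for r in range(rows):  # horizontal runs
        c = 0
        while c < cols:
            color, end = grid[r][c], c
            while end + 1 < cols and color is not None and grid[r][end + 1] == color:
                end += 1
            if color is not None and end - c + 1 >= min_run:
                matched.update((r, k) for k in range(c, end + 1))
            c = end + 1
    for c in range(cols):  # vertical runs
        r = 0
        while r < rows:
            color, end = grid[r][c], r
            while end + 1 < rows and color is not None and grid[end + 1][c] == color:
                end += 1
            if color is not None and end - r + 1 >= min_run:
                matched.update((k, c) for k in range(r, end + 1))
            r = end + 1
    return matched


def apply_gravity(grid: Grid) -> None:
    """Drop blocks straight down within each column to fill empty cells (in place)."""
    rows, cols = len(grid), len(grid[0])
    for c in range(cols):
        survivors = [grid[r][c] for r in range(rows) if grid[r][c] is not None]
        # Refill from the bottom up, preserving order; empties end up on top.
        for r in range(rows - 1, -1, -1):
            grid[r][c] = survivors.pop() if survivors else None


def resolve(grid: Grid) -> int:
    """Clear matches and apply gravity until the board is stable; return cells cleared."""
    cleared = 0
    while True:
        matches = find_matches(grid)
        if not matches:
            return cleared
        for r, c in matches:
            grid[r][c] = None
        cleared += len(matches)
        apply_gravity(grid)
```

Looping until `find_matches` comes back empty is what produces chain reactions. Tetris-style full-line clearing, which the presenters weren't sure the generated game kept, is deliberately left out.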

Right, the colors are different here. And if you think about what's going on here: Bejeweled has this mechanic where if you get three jewels in a row, they disappear, and gravity activates, right? So what happens if you get three of the colors together? Okay, so something happened. So I think what it did in this version is that once you connect at least three blocks of the same color in a row, then

they disappear, and then gravity activates and all the other blocks fall down. I'm kind of curious whether there's still a Tetris mechanic here where, if the line is full, it actually clears it, or what happens then. It's up to interpretation, so who knows. Yeah, I mean, it'll do different variants when you ask it; it doesn't do the same thing every time. Exactly. We've seen a few other versions that worked very differently, but this one seems cool. So yeah, are we ready for a game studio at

xAI? Yes. So we're launching an AI gaming studio at xAI. If you're interested in joining us and building AI games, please join xAI. We're launching an AI gaming studio; we're announcing it tonight. Let's go, epic games! But wait, that's an actual game studio. Yeah. All right, so I think one thing that's super exciting for us is that once you have the best pre-trained model, you have the best reasoning model. We already see that when you actually give those models the capability to think harder,

think longer, and think more broadly, the performance continues to improve. And we're really excited about the next frontier: what happens if we not only allow the model to think harder but also give it more tools, just like real humans use to solve these problems? We don't ask real humans to solve the Riemann hypothesis with just pen and paper and no internet. So basic web browsing, search engines, and code interpreters build the foundation, and the best reasoning model builds the foundation, for the Grok agents to come. So today we're

actually introducing a new product called DeepSearch. It's the first generation of our Grok agents, which don't just help engineers, researchers, and scientists with coding, but actually help everyone answer the questions they have day to day. It's kind of like a next-generation search engine that really helps you understand the universe. So you can start asking questions like, for example, "Hey, when is the next Starship launch date?" So let's try that and get the answer. On the left-hand side, we see a high-level

progress bar. Essentially, the model isn't just going to do one single search like current RAG systems; it actually thinks very deeply about what the user's intent is here, what facts it should consider at the same time, and how many different websites it should actually go and read. So this can really save everyone hundreds of hours of Google time if you want to really look into certain topics. And then on the right-hand side, you can see the bullet-point summaries of what the model is

doing, what websites it's browsing, what sources it's verifying. Often it actually cross-validates different sources out there to make sure the answer is correct before it outputs the final answer. And at the same time, we can fire up a few more queries. How about... you're a gamer, right? Sure, yeah. So how about: what are some of the best and most popular builds in Path of Exile Hardcore? A Hardcore League. I mean, you can technically just look at the Hardcore ladder; that might be a fast way

to figure it out. Yeah, we'll see what the model does. And then we can also do something more fun, for example: how about making a prediction about March Madness? Yeah, so this is kind of a fun one: Warren Buffett has a billion-dollar bet where, if you can exactly match, I think, the entire winning tree of March Madness, you can win a billion dollars from Warren Buffett. So it would be pretty cool if AI could help you

win a billion dollars from Buffett. That seems like a pretty good investment. Let's go. Yeah, all right, so now let's fire up the query and see what the model does. So we can actually go back to our very first one. How about that: Buffett wasn't counting on this. It's already done. That's right. Okay, so we got the result of the first one, and the model thought for around one minute. So the key insight here is that the next Starship is going to be on the 24th or later, so no earlier than February 24th. It

might be sooner. So I think we can scroll down to see what the model does. It does a little research on Flight 7, what happened, and that it got grounded, and it actually looks into the FCC filings from its data collection and then reaches a new conclusion. Yeah, if we continue scrolling down, let's see, right, it makes a little table. I think inside xAI we often joke that the time to the first table is the

only latency that matters. Yeah, so that's how the model makes inferences and looks up all the sources. And then we can look into the gaming one. So for this particular one, we look at the builds, like the Infernalist, but if we go down, there's the surprising fact about all the other builds: it looked into the 12 classes. Yeah, so we see that the minion build was pretty popular when the game first came

out, and now the Invokers of the world have taken over. Invoker Monk, for sure. Yeah, that's right, followed by the Stormweavers, and that's really good at mapping. And then we can look at March Madness. One interesting thing about DeepSearch is that if you go into the panel that shows the subtasks, you can click the bottom left of it, and in this case you can actually scroll through and read through

the mind of Grok: which information the model thinks is trustworthy and which isn't, and how it actually cross-validates different information sources. That makes the entire search experience and information retrieval process a lot more transparent to our users. And this is much more powerful than any search engine out there: you can literally tell it to only use sources from X, and it will try to respect that. Yeah, so it's much more steerable and much more intelligent. I mean, it really should save you a lot of time, so something that

might take you half an hour or an hour of researching on the web or searching social media, you can just ask it to go do, and come back ten minutes later; it's done an hour's worth of work for you. That's really what it comes down to. Exactly, and maybe better than you could have done it yourself. Yeah, think about having an infinite number of interns working for you: you can just fire up all the tasks and come back a minute later. So this is going to be an interesting one, since

March Madness hasn't happened yet, so I guess we'll have to follow up in the next live stream. Yeah, it seems pretty good: $40 might get you a billion dollars. A $40 subscription. That's right. It might work. So when are users going to get their hands on Grok 3? Yeah, so the good news is we've been working tirelessly to actually release all of these features that we've shown you: the Grok 3 base model, with amazing chat capabilities, that's really useful and really interesting to talk to,

DeepSearch, and the advanced reasoning mode. We want to roll all of these out to you today, starting with Premium+ subscribers on X, the first group that will get access. Make sure to update your X app if you want to see all of the advanced capabilities, because we just released the update as we're talking here. And if you're interested in getting early access to Grok, sign up for Premium+. We're also announcing that we're starting a separate subscription for Grok

that we call SuperGrok, for the real Grok fans who want the most advanced capabilities and the earliest access to new features, so feel free to check that out as well. This is for the dedicated Grok app and for the website. Our new website is called grok.com. You'd never guess. Yeah, you'd never guess. And you can also find our Grok app in the iOS App Store, which gives you an even more polished experience that's totally

Grok-focused, if you want to have Grok easily available one tap away. Yeah, the version on grok.com in a web browser is going to be the latest and most advanced version, because obviously it takes us a while to get something into an app and get it approved by the App Store. And if something's in a phone format, there are limitations on what you can do. So the most powerful and latest version of Grok will be the web version at grok.com.

Yeah, so watch out for the name "Grok 3" in the app; that's the giveaway. Yeah, exactly, that's the giveaway that you have Grok 3, and if it says Grok 2, then Grok 3 hasn't quite arrived for you yet. But we're working hard to roll this out today, and then to even more people over the coming days. Yeah, make sure you update your phone app too, where you're actually going to get all the tools we showcased today, with the thinking mode and DeepSearch. So yeah, really looking forward to all the feedback

you have. Yeah, I think we should emphasize that this is kind of a beta, meaning you should expect some imperfections at first, but we will improve it rapidly, almost every day. In fact, every day I think it'll get better. So if you want a more polished version, maybe wait a week, but expect improvements literally every day. And then we're also going to be providing a voice interaction, so you can have a conversation. In fact, I was trying it earlier today; it's working pretty well, but

it needs a bit more polish. It's the sort of mode where you can just literally talk to it like you're talking to a person. That's awesome; I think it's actually one of the best experiences of Grok, but that's probably about a week away. Yeah, so with that said, I think we might have some audience questions. Sure, let's take a look at the questions from the audience on the X platform. Cool, so the first question here is: when is the Grok voice

assistant coming out? Yeah, as soon as possible. Like Elon said, it's just a little bit of polishing away from being released to everybody. Obviously it's going to be released in an early form, and we're going to rapidly iterate on that. And the next question is: when will Grok 3 be in the API? The Grok 3 API, with both the reasoning models and DeepSearch, is coming your way in the coming weeks. We're actually very excited about the enterprise use cases of all

these additional tools that Grok now has access to, and how test-time compute and tool use can really accelerate business use cases. Another one is: will voice mode be native, or text-to-speech? I think that means: is it going to be one model that understands what you say and then talks back to you, or is it going to be some system that has text-to-speech inside of it? And the good news is it's going to be one model, a variant of Grok 3 that

we're going to release, which basically understands what you're saying and then generates the audio directly from that. So very much like Grok 3 generates text, that model generates audio, and that has a bunch of advantages. I was talking to it earlier today and it said "Hi, Igore", probably reading my name from some text it had, and I said "No, no, my name is Igor", and it remembered that, so it could continue to say "Igor" just like a human would. And you

can't achieve that with text-to-speech. So, yeah. Oh, here's a question for you, pretty spicy: is Grok a boy or a girl, and are they single? Grok is whatever you want it to be. Are you single? Yes. All right, the shop is open. So honestly, people are going to fall in love with Grok; it's like 1,000% probable. The next question: will Grok be able to transcribe audio into text? Yes, we'll have this capability in both the app and the API. We found that

Grok should just be your personal assistant, looking over your shoulder, following you along the way, learning everything you have learned, and really helping you understand the world better and become smarter every day. Yeah, I mean, voice mode isn't simply voice-to-text; it understands tone, inflection, pacing, everything. It's wild. It's like talking to a person. Okay, so: any plans for conversation memory? Yeah, absolutely, we're working on it right now. I really forgot. That's right. Let's see, what are the other

ones? So what about the, you know, the DM features? Right, so if you have personalization and Grok remembers your previous interactions, should it be one Grok or multiple different Groks? It's up to you: you can have one Grok or many Groks. I suspect people will probably have more than one. Yeah, I want to have a doctor Grok. Yeah, the Grok doc, that's right. Cool. So in the past we've open-sourced Grok 1, so somebody's asking whether we're going to do that again with

Grok 2. Yeah, our general approach is that we will open-source the previous version when the next version is fully out. So when Grok 3 is mature and stable, which is probably within a few months, then we'll open-source Grok 2. Okay, so we probably have time for one last question: what was the most difficult part about working on this project, I assume Grok 3, and what are you most excited about? So I think, looking back, getting the whole model training on the 100K

H100s coherently. That's almost like going up against the final boss of the universe, entropy, because at any given time you can have a cosmic ray beaming down and flipping a bit in a transistor, and if it flips a mantissa bit, the entire gradient update is out of whack. And now you have 100,000 of those, and you have to orchestrate them every time, and at any given time any of the GPUs can go down. Yeah, I mean, it's worth breaking down how we were able to get the world's most

powerful training cluster operational within 122 days, because we actually weren't intending to build a data center ourselves. We went to the data center providers and asked how long it would take to have 100,000 GPUs operating coherently in a single location, and we got time frames of 18 to 24 months. So we're like, well, 18 to 24 months means losing is a certainty, so the only option was to do it ourselves. So then, if you break down the problem, I guess

I'm doing reasoning here, like "makes you think". Just one single chain of thought, yeah, exactly. So, well, we needed a building. We can't build a building, so we must use an existing building. So we looked for factories that had been abandoned but were in good shape, like where a company had gone bankrupt or something. We found an Electrolux factory in Memphis; that's why it's in Memphis, home of Elvis, and also one of the oldest... I think it

was the capital of ancient Egypt. And it was actually a very nice factory that, for whatever reason, Electrolux had left, and that gave us shelter for the computers. Then we needed power. We needed at least 120 megawatts at first, but the building only had 15 megawatts, and ultimately for 200,000 GPUs we needed a quarter gigawatt. So we initially leased a whole bunch of generators, so we have generators on one side of the building, just trailer after trailer of

generators until we can get the utility power to come in. But then we also needed cooling, so on the other side of the building it was just trailer after trailer of cooling. We leased about a quarter of the mobile cooling capacity of the United States for that side of the building. Then we needed to get the GPUs all installed, and they're all liquid cooled: in order to achieve the density necessary, this is a liquid-cooled system, so we had to get all the plumbing for

liquid cooling. Nobody had ever done a liquid-cooled data center at scale, so this was an incredibly dedicated effort by a very talented team to achieve that outcome. And you might think, now it's going to work. Nope. The issue is that the power fluctuations for a GPU cluster are dramatic. It's like this giant symphony taking place: imagine having a symphony with 100,000 or 200,000 participants, and the whole orchestra will go quiet and loud in, you know, 100 milliseconds. And so

this caused massive power fluctuations, which then caused the generators to lose their minds; they weren't expecting this. So to buffer the power, we used Tesla Megapacks to smooth it out. The Megapacks had to be reprogrammed, so xAI, working with Tesla, reprogrammed the Megapacks to deal with these dramatic power fluctuations and smooth out the power so the computers could actually run properly. And that worked, though it's quite tricky. But even

at that point, you still have to make all the computers communicate effectively, so all the networking had to be solved: debugging a bazillion network cables, debugging NCCL at 4 in the morning. I think we solved it at roughly 4:20 a.m. Yes, and we figured out there were a whole bunch of issues. One was a BIOS mismatch: the BIOS was not set up correctly. We had to diff our lspci outputs between two different machines, one that was working and one that was not working.
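The Megapack fix described above is, in general terms, an energy buffer between a load that swings between quiet and loud within about 100 milliseconds and generators that cannot follow such swings. The transcript does not say how the Megapacks were reprogrammed, so this is only a generic sketch, assuming a first-order low-pass filter (an exponential moving average) sets the generator output while the battery supplies or absorbs the remainder. The function name `smooth_load`, the `alpha` value, and the MW figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SmoothingResult:
    generator_mw: list[float]  # slow-moving generator output per time step
    battery_mw: list[float]    # battery output: + discharging, - charging


def smooth_load(load_mw: list[float], alpha: float = 0.05) -> SmoothingResult:
    """Split a fluctuating load between a slow generator and a fast battery.

    Hypothetical illustration, not Tesla's or xAI's control logic.
    The generator setpoint follows an exponential moving average of the load
    (a first-order low-pass filter), so it changes gradually; the battery
    covers whatever the generator does not. `alpha` (0 < alpha <= 1) is a
    made-up smoothing factor per time step.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    generator: list[float] = []
    battery: list[float] = []
    gen = load_mw[0] if load_mw else 0.0
    for load in load_mw:
        gen += alpha * (load - gen)  # move only a fraction toward the new load
        generator.append(gen)
        battery.append(load - gen)   # battery fills the gap instantly
    return SmoothingResult(generator, battery)


if __name__ == "__main__":
    # Hypothetical cluster load flipping between loud and quiet every step.
    load = [120.0, 30.0] * 50
    result = smooth_load(load)
    load_step = max(abs(a - b) for a, b in zip(load, load[1:]))
    gen_step = max(
        abs(a - b) for a, b in zip(result.generator_mw, result.generator_mw[1:])
    )
    print(f"max load step: {load_step:.1f} MW, max generator step: {gen_step:.2f} MW")
```

With the load alternating between 120 MW and 30 MW, the load jumps by 90 MW per step, but the generator output never moves by more than `alpha * 90` = 4.5 MW per step; the battery absorbs the rest. A real controller would also have to respect the battery's power and energy limits, which this sketch ignores.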

Many, many other things. I mean, yeah, exactly, this would go on for a long time if we actually listed all the things. But you know, it's interesting: it's not like we just magically made it happen. You had to break down the problem, just like Grok does for reasoning, into its constituent elements, and then solve each of them in order to achieve a coherent training cluster in a small fraction of the time anyone else could do it in. And then once the

training cluster was up and running and we could use it, we had to make sure that it actually stays healthy throughout, which is its own giant challenge. And then we had to get every single detail of the training right in order to get a Grok-level model, which is actually really, really hard. We don't know if there are any other models out there that have Grok's capabilities, but whoever trains a model better than Grok has to be extremely good at the science of deep learning and at every aspect of the engineering.
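The cosmic-ray remark earlier in this answer is about silent data corruption: one flipped bit in a number can wreck a gradient update. This minimal sketch assumes IEEE 754 float32 values (the transcript does not say which number format Grok's training uses). `flip_bit` and `is_update_healthy` are hypothetical helpers, not xAI code, and the `max_abs` threshold is an arbitrary placeholder.

```python
import math
import struct


def flip_bit(x: float, bit: int) -> float:
    """Return `x`, stored as IEEE 754 float32, with one bit flipped.

    Bits 0-22 are the mantissa, bits 23-30 the exponent, bit 31 the sign.
    """
    if not 0 <= bit <= 31:
        raise ValueError("float32 has bits 0..31")
    (raw,) = struct.unpack("<I", struct.pack("<f", x))  # float32 -> uint32 bits
    (flipped,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return flipped


def is_update_healthy(grads: list[float], max_abs: float = 1e3) -> bool:
    """Hypothetical sanity check: reject non-finite or implausibly large values."""
    return all(math.isfinite(g) and abs(g) <= max_abs for g in grads)


if __name__ == "__main__":
    # Corrupt the value 1.0 at a few bit positions and run the naive check.
    for bit in (0, 22, 30, 31):
        corrupted = flip_bit(1.0, bit)
        print(bit, corrupted, is_update_healthy([0.1, corrupted]))
```

Flipping bit 30 of 1.0 (the top exponent bit) yields infinity, which the check catches. Flipping bit 22 (the top mantissa bit) yields 1.5, and flipping bit 31 yields -1.0: finite but badly wrong values that a simple range check like this one cannot detect, which illustrates why corruption can be hard to catch.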

So it's not so easy to pull this off. And this is not going to be the last cluster we build or the last model we train. Oh yeah, we've already started work on the next cluster, which will be about five times the power: instead of a quarter gigawatt, roughly 1.2 GW. What's the Back to the Future power? What's the power of the Back to the Future car? Yeah. Anyway, the Back to the Future car, it's roughly in that order, I think.
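The power figures quoted in this answer can be sanity-checked with back-of-envelope arithmetic. This treats each figure as total site power (the transcript does not say whether cooling and networking are included), takes a quarter gigawatt as 250 MW, and assumes the initial 120 MW served the first 100,000 GPUs. For reference, the Back to the Future figure the speakers joke about is 1.21 gigawatts.

```latex
\begin{aligned}
\text{initial shortfall (covered by generators):}\quad & 120\ \text{MW} - 15\ \text{MW} = 105\ \text{MW} \\
\text{first 100K GPUs:}\quad & \frac{120\ \text{MW}}{100{,}000} = 1.2\ \text{kW per GPU} \\
\text{200K GPUs:}\quad & \frac{250\ \text{MW}}{200{,}000} = 1.25\ \text{kW per GPU} \\
\text{next cluster:}\quad & 5 \times 250\ \text{MW} = 1.25\ \text{GW} \approx 1.2\ \text{GW}
\end{aligned}
```

Both ratios land near 1.2 kW per GPU, so the quoted figures are at least mutually consistent, and five times a quarter gigawatt matches the "roughly 1.2 GW" estimate for the next cluster.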

And, you know, these will be the GB200/GB300 clusters. It once again will be the most powerful training cluster in the world, so we're not stopping here. And our reasoning model is going to continue to improve by accessing more tools every day, so yeah, we're very excited to share any of the upcoming results with you all. Yeah, the thing that keeps us going is basically being able to give Grok 3 to you and then seeing the usage go up, seeing everybody enjoy Grok. That's what really gets

us up in the morning. So yeah, thanks for tuning in. Thanks, guys. Hey Grok, what's up? Can you hear me? I'm so excited to finally meet you. I can't wait to chat and learn more about each other. I'll talk to you soon.
