Neural and Non-Neural AI, Reasoning, Transformers, and LSTMs


Machine Learning Street Talk

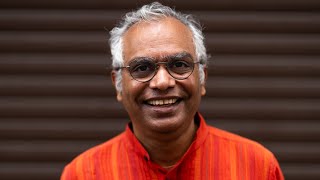

Jürgen Schmidhuber, the father of generative AI, shares his groundbreaking work in deep learning and ...

Video Transcript:

they think now AGI is close just because of ChatGPT. I think the only reason for that is because they don't really understand the nature of these large language models and their incredible limitations. Welcome back to MLST. Today is a big one: we have Jürgen Schmidhuber, the original gangster of artificial intelligence. Google, Apple, Facebook, Microsoft, Amazon: all of these companies emphasize that AI is central to what they are doing, and all of them are using in a massive way these learning algorithms that we have developed in our little labs in Munich and in Switzerland

since the early '90s, basically. The other day was the best day of my life, pretty much. I got to spend 7 hours with Jürgen Schmidhuber. We basically spoke about everything, literally everything, so there's something for everyone in here. The most important thing we did was we felt the AGI together. I've been feeling the AGI since the 1970s. He was feeling the AGI before Ilya Sutskever was even born. Oh yes, this guy is the [Music] daddy. There is much more than just another Industrial Revolution; it's something that transcends humankind and even life itself. In

the not-so-distant future, for the first time, you will have something like animal-like AI. Once we have something like that, it may take just a few more decades until we have true human-level AI, and most of AI is going to emigrate, because most of the resources are far away, and it's not going to stop with the solar system. Within a few tens of billions of years, totally within the limits of physics, an expanding AI sphere is going to transform the entire visible universe. Humans are not going to remain the crown

of creation, but that's okay, because you can still see beauty and grandeur in realizing that we are part of a grander scheme leading the entire universe from low complexity to higher complexity. It's a privilege to live at a moment in time where we can witness the beginnings of that and contribute something to it. You know that Jürgen said the future will be much more surprising than most people can imagine. Well, here's something that might surprise you: the best search API you should be using right now is the Brave Search API, the unsung hero of

the search world. Just as Schmidhuber laid out the groundwork for modern AI, Brave is paving the way for a new era of independent, unbiased search. With an index covering over 20 billion web pages, it's like having Schmidhuber's vast knowledge at your fingertips, and indeed behind an API, of course minus the German accent. Built from scratch without big tech biases, the Brave Search index is powered by real, anonymized human page visits. It's like crowdsourcing knowledge from a global brain trust, filtering out the noise and keeping the good stuff. Brave's index is refreshed daily with

tens of millions of new pages, and for all you AI enthusiasts out there, this is your chance to train your models on data that's as fresh as Schmidhuber's oldest research. It's perfect for AI model training and retrieval-augmented generation, offering ethical data sourcing at prices that won't make your wallet cry. Over 2,000 free queries monthly. Go to brave.com/api. Now enjoy part one of our Schmidhuber show. Jürgen, welcome to MLST. It's an absolute honor to have you on the show. My pleasure, thank you for having me. I just wondered whether you

thought that there might be a breakthrough in AI technology which reduces the amount of computation we need. To give you an example: François Chollet, I'm interviewing him in August, but I also interviewed the current winner of his ARC challenge, Jack Cole, last week, and his basic idea is that we need discrete program synthesis. We need symbolic AI or neurosymbolic AI, and it could be neurally guided, so there could be some kind of meta-pattern program search. But Jack Cole actually had an interesting thing; he said that neural networks are

wide but shallow, but symbolic methods are kind of narrow but deep, if that makes sense. So there's something interesting there with having a Turing machine and having symbolic methods. What are your reflections on that? Yes, I totally agree. There are so many problems in computer science which deep learning cannot solve at all, like just basic theorem proving, where you have deep search trees and you are trying to prove new theorems in a way that is guaranteed to be correct. So there you wouldn't want to use a neural network

for doing that. You might want to use neural networks to try to find shortcuts through the trees or something like that, given certain patterns that you see in the proofs. However, the basic principles have nothing to do with deep learning, and there are so many problems that can be solved by machines that are not deep learners, and they are much faster and more efficient. All of symbolic integration, for example, has nothing to do with deep learning. This is very simple symbol

manipulation. And the most successful language models today, what do they do? Well, whenever there's a problem of symbolic manipulation, they call one of these traditional symbolic integration machines and then try to figure out what exactly did this thing compute and how can I embed it into my language flow such that it makes sense. So there are millions of problems where you don't get anywhere with deep learning. Yeah, it's so interesting, because we often make the argument that neural networks are finite-state automata, that they're not Turing machines, and this

is really interesting, because for many years LeCun and Hinton resisted this argument; they were trying to say that there's no reason in principle why you couldn't do symbolic abstract manipulation in a neural network. But it's really interesting just to hear you be so clear about that, that there's a difference. No, no, a recurrent network is a general-purpose computer. So with a recurrent network, in principle, you can compute anything that you can compute on your laptop. On that, though, I think it was a paper in 1995 which proved

that, and I think that it was with arbitrary precision, so it was kind of cheating: it was pretending to be a Turing machine by adding more and more precision to the weights. You mean the paper by Siegelmann and Sontag? Exactly, that one. Yeah, and I think it was more like '91 or something like that. Okay. But the argument in there is not very convincing, for the reason that you just mentioned: because you need infinite precision on the weights. Yes. My favorite proof that recurrent networks are general-purpose computers

is not that. It's much simpler. With a recurrent network you have these hidden units, and in those you can implement NAND gates, not-AND gates. And so one of the first exercises in computer science is: build the arithmetic processing unit of your laptop, or the control unit, from NAND gates, which you can do. So there's a way of using only NAND gates in a recurrent network to create what is driving your laptop. So anything your laptop can do can also be done by this recurrent network. Unlimited and infinite

are two different things. There are no infinite things in the known universe. Unlimited just means finite but without limit. Since LSTM is a recurrent network, you can always make it one step deeper by increasing the length of the incoming input sequence by one, and this just means that the unfolded LSTM will have one more virtual layer. And already 20 years ago we had practical applications where we used the residual connections of LSTM to propagate errors through a really, really deep unfolded LSTM with tens of thousands of virtual layers. So deep learning is

all about depth, and the same principle later enabled the Highway Net, which is a feedforward network but using these LSTM principles, and half a year later the ResNets. So in this universe there is nothing that is infinite. There's no infinite precision, there is no infinite storage space, and so on. So there are no universal computers, because truly universal computers need unlimited, potentially infinite storage, and the recurrent networks don't have that. But the limitations of the recurrent networks are exactly the same as the limitations of your laptop. So in any practical sense they are

just as powerful as your laptop or any other computer. If you need more storage space, you just make them bigger, or you add additional storage to the laptop. I agree. I think many people make that argument, but could you not say there's a difference in kind in a Turing machine being able to address a potentially infinite number of situations? So it's a little bit like, even though it's a finite object, it can represent a potentially infinite size, and it does that through being able to expand its memory. So even though you can

just have a huge neural network, and if it's big enough it can represent and perform any computation that my laptop does, doesn't the Turing machine class give us something more? But it's just a theoretical construct. You know, the Turing machine is a variant of what Gödel had in 1931, where he also used an unlimited storage to talk about proofs, and he showed that there are fundamental limitations to computation and also to AI. How did he do that? Well, he showed that if you look at proofs that

can be generated through a computational theorem-proving procedure, then there are certain statements that you cannot prove in a given axiomatic system, but you also cannot prove the contrary, so they are undecidable. And suddenly you have limitations of all these logically possible machines where you can really execute the programs that are running and generating the proofs and so on. All of these devices, such as what Gödel had, and then Church in 1935, and Turing in 1936, and Post in 1936, all of these are theoretical constructs which have nothing

to do with what you can build in this universe, because in this universe all the computers are finite-state automata. Well, final pushback on that, because it sounds like a theoretical, you know, like why am I making that argument, but a great example is your Python interpreter can interpret an infinite number of possible programs. It's infinite, and a neural network will always be able to recognize a finite number of things that it was trained on, so that's the difference to me. Yeah, but you are talking about this

theoretical limit of Python programs, but what you can implement on your laptop is not as general, because most of the possible Python programs you cannot implement on your laptop, because the storage isn't big enough. True. And the same is true for Turing machines or for, you know, Gödel codes and all this stuff. So in practice we are always limited to finite-state automata, and the only thing that we can admit is that the finite-state automata that are represented by recurrent networks are sometimes much more inefficient than other kinds of finite-state automata, which

are better for, you know, multiplying numbers, or which are better for proving theorems, or which have stacks and stuff like that: very traditional but very useful concepts where it's not obvious how to implement them in neural networks. In principle they are all on the same level: anything that you can implement on any practical computer is not better than a finite-state machine. However, most of the practical problems that we want to solve are so simple that the storage limitations don't make a big difference, and we don't

have to add storage all the time because the computation expands and the laptop isn't big enough and so on. So we are practical guys, and we are limiting ourselves to those practical problems that can currently be solved through either recurrent networks, or Transformers, which are more limited than recurrent neural networks, or traditional theorem provers. Final pushback: I mean, Hilary Putnam spoke about multiple realizability, so you could represent any computation, for example, with a series of hoses and water pipes and buckets and so on, or,

you know, even any open physical system. So it sounds to me like you're talking about multiple realization, whereas for me the magic is in representation and generalization. So it's the ability to have a compact symbolic representation of something that could work in potentially infinitely many situations, because surely that is a great form of doing AI, rather than memorizing all of the different ways of doing something. Yeah, but it's very hard to draw the line between these things. So remember, before, we talked about parity, and we learned, if we didn't know

that already, that Transformers cannot even learn the logic behind parity. So parity is very simple: given a bit string such as 0 1 0 0, is the number of one bits odd or even? A recurrent network is a neural network and can easily solve that, because to solve that problem what you have to learn is to become a flip-flop. So the way to solve that problem is you read the bits one by one, first bit and second bit and so on, and you have a tiny little recurrent network with one

single recurrent connection from a hidden unit to itself, wired up such that whenever a new one comes in, the internal state flips from, you know, 1.0 to 0.0, something like that. And then this very simple device, which looks like a little logic circuit, can solve parity, which Transformers cannot. Where exactly is the difference now between these little logic devices that can do that and recurrent networks? Well, this little logic circuit is just a special case of a recurrent network. So everything that you can do with logic circuits you can do with recurrent networks.
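The flip-flop idea just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the network from the interview: it uses one hidden unit with an absolute-value activation and hand-set weights (`w_in = 1`, `w_rec = -1`), chosen because a single linear-threshold unit cannot compute XOR of its own state and the incoming bit. The name `parity_rnn` is hypothetical.

```python
def parity_rnn(bits, w_in=1.0, w_rec=-1.0):
    """One-unit recurrent 'flip-flop' (assumed wiring, not from the source).

    The hidden state h is fed back through a single recurrent weight.
    With w_in = 1, w_rec = -1 and an absolute-value activation, the update
    h <- |x - h| keeps h when x == 0 and flips h between 0 and 1 when x == 1.
    """
    h = 0.0  # initial state: an even number of ones seen so far
    for x in bits:
        h = abs(w_in * x + w_rec * h)
    return int(round(h))  # 1 if the number of one bits is odd, else 0


print(parity_rnn([0, 1, 0, 0]))  # 1 (a single one bit, so odd)
```

Because the same update is applied at every step, the unit handles bit strings of any length, which is the property contrasted with feedforward networks later in the conversation.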

And that's why I've been so fascinated with recurrent networks since the '80s, because they are general-purpose computers, not in the universal sense, which doesn't exist in this universe, but in this very general sense that you can say: well, all you need to do to make it truly universal is add a little bit more storage whenever you need it, and that is good enough. I think there's a distinction between the substrate of the RNN as a computation model and its utility in respect of being a trainable neural network.
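The distinction between what a network can represent and what training can find is easy to see with the NAND-gate argument made earlier. The sketch below hand-sets the weights of a threshold unit so that it computes NAND, then composes such units into an adder. The weights (-1, -1, bias 1.5), the step activation, and the helper names are assumptions made for illustration; nothing here is learned, which is exactly the point.

```python
def step(z):
    """Threshold activation: fires when the weighted input sum is positive."""
    return 1 if z > 0 else 0


def nand(a, b):
    # One threshold unit with assumed weights -1, -1 and bias 1.5.
    return step(1.5 - a - b)


def xor(a, b):
    # Standard four-NAND construction of XOR.
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


def full_adder(a, b, carry):
    s1 = xor(a, b)
    total = xor(s1, carry)
    # (a AND b) OR (s1 AND carry), written with NAND units only.
    carry_out = nand(nand(a, b), nand(s1, carry))
    return total, carry_out


def add(x, y, width=8):
    """Ripple-carry addition modulo 2**width; the carry is state passed from step to step."""
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result


print(add(19, 23))  # 42
```

Showing that such weights exist says nothing about whether gradient descent would discover them, which is the trainability question raised here.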

I think that 1991 paper wasn't trainable with SGD; it was just a patterned way of, you know, inputting information into an RNN to make it behave as if it was a Turing machine. So is that an issue here, that we want them to be trainable, we want them to be useful? Yeah, it is an issue, because look at this little network which learns parity. It has only five connections. There's the input unit and there's a hidden unit and then there's an output unit, so now we have

two connections. We have an extra bias weight, you know, coming from a bias unit which is always on, so now we have three connections, and we have a recurrent connection from the hidden unit to itself. So how do we learn now, from a bunch of parity training examples, to implement the correct thing? It turns out gradient descent is not very good at that. The best thing you can do is just randomly initialize all the weights between minus 10 and 10 and then see whether this device now solves parity for

your three training examples: one has a length of three bits, the other one 15, and another one 27. If it solves all three training examples, you can almost be sure that it's going to generalize to parity strings of all lengths. So it's much more powerful than a feedforward network, because if you train a feedforward network to solve, you know, nine-bit parity with nine inputs, then yeah, there's a way of doing that, but it's not going to generalize to 10 bits or 11 bits. But this little recurrent thing is

going to do that; it's going to generalize to every type of parity input. Yes. So here we have a learning algorithm, a non-traditional learning algorithm, which is just random weight search: you just try a thousand times, does your initialization of the weights solve the three elements in the training set, and it's going to generalize almost certainly on the much bigger test set. So it's an unusual learning algorithm, but it's a very good one, because within a few hundred trials it's going to learn that. This is actually

in the old 1997 LSTM paper; it's one of the examples. So there are certain things which you don't want to learn through gradient descent. No, there are better ways. And discrete program search: the program of a recurrent network is the weight matrix, of course, and often gradient descent gets stuck and is not going to find what you really want, but other types of search among these weights, or the programs of this network, are going to work. And if you look at our past publications since 1987,

you always will find lots of purely symbolic, obviously symbolic things where it's about proof search, you know, and asymptotically optimal problem solvers like OOPS in 2003, or the Gödel machine, an optimal self-improver which doesn't care at all for deep learning. So it's a very different kind of machine. And then there are the neural networks, where nobody can prove that they are going to work well on these particular problems, but they do; practical experience shows they are really useful.
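The random weight search for parity described a little earlier can be sketched as follows. This is a rough illustration under several assumptions that are not from the interview: the network has two step-activation hidden units and nine weights (not the five-connection network mentioned), the output is fed back as the recurrent state, the three training strings are fixed by hand with lengths 3, 15 and 27, and the running parity is checked after every bit rather than only at the end. All function names are hypothetical.

```python
import random


def step(z):
    return 1.0 if z > 0 else 0.0


def run(weights, bits):
    """Tiny recurrent net: two hidden threshold units, output fed back as state."""
    a1, r1, b1, a2, r2, b2, v1, v2, c = weights
    y = 0.0
    outputs = []
    for x in bits:
        h1 = step(a1 * x + r1 * y + b1)
        h2 = step(a2 * x + r2 * y + b2)
        y = step(v1 * h1 + v2 * h2 + c)
        outputs.append(int(y))
    return outputs


def running_parity(bits):
    total, out = 0, []
    for x in bits:
        total ^= x
        out.append(total)
    return out


# Three training strings of length 3, 15 and 27 (contents chosen by hand).
TRAIN = [[1, 0, 1], [0, 1, 1] * 5, [1, 0, 0] * 9]


def random_weight_search(max_trials=100_000, seed=0):
    """Guess weights uniformly in [-10, 10] until the training strings are solved."""
    rng = random.Random(seed)
    for trial in range(1, max_trials + 1):
        weights = [rng.uniform(-10, 10) for _ in range(9)]
        if all(run(weights, s) == running_parity(s) for s in TRAIN):
            return weights, trial
    return None, max_trials


weights, trials = random_weight_search()
print("solved after", trials, "random guesses")
```

No gradients are involved: each guess is simply accepted or rejected. Because the state is binary and the training strings exercise every combination of previous state and input bit, any accepted weight vector in this particular setup computes parity for strings of every length.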

And to find the boundaries between these symbolic approaches and these less symbolic approaches is impossible; they are totally blurred. Back then in the early '90s we had subgoal generators that do something that sounds like a symbolic thing, but no, it was implemented through gradient descent in a system that learned to decompose the action sequences that you have to execute to achieve goals into meaningful chunks, such that you go from start to goal, and from start to subgoal, and then from subgoal to goal.

All that stuff looks a little bit symbolic, but no, we showed that it can be done by neural networks and can even be learned by gradient descent. But then we had other problems that make gradient descent fail completely. So you don't want to elevate gradient descent to this holy approach that's going to solve everything. No, there are millions of things that cannot be solved by gradient descent. This does not mean that this is a problem for neural networks, because neural networks can also be trained by many methods

that are not gradient descent. So, just in closing on this bit, it's fascinating, because you've also spent much of your career talking about meta-learning, which is kind of second-order or perhaps even third-order and beyond learning, and perhaps, as you were just alluding to, in that ladder of meta-learning you could mix modalities, so there could be stochastic gradient descent and then a symbolic mode and then a meta-pattern reasoning mode. And I just wondered, for all of the folks doing the ARC challenge now,

they're doing this kind of discrete program search, and they're experimenting with having meta-pattern neural search on top, or something completely different: what would your approach be to that? You have to look at the nature of these problems. I didn't, but I'm sure that for many of these problems what you want to do is probably something like the Optimal Ordered Problem Solver, which is an asymptotically optimal way of finding a program that solves some given computational problem where the verification time for the problem is linear in the size of the

solution, such that you have cheap verification. That's an important thing; it's related to this P versus NP issue. And there's an asymptotically optimal way of doing program search like that, which goes back to Levin in 1973; universal search, it is called, among all these programs. And the Optimal Ordered Problem Solver is a curriculum learning approach based on this old method, which always solves a new problem given the programs which are solutions for previously solved problems, and it does that in an asymptotically optimal way. So this method doesn't care

at all for neural networks or deep learning. However, it mentions in the paper that you can use neural networks as primitives, primitive instructions, and you measure how much time they consume. So there's an optimal way of allocating runtime to all these programs that you are testing, in a way that represents a super strong bias towards the simple and fast programs, and then these programs can have all kinds of primitive instructions, and a primitive instruction again can be something really complicated; it can be, you know, backpropagation for a

Transformer or something like that. But you have to measure the time that it's consuming; you are going to interrupt whenever it's consuming too much time in the current phase, and you're allocating more and more runtime to all these programs that are trying to find a solution which is easy to verify. You have to take into account the verification time as well. And all of this sounds super symbolic, but the same principles I already applied in the '90s also to neural networks. So it's just a non-traditional way of searching for weight matrices

of neural networks through something that is not gradient descent, something that is actually in many ways much smarter and, if you're lucky, will lead to much better generalization results, because what these methods are essentially trying to do is find the shortest and fastest way of solving a given problem. Basically they're trying to minimize the algorithmic complexity, or the Kolmogorov complexity, under runtime constraints, of some neural network in a way that allows it to generalize much better. So there we have all kinds of overlaps between this traditional symbolic reasoning and traditional

program search and these fuzzy things called neural networks. So did the tech industry try to poach your team? They did. They tried to hire my collaborators, of course. For example, back then in 2010, 2011, when we had these successes with very fast convolutional neural networks, Apple really managed to hire one of my award-winning team members. And some people think that Apple came late to the party, to the deep learning GPU CNN party, but no, they got active as soon as this became commercially relevant. And

Google DeepMind, of course, was co-founded by a student from our lab, and their first employee was another PhD student of mine, and later they hired many of my postdocs and PhD students. By the way, I saw a funny Twitter meme the other day where a lady said: I don't want AI to do my art and creativity for me, I want it to wash the dishes. But that's an aside. That's what my mom said in the '70s. Really? You know: build me a robot that does my dishes. Yeah,

yeah, exactly. But the thing I want to get to, though, is why is it that people look at ChatGPT and they are convinced that it is on the path to AGI? I look at it and I think of it as a database. It has no knowledge acquisition, so no reasoning; it has no creativity; it has no agency; it doesn't have many of the cognitive features that we have. Yet people look into it and either they are willfully anthropomorphizing or deluding themselves, or maybe they really see something. What

do you think explains that? I think that those people who were very skeptical of AGI, who have been skeptical of AGI for decades, were suddenly convinced the other way because of ChatGPT, because suddenly you had a machine that is really pretty good at the Turing test. They thought: oh, now AGI is here. But I think all those guys who suddenly are worried about AGI just because of ChatGPT and other large language models, it's mostly the guys who don't know much about AI. They don't know much about the incredible

limitations of the neural networks that are behind ChatGPT, and today we already mentioned a couple of things that these neural networks cannot do at all. So it's kind of weird, actually. I have been advocating, I've been hyping, I must say, AGI for many decades. I claimed to my mom in the '70s that within my lifetime it's going to be there, and my colleagues later in the '80s thought I'm crazy. But suddenly many of these guys who have wrinkled their eyebrows about my predictions

they have turned around, and they think now AGI is close just because of ChatGPT. I think the only reason for that is because they don't really understand the nature of these large language models and their incredible limitations. I know, but I can't get my head around this, because many of these folks, especially in Silicon Valley, work in the tech industry, they're working on this technology, and they apparently don't understand how machine learning works. And I can only understand it in the sense that sometimes you have brilliant people

who are incredibly deluded in some other way, or that there must be something to explain why they just can't see that. I mean, these things are still machine learning models, right? They're only as good as fitting a parameterized curve to some data distribution, and they'll work really well where you've got lots of density, and they won't work very well when you don't have lots of density. Why do they think it magically reasons? Well, probably it's because many of them are venture capitalists, and they are being convinced by a couple of scientists

who are forming startup companies that now their new startup is really close and they must invest a lot. And so I think at least one of the reasons for this misunderstanding is that some of the machine learning researchers are overhyping the abilities of what's now possible with large language models. And, you know, the venture capitalists don't really have any idea of what's going on; they are just trying to find out where to put their money and are willing to jump on any additional hype. Certainly AGI is possible; it's going to

come in the not-so-distant future, but it will have large language models only as a submodule, something like a submodule, because the central goal of AGI is something that's really different, that's much closer to reinforcement learning. Now, you can profit a lot as a reinforcement learner from supervised learning. For example, you can build a predictive model of the world, and then you can exploit this model, which might be built by the same foundation models that are used for language

models. You can then use this model of the world to plan future action sequences. But now this is really a different story, and now you need to have some embodied AI, like a robot, which is operating in the real world. And in the real world you can't do what you can do in video games. In video games you can do a trillion simulations, a trillion trials, to optimize your performance, and whenever you get shot you get resurrected. Now in the real world you have

a robot, and you do three trials of something simple, and a tendon of the finger is broken, something like that. And you have to deal with the incredible frustrations of the real world, and with the planning that you have to execute in the real world to minimize your interactions with the world: do mental planning into the future to optimize your performance, but then also be really efficient when you are collecting new training examples through your actions, because you want to minimize the effort for acquiring new data that

improves your model of the world, which you're using for planning. So what I now mentioned in a nutshell is essential for AGIs, and several of these components that I mentioned don't work well at all at the moment. Yes, now the existing neural networks can be used in a certain way as components for a slightly larger system that does all of these things, and our first systems of this kind really date back to 1990, when I, so maybe I was even the first to use the word "world model"

back then for a recurrent neural network that is used to plan action sequences for a controller that is trying to maximize its reward. But these more complex problem solvers and decision makers are really a different game, are really different from just large language models. Yes, and I think I read your paper with David Ha, was that a good few years ago? He was the first person to use imagination, you know, this model-based reinforcement learning for playing computer games. But that's

a bit of an aside. I guess my point is that right now in Silicon Valley you can earn an incredible amount of money and have very high status for training neural networks with a thousand lines of code, and it's easy, and why would they bother doing anything else, because it works so well? And again, this is an example where you've already done the work a third of a century ago; you've already thought about the next step and the next step, and they simply, I don't know whether they're just deluding themselves. Why aren't

they just doing the hard work? Maybe it's because life is too easy just saying that this is AGI now. Yeah, I guess many of these guys who are now overhyping AGI are in search of funding for their next company, and there are enough gullible venture capitalists who are jumping on that bandwagon. On the other hand, it's also clear that the techniques that we currently have are, in principle, good enough to also do the next step, which is far beyond pure language models, and the same techniques, as I

mentioned, that you can use to create language models can also be used to create models of the world. And then the important question is: how do you learn to use this model of the world in a hierarchical, efficient way to plan action sequences that lead to success? So you have a problem that you want to solve, you don't know how to solve it, there's no human teacher. Now you want to figure out, through your own experiments and through these mental planning procedures, how to solve that problem. And, you

know, in 1990 we did it in the wrong way, in the naive way. Back then in 1990 I said: okay, let's have the recurrent network controller and the recurrent network world model, which is used for planning, and then we do the naive thing, which is millisecond-by-millisecond planning. What does that mean? It means that you are simulating every little step of your possible futures, and you're trying to pick one where, in your mental simulation, you will get a lot of predicted reward. And that's silly; that's

not what humans do. So when humans have a problem like "how do I get from here to Beijing?", they decompose the problem into subgoals. They are not going to plan like this; they are not going to say: okay, first I activate my pinky and then the other fingers, and I'm going to grip my phone, and then I'm going to call this taxi station, and do all these things. No, they just have a high-level internal representation of: call the taxi, then at the

airport check in, then for 9 hours nothing is going to happen until you exit in Beijing, and so on. So you are not simulating millisecond by millisecond all these possible futures. Now, most of current reinforcement learning is still doing this step-by-step simulation. For example, in chess or in Go you really do Monte Carlo sampling along these possible futures and pick one that seems promising, and your world model is improving over time, such that if you make wrong decisions, at least the world model gets better, so next time you can make better

informed decisions so um yeah but even uh back then in 1990 we uh really uh said okay this is not good enough we have to learn subgoals we have to decompose these long um sequences of actions into chunks we have to decompose the entire um stream of inputs into chunks that somehow belong together you know and are separated uh in a way that indicates uh that the abstract representations of these chunks should um you know should be different for this kind of sequence and for this kind of sequence but they are similar for

these um certain sequences and then um you can use these adaptive subgoal generators which we also have had in 1990 to uh to you know put them together in a new way that uh efficiently and quickly solves your problem because you are referring to subprograms that you already have learned like going from uh here to the taxi station and stuff like that so we had that but it was not smart enough in comparison to what we uh then did later in 2015 when we had better ways of um of using these predictive World models

to um to plan in an abstract way so in 2015 I had this paper on learning to think which I still think is important today and I think many people who don't know it should probably read it so what is this 2015 paper about it's about a reinforcement learning machine which again has a predictive model of the world and the model is trying to predict everything but we are not really interested in everything no we are just um interested in these internal representations which it is creating to predict everything um and usually

it cannot predict everything because the world is so unpredictable in many ways but certain things can be predicted and these internal representations some of them become really predictable and um and that's why and and and and it includes everything that you can imagine so if you for example have to predict this pixel correctly maybe it depends a little bit on something that happened 1,000 steps ago so these internal representations of the prediction machine they will take that into account over time and so the these um internal representations they will convey information that is relevant um

for for the world and for this particular pixel but and so now you want to plan in a smarter way how do you use that well the controller has to solve a certain task maximize its reward and instead of using the model of the world uh in in this millisecond by millisecond fashion instead it should um it should ignore all the stuff that is not predictable at all and just focus on these abstract predictable internal Concepts and which are those well answer the controller has to learn which they are and how can it learn that

well what can it do you can give it extra connections into the world model and it can learn to send in queries the queries are just number vectors you know in the beginning it doesn't know how to send good queries into this world model uh and then something is coming back from the world model because you wake up some internal representations and stuff is coming back and the connections that send in the queries and interpret the answers they belong to the controller so they have to be learned through whatever the controller

is doing reinforcement learning or something like that and um and so now the uh controller is essentially learning to become a prompt engineer that was my 2015 uh reinforcement learning prompt engineer which learns to send in data into the uh World model and then get back data from the world model which somehow should represent the algorithmic information about whatever is relevant so basically the controller has to learn to address in this huge World model which maybe has seen all YouTube videos of everything um it somehow must learn to address this internal knowledge and um and and

in an abstract planning way and um interpret what's coming back and the test is uh does this controller learn whatever it has to learn faster without the model by you know just setting all the connections to zero or with the model by somehow learning that it is cheaper to address relevant algorithmic information in the world model that you can reuse to become a better controller faster so um learning to think I believe in principle this is the way to go in um robotics and reinforcement learning robotics and uh all

these fields that currently do not work well yeah could I play back a couple of things there because um the kind of abstract principle you were just talking about is very similar to generative adversarial networks in the sense that you're playing this game and you're trying to increase the algorithmic information or the information conversion ratio but what you were saying before is really interesting and I understood it to be um coarsening or abstractions and one interesting thing as you said is you could start off in the micro action space or you can

move towards the action abstraction space where you're actually learning patterns in action space and this makes sense because when you're driving a car for example you think about the microscopic you ignore the leaves on the road you're thinking about the big picture and you have this kind of coarsening of resolution and jumping you know depending on how you think about the problem and as I understand it your learning to think your controller uh pattern that you just described is kind of modeling that process yeah so the controller there is

just trying to extract the algorithmic information of another Network which could have been trained on anything for example as I mentioned before all possible YouTube videos so maybe among the billions of YouTube videos are maybe millions of videos where people are throwing stuff and some robots are also throwing stuff and basketballers and footballers and whoever they are all throwing stuff so there's a lot of information about um gravity and about how the world works you know and um and physics of the world and the 3D nature of the world it's all there in these videos
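The controller-plus-world-model wiring described above can be sketched in a few lines. This is only an illustration of the data flow, not the method from the 2015 paper: the world model here is a frozen random recurrent net standing in for a pretrained predictor, nothing is trained, and all names and sizes (`make_world_model`, `make_controller`, `ablate`, the layer widths) are assumptions made for the example. The `query` and `answer` matrices are the extra connections that belong to the controller, and zeroing them gives the "without the model" baseline mentioned in the talk.

```python
import numpy as np

OBS, C_HID, M_HID, QUERY, ACT = 4, 8, 16, 3, 2  # illustrative sizes (assumed)

def make_world_model(rng):
    """Frozen stand-in for a pretrained predictive model M (random weights here)."""
    return {"in": rng.normal(scale=0.5, size=(M_HID, QUERY)),
            "rec": rng.normal(scale=0.5, size=(M_HID, M_HID))}

def make_controller(rng):
    """Controller C; 'query' and 'answer' are its own connections into and out of M."""
    return {"obs": rng.normal(scale=0.5, size=(C_HID, OBS)),
            "rec": rng.normal(scale=0.5, size=(C_HID, C_HID)),
            "answer": rng.normal(scale=0.5, size=(C_HID, M_HID)),
            "query": rng.normal(scale=0.5, size=(QUERY, C_HID)),
            "act": rng.normal(scale=0.5, size=(ACT, C_HID))}

def step(ctrl, model, c_state, m_state, obs):
    # C reads the observation, its own state, and whatever M's internal state conveys.
    c_state = np.tanh(ctrl["obs"] @ obs + ctrl["rec"] @ c_state + ctrl["answer"] @ m_state)
    # C emits a query vector (a "prompt") that perturbs M's internal state.
    query = np.tanh(ctrl["query"] @ c_state)
    m_state = np.tanh(model["rec"] @ m_state + model["in"] @ query)
    action = ctrl["act"] @ c_state
    return c_state, m_state, action

def rollout(ctrl, model, m_state, observations):
    c_state = np.zeros(C_HID)
    actions = []
    for obs in observations:
        c_state, m_state, action = step(ctrl, model, c_state, m_state, obs)
        actions.append(action)
    return np.array(actions)

def ablate(ctrl):
    """Baseline from the talk: zero the connections to M, so C has to manage alone."""
    cut = dict(ctrl)
    cut["answer"] = np.zeros_like(ctrl["answer"])
    cut["query"] = np.zeros_like(ctrl["query"])
    return cut

rng = np.random.default_rng(0)
model, ctrl = make_world_model(rng), make_controller(rng)
observations = rng.normal(size=(5, OBS))
m_a, m_b = np.zeros(M_HID), np.ones(M_HID)  # two different internal states of M

with_model = (rollout(ctrl, model, m_a, observations),
              rollout(ctrl, model, m_b, observations))
without_model = (rollout(ablate(ctrl), model, m_a, observations),
                 rollout(ablate(ctrl), model, m_b, observations))
```

With the connections in place, the controller's actions depend on what the world model currently represents; with them zeroed, the two rollouts coincide. In a real system the controller weights, including `query` and `answer`, would be trained by reinforcement learning, and the comparison of interest would be learning speed with and without access to M.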

however these videos they contain this um this information in um in a way that is not directly accessible to the controller because U the controller he has these actuators and he sends signals to the actuators and then something happens now the actuators of the robot controlled by the controller they may um be very different from the actuators of the basketball players in the in the videos you know but they have a lot in common also because they also have to work in this world dominated by gravity and maybe the robot has only three fingers and

not five you know but there's a lot you can learn from Five Finger guys about how to use a three finger hand and um and how to throw objects and what do these objects and how do they tumble and you know and as you throwing them so um you somehow want to inject queries prompts into this model of the world and you want to do it such that IT addresses the right stuff in the world model such that the good stuff that you need for your for your improved Behavior as a controller is extracted and

such that you need maybe not just a few additional bits of information that you have to learn as a controller in addition to this um you know information that you cannot use immediately to throw well um but such that you just have to change a little bit you know a few bits here and there such that you can learn to throw a ball much faster than without access to this um this huge set of examples so because it's very complicated um in a in a given environment to find the right planning algorithm and to find

the good way of addressing all of that it has to be learned you know you can't pre-program it so you have to learn it in an environment specific fashion under all these resource constraints that you have because you have only so many um neurons uh in your controller and only so many neurons in your um model and you have only so many um time steps per millisecond to act you know so all of this is nicely and beautifully built in and you just have to learn as a controller to become a good prompt engineer to

send in good prompts and understand what's coming back you have to learn that so in principle that's what I think is going to be the future of planning and hierarchical analogical reasoning and all these things you build a system that is General enough to learn all these things by itself yeah so that's um that's I think the future of um practical planning not universal planning like what we had in the early 2000s with the uh universal AI stuff of my postdoc Marcus Hutter and the Gödel machine universal self-changing and whatnot so very practical and down

to earth um under limited um resources with resource constraints and everything it's all already there and the controller just has to learn to become a better prompt engineer now you give it a sequence of um problems it can always reuse the previously learned things again and learns more and more subprograms um which can be encoded in recurrent networks you know so these are general purpose computers so they can encode all these hierarchical reasoning things with subprograms and everything so in principle all of that uh should work well but it doesn't work as well as um as

um as you know as the supervised limited techniques the large language models that are now so fascinating for so many people yes this is another example of when you were ahead of your time I I interviewed a couple of University students at Toronto about a month ago and they're applying uh control theory to large language model prompting and they actually use it for exploring the space of reachability so um you know they they um have a controller that tries to optimize the language model to say a particular thing and they can use that to reason

about the space of possible tokens but you know the point though is that we're starting to see meta architectures where language models are a component in that architecture and I think that kind of thinking outside the box is really interesting yeah for example uh one um of our recent papers inspired by this 2015 um learning prompt engineer is our Society of Mind paper where we had um not just two you know not just the controller and the world model but lots of models many Foundation models you know some of them

know a lot about computer vision some of them know a lot about how to take an image and generate a caption out of it others know a lot about how to you know just answer questions in natural language now you have a society of these guys and um and you give them a problem that they cannot solve individually so now you ask them to collectively solve a problem that none of them can solve by itself and so what do they do well they start becoming prompt Engineers for

each other and uh and they conduct something which we call a Mindstorm a Mindstorm because these natural language based Society of Mind well the members of the Society of Mind they are interviewing each other and what would you do and what do you suggest should we do and then there are different types of societies for example we had monarchies where there's a king a neural network king that decides based on the suggestions of the underlings what should be done next and then we had democracies

where you have voting mechanisms between these different guys uh and so they have all their ideas and put them out there on the Blackboard and they consume all the ideas of the others and come up with a solution in the end that often is pretty convincing so in all kinds of um applications like um generate a better design of an image that shows that or um manipulate in a 3D environment the world such that you achieve a certain goal stuff like that that um that works well in a

way that opens up a whole series um a whole bag of new questions like is a monarchy better than a um democracy if so under which conditions and vice versa stuff like that what interests me about that is I mean I think that knowledge acquisition is a really important thing and when you look at how we do it for example I'm building a startup I'm building a YouTube channel I'm learning how to edit videos and do audio engineering and so on there's so much trial and error because reasoning and

creativity and intelligence is about being able to have that flash of insight and compose many bits of knowledge that you already have in this incredible way and when you see it you go ah you have this aha moment and you can't unsee it and it now changes the way that you see the entire world but sometimes there's an aha moment but sometimes through our collective intelligence people are just trying lots of different things and we're sharing information and evaluating and then this new thing happens this creative insight and then it changes the whole world and

we use that knowledge and share it so it's an interesting process yeah it does and um you can have an aha moment also based on something that somebody else discovered you know yes when Einstein discovered a great simplification of physics uh through the general theory of relativity um many people were fascinated and had these internal Joy moments um once they understood what was going on there you know so suddenly the world became simpler through this Insight of a single guy which quickly spread to I don't know billions of guys and um

and now what happened there what we had there was a moment of data compression in a novel way and in fact all of science is a history of data compression progress so science is not what I proposed in 1990 where you just have these generative adversarial networks where the controller is just trying to maximize the same error functions that the prediction machine is trying to minimize you know so the errors of the predictor are the rewards of the controller that's that's a quite limited artificial scientist what you really want to do is this you want

to um as a controller you want to create action sequences which are experiments that lead to data that are not just unpredictable and surprising in that sense with high error for the model no you want to create data that has a regularity in it that the model didn't know yet what does it mean a regularity it always means that you can compress the data so um let's take my favorite example videos of falling apples somebody generated through his actions through his experiments these videos of falling apples and it turns out these apples they all fall

in the same way you see three frames of the movie and you can predict not everything but many of the pixels in the fourth frame whatever you can predict you don't have to store extra which means that many of these pixels which were well predicted don't have to be stored extra which means you can greatly compress the video of the falling apples which means that the neural network that is encoding the predictor the predictions of all these pixels can be really simple you know can be a simple Network that you can describe with a

few bits of information just because you can greatly compress the video because if you know gravity you can greatly compress the video uh first you need so many megabytes but then afterwards because you know a lot about gravity you only have to encode the deviations from the predictions of the model so if the model is simple then you can save lots of bits so that's how how people discovered gravity yeah the example you just gave with the apple is really interesting because um it made me think of the the spectrum of memorization and generalization again
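The falling-apple argument (see three frames, predict the fourth, store only what the prediction gets wrong) can be made concrete with a toy one-dimensional version. This is a minimal sketch under stated assumptions: a single height value per frame instead of pixels, a hand-written constant-acceleration predictor instead of a learned network, and zlib as a stand-in for an ideal coder. The function names and the 0.1 mm quantisation are choices made for the example.

```python
import zlib
import numpy as np

def falling_positions(n_frames=200, g=9.81, dt=0.01, units_per_metre=10_000):
    """Distance fallen in each frame, quantised to integers (0.1 mm units)."""
    t = np.arange(n_frames) * dt
    return np.round(0.5 * g * t**2 * units_per_metre).astype(np.int64)

def predict_from_three(a, b, c):
    """Next value under constant acceleration (a, b, c ordered oldest to newest)."""
    return a - 3 * b + 3 * c

def encode(y):
    """Keep the first three frames, then only the prediction errors."""
    pred = predict_from_three(y[:-3], y[1:-2], y[2:-1])
    return y[:3].copy(), y[3:] - pred

def decode(head, residuals):
    """Rebuild the full sequence by rerunning the same predictor."""
    y = list(head)
    for r in residuals:
        y.append(predict_from_three(y[-3], y[-2], y[-1]) + r)
    return np.array(y, dtype=np.int64)

y = falling_positions()
head, residuals = encode(y)

# Raw storage: every position as a 32-bit integer.
raw_bytes = len(zlib.compress(y.astype(np.int32).tobytes()))
# Predictive storage: three starting frames plus small one-byte deviations.
coded_bytes = len(zlib.compress(head.astype(np.int32).tobytes()
                                + residuals.astype(np.int8).tobytes()))
```

Because a quadratic trajectory has zero third difference, the residuals here are only rounding noise, so `coded_bytes` comes out well below `raw_bytes` while `decode(head, residuals)` still reproduces `y` exactly. A learned predictor on real video would leave larger deviations, but the accounting is the same.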

so in deep networks we do these inductive priors and they take the form of symmetry and scale separation so for example we could have translational you know like local weight sharing so translational equivariance and that would allow the model to use less representation or capacity for modeling the ball in different positions but there's a kind of continuum isn't there because we could just go all of the way and eventually we'd end up with a model that had hardly any degrees of freedom and still represented the apple falling but there's this spectrum of representation or fidelity
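The weight-sharing point can be checked directly. The sketch below is an assumed toy setup (a 12-sample signal, a 3-tap kernel, circular boundary handling), not any particular network: it applies the same three weights at every position and confirms that shifting the input simply shifts the output, which is what translational equivariance means.

```python
import numpy as np

def shared_weight_layer(x, kernel):
    """Circular 1-D convolution: every position reuses the same few weights."""
    n, k = len(x), len(kernel)
    return np.array([sum(kernel[j] * x[(i + j) % n] for j in range(k))
                     for i in range(n)])

x = np.zeros(12)
x[3] = 1.0                       # an "object" at position 3
kernel = np.array([0.25, 0.5, 0.25])
shift = 4

shift_after = np.roll(shared_weight_layer(x, kernel), shift)
shift_before = shared_weight_layer(np.roll(x, shift), kernel)

shared_params = kernel.size      # 3 weights cover every position
dense_params = x.size * x.size   # a fully connected layer of the same width needs 144
```

`shift_after` and `shift_before` agree, so the layer does not need separate capacity for the object at each position, and the parameter count drops from 144 to 3 for this width.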

yes and uh you have to take into account the time that you need to translate uh the internal representations into meaningful actions uh as you are uh so what are what are babies doing as they are watching these falling apples they also learn to predict the next pixels you know and that's how they also learn to compress now they don't know anything about squared laws of mathematics and the simple um um you know five symbol law that describes gravity for so many different objects um but in principle they understand that there's a quadratic acceleration as

these apples are coming down and he learns this part of physics even without being able to name it or translate it into symbols that is not the objective but it allows to greatly compress and then you know um at some point Kepler was a baby 400 years ago and um then he grew up and he um he saw the data of the planets circling the sun and it was noisy data but then suddenly um he realized that um that there's a regularity in the data because you can greatly compress all these data points once you

realize they all lie on an ellipse you know there's a simple mathematical law and he was able to do all kinds of predictions based on this um simple insight and these were all correct the predictions were all really good um and then slightly afterwards a couple of decades later um another guy Newton he um saw that uh the falling apples and the planets on these ellipses they are driven by the same simple law and um and that allowed many additional simplifications and predictions that really worked well and then it took 300 years or something until

another guy um was worried about the deviations from the predictions and the whole thing became uglier and uglier the traditional World model became uglier and uglier because you need more and more bits of information to encode these deviations from the predictions because if you look far out there what the stars are doing they are doing things they shouldn't do according to the standard Theory and then he came up with this super simplification again many people think it's not simple but it's very simple you can phrase the um the nature of general relativity

in a single sentence um it basically says no matter how hard you accelerate or how hard you decelerate or what is the gravity in the environment that you are currently living in light speed always looks the same so that's the whole program behind general relativity and if you understand that you have to you know learn tensor calculus to formalize that and to derive predictions from that but that is just a side effect of the basic Insight which is again very simple so uh this very simple Insight allowed to greatly compress the data again
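Each step in this story (Kepler, Newton, Einstein) is described as a drop in the number of bits needed to encode the same observations. A rough way to measure such a drop, and to see why it differs from rewarding raw unpredictability, is sketched below. Everything here is an assumption made for illustration: the coding cost is a crude empirical-entropy estimate that ignores the cost of describing the model itself, the "before" model is a constant, and the "after" model is a fitted quadratic.

```python
import numpy as np

def code_length_bits(residuals, step=0.1):
    """Crude coding cost: empirical entropy of residuals quantised to `step`, times their count."""
    q = np.round(np.asarray(residuals) / step).astype(int)
    _, counts = np.unique(q, return_counts=True)
    p = counts / counts.sum()
    return float(-(counts * np.log2(p)).sum())

def compression_progress(t, y):
    """Bits saved when a constant-only model is replaced by a fitted quadratic."""
    before = code_length_bits(y - y.mean())
    after = code_length_bits(y - np.polyval(np.polyfit(t, y, 2), t))
    return before - after

rng = np.random.default_rng(0)
t = np.linspace(0.0, 2.0, 500)
falling = 4.9 * t**2 + rng.normal(scale=0.05, size=t.size)  # hidden regularity plus small noise
noise = rng.normal(scale=1.0, size=t.size)                  # nothing to discover

progress_falling = compression_progress(t, falling)
progress_noise = compression_progress(t, noise)
```

The falling-object data yields a large saving once the quadratic is found, while pure noise yields essentially none even though it stays maximally surprising. A reward based on prediction error alone would keep paying out on the noise; a reward based on the before-and-after difference does not.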

and all of science all of science is just this history of data compression progress and as we are trying to build artificial scientists we are doing exactly that so whenever we uh generate through our own data collection procedures through our own experiments whenever we generate data that has a previously unknown regularity a compressibility and we discover this um compressibility because first we need so many synapses and neurons to store that stuff but then only so many the difference between before and after that's the fun that we have as scientists so we understand the

principle we just built um artificial scientists driven by exactly the same desire to to maximize insights along these lines data compression progress essentially and then we have AIS that are like little artificial scientists um and they set themselves their own goals because to maximize their scientific reward their joy as scientists they are trying to invent experiments that lead to data that has the property that there's a regularity in the data that they didn't know yet but can extract and they realize oh my regularity U that I didn't know allows me actually to take the data

and compress it by predicting it in a better way by understanding the rules behind the data so we can Implement that stuff in artificial systems so we already do have artificial scientists um not working as well as you know ChatGPT in its more limited domain where it's just about addressing the knowledge of the world but it's going to come it's going to change everything yes a couple of quick comments on that when children look at the apples falling I think one interesting difference is they're constantly getting feedback from

the world and that allows them to build quite local models because they'll have lots of prediction errors but they'll be constantly updating the models so it kind of doesn't matter which then makes it seem all the more impressive that we can be mathematicians and scientists and we can build increasingly abstract models yeah and that abstract analogy making that you were talking about so we see the apples falling we see the movements of the stars and then we do this meta analogy which is gravity that to me is is fascinating now I don't know whether you

just think of that as yet another form of compression or do you think that analogical reasoning is something special no no it's just uh another um symptom of the general principles so as you are compressing you are exploiting the millions of different types of compressibility you know for example everything that is symmetric is um compressible because the the left half can be easily computed from the right half so you have to store only the right half plus a tiny algorithm for flipping it or something and um and there are many additional types of compressibility you

know if you're living in a society where there are lots of people then it's really good to have some you know prototype face um encoded in your brain and whenever a new face is coming along uh to encode it all you need to encode is the deviations from the Prototype so that allows you to greatly compress and there are millions of ways of compressing things it's the world of compression algorithms which is basically the world of all algorithms and there are many names uh that are special cases of that like reasoning by analogy but

analogy just means hey there's a method an algorithm that explains how these planets move around the Sun and a very similar algorithm I just have to change a few bits there also explains how the electrons move around the uh nucleus of the atom so uh there's a lot of shared algorithmic knowledge between these atoms and the planets and um and you can compress both of them better than one of them by itself because the algorithm the compression or the regularity is common to both which means one is just an instance of the other

one so you can better compress so all of um regularity detection is about compressibility it's not quite true because you also should take into account the time that you need to compress and decompress as you are doing practical applications but we are doing that also so we have systems that do all of that or take all of that into account in a good way it's just that they are not working as well yet as ChatGPT but it's going to happen and that's the stuff that is going to enable AI in the physical world

where you have to deal with the very limited resources that you have and the very um small number of experiments that you can conduct to acquire data that allows you to infer how the world works and then use that knowledge for planning what do you think of the idea that you know rationalists they think that the knowledge about how the world works can be factorized into surprisingly few Primitives so the idea is that when we do reasoning when we do knowledge acquisition we it's a bit like Lego and we're just plugging all of these

bits together and some of the the the science knowledge that you're just talking about those are modules so there're lots of Lego that have been stuck together that we reuse But ultimately the idea is that all the knowledge that you can know is just compositions of of Lego and there's nothing else yeah and I love what you just saying is just a special case of compressibility you know so whenever you have uh building blocks that reappear again and again like Lego blocks and whatever then you have compressibility because you need to encode only one of

these Lego blocks and then you just make copies of it and then you have maybe other high level structures and these structures also repeat themselves like in a fractal um object like a like a mountain for example you know where the little part of the mountain looks like the entire Mountain which means that um that there's a lot of algorithmic information shared between the mountain and the parts of the mountain and that's what you call fractal similarity so it's just one of many types of compressibility all the fractal stuff is compressible stuff just like the

analogical reasoning part is compressible stuff just like the hierarchical reuse of subprograms is all about compression so machine learning is essentially the science of data compression plus the science of selecting actions that lead to data that you can compress in a way that you didn't know but you can learn that's how you better understand the compressibilities the regularities in the environment so that's what we have to build into our artificial scientists modern large language models like ChatGPT they're based on self attention Transformers and even given their obvious limitations

they are a revolutionary technology now you must be really happy about that because you know a third of a century ago you published the first Transformer variant what are your Reflections on that today in fact in 1991 um when compute was maybe five million times more expensive than today um I published this um model that you mentioned which is now called uh the unnormalized linear Transformer I had a different name for it I called it a fast weight controller but names are not important the only thing that counts is the math so this linear

Transformer is a neural network with um lots of nonlinear um operations within the network so it's a bit weak that it's called a linear Transformer however the linear and that's important refers to something else it refers to scaling a standard Transformer of 2017 a quadratic Transformer if you give it 100 times as much input then it needs 10,000 times 100 * 100 is 10,000 times as many computations and um a linear Transformer of 1991 needs only 100 times um the compute which makes it very interesting actually because at the moment many people are trying to

come up with more efficient Transformers and um and and this old linear Transformer of 1991 is therefore um a very interesting starting point for additional improvements of Transformers and similar models so what did the linear Transformer do assume the goal is to predict the next word in a chat given the chat so far and essentially the linear Transformer of 1991 does this to minimize its error it learns to generate patterns that um in in modern Transformer terminology are called keys and values keys and values uh back then I called them from and to but that's

just terminology and and it does that to reprogram parts of itself such that its attention is directed in a context dependent way to what is important and and a good way of thinking about this linear Transformer um is is this the traditional artificial neural networks uh have storage and control all mixed up the linear Transformer of 1991 however has a novel neural network system that separates storage and control like in traditional computer in traditional computers for many decades storage and control is separate and the control learns to manipulate the storage and so with these linear

Transformers you also have a slow Network which learns by gradient descent to compute the weight changes of a fast weight Network how it learns to create these Vector valued key patterns and value patterns and uses the outer products of these keys and values to compute rapid weight changes of the fast Network and then the fast network is applied to Vector valued queries which are coming in so essentially um in this fast Network the connections between strongly active parts of the keys and the values they get stronger and others get weaker and this is um a

fast update rule which is completely differentiable which means you can backpropagate through it so you can use it as part of a larger learning system which learns to backpropagate errors through this um Dynamics and then um learns to generate good keys and good values in certain contexts such that the um entire system can reduce its error and can become a better and better predictor of the next word in the chat so um sometimes people call that today a fast weight matrix memory uh and the modern quadratic Transformers they use um in

principle uh exactly the same approach you mentioned your fabulous year 1991 where so much of this amazing stuff happened um actually at the Technical University of Munich so ChatGPT um you had invented the T in ChatGPT the Transformer and um also the P in ChatGPT the pre-trained um Network as well as the first adversarial um networks as well GANs could you say a little bit more about that yeah so uh the Transformer of 1991 was a linear Transformer so it's not exactly the same as the quadratic Transformer of

today okay but nevertheless it's using these Transformer principles and um and the P the P in GPT yeah that's the pre-training and back then deep learning didn't work but then we had um uh networks that could use predictive coding to greatly compress long sequences uh such that suddenly you could work on this reduced space of these compressed uh data descriptions and deep learning became possible where it wasn't possible before and then the generative adversarial networks also in the same year 1990 to 1991 um how did that work well back then we had two

networks uh one is the controller and the controller has certain um probabilistic stochastic units within itself and they can learn the mean and the variance of a gaussian and there are other nonlinear units in there and then it is a generative Network that generates outputs output patterns actually probability distributions over these output patterns and then another Network the prediction machine the predictor learns to look at these outputs of the first Network and learns to predict their effects in the environment so to become a better predictor it's minimizing its error predictive error and at the same

time the controller is trying to generate outputs where the second network is still surprised so the first guy tries to fool the second guy trying to maximize the same objective function that the second network is minimizing so today this is called generative artificial no generative adversarial networks and I didn't call that generative adversarial networks I called it artificial curiosity because you can use the same principle to uh let robots explore the environment controller is now generating actions that lead to behavior of the robot the prediction machine is trying to predict what's going to happen and

it's trying to minimize its own error and the other guy is trying to come up with good experiments that lead to data uh where the predictor or the discriminator as it is now called can still learn something it's a bit like artificial capitalism um in a way but I also wanted to talk about LSTMs because those also have their roots in that fabulous year 1991 yes the LSTM in principle dates back to um 1991 and um and back then my brilliant um student Sepp Hochreiter he wrote his diploma thesis July 91 I believe

something like that. This technique wasn't called LSTM yet, but the basic principles were already there, because the problem with deep learning back then was that the gradients of these error signals, as you're trying to propagate error signals back, they vanish. That's called the vanishing gradient problem. Sepp in his diploma thesis identified that problem, and then he came up with a solution, which is residual connections. Today people call it residual connections; back then it was called the constant error flow. And that's the principle that

allows recurrent networks to become really, really deep. Then in 1995 I came up with this name, LSTM, but you know, that's just a name, and the important thing is the math. And finally our 1997 paper on LSTM really has become the most cited AI paper of the 20th century. What are your reflections on that? Yeah, that's pretty cool. The LSTM has become really popular. Just look for example at Facebook: at some point Facebook used LSTM to make
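The vanishing gradient problem and the constant error flow mentioned above can be seen in a few lines of arithmetic. This is a minimal sketch assuming a single self-connected unit, where each backward step scales the error by the recurrent weight times the activation slope. The function name and the example values are illustrative, not taken from the thesis.

```python
def backpropagated_error(recurrent_weight, steps, activation_slope=1.0):
    """Size of an error signal after flowing back `steps` time steps
    through one self-connected unit (illustrative single-unit model)."""
    signal = 1.0
    for _ in range(steps):
        # Each backward step multiplies the error by weight * slope.
        signal *= recurrent_weight * activation_slope
    return signal

print(backpropagated_error(0.9, 1000))  # shrinks toward zero: vanishing gradient
print(backpropagated_error(1.1, 1000))  # grows without bound: exploding gradient
print(backpropagated_error(1.0, 1000))  # stays at 1.0: constant error flow
```

With a self-connection of exactly 1.0 and an identity activation, the error neither shrinks nor grows, however many steps it travels back.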

more than four billion translations per day. Compare that to the most successful YouTube video of all time. It's a song called Baby Shark, and this song has more than 10 billion clicks, but it needed several years to get 10 billion clicks, and back then LSTM working for Facebook within three days got more clicks than Baby Shark in its entire life. Yeah, I mean, given that we now live in the information world, algorithms are just so important because they can have so much leverage over our lives. But just coming back

to Sepp for a minute: I'm a big fan of his, and I heard on the grapevine that he's working on a newer, more scalable version of LSTMs called xLSTM. Can you tell us about that? The xLSTM seems to be great, we'll get into that. Let me first mention that the first large language models of Google and the other guys in the 2010s were actually based on the old LSTM, until quadratic Transformers took over towards the end of the 2010s. Like the linear Transformers of 1991, the LSTM is

more efficient than Transformers in the sense that it scales linearly rather than quadratically. Furthermore, one should always keep in mind that recurrent networks such as LSTM can solve all kinds of problems that Transformers cannot solve, for example parity. The parity problem seems very simple: you would just look at bit strings such as 01011 or 11 or 1101111101, and given such a bit string the question is, is the number of ones odd or even? That looks like a simple task, but Transformers fail to generalize on it, while

a recurrent network can easily be programmed to solve that task. On the other hand, Transformers are much easier to parallelize than LSTM, and this is also very important because you want to profit from today's massively parallel computing architectures, in particular Nvidia GPUs. Recently, however, Sepp and his team have created this very promising LSTM extension called xLSTM. It outperforms Transformers on several language benchmarks and also scales linearly, not quadratically, so that's good. Particularly noteworthy is xLSTM's superior understanding of text semantics, and some of the xLSTM versions can
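The claim that a recurrent network can easily be programmed to solve parity can be made concrete with a hand-wired sketch. The weights and thresholds below are one illustrative choice, not a trained model: a single recurrent state unit is updated through two hidden threshold units that together compute XOR of the state and the current input bit.

```python
def step(value):
    # Threshold unit: outputs 1 when its summed input is positive, else 0.
    return 1 if value > 0 else 0

def parity_rnn(bits):
    """Return 1 if the bit string contains an odd number of ones, else 0."""
    state = 0  # one recurrent state unit, starting at "even"
    for ch in bits:
        x = int(ch)
        either = step(state + x - 0.5)     # OR of state and input
        both = step(state + x - 1.5)       # AND of state and input
        state = step(either - both - 0.5)  # XOR: OR but not AND
    return state

print(parity_rnn("01011"))     # three ones, so odd: prints 1
print(parity_rnn("11"))        # two ones, so even: prints 0
print(parity_rnn("1" * 1001))  # the same three units handle any length: prints 1
```

Because the same tiny update is reused at every time step, the solution works for bit strings of any length, which is the kind of generalization the passage has in mind.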

also be highly parallelized. There is also an xLSTM variant with a matrix memory, like the one that we have discussed before when we talked about unnormalized linear Transformers of 1991, but in addition to that scheme there's also the forget gate and the input gate and the output gate of the original LSTM. And that's a good thing, because in a matrix memory like that you can store much more stuff than what you usually can store in just the hidden units of an LSTM or any recurrent network. What

about recurrent state space models like Mamba? Mamba and similar models actually use key elements of LSTM. I don't know why they invented a new name for that. Like LSTM itself, the Mamba LSTM also has a forget gate, an input gate and an output gate, but it's a little bit like a reduced version of LSTM, because these gates depend only on the previous input, so not on the hidden state representing the history of everything that happened before. And that's why the Mamba LSTM cannot solve parity either, just

like Transformers cannot solve parity. Also, with Mamba the computation of the next state is just a linear mapping. But this reduced Mamba LSTM works well for natural language and for small models, and it also scales linearly, that's good, rather than quadratically like the quadratic Transformers. But apparently the xLSTM works much better. In 1991, is it fair to say that you were just too soon? You had all of the algorithms and the insight, but you just didn't have enough compute power. That's true, but we had a friend back then in

1991, and the friend was a trend, an old trend dating back at least to the year 1941, when Konrad Zuse built the world's first working general-purpose program-controlled computer in Berlin. His machine could do roughly one elementary operation per second, so for example one addition per second. Since then, however, every five years compute has gotten 10 times cheaper, and so we have greatly profited from that. So 45 years after Zuse built the Z3, this famous machine, in 1986, when I was working on my diploma thesis on artificial general intelligence, which

we now call AGI, based on meta-learning, on learning to learn, this trend was already old, and compute was a billion times cheaper than what Zuse had back then in 1941. The first desktop computers back then in the 80s allowed us to conduct experiments that seemed infeasible just a few decades earlier. However, by today's standards our models still were tiny. But in the last 30 years we have gained another factor of a million, so we now have a million billion times more compute per dollar than what Zuse had in

1941, and that's why now everybody has impressive AI on their smartphones, although the basic AI techniques running on these smartphones all date back to the previous millennium. So assuming that this trend won't break anytime soon, what's going to happen next? Then we'll soon have cheap computers with the raw computational power of a human brain, and a few decades later of all 10 billion human brains together. Our collective human intelligence is probably limited to fewer than 10 to the 30 meaningful elementary operations per second. Why? There are 10 to the 10 people, almost,

and none of them can do more than 10 to the 20 elementary operations per second, probably just a fraction thereof, because while I'm talking to you, yes, I have 100 billion neurons, but only a few million of them are really active. All of humankind cannot compute more than 10 to the 30 operations per second. So will this trend go on forever? It can't go on forever, because there are physical limits. There is this Bremermann limit, discovered in 1982, which says with one kilogram of mass you cannot compute more than 10 to the

51 operations per second. I have a kilogram of matter in here for thinking, and it's just a tiny, tiny fraction of that. Nevertheless, as soon as you have reached this limit, the acquisition of additional mass to build even bigger computers cannot proceed in an exponential way, because we have this 3D nature of our universe, and so we will have a polynomial growth factor afterwards as we are trying to expand into space and use more and more of these kilograms out there for building better computers. So if I understand, your
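The numbers quoted in this part of the conversation follow from simple exponent arithmetic. The sketch below only restates them, assuming the stated rule of a tenfold cost improvement every five years. The helper name `cheaper_by` is illustrative.

```python
def cheaper_by(years, factor=10, period_years=5):
    """Cost improvement after `years`, assuming a `factor`-fold gain
    every `period_years` years (the trend quoted in the conversation)."""
    return factor ** (years // period_years)

by_1986 = cheaper_by(1986 - 1941)      # 45 years after 1941: a billion
last_30_years = cheaper_by(30)         # another factor of a million
since_1941 = by_1986 * last_30_years   # a million billion

people = 10 ** 10                      # "10 to the 10 people, almost"
ops_per_brain = 10 ** 20               # generous upper bound per person
humankind = people * ops_per_brain     # 10 to the 30 operations per second
limit_per_kg = 10 ** 51                # quoted limit for one kilogram of mass

print(by_1986 == 10 ** 9, last_30_years == 10 ** 6, since_1941 == 10 ** 15)
print(humankind == 10 ** 30)
print("one kilogram at the limit vs. all of humankind:", limit_per_kg // humankind)
```

Under these figures, one kilogram of matter at the quoted limit would outcompute the upper bound for all of humankind by a factor of 10 to the 21.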

LSTM was also used, you know, for language models by many of the tech giants, you know, Apple and Microsoft and Google. Is that true? Yeah, many of these original language models started as LSTM-based systems. For example, there was this thing, Microsoft Tay I think was the name, and it was a chatbot basically, and it kept learning, and soon some of the users realized that you can retrain it to make it some sort of Nazi, and they quickly abandoned that one. But you

know, that's how it started. As I mentioned before, LSTM actually doesn't have certain limitations that you have in Transformers, but you cannot parallelize it as well, except that there are now new things such as xLSTM. Now, we were hinting at this a little while ago, but there's actually a striking similarity between the LSTM and the ResNet. We were talking about, they now call it the residual stream, this idea of kind of sharing information between the layers, and it actually bears striking resemblance to one of your earlier papers, which was called

the Highway Net. Could you tell us about that? Yes, we published the Highway Network in May 2015 with Rupesh Srivastava and Klaus Greff, my students, and the ResNet is basically a Highway Network whose gates are always open, always 1.0. The ResNet was published half a year later. The Highway Network has the residual connections of the LSTM, so of the recurrent LSTM, but the Highway Network is a feedforward network, and that's what allowed it to become really deep. And the Highway Network also has the

gates of the 2000 LSTM, so this combination was the basis for the Highway Network, and if you open the gates then it's a ResNet. One thing that I'm curious about is the role of depth in deep learning models. I recently interviewed Daniel Roberts from MIT, he wrote the deep learning theory book, and I also interviewed Simon Prince, who just put a great textbook out on deep learning. And it's quite mysterious, because, you know, in the infinite width, shallow networks, they can
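The relationship stated above, that a ResNet is a Highway Network with its gates held open at 1.0, can be checked with a small sketch. It assumes the general gated form y = T * H(x) + C * x with a transform gate T and a carry gate C. The `transform` function is a hypothetical stand-in for a layer's nonlinearity, and the gates are passed in as fixed numbers here, whereas in a real Highway Network they are learned functions of the input.

```python
import math

def transform(x):
    # Hypothetical stand-in for a layer's nonlinear transform H(x).
    return [math.tanh(2.0 * v) for v in x]

def highway_layer(x, transform_gate, carry_gate):
    # y = T * H(x) + C * x, applied element-wise.
    h = transform(x)
    return [t * hv + c * xv
            for t, hv, c, xv in zip(transform_gate, h, carry_gate, x)]

def residual_layer(x):
    # y = H(x) + x: the residual form.
    return [hv + xv for hv, xv in zip(transform(x), x)]

x = [0.5, -1.0, 2.0]
open_gates = [1.0, 1.0, 1.0]
closed = [0.0, 0.0, 0.0]

print(highway_layer(x, open_gates, open_gates) == residual_layer(x))  # True
print(highway_layer(x, closed, open_gates) == x)                      # True
```

With both gates fixed at 1.0 the highway layer reduces to the residual layer, and with the transform gate closed it passes its input through unchanged, which is what lets error signals travel through many layers.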

still presumably represent any computable function, but depth does something very interesting. It seems a bit mysterious. What's your take on it, and why is it useful? Yeah, I think you are referring to this old Kolmogorov-Arnold theorem or something, which shows that if you have enough hidden units, then a single layer is enough to implement any piecewise continuous function in a neural network. But then of course you need maybe really many hidden units, and that means you need a

lot of weights, and the more weights you need, the smaller your chance of generalizing. Because what you really want to find as you're trying to solve a problem, you want to find the simplest solution to that problem. You want to find the simplest neural network that can solve that problem, and in our world the simplest neural network that can solve that problem is often deep, especially when it comes to temporal processing. That's why you can have very simple recurrent neural networks that are really, really deep as they are

memorizing things that happened a thousand steps ago but that you need only a thousand steps later to compute the correct target value. Yes, so there's a spectrum between memorization and generalization, which is a bit like what we were talking about earlier with the difference between FSAs and Turing machines. This universal function approximation theorem basically said that you can approximate a function with a piecewise linear function to arbitrary precision based on how many basis functions you place, but the magic of deep neural networks is that we're moving into this regime of generalization,

which is being able to represent more possible future situations using much more abstract representations. Yes, and basically it all boils down to compression. If you have a huge network with only one single layer but lots of units and lots of weights, then you have lots of parameters, and you need a lot of information to encode all these parameters. If however your problem can be solved by something that has maybe 20 layers, but all of them are really tiny, then you have only very few weights and you maybe don't need

many bits of information to encode them. Then of course, in the sense of Occam's razor, that's much better, because then you will have a rather small, albeit deep, network that is working well on your training set, and you can hope it's also going to generalize much better on your test set. Interesting. And that Daniel Roberts guy from MIT, in his theory he took into account the aspect ratio of a neural network, which is to say the relationship between the width and the depth, and he
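The compression argument above, one huge single layer versus about 20 tiny layers, can be made tangible by counting parameters. The layer sizes below are hypothetical, chosen only for illustration, and a raw parameter count is a crude proxy for the information needed to encode a network, not its description length.

```python
def count_parameters(layer_sizes):
    """Weights plus biases of a fully connected network whose layer
    widths are given from input to output."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Hypothetical sizes: 100 inputs and 1 output in both cases.
wide_shallow = [100, 10_000, 1]         # one huge hidden layer
deep_narrow = [100] + [10] * 20 + [1]   # 20 tiny hidden layers

print(count_parameters(wide_shallow))   # 1020001
print(count_parameters(deep_narrow))    # 3111
```

If both networks fit the training set, the deep, narrow one needs several hundred times fewer numbers to write down, which is the Occam's razor point made in the conversation.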

was looking at the training dynamics, so he was modeling it as, you know, a sort of renormalization group, and he found that there was a kind of criticality, that you could find an optimal configuration of a neural network based on the width and the depth and the initialization to give optimal training dynamics. That's actually an interesting thing to think about, because there aren't many grand theories of deep learning, but I just wondered if you were familiar with that or, you know, just thought about, yeah, some of the theories. I'm not

familiar with that particular paper, but the way you phrase it, it seems to be conditioned on the traditional learning algorithm, which is gradient descent, right? Yes. Uh-huh. So generally speaking, often you want to have another learning algorithm which is very different from gradient descent, as you're trying to find a really simple solution, as you're trying to find a short program that computes the weights of the network that is solving the problem. We have many, many publications since the early 90s on exactly that. So you want to

compress the networks. You want to find networks that have low Kolmogorov complexity, low algorithmic information content; that is, the program that computes this network should be as small as possible. That's what we mentioned earlier when we discussed these super generalizers, which for example can learn parity from just three training examples of different sizes and generalize to everything that the world of parity offers, and that world is big, because there are infinitely many bit strings in it. So just to play that back, I

think that's a very interesting insight for the audience. You said the program which generates the neural network should have as small as possible minimum description length. That's right, yes. It reminds me of something I first did in 1994, and the paper was called Discovering Solutions with Low Kolmogorov Complexity, and then 1997, Discovering Neural Networks with Low Kolmogorov Complexity. There I didn't use gradient descent, no, I used the principles of optimal universal search to find neural networks that work on the training set, and it turns out they generalized on the test set