Mastering Chaos - A Netflix Guide to Microservices

2.3M views9762 WordsCopy TextShare

InfoQ

InfoQ Dev Summit Boston, a two-day conference of actionable advice from senior software developers h...

Video Transcript:

all right wow full room I'm just going to jump right in about 15 years ago my stepmother and we'll call her Francis for the sake of this conversation became ill she had aches through her whole body she had weakness she had difficulty standing and by the time they got her to the hospital she had paralysis in her arms and legs and it was a terrifying experience for her and obviously for us as well Well turns out she had something called gon Beret Syndrome has anybody heard of this before I'm just curious oh lots of people

good well not good hopefully you haven't had firsthand experience with it it's an autoimmune disorder and it's a really interesting one uh it's triggered by some kind of external factor which is fairly common with autoimmune disorders but what's interesting about this one is that the antibodies will directly attack What's called the myin sheath that wraps around the axon that long SE in nerve cells and it essentially eats away at it such that the signals become very diffuse and so you can imagine these symptoms of pain and weakness and paralysis are all quite logical when you

understand what's going on the good news is is that it's treatable uh either through plasmapheresis where they filter your blood outside your body or antibody therapy and that was successful the latter was successful for Francis what was interesting also was her reaction to this condition she became much more disciplined about her health she started eating right exercising more she started pictate doing chiong and taii to improve her health and she was committed to having this kind of event never happened to her again and what this event uh really underscores for me is how amazing the

human body is uh and how something as simple as the act of breathing or interacting with the world is actually a pretty morac ulous thing and it's actually an act of Bravery to a certain extent there are so many forces in the world so many allergens and bacterial infections and various things that can cause problems for us and so you might be wondering why the hell are we talking about this at a microservices uh talk but just as breathing is a miraculous Act of Bravery so is taking traffic in your microservice architecture you might have

traffic spikes you might have a Dos attack you might introduce changes into your own environment that can take the entire service down and prevent your customers from accessing it and so this is why we're here today we're going to talk about microservice architectures which have huge benefits but also talk about the challenges and the solutions that Netflix has discovered over the last seven years uh wrestling with a lot of these kinds of failures and conditions so I'm going to do a little bit of an introduction I'll introduce myself and I'm going to spend a little

bit of time LEL setting on microservice Basics so we're all using the same vocabulary as we go through this and then we'll spend the majority of our time talking about the challenges and solutions that Netflix has encountered and then we'll spend a little bit of time talking about the relationship between organization and architecture and how that's relevant to this discussion so by way of introduction hello I'm Josh Evans uh I started at Netflix uh had a career before this but I this is the most relevant part uh in 1999 joined Netflix about a month before

the subscription DVD service launched uh I was in the e-commerce space as an engineer and then a manager uh and got to see that transition from an e-commerce perspective in terms of how we integrated streaming into the existing DVD business 2009 I moved over right into the heart of streaming managing a team that's today called playback services this is the team that does DRM and manifest delivery recording Telemetry coming back from devices uh I also managed this this team during a time when we were going International getting onto every possible device in the world and

just this side project of moving from data center to Cloud so it's actually quite an interesting and exciting time for the last three years I've been managing a team called operations engineering where we focus on operational excellence engineering velocity uh monitoring and alerting so think about things like delivery uh chaos engineering a whole wide variety of functions to help Netflix Engineers be successful operating their own services is in the cloud so you'll see that there's an end date there I actually left Netflix about a month ago and today I'm actually thinking a lot about Ariana

Huffington uh catching up on my sleep for the first time in quite some time uh taking some time off spending time with my family trying to figure out what this work life balance thing looks like uh actually this will be mostly life balance which will be great big shift from what I was doing before uh Netflix as you know is the leader in subscription Internet TV service it produces es or licenses Hollywood independent and local content has a growing slate of pretty amazing originals and at this point is at about 86 million members uh globally

and growing quite rapidly Netflix is in about 190 countries today and has localized in tens of languages that's user interface subs and dubs thousands of device platforms and all of this is running on microservices on AWS so let's dig in and let's talk about the microservices uh from the abstract sense and I'd like to start by talking about what microservices are not I'm going to go back to 2000 my early days at Netflix when we were a web-based business where people put DVDs in their queue and had them shipped out and returned and all of

that so we had a pretty simple infrastructure this was in a data center Hardware based load balancer actually very expensive Hardware that we put put our that we used as our Linux hosts running a fairly standard configuration of an Apache reverse proxy and tomcat and this one application that we called Java web it's kind of everything that was in Java that our customers needed to access now this was connected directly to an Oracle database using jdbc which was then interconnect with other Oracle databases using database links the first problem with this architecture was that the

codebase for Java web was monolithic in the sense that everybody was contributing to one code base that got deployed on a weekly or bi-weekly basis and the problem with that was is when a change was introduced that caused a problem it was difficult to diagnose we probably spent well over a week troubleshooting a slow moving memory leak took about a day to happen we tried pulling out pieces of code and running it again to see what would happen and because so many changes were rolling into that one application this took an extended period perod of

time the database was also monolithic in even a more severe sense it was one piece of Hardware running one big Oracle database that we called the store database and when this went down everything went down and every year as we started to get into the holiday Peak we were scrambling to find bigger and bigger Hardware so that we could vertically scale this application probably one of the most painful pieces from the engineering perspective other than the outages that might have happened was the lack of agility that we had because everything was so deeply interconnected we

had Direct calls into the database we had many applications directly referencing table schemas and I can remember trying to add a column to a table was a big cross functional project for us so this is a great example of how not to build services today although this was the common pattern back in the late '90s and early 2000s so what is a microservice does uh anybody want to volunte hear their understanding or definition of what a microservice is sort of curious what I'll get here somebody some brave soul there we go what's a microservice data

own say it again Contex and data ownership context Bound in data ownership I like that I'm going to give you the Martin Fowler definition this is a good place to start that's definitely a key piece I'm going to read this to you so you don't have to microservice architecture Style is an approach to developing a single application as a suite of small Services each running in its own process and commuting with a lightweight mechanis lightweight mechanisms often an HTTP resource API so I think we all know this it's it's a somewhat abstract definition it's very

technically correct but it doesn't really give you enough of the flavor I think of what it means to build microservices when I think about it I think of it as this extreme reaction to that experience that I had back in 2000 with monolithic applications separ op ation of concerns being probably one of the most critical things that it encourages modularity the ability to encapsulate your data structures behind something so that you don't have to deal with all of this coordination scalability they tend to lend themselves to horizontal scaling if you approach it correctly and workload

partitioning because it's a distributed system you can take your work and break it out into smaller components which make it more manageable and then of course none of this really works well from my perspective unless you're running it in a virtualized and elastic environment it is much much harder to manage microservices if you're not doing it in this kind of environment you need to be able to automate your operations as much as possible and On Demand provisioning is a huge huge benefit that I wouldn't want to give up without we building this going back to

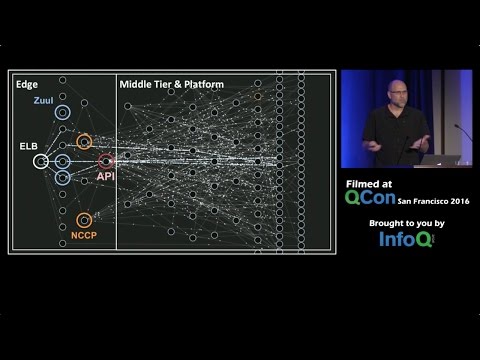

that theme of the human body and biology you can think of microservices also as organs in an organ system and these systems that then come together to form the overall organism so let's take a look at the Netflix architecture a little bit and see how that Maps there's a proxy layer that's behind the elb called Zuul that does dynamic routing there's a tier that was our Legacy tier called nccp that supported our earlier devices plus fundamental playback capabilities and there's our Netflix API which is our API Gateway that today is part really core to our

modern architecture calling out into all of the other services to fulfill requests for customers this aggregate set that we've just walked through we consider our Edge service there's some a few auxiliary Services as well like DRM that support this that are also part of the edge and then this soup on the right hand side is a combination of middle tier and platform services that enable the service to function overall to give you a sense of what these organs look like these entities here are a few examples we have an AB testing infrastructure and there's an

a B service that returns back values if you want to know what tests a customer should be in we have a subscriber service that is called from almost everything to find out information about our customers a recommendation system that provides the information necessary to build the list of movies that get presented to each customer as a unique experience and then of course there's platform services that perform the more fundamental capabilities routing to get to to so microservices can find each other Dynamic configuration cryptographic operations and then of course there's the persistence layers as well these

are the kinds of objects that live in this ecosystem now I also want to underscore that microservices are an abstraction we tend to think of them very simplistically as here's my nice horizontally scaled microservice and people are going to call me which is great if it's that simple but it's it's almost never that simple at some point you need data uh your service is going to need to pull on data for a variety of reasons it might be subscriber information it might be recommendations but that data is typically stored in your persistence layer and then

for convenience and this is a really a uh Netflix approach that I think many others have embraced but definitely specific to Netflix as well is to start providing client libraries and this were mostly Java based uh so client libraries uh for doing those basic data access uh types of operations now at some point as you scale you're probably going to need to front this with a cache um because the Service Plus the database may not perform well enough and so you're going to have a cash client as well and then now you need to start

thinking about orchestration so I'm going to hit the cache first then if that fails I need to go to the service which is going to call the database it'll return a response back and then of course you want to make sure you backfill the cach so that it's hot the next time you call it which might just be a few milliseconds later now this client library is going to be embedded within the applications that want to consume your microservice and so it's important to realize from their perspective this entire set of Technologies this whole complex

configuration is your microservice it's not this very simple stateless thing which is is nice from sort of a pure perspective but it actually has these sort of complex uh structures to them so that's the level set on microservices and now let's go ahead and let's dig in on the challenges that we've encountered over the last seven years and some of the solutions and philosophies behind that so I love junk food um and I love this image because I think in many cases the problems and solutions have to do with the habits that we have and

how we approach microservices and so the goal is to get us to eat more vegetables uh in many cases um we're going to break this down into four sort of primary areas that we're going to investigate there are four dimensions uh in terms of how we address these challenges dependency scale variance within your architecture and how you introduce change we're going to start with dependencies and I'm going to break this down into four use cases within dependencies in service requests this is the call from microservice A to microservice B in order to fulfill some larger

requests and just as we were talking about earlier with the nerve cells and the conduction everything's great when it's all working but when it's challenging it can feel like you're crossing a vast chasm in the case of a service calling another service you've just taken on a huge Risk by just going off process and off your box you could run into Network latency and congestion you could have Hardware failures that prevent routing of your traffic or the service you're calling might be in bad shape it might have had a bad deployment and have some kind

of logical bugs or it might not be properly scaled and so it can simply fail or be very slow and you might end up timing out when you call it the disaster scenario and we've seen this more than I'd like to admit is the scenario where you've got one service that fails with improper defenses against that one service failing it can Cascade and take down your entire service for your members and God forbid you deployed that bad change out to multiple regions if you have a multi- region strategy because now you've really just got no

place to go to recover you just have to fix the problem in place so to deal with this Netflix created histrix which has a few really nice properties it's got a structured way for handling timeouts and retries it has this concept of a fallback so if I can't call service B can I return some static response or something that will allow the customer to continue using the product instead of Simply getting an error and then the other big benefit of histrix is isolated thread pools and this concept of circuit if I keep hammering away at

service B and it just keeps failing maybe I should stop calling it and fail fast return that fall back and wait for it to recover so this has been a great Innovation for Netflix it's been used quite broadly but the fundamental question comes in now I've got all my historic settings in place and I think I've got it all right but how do you really know if it's going to work and especially how do you know it's going to work under at scale the best way to do this going back to our biology theme is

inoculation where you might take a Dead version of a virus and inject it to develop the antibodies to defend against the live version and likewise fault injection in production accomplishes the same thing and Netflix created fit the fault injection test framework in order to do this you can do synthetic transactions which are overridden basically at the accounter or the device level or you can actually do a percentage of live traffic so once you've determined that everything works functionally now you want to put it under load and see what happens with real customers and of course

you want to be able to test it no matter how you call that service whether you call it directly whether you call it indirectly you want to make sure that your requests are decorated with the right context so that you can fail it universally just as if the service was really down in production without actually taking it down so this is all great this is sort of a point-to-point perspective but imagine now that you've got 100 microservices and each one of those might have a dependency on other services or multiple other services there's a big

challenge about how do you constrain the scope of the testing that you need to do so that you're not testing millions of permutations of services calling each other this is even more important when you think about it from an availability perspective imagine you've only got 10 services in your entire microservice infrastructure and each one of them is up for four nines of avail availability that gives you 53 minutes a year that that service can be down now that's great as an availability number but when you combine them all the aggregate failures that would have happened

throughout that year you actually will end up with three nines of availability for your overall service and that's somewhere in the ballpark of between eight and nine hours a year a big difference and so to address this Netflix defined this concept of critical microservices the one ones that are necessary to have basic functionality work can the customer load the app browse and find something to watch it might just be a list of the most popular movies hit play and have it actually work and so we've taken this approach and identified those Services as a group

and then created fit recipes that essentially Blacklist all of the other services that are not critical and this way we can actually test this out and we have tested this for sure short periods of time in production to make sure that the the service actually functions when all those dependencies go away so this is a much simpler approach to trying to do all of the point-to-point interactions and has actually been very successful for Netflix and finding critical errors so let's now talk about client libraries shifting gears completely when we first started moving to the cloud

we had some very heated discussions about client libraries there were a bunch of folks uh who had done great work from Yahoo had who had come to Netflix who were espousing the model of barebones rest just call the service don't create any client libraries don't deal with all of that um just go Bare Bones and yet at the same time there are really compelling Arguments for building client libraries if I have common logic and common access patterns for calling my service and I've got 20 or 30 different dependencies do I really want every single one

of those teams writing the same code or slightly different code over and over again or do we want to Simply consolidate that down into common business logic and common access patterns and this was so compelling that this is actually what we did now the Big Challenge here is that this is a slippery slope back towards having a new kind of monolith where now our API Gateway in this case which might be hitting a 100 Services is now running a lot of code in process that they didn't write this takes us all the way back to

2000 running lots of code in the the same common codee base it's a lot like a parasitic infection if you really think about it this little nasty thing here is not the size of Godzilla it's not going to take down Tokyo but it will infest your intestines it'll attach to your blood vessels and drink your blood like a vampire this is called a hookworm and a full-blown infestation can actually lead to pretty severe anemia and make you weak and likewise client libraries can do all kinds of things that you have no knowledge of that might

also weaken your service they might consume more Heap than you expect they might have logical defects that cause failures within your application and they might have transitive dependencies that pull in other libraries that conflict in terms of versions and break your builds and all of this has happened especially with the API team because they're consuming so many libraries from so many teams and there's no cut and dry answer here there's been a lot of discussion about this it's been somewhat controversial even over the last year or so the general consensus has been though to try

to simplify those libraries there's not a desire to move all the way to that barebones rest model but there is a desire to limit the amount of logic and Heap consumption happening there and you want to make sure that people have the ability to make smart thoughtful decisions on a case-by Case basis so we'll see how this all unfolds this is sort of an ongoing conversation and mostly I'm bringing up here so all of you can be sort of thoughtful about these trade-offs and understand that now persistence is something that I think Netflix got right

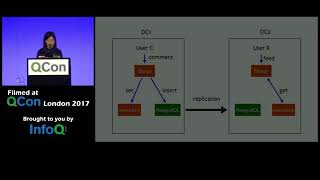

early on there isn't there isn't a war story here about how we got it wrong um and let me tell you how we got it right we got it right by starting off thinking about the right constructs and about cap theorem I assume how many people are not familiar with cap theorem I'm just sort of curious Okay so we've got a few let's go ahead and level set here this is the simplest definition that that allowed me to get my brain around what this really was in the presence of a Network partition you must choose

between consistency and availability in this case here you might have a service running a network a and it wants to write to databases a copy of the same data into into databases that are running in three different networks or in AWS this might be three different availability zones the fundamental question is what do you do when you can't get to one or more of them do you just fail and give back an error or do you write to the ones you can get to and then fix it up afterwards Netflix chose the latter and embraced

this concept of eventual consistency where we don't expect every single right to be read back immediately from any one of the sources that we've written the data to and Cassandra does this really well and has lots of flexibility so the client might write to only one node which then orchestrates and writes to multiple nodes and there's a concept of local Quorum where you can say I need this many nodes to respond back and say that they've actually committed the change before I'm going to assume that it's written out and that could be one node if

you really want to take on some risk in terms of the durability but you're willing to get very high availability or you can dial it up the other way and say I want it to be all the nodes that I want to write to so let's move on I'm just going to briefly talk about infrastructure because there's this is a whole topic unto itself but at some point your infrastructure whether it's AWS or Google or things that you've built built yourself is going to fail the point here is not not that Amazon can't keep their

services up they're actually very very good at it but that everything fails and the the the mistake if I were going to put blame on anybody in terms of what happened in Christmas Eve of 2012 when the elb control plane went down was that we we put all our eggs in one basket we put them all in Us East one and so when there was a failure there and by the way we've induced enough of our own to know that this is also true there was no place to go and so Netflix developed a multi-

region strategy with three AWS regions such that if any one of them failed completely we can still push all the traffic over to the other surviving regions so I did a talk on this earlier in the year uh so I would encourage you to take a look at it if you want to get really deep into the multi- region strategy and all the reasons that it evolved the way that it did um but at this point I'm going to put a pin in this I'm going to move forward so let's talk about scale now and

with scale I'm going to give you three cases uh the stateless service scenario the stateful service scenario these sort of fundamental components and then the hybrid similar to the diagram that we were looking at earlier where it's an orchestrated set of things that come together okay another question what's a stateless service anybody have an idea want to throw out their idea their definition of it ah brave soul good okay that's close that's interesting um I start with it's not a cach or a database you're not storing massive amounts of data you will frequently have frequently

accessed metadata cached in memory so there's the nonvolatile nature of that or configuration information um typically you won't have instance Affinity where you expect a customer to stick to a particular instance repeatedly and the most important thing is that the loss of a node is essentially a non-event it's not something that we should spend a lot of time worrying about and it's it and it recovers very quickly so you should be able to boot and spin up a new one to replace a bad node relatively easily and the best strategy here is one going back

to biology is one of replication just as with mitosis we can create cells on demand our cells are constantly dying and constantly being replenished autoscaling accomplishes this I'm sure most people are familiar with autoscaling but I can't underscore enough how fundamental this is and how much this is table Stakes for running microservices in a cloud you've got your Min and your max you've got a metric you're using to determine whether you need to scale up your group and then when you need to have a new instance spun up you simply pull a new image out

of S3 and you spin it back up the advantages are several you get compute efficiency because you're typically using on demand capacity your nodes get replaced easily and most importantly actually is when you get traffic spikes if you get a DS attack if you introduce a performance bug autoscaling allows you to absorb that change while you're figuring out what actually happens so this has saved us many many times strongly recommend it and then of course you want to make sure it always works by applying chaos chaos monkey was our very first sort of chaos tool

and it simply confirms that when a node dies it everything still continues to work this has been such a non-issue for Netflix since we've implemented chaos monkey I kind of want to knock wood as I say that um this just doesn't take our service down anymore losing an individual node is is very much the non-event that we want it to be so let's jump in let's talk about stateful services and they are the opposite obviously of a uh stateless one it is databases in caches it is sometimes a custom app and we did this a

custom app that has internal ized caches but of like large amounts of data and we had a service tier that did this and as soon as we went multi- region and tried to come up with generic strategies for replicating data this was the biggest problem we had so I strongly recommend you try to avoid storing your business logic and your state all within one application if you can avoid it now in this case what's meaningful is again the opposite of stateless which is that the loss of a node is a notable event it may take

hours to replace that node and spin up a new one so it is something that you need to be much more careful about so I'm going to talk about and I'm going to sort of tipping my hand here two different approaches for how we dealt with caching to sort of underscore this and again we as I said we had a number of people who were from Yahoo who were uh who had experience using ha proxy and Squid caches and a pattern where they had dedicated nodes for customers so a given customer would always hit the

same node for the cache and there was only one copy of that data the challenge is of course when that node goes down you've got a single point of failure and those customers would be unable to access that service but even worse because this was in the early days we didn't have proper historic uh settings in place we didn't have the bulk heading and the separation and isolation of thread pools and so I can still remember being on a call where one node went down and all of Netflix went down along with it it took

us three and a half hours to bring it back up to wait for that cash to refill itself before we could fulfill requests so that's the anti- pattern the single point of failure pattern and going back to biology redundancy is fundamental we have two kidneys so that if one fails we still have another one we have two lungs same thing now it does give us increased capacity but we can live with only one of them and just as um your human body does that Netflix has approached an architecture using a technology called evach and evach

is essentially a wrapper around mcash D it is sharted similar to the squid caches but multiple copies are written out to multiple noes so every time a write happens not only does it write it out to multiple nodes but it writes them into different availability zones so it sprays them across and separates them across the network partitions and likewise when we do reads reads are local because you want that local efficiency but the application can fall back to reading across availability zones if it needs to to get to those other nodes this is a success

pattern that has been repeated throughout um EV cache is is uh used by virtually every service that needs a cache today uh at Netflix uh and it's been highly useful to us uh in lots of good ways now let's talk about the combination of the two this this is the scenario we talked about earlier where you've got a hybrid service it's very easy in this case to take EV Cas for granted let me tell you why it can handle 30 million requests per second across the Clusters we have globally which is 2 trillion requests per

day it stores hundreds of billions of objects in tens of thousands of M casd instances and here's the the biggest win here it consistently scales in a linear way such that requests can be returned within a matter of milliseconds no matter what the load is obviously you need to have enough nodes but it scales really well and uh we had a scenario several years ago where our subscriber service was leaning on EVC a little bit too much and this is another anti pattern worth talking about it was called by almost every service I mean everybody

wants to know about the subscriber and you know what's their customer ID and how do I go access some other piece of information it had online and offline calls going to the same cluster the same evach cluster so batch processes doing recommendations looking up subscriber information plus the real-time call Path and in many cases it was called multiple times even with the same application within the life cycle of a sing single request it was treated as if you could freely call the cash as often as you wanted to so that at Peak we were seeing

load of 800,000 to a million requests per second against the service tier the fallback was a logical one when you were thinking about it from a oneoff perspective I just got a cash Miss let me go call into the service the problem was the fallback also when the entire EV cash layer went down was still a fallback to the service and the database and that's the anti pattern the service in the database couldn't possibly handle the load that EVC was shouldering and so the right approach uh was to fail fast so with this excessive load

we saw EV Cas go down it took down the entire subscriber service and the solutions were several the first thing is is stop hammering away at the same set of systems for batch and real time do request level caching so you're not repeatedly calling the same service over and over again as if it was free make that first hit expensive and the rest of them free throughout the life cycle of the request and something we haven't done yet but will uh very likely do is embed a secure token within the devices themselves that they pass

with their requests so if the subscriber service is unavailable you can fall back to that data stored in that encrypted token it should have enough information to identify the customer and do the fundamental operations for uh keeping the service up for that customer they can get some kind of reasonable experience and then of course you want to put this under load using chaos exercises using tools like fit now let's move on and let's talk about variance this is Variety in your architecture and the more variance you have the greater your challenges are going to be

because it increases the complexity of the environment you're managing I'm going to talk about two use cases one is operational drift that happens over time the other is the introduction that we've had recently over the last few years of new languages and containers within our architecture operational drift is something that's unintentional we don't do this on purpose but it does happen quite naturally drift over time looks something like you know setting your alert alert thresholds and keeping those maintained because those will change over time your timeouts and your retry settings might change maybe you've added

a new batch operation that should take longer your throughput will likely degrade over time unless you're constantly squeeze testing because as you add new functionality that's likely to slow things things down and then you can also get this drift sort of across Services let's say you found a great practice for keeping Services up and running but only half of your teams have actually embraced that practice so the first time we go and reach out to teams and say hey let's go figure this out let's go get your alerts all tuned let's do some squeeze testing

let's let's get you all tuned up and make sure service is going to be highly reliable and well performant and usually we get a pretty enthusiastic response on that first pass but humans are not very good at doing this very repetitive sort of manual stuff uh most people would rather be doing something else or they need to do their day job like go and build product for their product managers the next AB test we need to roll out and so the next time we go we tend not to get that same level of enthusiasm when

we say hey sorry but you're going to need to go do this again uh you can really take a lesson again from biology with this concept of autonomic the the autonomic nervous system there's lots of functions that your body just takes care of and you don't have to think about it you don't have to think about how you digest food you don't have to think about breathing or you would die when you fell asleep and likewise you want to make sure you set up an environment where you can make as many of these best practices

subconscious or not even not required for people to really spend a lot of time thinking about and the way that we've done that at Netflix is by building out a cycle of continuous learning and Automation and typically that learning comes from some kind of incident we just had an outage we get people on a call we hopefully alleviate customer pain we do an incident review to make sure that we understand what happened and then immediately do some kind of remediation hopefully to make sure at least tactically that that works well but then we do some

analysis is this a new pattern is there a Best practice that we can derive from this is this a recurring issue where if we could come up with some kind of solution it would be very high impact and then of course you want to automate that where ever possible and then of course you want to drive adoption to make sure that that gets integrated this is how knowledge becomes code and gets integrated directly into your microservice architecture over the years we've accumulated a set of these best practices we call it production ready this is a

checklist and it's a program within Netflix virtually every single one of these has some kind of automation behind it and a continuous Improvement model where we're trying to to make them better whether that's having a great alerting strategy making sure you're using Auto scaling using chaos monkey to test out your stateless service doing red black pushes to make sure that you can roll back quickly and one of the really important ones staging your deployments so that you don't push out bad code to all regions simultaneously of course all of these are automated and now I'm

going to jump over I'm going to talk about polyglot and containers this is something that's come about really just in the last few years and this is an intentional form of variance these are people people consciously going I want to introduce new technologies into the microservice architecture when I first started managing operations engineering about three years ago we came up with this construct of the paved road the paveed road was a set of sort best breed technologies that worked best for Netflix with Automation and integration sort of baked in so that our developers could be

as agile as possible that if they got on the pave Road they were going to have a really really efficient experience we focused on Java and what I'm now going to call barebones ec2 which is a bit of an oxymoron um but basically using ECT as ec2 as opposed to Containers while we were building that out and very proud of ourselves for getting this working well our internal customers our engineering customers were going off-road and building out their own paths started innocuously enough with python doing operational work made perfect sense we had some back office

applications written in Ruby and then things got sort of interesting when our web team said you know we're going to abandon the jvm and we're actually going to rewrite the web application in node js that's when things got very interesting and then as we add it in Docker Things become very challenging now the reasons we did this were logical it made a lot of sense to embrace these Technologies however things got real when we start talking about putting these Technologies into the critical path for our customers and it actually makes a lot of sense to

do so let me tell you why so the API Gateway actually had a capability or has a capability to integrate groovy scripts that can act as endpoints for the UI teams and they can verion every single one of those scripts so that as they make changes they can deploy a change out into production that has a into onto devices out in the field and have that sync up with that endpoint that's running within the API Gateway but this is another example of the monolith lots of code running in process cess with a lot of variety

and people with different understandings of how that service works and we had situations where endpoints got deleted or where the script or some script went rogue generated too many versions of something and ate up all of the memory available on the API service so again a monolithic pattern to be avoided and so the logical solution is to take those endpoints and push them out of the API service and in this case the plan is to move those into node.js little nodejs applications running in Docker containers and then those would of course call back into the

API service and now we've got our separation of concerns again now we can isolate any breakage or challenges that are introduced by those node applications now this doesn't come with a CO come without a cost in fact there's a rather large cost that comes with these kinds of changes and so it's very important to be thoughtful about it the UI teams that were using the groovy scripts were used to a very efficient model for how they did their development they didn't have to spend a lot of time managing the infrastructure they got to write scripts

check them in and they were done and so trying to replicate that with a nodejs and Docker container methodology takes a substantial amount of additional work the insight and triage capabilities are different if you're running in a container and you're asking about how much CPU is being consumed or how much memory you have to treat that differently you have to have different tooling and you have to instrument those applications in different ways we have a base Ami that's was pretty generic that was used across all of our applications now that's being fragmented out and more

specialized node management is huge there is no architecture out there or no technology out there today that we can use out of the box that allows us to manage these applications the way that we want to in the cloud and so there's an entirely new tier called Titus being built that allows us to do all the workload management and the equivalent of autoscaling and node rep placement and all of that so Netflix is making a fairly huge investment in that area and then all the work we did over the years running in the jvm with

our platform code uh making people efficient by providing a bunch of services now we have decisions to make do we duplicate them do we not provide them and let those teams running in node have to write their own direct rest calls and manage all of that themselves uh so that's being discussed and there's a certain amount of compromise happening there some of the platform functionality is going to be written natively in node for example and then of course anytime you introduce a new technology into production we saw this when we move to the cloud we

saw this every time we've done a major re architecture you're going to break things and they things will break in interesting and new ways that you haven't yet encountered and so there's a learning curve before you're actually going to become good at this and so rather than one pave road we now have a proliferation of pave roads and this is a real challenge for the teams that are centralized that that are finite that are trying to provide support to the rest of the engineering organiz a so we had a big debate about this a few

months ago and The Stance where we landed was the most important thing was to make sure that we really raised awareness of cost so that when we're making these architectural decisions people are well informed and they can make good choices we're going to constrain the amount of support and focus primarily still on jvm but obviously this new use case of node and doncker is pretty critical and so there's a lot of energy going into supporting that and then of course logically logically we'd have to prioritize by impact with a finite number of people who can

work on these types of things and where possible seek reusable Solutions delivery is relatively generic so you can probably support a wide variety of languages and platforms with delivery um so that's one example there one other example is client libraries that are relatively simple can potentially be autogenerated so you can create a Ruby version and a python version and a Java version so we're seeking those kinds of solutions again this is one of those places where there's no one cut and dry right way to do this um hopefully this is good food for thought if

you're dealing with these kinds of situations so let's talk about that last element now change what we do know is that when we change when we are in the office when we are making changes in production we break things this is outages by day of week lo and behold on the weekend things tend to break less here's the really interesting one by time of day 9:00 in the morning boom time to push changes time to break Netflix so um we know that that happens um and so the fundamental question here is how do you achieve

velocity but with confidence how do I move as fast as possible and without worrying about breaking things all the time the way that we address that is by creating a new delivery platform this replaced Asgard which was our Workhorse for many years this new platform was a global Cloud management platform but also a delivery an automated delivery system and here's what's really critical here spinner was designed to integrate best practices such that as things are deploying out into production we can integrate these Lessons Learned these automated uh components directly into the path for delivery in

the pipeline we see here it's using two things that we value highly automated Canary analysis where you put a trickle of traffic or some traffic into a new version of the code with live production traffic and then you determine whether or not the new code is as good or better than the old code and Stage deployment ments where you want to make sure you deploy uh I'm getting the 5 minute sign you want to make sure you deploy one region at a time so that if something breaks you can go to other regions you can

see the list here of other functions that are integrated and long term the production ready checklist we talked about earlier is fodder for a whole wide variety of things that longterm should be integrated into the delivery pipeline I'm cheating a little bit here because of time constraints luckily I can um and I did a talk last year reinvent that might be of interest to you if you really want to dig into how these functions deeply integrate with each other how does the production ready performance and reliability chaos engineering integrate with Spiner and continuous delivery monitoring

systems Etc so I would encourage you to check it out now I'm going to close this out with a short story about organization and architecture in the early days there was a team called electronic delivery that's what actually the first version of streaming was called electronic delivery we didn't have a term streaming back then in fact originally we were going to do download and had a hard drive in some kind of device and the very first version of the Netflix ready device platform looks something like this it had fundamental capabilities like networking capabilities built in

the platform functionality around security activation playback and then there was a user interface the user interface was actually relatively simple at the time it was using something called a que reader where you'd go to the website and add something into your queue and then go to the device and see if it show showed up what was also nice is this was developed under one organization which was called electronic delivery and so the client team and the server team were all one organization so they had this great tight working relationship it was very collaborative and the

design that they had developed or that we had developed was XML pay XML base payloads custom response codes within those XML responses and versioned firmware releases that would go out over long Cycles now in parallel the Netflix API was created for the DVD business to try and stimulate applications external applications that would drive traffic back to Netflix we said let a thousand flowers bloom we hoped that this would be wildly successful it really wasn't it didn't really generate a huge amount of value to Netflix however the Netflix API was well poised to help us out

with our UI Innovation it contained content metadata so all the data about what movies are available and could generate lists and it had a generalized rest API Json based schema HTTP based response codes this starting to feel like a more modern architecture here and it did Du as an ooff security model because that's what was required at the time for external apps that evolved over time to something else but what matters here is that from a device perspective we now had fragmentation across these two tiers we now had two Edge Services functioning in very very

different ways one was rest base Json ooth the other was RPC XML and a Custom Security mechanism for dealing with tokens and there was a firewall essentially between these two teams in fact because the API originally wasn't as well scaled as nccp there was a lot of frustration between teams every time API went down my team got called and so there was some friction there we really wanted them to be able to get that up and running but this distinction this unique Services protocols schemas security models meant that God forbid you were a client developer

and you had to span both of these worlds and try to get work done you were switching between completely different contexts and we actually had examples where we wanted to be able to do things like return limited duration licenses back with the list of movies that were coming back for the user interface so when I you click through and hit play it was instantaneous as opposed to having to make another roundtrip call to do DRM so because of this I had a conversation with one of the engineers a very senior engineer at Netflix and I

asked him what's the right long-term architecture can we do an exercise here and go figure this out and this is a gentleman named out and of course Very thoughtfully the first question he had to me seconds later was do you care about the organizational implications what happens if we have to integrate these things what happens we have to break the way we do things well this is very relevant to something called Conway's law does anybody I'm hearing some laughter so whoever laughed first uh tell me what Conway's law is relationship your servic relationship all right

good here's the sort of more sort of uh detailed explanation organizations which Design Systems are constrained to produce designs which are copies of the communication structures of these organizations they're very abstract I like this one a little better any piece of software reflects the organizational structure that produced it here's my favorite one you have four teams working on a compiler you'll end up with a four pass compiler so Netflix had a forast compiler uh that's where we were and the problem with this is this is the tail wagging the dog this is not Solutions first

this is organization first that was driving the architecture that we had and when we think about this this is this is essentially going back to this illustration we had before we had our Gateway we had nccp which is handling Legacy devices plus playback support we had API this was just a mess and so the architecture we ended up developing was something we call Blade Runner because we're talking about the edge services and the capabilities of nccp became decomposed and integrated directly into the Zu proxy layer the API Gateway and that appropriate pieces were pushed out

into new smaller microservices that that handle more fundamental capabilities like security and features around playback like subtitles and dubs and serving metadata so the Lessons Learned here this gave us greater capability and it gave us greater agility long term by unifying these things and thinking about the client and what their experience was were able to produce something much more powerful and we ended up refactoring the organization in response I ended up actually moving on my whole team got folded under the Netflix API team and that's when I moved over to operations engineering and that was

the right thing to do for the business Lessons Learned Solutions first team second and be willing to make those organizational changes so I'm going to briefly recap I have zero minutes I'm going to go over just a couple of minutes uh just so we can wrap up cleanly here so microservice architectures are complex and organic and it's best to think about them that way and their health depends on a discipline and about injecting chaos into that environment on a regular basis for dependencies you want to use circuit breakers and fallbacks and apply chaos you want

to have simple clients eventual consistency and a multi- region failover strategy for scale Embrace autoscaling please it's so simple and it's a great benefit reduce single points of failure partition your workloads have a failur driven design like embedding requests and doing request level caching and of course do chaos but again chaos under load to make sure that what you think is true is actually true for variance engineer your operations as much as possible to make those automatic understand and socialize the cost of variance and prioritize the support if you have a centralized organization and most

most organizations do prioritize by impact to make sure that you're you're as efficient as possible on change you want automated delivery and you want to integrate your best practices on a regular basis and again Solutions first team second there's a lot of technologies that support these strategies that Netflix has open sourced if you're not familiar with it I think a lot of people are um go check out Netflix OSS and also check out the Netflix Tech blog where there are regular announcements about how things are done at Netflix how things are done at scale announcements

about new open- source tools like visceral which is the tool that generated the visuals we've been looking at throughout and I think we're out of time at this point um do we have time for questions or should I just take it out outside if you can all right well I push the limits thank you very much thank you everybody

Related Videos

48:30

Design Microservice Architectures the Righ...

InfoQ

714,284 views

49:10

Scaling Push Messaging for Millions of Dev...

InfoQ

95,493 views

49:54

How Slack Works

InfoQ

153,868 views

55:08

Introduction to Microservices, Docker, and...

James Quigley

1,636,547 views

20:52

Microservices with Databases can be challe...

Software Developer Diaries

70,946 views

38:44

When To Use Microservices (And When Not To...

GOTO Conferences

545,281 views

53:14

Mastering Chaos - A Netflix Guide to Micro...

DevOps Federation

3,827 views

40:44

Top 5 techniques for building the worst mi...

NDC Conferences

235,115 views

52:07

Managing Data in Microservices

InfoQ

142,649 views

![Microservices Full Course [2024] | Microservices Explained | Microservices Tutorial | Edureka](https://img.youtube.com/vi/iWJzmV0xRVE/mqdefault.jpg)

3:57:25

Microservices Full Course [2024] | Microse...

edureka!

59,238 views

57:43

AWS re:Invent 2019: A day in the life of a...

AWS Events

66,014 views

50:06

The Many Meanings of Event-Driven Architec...

GOTO Conferences

632,276 views

49:10

Using sagas to maintain data consistency i...

Devoxx

306,009 views

1:35:43

Architecture Evolution - Discovering Bound...

Victor Rentea

3,890 views

51:31

Scaling Facebook Live Videos to a Billion ...

InfoQ

90,652 views

1:09:50

Developing microservices with aggregates -...

SpringDeveloper

277,066 views

51:12

Scaling Instagram Infrastructure

InfoQ

281,762 views

24:56

Martin Fowler – Microservices

Thoughtworks

213,036 views

36:45

Rich Harris - Rethinking reactivity

You Gotta Love Frontend

321,175 views

10:43

Monolithic vs Microservice Architecture: W...

Alex Hyett

89,428 views