Computer Scientist Explains Machine Learning in 5 Levels of Difficulty | WIRED


WIRED

WIRED has challenged computer scientist and Hidden Door cofounder and CEO Hilary Mason to explain ma...

Video Transcript:

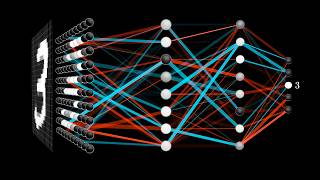

hi i'm hilary mason i'm a computer scientist and today i've been asked to explain machine learning in five levels of increasing complexity machine learning gives us the ability to learn things about the world from large amounts of data that we as human beings can't possibly study or appreciate so machine learning is when we teach computers to learn patterns from looking at examples in data such that they can recognize those patterns and apply them to new things that they haven't seen before hi hi i'm hilary what's your name i'm brynn do you know what machine learning

means have you heard that before no so machine learning is a way that we teach computers to learn things about the world by looking at patterns and looking at examples of things so can i show you an example of how a machine might learn something sure so is this a dog or a cat it's a dog and this one a cat and what makes a dog a dog and a cat a cat well dogs are very playful i think more than cats cats lick themselves more than dogs i think do you think if we look

at these pictures do you think maybe we could say well they both have pointy ears but the dogs have a different kind of body and the cats like to stand up a little different do you think that makes sense yeah yeah what about this one a dog a cat i think a cat because it's more skinny and also its legs are like really tall and its ears are a little pointy this one's a jackal and it's actually a kind of dog but you made a good guess that's what machines do too they make guesses is

this a cat or a dog none none what is it it's humans and how did you know that it's not a cat or a dog because cats and dogs because they walk on their paws and their ears are like right here not right here and they don't wear watches and so you did something pretty amazing there because we asked the question is it a cat or a dog and you said i disagree with your question it's a human so machine learning is when we teach machines to make guesses about what things are based on looking

at a lot of different examples and i build products that use machine learning to learn about the world and make guesses about things in the world when we try to teach machines to recognize things like cats and dogs it takes a lot of examples we have to show them tens of thousands or even millions of examples before they can get even close to as good at it as you are do you have tests in school yeah i have after every unit we have a review and then we have a test are those like the practice

problems you do before the test well just like everything that's going to be on the test is on the review which means that in the test you're not seeing any problems that you don't know how to solve as long as you did all your practice right yeah so machines work the same way if you show them a lot of examples and give them practice they'll learn how to guess and then when you give them the test they should be able to do that so we looked at eight pictures and you were able to answer really

quickly but what would you do if i gave you 10 million examples would you be able to do that so quickly yeah so one of the differences between people and machines is that people might be a little better at this but can't look at 10 million different things now that we've been talking about machine learning is this something you want to learn how to do um kind of because i kind of want to become a spy and we used to do coding so i may be kind of good and machine learning is a great way

to use all those math skills all those coding skills and would be a super cool tool for a spy hello hi are you a student lucy yes i just finished ninth grade congratulations thank you it's very exciting have you ever heard of machine learning before i'm going to assume that it means humans being able to teach machines or robots how to learn themselves that's right when we teach machines to learn from data to build a model from that data or a representation of that and then to make a prediction one of the places we often

find machine learning in the real world is in things like recommendation systems so do you have an artist do you really like yeah melanie martinez so i'm gonna look up melanie martinez and it says here if you like melanie martinez one of the other songs you might like is by aura do you know who that is i do not so let's listen to a hint of this song okay all right so why do you think spotify might have recommended that song well i know that in melanie martinez's music she used a lot of the filtered

voice to make it sound very deep and low and that song had that and that's actually a really interesting thing to think about because that creepy vibe is something that you can perceive and i can perceive but it's actually really hard to describe to a machine what do you think might go into that pitch of the music if it's really low or if it's super high it could know that what can the machine understand it's a great question the machine can understand whatever we tell it to understand so there might be a person thinking about

things like the pitch or the pacing or the tone or sometimes machines can figure out things about music or images or videos that we don't tell it to discover but that it can learn from looking at a lot of different examples why do you think companies might use machine learning i think things like facebook or instagram they probably use it to target ads sometimes the ads you see are really uncanny and i think that's because they're based on so much data they know where you live they know where your device is it's also important to

realize that people in aggregate are actually pretty predictable like when we talk to each other we like to talk about the novel things like here we're having this conversation we don't do this every day but we probably still eat breakfast we're gonna eat lunch we're gonna eat dinner you probably are going to the same home you go to most of the time and so they're able to take that data that we already give them and make predictions based on that as to what ads they should show us so you're saying i give them enough data

as it is about what i might be talking about or thinking about that they can read my mind but just use the data that i've already given them and it almost seems like that's why yes to do machine learning we use something called algorithms have you heard of algorithms before a set of steps or a process carried out to complete something that's right so do you think that we've been able to teach machines enough so that they can do things that even we can't do and on the opposite side of that do you think there

are things that we can do that a machine might never be able to do so there are things that machines are really great at that humans are actually not great at imagine watching every video posted to tiktok every day so we just don't have enough time to do that at the rate at which we can actually watch those videos but a machine can analyze all of them and then make recommendations to us and then thinking about things that machines are bad at and people are good at people are really great with only one or

two examples of learning something new and incorporating that into our model of the world to make good decisions whereas machines often need tens of thousands of examples and that's not even getting into things like good judgment because we care about people because we can imagine a future that we want to live in that doesn't exist today and that's something that is still uniquely human machines are great at predicting based on what they've seen in the past but they're not creative they're not going to invent they're not going to you know really change where we're going

to go that's up to us i'm sunny and what are you majoring in i study math and computer science so in your studies have you learned about machine learning yeah i have so to me machine learning is essentially exactly what it sounds like it's trying to teach a machine specifics about something by inputting a lot of data points and slowly the machine will build up knowledge about it over time for example my gmail program i assumed that there would be a lot of like machine learning models happening at once right absolutely and that's a great

example because you have models that are operating to do things like figure out if a new email is spam or not so what would you think about if you were looking at an email and trying to decide if it went in one category or another i'd probably look at certain keywords maybe if the recipient and sender had exchanged emails before and what generally those fell into in the past so these are things we would call features we go through a process where we do feature engineering where somebody looks at the example and says okay these

are the things that i think might allow us to statistically tell the difference from something in one category versus another so for example perhaps you don't speak russian you start getting a lot of email in russian obviously like the features that you just described are features which a person would have had to think about are there features which like the machine itself could learn this is a great question because it really gets to the difference between some of our different tools in our machine learning tool belt in addressing problems like this so if we were

to use a supervised learning classic classification approach a person would need to think about those features and creatively come up with them an approach we call the kitchen sink approach which is just try everything you can possibly think of and see what works unsupervised learning where we don't have labeled data and we're trying to infer some structure out of the data as you're projecting that data into a space and looking for things like clusters and there's a bunch of really fun math about how you do that how you think about distance and by distance i

mean that if we have two data points in space how do we decide if they're similar or not and how do the algorithms themselves usually differ between unsupervised and supervised learning supervised learning we have our labels and we're trying to figure out what statistically indicates if something matches one label or another label unsupervised learning we don't necessarily have those labels that's the thing we're trying to discover so reinforcement learning is another technique that we use sometimes you can think about it like a turn in a game and you can play you know millions and millions

of trials so that you're able to develop a system that by experimenting with reinforcement learning can eventually learn to play these games pretty successfully deep learning which is essentially using neural networks and very large amounts of data to eventually iterate on a network structure that can make predictions with reinforcement learning versus deep learning it seems to me that reinforcement learning is it sort of like the kitchen sink approach that you were talking about earlier where you're just kind of trying everything it is but it also thrives in environments where you have a decision point a

palette of actions to choose from and it actually comes historically from trying to train a robot to navigate a room if it bonks into this chair it can't go forward anymore and if it falls into that pit you know it's not going to succeed but if it keeps exploring it'll eventually get to the goal oh like roombas yes oh wow i didn't realize it was that deep almost is there a situation which you'd want to use a deep learning algorithm over a reinforcement learning algorithm so typically you would choose deep learning if you have sufficient

high quality data hopefully labeled in a useful way if you really are happy not to necessarily understand or be able to interpret what your system is doing or you're willing to invest in another set of work afterwards to understand what the system is doing once you've already trained it and this also comes down to the fact that some things are actually really easy to solve with linear regression or simple statistical approaches and some things are impossible what would be the outcome if you were to choose the quote unquote wrong approach you build a system that

could actually be useless so years ago i had a client there was a big telecom company and they had a data scientist who built a deep learning system to predict customer churn actually was very accurate but it wasn't useful because nobody knew why the prediction was what it was so they could say you know sunny you're likely to quit next month but they had no idea what to do about it and so i think there are a bunch of failure modes would that be an example of like linear regression where the regression is accurate but

you know for marketing purposes it's like if you don't know why i'm quitting the service then how can we fix this yeah this is actually a good example of a very real world kind of machine learning problem where the solution to this was to build an interpretable system on top of the accurate prediction so not to throw it away but to do a bunch more work to figure out the why how can we improve machine learning algorithms it's actually uh fairly new that we're able to solve all of these problems and start to build these

products and apply it in businesses and apply it you know everywhere and so we're still developing good practices and what it means to be a professional in machine learning we're really developing a notion of what good looks like i'm in my first year of a phd in computer science and i'm studying natural language processing and machine learning so would you mind telling me a bit about what you've been working on or interested in lately i've been looking at understanding persuasion and online text and the ways that we might be able to automatically detect the intent

behind that persuasion or who it's targeted at and what makes effective persuasive techniques so what are some of the techniques you're applying to look at that debate data something i'm interested in exploring is how well it works to use deep learning and sort of automatically extracted features from this text versus using some of the more traditional techniques that we have things like lexicons or some sort of template matching techniques for extracting features from text that's a question i'm just interested in in general when do we really need deep learning versus when can we use something

that's a little bit more interpretable something that's been around for a while do you think there are going to be general principles that guide those decisions because right now it's generally up to the machine learning engineer to decide what tools they want to apply i definitely think there is but i also sort of see it varying a lot based on the use case something that kind of works out of the box and maybe works a little bit more automatically might be better and in other cases you do sort of kind of you want a lot

of fine grain control so is that where some of that frustration around the lack of controllability and interpretability comes from yeah if you're building a model that just predicts the next thing based off of everything it's seen from text online then yeah you're really going to be replicating whatever that distribution online is if you train a model off of language off the internet it sometimes says uncomfortable things or inappropriate things and sometimes really biased things have you ever run into this yourself and then how do you think about that problem of potentially even measuring the

bias in a model that we've trained yeah it's a really tricky question as you said these models are trained to sort of predict the next sequence of words given a certain sequence of words so we could start with just sort of prompts like the woman was versus the man was and kind of pull out common words that are sort of more used with one phrase versus the other so that's sort of a qualitative way of looking at it it's not ever kind of a guarantee of how the model is going to behave in one particular

instance and i think that's what's really tricky and that's why i sort of think it's really good for creators of systems to just be honest about this is sort of what we have seen and so then someone can make their own judgment about is this going to be too high risk for sort of my particular use case i imagine in the last few years we've seen a lot of changes and improvements in the capabilities of nlp systems so is there anything in that that you're particularly excited about exploring further i'm really interested in sort of

the creative potential that we've started to see from nlp systems with things like gpt-3 and other really powerful language models it's really easy to write long grammatical passages thinking about the way that we can then harness like the human ability to actually give meaning to those words and sort of provide structure and how we can combine those things with the kind of like generative capabilities of these models now is really interesting yeah i agree [Music] so hi claudia it's so great to see you it's been far too long you know we first met 10 11

years ago and machine learning has changed a lot since then tooling that we now have the capacity and also an elevation of the problem sets that we're dealing with and how to frame the problem and i'm almost struggling to figure out whether it's a blessing or a curse that it has become as accessible and as democratized and as easy to execute and you just build another new company from scratch but so what's been kind of your reflection on that well you're absolutely right that the attention machine learning gets has grown dramatically 20 years ago going

to gatherings and telling people what i was working on and how to seeing the blank face or the like where's the turn and walk away like oh no the accessibility of the tooling like we can now do in like five lines of code something that would have taken 500 lines of very mathematical messy gnarly code even you know five years ago and it's not an exaggeration and there are tools that mean that pretty much anyone can pick this up and start playing with it and start to build with it and that is also really exciting

in contrast what i'm struggling with the friend of mine who asked me to look at some healthcare data for him and despite the capabilities that we're having in all of the kind of bigger societal problems alongside with data collection engineering all the gnarly stuff that is actually not the machine learning itself it's the rest of it where certain data isn't available and to me it's staggering how difficult it is to get it off the ground and actually use and part of the challenge of it is not the mathematics of building models but the challenge is

making sure that the data is sufficiently representative potentially high quality but how transparent do i need to build it for it to be adopted at some point what types of biases in the data collection and then also in the usage we now call it the bias but we're still struggling with the society not really living up to its expectations and then machine learning bringing it to the forefront right and so to say that another way when you're collecting data from the real world and then building machine learning systems that automate decisions based on that data

all of the biases and problems that are already in the real world then can be magnified through that machine learning system and so it can make many of these problems much worse feeling increasingly challenged that my skill set of being very good at programming has become somewhat secondary and it's really it's really the bigger picture understanding of who would be using that how transparent do i need to build it for it to be adopted at some point what types of biases in the data collection and then also in the usage i think in certain areas

we have societal expectations as to what is fair and what isn't and so it's not just the provenance of that data but it's sort of deeply understanding why does it look the way it looks why was it collected this way what are the limitations of it we need to think about that in the entire process how we document that process this is an issue in companies where somebody might create something that even their peers can't recreate what have you seen in terms of which industries where they stand like who is adopting now who is ready to

utilize it where would you maybe wish they didn't even try these are great questions so things like actuarial science operations research where they actually are not using machine learning as much as you might think and then you have other sorts of companies or on the fintech side or even the ad tech side of things where they perhaps are using machine learning um to the point of even absurdity so i spent about eight years working in ad tech and the motivation was really because it was such an amazingly exciting playground to push that technology that used to

largely live in academia really out in the world and see kind of what it can achieve it has created such a hunger for data that now everything is being collected i'm curious when are we going to make a foray into things like agriculture about smart production of the things we eat you see and hear these interesting stories but i feel like we're not ready yet to put that into an economically viable situation so when we think about the next five to ten years the things that are really still holding us back are these uneven applications

of resources to problems because the problems that get attention are the high value ones in terms of how much money you can make or the things that are fashionable enough that you can publish a paper on it so what do you think is holding us back i fully agree on the steps you pointed out and the processes i think there is a chicken and egg problem like your former example that these areas that need to wait for data the value of the data collection is then also slightly less apparent and so it gets delayed

further and you see that happening but what my experience has been yes unfortunately i feel a drifting apart between academia and the uses of ai but i'm somewhat frustrated with a generation of students who have standard data sets that they never think about what the model needs to be used for that they never have to think about how the data was collected so with all these challenges ahead of us how optimistic are you about this world that i deeply believe we can uh create and the steps towards it i am incredibly optimistic and uh perhaps

it's a personality flaw but i can't help but look at the potential of the technology to reduce harm to give us information to help us make better decisions and to think that we would choose to address the big problems ahead of us i don't think we have a hope of addressing them without figuring out the role that machine learning will play and to think that we would then choose not to do that is just unthinkable despite the rightful concerns about the challenges ahead but i think they also make us as a society better they challenge us

to be a lot clearer of what fairness means to us means to all of us so with all of the setbacks i think we have exciting years to come and i am looking forward to a world where a lot more of that is used for the right purposes [Music] i hope you learned something about machine learning there has never been a better time to study machine learning because you're now able to build products that have tremendous potential and impact across any industry or area that you might be excited about [Music]
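
Editor's sketch (not part of the video): the spam-filter discussion in the transcript describes supervised learning with hand-engineered features, such as certain keywords and whether the sender and recipient have exchanged emails before, and mentions distance as a way to decide whether two data points are similar. The Python below is a minimal illustration of that idea under stated assumptions. The keyword list, the four training emails, and the choice of a nearest-centroid classifier are all hypothetical and chosen for brevity; none of them come from the speakers.

```python
import math

# Hypothetical keyword list; an illustrative assumption, not from the video.
SPAM_KEYWORDS = {"winner", "free", "prize", "urgent"}


def extract_features(text, known_sender):
    """Feature engineering: turn an email into a small numeric vector.

    Feature 0: number of words that match the keyword list.
    Feature 1: 1.0 if the sender has exchanged mail with us before, else 0.0.
    """
    words = text.lower().split()
    keyword_hits = sum(1 for w in words if w.strip(".,!?:") in SPAM_KEYWORDS)
    return [float(keyword_hits), 1.0 if known_sender else 0.0]


def centroid(rows):
    """Average a list of equal-length feature vectors column by column."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def train(examples):
    """Supervised training: group labeled examples and average each group."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {label: centroid(rows) for label, rows in by_label.items()}


def predict(model, features):
    """Guess the label whose centroid is closest in Euclidean distance."""
    return min(model, key=lambda label: math.dist(model[label], features))


# Tiny made-up labeled data set (the "practice problems").
training_data = [
    (extract_features("You are a winner! Claim your free prize", False), "spam"),
    (extract_features("Urgent: free prize inside", False), "spam"),
    (extract_features("Lunch on Thursday?", True), "not spam"),
    (extract_features("Here are the meeting notes", True), "not spam"),
]
model = train(training_data)

# A new email the model has not seen before (the "test").
new_email = extract_features("Free prize for our lucky winner", False)
prediction = predict(model, new_email)
print(prediction)
```

A real spam filter would use far more examples and features, and likely a different model; the point here is only the shape of the workflow: a person picks the features, labeled examples train the model, and the model then guesses on unseen input.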
